** I want to thank Christian Elsen and Clair Roberts for providing me with the content for this article **

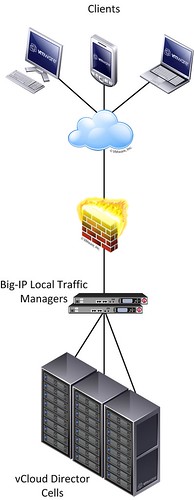

A while back Clair contacted me and asked whether I was interested in writing an article about how to set up F5’s Big IP LTM VE to front a couple of vCloud Director cells. As you know, I used to be part of the VMware Cloud Practice and was responsible for architecting vCloud environments in Europe. Although I did design an environment where F5 was used, I was never actually part of the team that implemented it, as it is usually the Network/Security team that takes on this part. Clair was responsible for setting this up for the VMworld Labs environment and couldn’t find many details about it on the internet, hence this article.

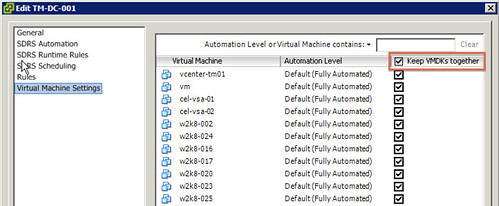

This post will therefore outline how to set up the scenario below, in which user requests are distributed across multiple vCloud Director cells.

figure 1:

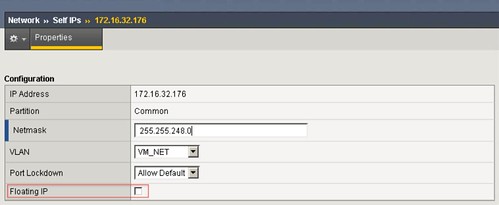

For this article we will assume that the basic setup of the F5 Big IP load balancers has already been completed. Besides the management and HA interfaces, one interface will reside on the external – end-user facing – part of the infrastructure and another interface on the internal – vCloud Director facing – part of the infrastructure.

Configuring an F5 Big IP load balancer to front a web application usually requires a common set of configuration steps:

- Creating a health monitor

- Creating a member pool to distribute requests across

- Creating the virtual server accessible by end-users

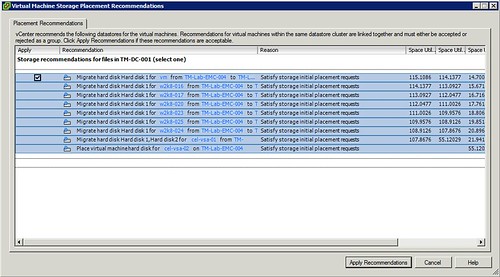

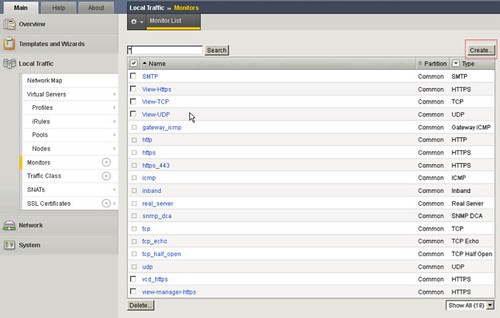

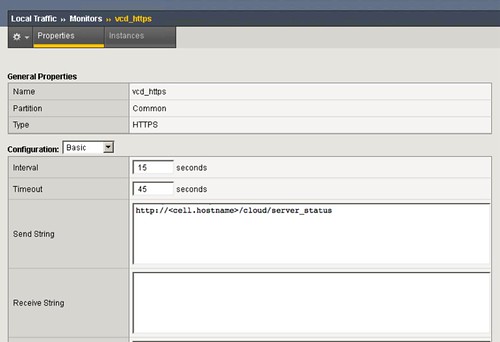

Let’s get started by configuring the health monitor. A monitor is used to check the health of the service. Go to the Local Traffic page, then go to Monitors. Add a monitor named vCD_https. The check is specific to vCD: we recommend using the string “http://<cell.hostname>/cloud/server_status” (figure 3). Everything else can be left at its default.
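To sanity-check the monitor string outside the F5 interface, the same request can be issued by hand. The following is a minimal Python sketch and not part of the original setup: the function names are hypothetical, and the expected response text `Service is up.` is an assumption that you should verify against your own cells.

```python
import urllib.error
import urllib.request


def status_url(cell_hostname: str, scheme: str = "http") -> str:
    """Build the status URL that the monitor queries on a vCD cell."""
    return f"{scheme}://{cell_hostname}/cloud/server_status"


def is_healthy(status_code: int, body: str, expected: str = "Service is up.") -> bool:
    """Treat a cell as healthy on HTTP 200 with the expected text in the body.

    The expected text is an assumption; check what your cells actually return.
    """
    return status_code == 200 and expected in body


def probe(cell_hostname: str, scheme: str = "http", timeout: float = 5.0) -> bool:
    """Query one cell and report whether it looks healthy."""
    url = status_url(cell_hostname, scheme)
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            body = resp.read().decode("utf-8", errors="replace")
            return is_healthy(resp.status, body)
    except (urllib.error.URLError, OSError):
        # Unreachable, timed out, or an HTTP error status: treat as unhealthy.
        return False
```

Calling `probe("cell1.example.com")` (a placeholder hostname) returns `True` only when the cell answers with the expected text, which is roughly the condition the monitor evaluates.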

figure 2:

figure 3:

figure 4:

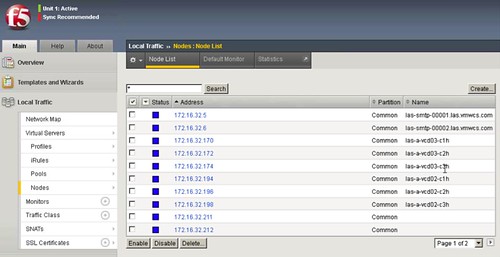

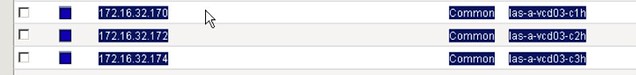

Next you will need to define the vCloud Director cells as nodes of a member pool. The F5 Big IP will then distribute the load across these member pool nodes. We suggest using three vCloud Director cells as a minimum. Go to Nodes and check your node list, depicted in figure 5. You can create a node by clicking “Create” and entering the IP address and the name of the vCD cell. When you are done, you should have three nodes defined, as shown in figures 5 and 6.

figure 5:

figure 6:

figure 7:

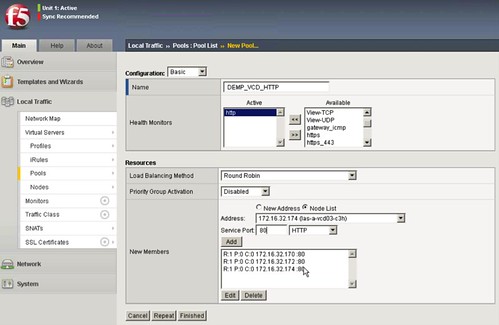

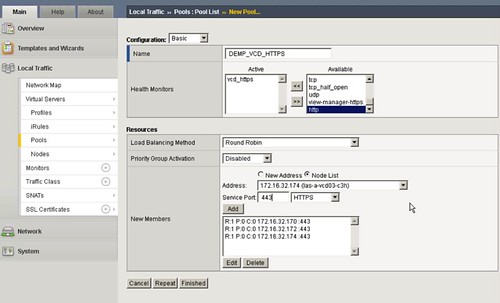

Now that you have defined the cells you will need to pool them (Pools menu). If vCloud Director needs to respond to both http and https (figures 8 and 9) you will need to configure two pools, each with all three cells added as members. We are going with the basic settings for most options. Don’t forget to assign the health monitor to each pool.
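The pool layout described above can be written down as a small data model, which is handy for reviewing the intended configuration before clicking through the interface. This is only an illustrative Python sketch: the pool names, the cell names and addresses in the usage note, and the ports 80 and 443 are assumptions, and nothing here talks to the F5 appliance.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Member:
    name: str      # name of the vCD cell
    address: str   # IP address of the cell
    port: int      # service port on the cell


@dataclass
class Pool:
    name: str
    monitor: str
    members: list = field(default_factory=list)


def build_pools(cells: dict, monitor: str = "vCD_https") -> dict:
    """Return one pool per protocol, each containing every cell."""
    ports = {"http": 80, "https": 443}  # assumed default ports
    pools = {}
    for protocol, port in ports.items():
        members = [Member(name, address, port) for name, address in cells.items()]
        pools[protocol] = Pool(f"vcd_{protocol}_pool", monitor, members)
    return pools


def validate(pool: Pool, minimum_members: int = 3) -> list:
    """List the problems found in a pool definition (empty list means OK)."""
    problems = []
    if len(pool.members) < minimum_members:
        problems.append(f"{pool.name}: fewer than {minimum_members} members")
    if not pool.monitor:
        problems.append(f"{pool.name}: no health monitor assigned")
    return problems
```

For example, `build_pools({"vcd-cell-1": "10.0.0.11", "vcd-cell-2": "10.0.0.12", "vcd-cell-3": "10.0.0.13"})` yields an http pool and an https pool with three members each, and `validate` flags a pool that is missing cells or has no monitor.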

figure 8:

figure 9:

Now validate that the health monitor has been able to communicate successfully with the vCD cells: you should see a green dot! The green dot means that the appliance can talk to the cells and that the health check query is returning the expected result.
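To make the role of the green dot concrete, here is a rough Python sketch of how a load balancer might rotate requests across pool members while skipping any member whose health check fails. Round robin is assumed purely for illustration; the article does not say which load balancing method was configured, and the member names are placeholders.

```python
from itertools import cycle


def round_robin(members, is_up):
    """Yield members in rotation, skipping any that are currently unhealthy.

    `is_up` is a callable that returns True when a member passes its check.
    The generator stops when a full pass finds no healthy member.
    """
    ring = cycle(members)
    while True:
        for _ in range(len(members)):
            candidate = next(ring)
            if is_up(candidate):
                yield candidate
                break
        else:
            return  # every member failed its check in this pass
```

With the status `{"cell-1": True, "cell-2": False, "cell-3": True}` and `is_up=status.get`, successive picks alternate between `cell-1` and `cell-3` until `cell-2` passes its check again.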

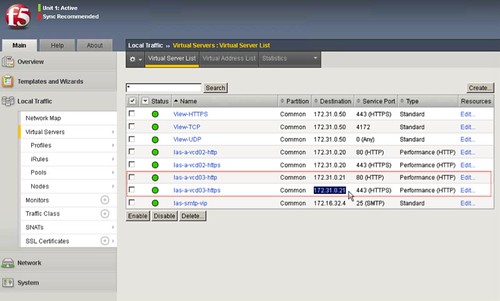

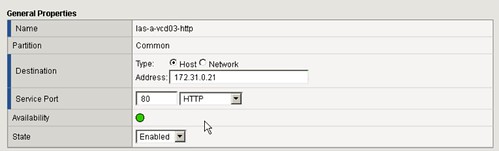

Next you will need to create a Virtual IP (VIP) per server. In this case the two “virtual servers” (as the F5 appliance names them, figure 10) will have the same IP address but different ports: one for http and one for https. These can be created by clicking “Create” and then defining the IP address which will be used to access the cells (figure 11).
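The relationship between the shared IP address, the two ports, and the two pools can be sketched as follows. This is an illustrative Python model only: the virtual server names, the pool names, and the ports 80 and 443 are assumptions, and the address in the usage note comes from a documentation range.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VirtualServer:
    name: str
    address: str  # the VIP that end users connect to
    port: int
    pool: str     # name of the pool that receives the traffic


def build_virtual_servers(vip: str) -> list:
    """Two virtual servers on the same VIP, differing only by port and pool."""
    return [
        VirtualServer("vcd_http_vs", vip, 80, "vcd_http_pool"),
        VirtualServer("vcd_https_vs", vip, 443, "vcd_https_pool"),
    ]


def pool_for(virtual_servers, address: str, port: int):
    """Return the pool name for an incoming connection, or None if no match."""
    for vs in virtual_servers:
        if (vs.address, vs.port) == (address, port):
            return vs.pool
    return None
```

For example, with `servers = build_virtual_servers("192.0.2.10")`, a connection to port 443 of that address maps to the https pool, while a connection to a port without a virtual server matches nothing.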

figure 10:

figure 11:

Repeat the above steps for the console proxy IP address of your vCD setup.

Finally, you will need to specify these newly created VIPs in the vCD environment.

See Hany’s post on how to do the vCloud Director part of it; it is fairly straightforward. (I’ll give you a hint: Administration –> System Settings –> Public Addresses)