** I want to thank Christian Elsen and Clair Roberts for providing me with the content for this article **

A while back Clair contacted me and asked whether I was interested in getting the info to write an article about how to set up F5’s Big IP LTM VE to front a couple of vCloud Director cells. As you know, I used to be part of the VMware Cloud Practice and was responsible for architecting vCloud environments in Europe. Although I did design an environment where F5 was used, I was never actually part of the team that implemented it, as it is usually the Network/Security team that takes on this part. Clair was responsible for setting this up for the VMworld Labs environment and couldn’t find many details about this on the internet, hence this article.

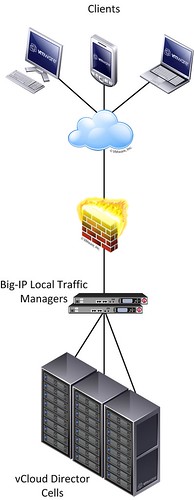

This post will therefore outline how to set up the scenario below, distributing user requests across multiple vCloud Director cells.

figure 1:

For this article we will assume that the basic setup of the F5 Big IP load balancers has already been completed. Besides the management and HA interfaces, one interface will reside on the external – end-user facing – part of the infrastructure and another interface on the internal – vCloud Director facing – part of the infrastructure.

Configuring an F5 Big IP load balancer to front a web application usually requires a common set of configuration steps:

- Creating a health monitor

- Creating a member pool to distribute requests among

- Creating the virtual server accessible by end-users

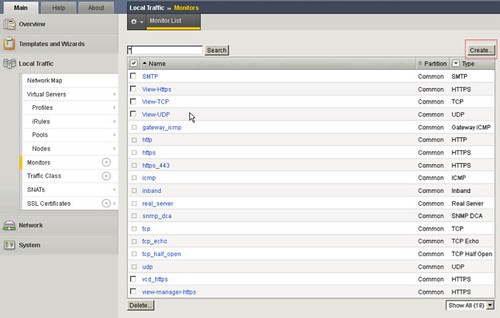

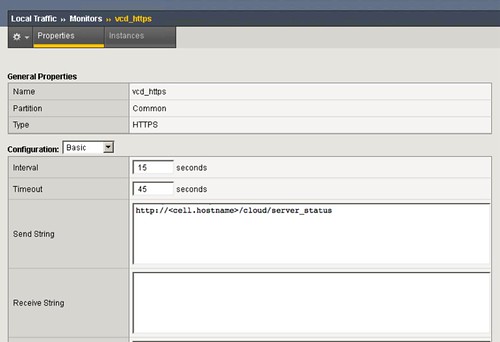

Let’s get started by configuring the health monitor. A monitor is used to “monitor” the health of the service. Go to the Local Traffic page, then go to Monitors. Add a monitor for vCD_https. This is unique to vCD; we recommend using the following string: “http://<cell.hostname>/cloud/server_status” (figure 3). Everything else can be left at the default.
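Before configuring the monitor on the appliance, it can help to confirm from another machine that each cell actually answers on this URL. The following is only a minimal sketch, assuming the cell is reachable over plain HTTP as in the string above. The function names are hypothetical, and treating an HTTP 200 as "up" is my assumption rather than a description of what the F5 monitor does internally.

```python
from urllib.request import urlopen


def status_url(cell_hostname: str) -> str:
    """Build the status URL from the article for one vCD cell."""
    return f"http://{cell_hostname}/cloud/server_status"


def default_fetch(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status code for url (performs a real request)."""
    with urlopen(url, timeout=timeout) as response:
        return response.status


def cell_is_up(cell_hostname: str, fetch=default_fetch) -> bool:
    """Assumption: a cell counts as healthy when the status URL answers 200."""
    try:
        return fetch(status_url(cell_hostname)) == 200
    except OSError:
        # Connection refused, timeout, DNS failure or HTTP error: treat as down.
        return False
```

Calling `cell_is_up("vcd-cell1.example.local")` (a made-up hostname) would perform the real request; the `fetch` parameter exists only so the logic can be exercised without a network.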

figure 2:

figure 3:

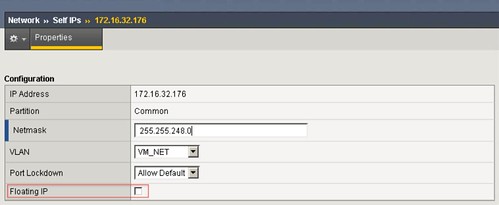

figure 4:

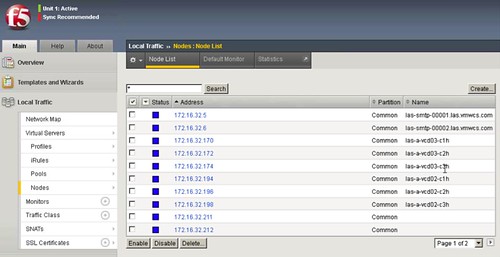

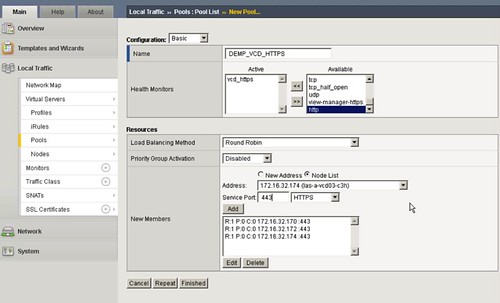

Next you will need to define the vCloud Director cells as nodes of a member pool. The F5 Big IP will then distribute the load across these member pool nodes. For each node you will need to enter the IP address, a name and the other details. We suggest using three vCloud Director cells as a minimum. Go to Nodes and check your node list; you should have three nodes defined, as shown in figures 5 and 6. You can create these by simply clicking “Create” and defining the IP address and the name of the vCD cell.

figure 5:

figure 6:

figure 7:

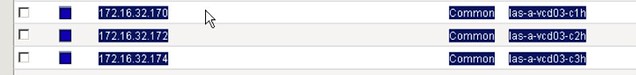

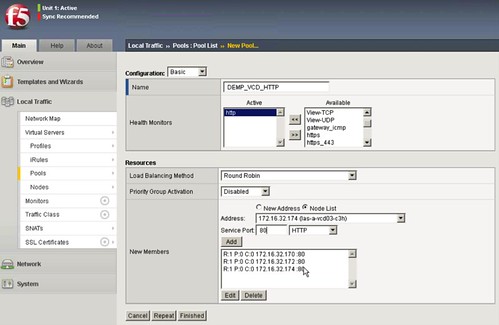

Now that you have defined the cells you will need to pool them. If vCloud Director needs to respond to both http and https (figures 8 and 9) you will need to configure two pools, each containing the three cells. We are going with mostly basic settings (Pools menu). Don’t forget to assign the health monitors.
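To make the idea of two pools over the same three cells a bit more concrete, here is a small model of it. This is a sketch under stated assumptions, not the appliance's implementation: the cell names, the addresses (from the 192.0.2.0/24 documentation range), the pool names and ports 80/443 are all hypothetical, and round robin is assumed because the article does not say which load balancing method is selected.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Member:
    name: str
    address: str
    port: int
    healthy: bool = True  # on the appliance this would be set by the health monitor


@dataclass
class Pool:
    name: str
    members: list
    _counter: count = field(default_factory=count, repr=False)

    def pick(self) -> Member:
        """Return the next healthy member in round-robin order."""
        healthy = [m for m in self.members if m.healthy]
        if not healthy:
            raise RuntimeError(f"no healthy members in pool {self.name}")
        return healthy[next(self._counter) % len(healthy)]


def make_pool(name: str, port: int) -> Pool:
    # Hypothetical cell names and addresses, for illustration only.
    cells = [
        ("vcd-cell1", "192.0.2.11"),
        ("vcd-cell2", "192.0.2.12"),
        ("vcd-cell3", "192.0.2.13"),
    ]
    return Pool(name, [Member(n, a, port) for n, a in cells])


# One pool per protocol, each containing the same three cells.
http_pool = make_pool("vcd_http", 80)
https_pool = make_pool("vcd_https", 443)
```

Each call to `https_pool.pick()` returns the next cell in turn, and a member whose `healthy` flag is cleared is skipped, which is the behaviour the health monitor is there to provide.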

figure 8:

figure 9:

Now validate that the health monitor has been able to communicate successfully with the vCD cells: you should see a green dot! The green dot means that the appliance can talk to the cells and that the health monitor is getting results for its query.

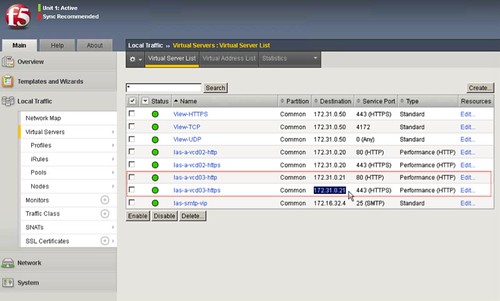

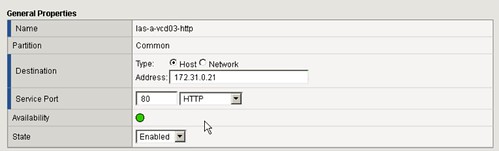

Next you will need to create a Virtual IP (VIP) per server. In this case two “virtual servers” (as the F5 appliance names them, figure 10) will have the same IP but different ports: http and https. These can be created by simply clicking “Create” and then defining the IP address that will be used to access the cells (figure 11).
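The relationship between one address, two ports and two pools can be sketched as a simple lookup. Everything named here is hypothetical: the VIP comes from the 198.51.100.0/24 documentation range, the virtual server and pool names are made up, and ports 80 and 443 are assumed for http and https.

```python
from typing import NamedTuple, Optional


class VirtualServer(NamedTuple):
    name: str
    address: str
    port: int
    pool: str


# Hypothetical VIP and names; both virtual servers share one address.
VIP = "198.51.100.10"
VIRTUAL_SERVERS = [
    VirtualServer("vcd_vs_http", VIP, 80, "vcd_http"),
    VirtualServer("vcd_vs_https", VIP, 443, "vcd_https"),
]


def pool_for(address: str, port: int) -> Optional[str]:
    """Return the pool behind the virtual server listening on address:port."""
    for vs in VIRTUAL_SERVERS:
        if (vs.address, vs.port) == (address, port):
            return vs.pool
    return None  # nothing is listening on that address/port combination
```

The point of the model is that the address alone does not identify a virtual server; the address and port together do, which is why two virtual servers can share one IP.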

figure 10:

figure 11:

Repeat the above steps for the Consoleproxy IP address of your vCD setup.

Finally, you will need to specify these newly created VIPs in the vCD environment.

See Hany’s post on how to do the vCloud Director part of it… it is fairly straightforward. (I’ll give you a hint: Administration –> System Settings –> Public Addresses)

Great Article!

Do you think there will be a follow-up on WAN & MAN VCD deployments? Right now we know that it’s best to silo each VCD into its own separate cloud for each location when on a WAN link where the delay is greater than 20ms.

We are looking for a good solution for a multi-site VCD deployment that will involve load balancers and SRM for failover. But we have found that the architecture behind VCD is still not mature enough to go beyond a MAN architecture for a highly available multi-VCD WAN solution.

The insights from PEX 2012 have not given us much hope of getting this accomplished in the near future.

Any insight from your expertise would be much appreciated.

There are multiple people within VMware working on multi-site VCD architecture design considerations. I will see how / where / if I can help by providing information through my blog.

Hi Duncan,

for Console proxy, actually the probed link should be https:///sdk/vimServiceVersions.xml

The only problem is that in my customer’s environment the F5 sees the probe as down with this query.

Any ideas?

Thanks,

Andrea.

Is there any session persistence, i.e. is the mapping of client1 to cell1 maintained, or is each new request from the same client passed to any cell?

If there is session persistence, do you know how BigIP is doing it (in the old days cookies were used, or a state table map of source ip:port to target ip:port)?

Handy post!

You can configure session persistence in a number of ways on the F5s. Cookie, source, destination, and SSL are the most common. F5 has some other ones, but they may require writing some iRules.
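As a rough illustration of what source-based persistence means, the sketch below keeps a table from client IP to cell, so the same client keeps landing on the same cell. It is not how BIG-IP implements it (real persistence records also time out, for example); the class name and the round-robin choice for new clients are assumptions made for the example.

```python
class SourcePersistence:
    """Keep each client IP on the cell it was first sent to."""

    def __init__(self, cells):
        self.cells = list(cells)
        self.table = {}  # client IP -> cell name
        self._next = 0

    def route(self, client_ip: str) -> str:
        if client_ip not in self.table:
            # New client: assign the next cell in round-robin order.
            self.table[client_ip] = self.cells[self._next % len(self.cells)]
            self._next += 1
        # Known client: always return the cell recorded for it.
        return self.table[client_ip]
```

With three cells, the first two distinct clients are sent to the first two cells, and a repeat request from the first client goes back to the first cell rather than the third.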

I’ve a similar production deployment and encounter some production

There are still some issues to be ironed out with regards to the use of F5 and vCloud Director.

1) An http profile cannot be used and we have to use a generic tcp profile instead. The use of a generic tcp profile does not allow us to implement iRules to restrict access to system vdc resources or to the vCloud Director API.

2) In addition, SSL termination cannot be done properly on the F5 load balancer either, since https needs to run on VCD and VMRC.

If a client and server SSL profile is applied to the virtual IP, remote console will not work and performance is really slow.

I was just setting this up for the http/s service and would recommend the following changes to the monitor config —

Send String: GET /cloud/server_status \r\n\r\n

Receive String: Service is up\.

This send string doesn’t require specifying a host, and without a receive string, any tcp response is interpreted as ‘up’.

For the console proxy service, this monitor configuration works —

Send String: GET /sdk/vimServiceVersions.xml HTTP/1.1\r\nHost: host.domain.com\r\nConnection: Close\r\n\r\n

Receive String: vim25

In the Send String, the host used in the Host: tag is not important. The Receive String is interpreted as a matching regex, so any text in a valid response will match. I’m not sure what a ‘good’ string would be, since I’m not sure what else the service might return.
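To show how the send string and the receive string fit together, here is a small sketch that builds the request text and applies the receive string as a regex search, as described above. The function names are hypothetical, and the response bodies used at the bottom are made up for illustration; I have not captured real responses from a cell.

```python
import re


def build_send_string(path: str, host: str) -> str:
    """HTTP/1.1 request in the shape of the console proxy send string above."""
    return f"GET {path} HTTP/1.1\r\nHost: {host}\r\nConnection: Close\r\n\r\n"


def is_up(response_text: str, receive_pattern: str) -> bool:
    """Mark the member up only if the pattern is found somewhere in the response."""
    return re.search(receive_pattern, response_text) is not None


# Hypothetical response bodies, made up for illustration.
print(is_up("Service is up.", r"Service is up\."))
print(is_up("503 Service Unavailable", r"Service is up\."))
```

Because the match is a search rather than a full match, a short pattern such as `vim25` is enough as long as it appears anywhere in a valid response and not in an error page.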

To load-balance the consoleproxy it is also incredibly important to get the certs right, and the process is not well documented. Chris Colotti has a pretty good post on the requirements, and I left a couple of comments to clarify one point, since it seems the consoleproxy on every cell actually needs to use the exact same cert and private key.

http://www.chriscolotti.us/vmware/how-to-configure-vcloud-director-load-balancing/

The pictures on this post are not working, could you please re-post them?