I was just playing around in my lab and had created a whole bunch of VMs when I needed to deploy two large virtual machines. Both of them had 500GB disks. The first one deployed without a hassle, but the second one was impossible to deploy... well, not impossible for Storage DRS. Just imagine having to figure this out yourself! Frank wrote a great article about the logic behind this and there is no reason for me to repeat it; just head over to Frank’s blog if you want to know more.

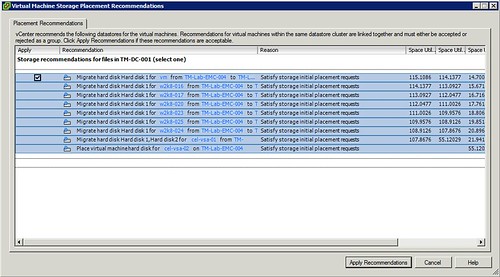

And the actual migrations being spawned:

Yes, this is the true value of Storage DRS… initial placement recommendations!
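To make the idea behind that recommendation concrete, here is a deliberately simplified Python sketch. It is not the algorithm Storage DRS uses (Frank's article covers that); `Datastore`, `place` and the greedy "move one VM" search are hypothetical. It only shows the core trick: when no single datastore has room for the new VM, first move an existing VM somewhere else, then place the new one.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Datastore:
    """Hypothetical datastore model: capacity plus the VMs already on it."""
    name: str
    capacity_gb: int
    vms: dict[str, int] = field(default_factory=dict)  # VM name -> size in GB

    @property
    def free_gb(self) -> int:
        return self.capacity_gb - sum(self.vms.values())


def place(datastores: list[Datastore], vm_size_gb: int):
    """Return (target datastore name, list of (vm, source, destination) moves).

    Greedy illustration only, not the real Storage DRS logic.
    """
    # 1. Direct placement: choose the datastore with the most free space that fits.
    fits = [ds for ds in datastores if ds.free_gb >= vm_size_gb]
    if fits:
        return max(fits, key=lambda ds: ds.free_gb).name, []

    # 2. Prerequisite move: try to free enough room by migrating one existing VM
    #    (smallest first) to another datastore that can hold it.
    for target in datastores:
        for vm, size in sorted(target.vms.items(), key=lambda kv: kv[1]):
            if target.free_gb + size < vm_size_gb:
                continue  # moving this VM would not free enough space
            for dst in datastores:
                if dst is not target and dst.free_gb >= size:
                    return target.name, [(vm, target.name, dst.name)]

    return None, []  # no placement found with a single move


# Neither datastore has 500 GB free, but moving web01 makes room on ds1.
ds1 = Datastore("ds1", 1000, {"web01": 200, "db01": 400})  # 400 GB free
ds2 = Datastore("ds2", 1000, {"app01": 550})                # 450 GB free
print(place([ds1, ds2], 500))  # ('ds1', [('web01', 'ds1', 'ds2')])
```

A real implementation would weigh many candidate moves, I/O load and migration cost; the sketch stops at the first single move that works.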

Very nice. I was thinking that in an SRM scenario, Storage DRS may need to be turned off, since it could affect replication and the correct placement of the VMs on the datastores; if it is left on, it may need a bit more monitoring. The initial placement would not be an issue, but Profile-Driven Storage and Storage DRS may lead to problems.

http://www.yellow-bricks.com/2011/09/07/does-srm-support-storage-drs-yes-it-does/

Wondering how this would work in conjunction with that Nutanix solution you’ve spoken about…

I thought Storage DRS would be great for initial placement too, but when you create a VM, it wants to put all of the VM’s .vmdk files in the same datastore. That just doesn’t seem right to me. I would expect it to place the next .vmdk on the datastore with the most free space; instead, it just clumps them all together. Did you see this behaviour as well? If I remember correctly, to move one .vmdk of a multi-.vmdk VM to a different datastore, you had to disable Storage DRS for that VM. Doesn’t seem right to me… and creating an anti-affinity rule for each VM is out of the question.

@RobM can’t you just disable the intra-VM affinity rule? No need to fuss with anti-affinity.

Irfan

@RobM: You just need to change the default affinity rule. It is difficult to find in the UI. Let me dig up the screenshot for you and post it in a blog article.
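The difference RobM describes, keeping all of a VM's disks together (the default intra-VM affinity) versus spreading each disk to the datastore with the most free space, can be sketched as two placement policies. `place_disks` and its "most free space" rule are hypothetical illustrations; Storage DRS scores datastores on more than free space alone.

```python
from __future__ import annotations


def place_disks(free_gb: dict[str, int], disks_gb: list[int],
                keep_together: bool = True) -> list[str]:
    """Return a datastore name for each disk, in the order given (hypothetical helper)."""
    free = dict(free_gb)  # work on a copy so the caller's dict is untouched

    if keep_together:
        # Intra-VM affinity: every disk must land on one datastore.
        total = sum(disks_gb)
        candidates = [ds for ds, gb in free.items() if gb >= total]
        if not candidates:
            raise ValueError("no single datastore can hold all disks")
        ds = max(candidates, key=free.get)
        return [ds] * len(disks_gb)

    # Affinity disabled: place each disk independently on the emptiest datastore.
    placement = []
    for size in disks_gb:
        ds = max(free, key=free.get)
        if free[ds] < size:
            raise ValueError(f"no datastore can hold a {size} GB disk")
        free[ds] -= size
        placement.append(ds)
    return placement


stores = {"ds1": 800, "ds2": 600}
print(place_disks(stores, [300, 200, 200]))                       # ['ds1', 'ds1', 'ds1']
print(place_disks(stores, [300, 200, 200], keep_together=False))  # ['ds1', 'ds2', 'ds1']
```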

Cool? Yes. However, Storage DRS is not for everyone, particularly when it comes to thin-provisioned VMDK files on NFS and VMFS datastores. We use NFS, thin-provisioned disks and dedupe. From NetApp document TR-3749, dated 12/2011:

“Thin-provisioned LUNs do not get blocks allocated until written to. When a file is deleted in a file system inside a LUN, including VMFS, blocks are not actually zeroed or freed in a meaningful way to the underlying storage. Therefore, once a block in a LUN is written, it stays owned by the LUN even if “freed” at a higher layer. If the goal of SDRS migration was to free space in the aggregate containing a thin-provisioned VMFS datastore, that goal might not be achieved.”

If anyone has any ideas on how to make this work, I’m all ears.
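The behaviour in the TR-3749 quote can be shown with a toy model. `ThinLun` and `FileSystem` are hypothetical classes, not a NetApp or VMware API: a write allocates a block on the array, deleting a file only updates file system metadata, and the blocks are released only by an explicit reclaim, represented here by `unmap`.

```python
from __future__ import annotations


class ThinLun:
    """Toy thin-provisioned LUN as seen from the array (hypothetical)."""

    def __init__(self) -> None:
        self.allocated: set[int] = set()  # block addresses backed by physical disk

    def write(self, lba: int) -> None:
        self.allocated.add(lba)  # the first write to a block allocates it

    def unmap(self, lba: int) -> None:
        self.allocated.discard(lba)  # only an explicit reclaim releases a block


class FileSystem:
    """Toy file system (think VMFS) living inside the LUN (hypothetical)."""

    def __init__(self, lun: ThinLun) -> None:
        self.lun = lun
        self.files: dict[str, list[int]] = {}
        self.next_lba = 0

    def create(self, name: str, nblocks: int) -> None:
        lbas = list(range(self.next_lba, self.next_lba + nblocks))
        self.next_lba += nblocks
        for lba in lbas:
            self.lun.write(lba)
        self.files[name] = lbas

    def delete(self, name: str) -> list[int]:
        # Only file system metadata changes; the array is not told anything.
        return self.files.pop(name)


lun = ThinLun()
fs = FileSystem(lun)
fs.create("vm1.vmdk", 100)
fs.create("vm2.vmdk", 50)
freed = fs.delete("vm1.vmdk")    # e.g. the VMDK was moved away by Storage DRS
print(len(lun.allocated))        # 150: the array still holds vm1's blocks
for lba in freed:
    lun.unmap(lba)               # explicit reclaim step
print(len(lun.allocated))        # 50
```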

You can use “vmkfstools” to reclaim those blocks again.

Unfortunately, we’re working with NFS datastores, so vmkfstools is not an option. According to http://kb.vmware.com/kb/2014849, vmkfstools reclaims deleted space only; it cannot reclaim blocks that are not used by the guest OS.

I suggest you contact the appropriate people inside VMware, Ken, to find out when this will be resolved. You know your way around.

Ken,

Assuming you’re utilizing snapshots, with NetApp you’re stuck with those blocks until your snapshots expire. It’s not a VMware issue; it’s the way NetApp snapshots work.

If you’ve had these VMs running for over six months, you may get noticeable space back by zeroing out your free space and running dedupe. How much depends on how much ‘junk’ your guests leave in their white space after deleting or overwriting data.
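Why zeroing helps: stale data left in freed guest blocks is mostly unique, so dedupe cannot collapse it, but once those blocks are zeroed they are all identical and dedupe down to a single stored block. The sketch below assumes 4 KB blocks and uses a SHA-256 fingerprint as a stand-in for how a dedupe engine spots duplicates; `make_block` and `stored_blocks` are hypothetical helpers, and, as noted above, blocks still referenced by snapshots would not be freed either way.

```python
import hashlib

BLOCK_SIZE = 4096  # assumed dedupe block size in bytes


def make_block(tag: int) -> bytes:
    """A deterministic, non-zero block whose content depends on tag."""
    return (tag + 1).to_bytes(4, "big") * (BLOCK_SIZE // 4)


def stored_blocks(blocks: list) -> int:
    """Physical blocks needed after dedupe: identical blocks are stored once."""
    return len({hashlib.sha256(b).digest() for b in blocks})


live = [make_block(i) for i in range(40)]          # data the guest still uses
junk = [make_block(1000 + i) for i in range(60)]   # leftovers from deleted files
zeroed = [bytes(BLOCK_SIZE)] * 60                  # same region after zeroing free space

before = stored_blocks(live + junk)
after = stored_blocks(live + zeroed)
print(before, after)  # 100 41
```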