I was playing around with a vSAN 6.6 environment yesterday and figured I would record a quick demo of some of the newly introduced functionality. It took me a bit longer than expected, but here it is. I hope you will find it useful. A 1080p version can be viewed on YouTube.

stretched cluster

vSAN needs 3 fault domains

I have been having discussions with various customers about all sorts of highly available vSAN environments. Now that vSAN has been available for a couple of years, customers are becoming more and more comfortable designing these infrastructures, which also leads to some interesting discussions. Many discussions these days are on the subject of multi-room or multi-site infrastructures. A lot of customers seem to have multiple datacenter rooms in the same building, or multiple datacenter rooms across a campus. When going through these different designs one thing stands out: in many cases customers have a dual datacenter configuration, and the question is whether they can use stretched clustering across two rooms or whether they can do fault domains across two rooms.

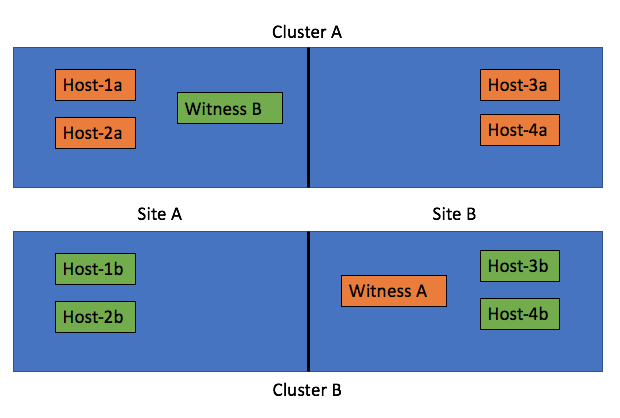

Of course, theoretically this is possible (not supported, but you can do it). Just look at the diagram below. We have 2 clusters across 2 rooms, and we protect each witness by cross-hosting it on the other vSAN cluster:

The challenge with these types of configurations is what happens when a datacenter room goes down. What a lot of people tend to forget is that the impact will vary depending on what fails. In the scenario above, where you cross-host a witness, the failure of “Site A”, which is the left part of the diagram, results in the full environment being unavailable. Really? Yeah really:

- Site A is down

- Hosts-1a / 2a / 1b / 2b are unavailable

- Witness B for Cluster B is down, and as such Cluster B is down because the majority is lost

- As Cluster B is down (temporarily), Cluster A is also impacted as Witness A is hosted on Cluster B

- So we now have a circular dependency
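The chain of failures above can be walked through in a small simulation. This is only a sketch under simplified assumptions: each cluster is modelled as three equal votes (two data sites plus a witness), and a cross-hosted witness only counts when both its site and the cluster hosting it are up. The names `StretchedCluster` and `surviving_clusters`, and the third location "Site C", are made up for illustration and are not part of any vSAN tooling.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class StretchedCluster:
    name: str
    data_sites: Tuple[str, str]              # the two sites holding data hosts
    witness_site: str                        # where the witness VM physically runs
    witness_hosted_on: Optional[str] = None  # cluster hosting the witness VM, if any


def surviving_clusters(clusters, failed_site):
    """Return the names of clusters that still hold at least 2 of 3 votes."""
    up = {c.name for c in clusters}
    changed = True
    while changed:  # repeat until no further cluster drops out
        changed = False
        for c in clusters:
            if c.name not in up:
                continue
            data_votes = sum(1 for site in c.data_sites if site != failed_site)
            witness_ok = c.witness_site != failed_site and (
                c.witness_hosted_on is None or c.witness_hosted_on in up
            )
            if data_votes + int(witness_ok) < 2:
                up.discard(c.name)
                changed = True
    return up


# Cross-hosted witnesses, as in the scenario above (Witness B lives in Site A).
CROSS_HOSTED = [
    StretchedCluster("Cluster A", ("Site A", "Site B"), "Site B", "Cluster B"),
    StretchedCluster("Cluster B", ("Site A", "Site B"), "Site A", "Cluster A"),
]

# Hypothetical alternative: both witnesses in an independent third location.
THIRD_SITE = [
    StretchedCluster("Cluster A", ("Site A", "Site B"), "Site C"),
    StretchedCluster("Cluster B", ("Site A", "Site B"), "Site C"),
]

print(surviving_clusters(CROSS_HOSTED, "Site A"))        # set()
print(sorted(surviving_clusters(THIRD_SITE, "Site A")))  # ['Cluster A', 'Cluster B']
```

In this model the cross-hosted layout loses both clusters no matter which of the two sites fails, while the layout with an independent witness location keeps both clusters available.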

Some may say: well, you can move Witness B to the same side as Witness A, meaning Site B. But now if Site B fails, the witness VMs are gone as well, which impacts all clusters directly. That would only work if only Site A is ever expected to go down, and who can give that guarantee? Of course the same applies to using “fault domains”, just look at the diagram below:

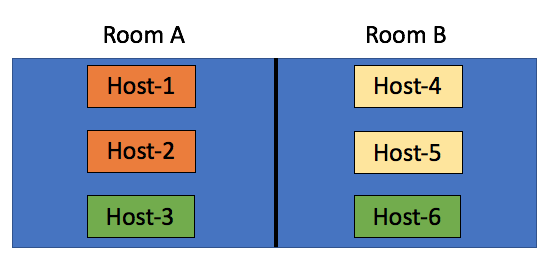

In this scenario we have the “orange fault domain” in Room A, “yellow” in Room B and “green” across rooms, as there is no other option at that point. If Room A fails, VMs that have components in “orange” and on “Host-3” will be impacted directly: as more than 50% of their components will be lost, the VMs cannot be restarted in Room B. Only when their components in “fault domain green” happen to be on “Host-6” can the VMs be restarted. Yes, in terms of setting up your fault domains this is possible and it is supported, but it isn’t recommended. No guarantees can be given that your VMs will be restarted when either of the rooms fails. My tip of the day: when you start working on your design, overlay the virtual world with the physical world and run through failure scenarios step by step. What happens if Host 1 fails? What happens if Site 1 fails? What happens if Room A fails?
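Running through the room failures for this layout can be sketched in a few lines. The assumptions here are mine: each object has three components with one vote each (one per fault domain), the object stays available only while more than 50% of its components are reachable, and the host names other than Host-3 and Host-6 are hypothetical placeholders. Real vSAN vote assignment can be more nuanced than this.

```python
# Host-to-room mapping; Host-3 and Host-6 form the "green" fault domain.
HOST_ROOM = {
    "Host-1": "Room A", "Host-2": "Room A", "Host-3": "Room A",
    "Host-4": "Room B", "Host-5": "Room B", "Host-6": "Room B",
}


def survives_room_failure(component_hosts, failed_room):
    """True if more than 50% of the components sit outside the failed room."""
    alive = sum(1 for host in component_hosts if HOST_ROOM[host] != failed_room)
    return alive * 2 > len(component_hosts)


# One component in orange (Host-1), one in yellow (Host-4), one in green.
for green_host in ("Host-3", "Host-6"):
    placement = ["Host-1", "Host-4", green_host]
    outcome = {room: survives_room_failure(placement, room)
               for room in ("Room A", "Room B")}
    print(green_host, outcome)
# Host-3 {'Room A': False, 'Room B': True}
# Host-6 {'Room A': True, 'Room B': False}
```

Whichever host in “green” holds the third component, one of the two room failures takes the object down, which is why no restart guarantee can be given for this design.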

So far I have been talking about fault domains and stretched clusters. These are all logical / virtual constructs which are not necessarily tied to physical constructs. In reality, however, when you design for availability/failure and try to prevent any type of failure from impacting your environment, the physical aspect should be considered at all times. Fault domains are not random logical constructs. There is a requirement for 3 fault domains at a minimum, so make sure you have 3 fault domains physically as well. Just to be clear, in a stretched cluster the witness acts as the 3rd fault domain. If you do not have 3 physical locations (or rooms), look for alternatives! One of those, for instance, could be vCloud Air: you can host your stretched cluster witness there if needed!

Two host stretched vSAN cluster with Standard license?

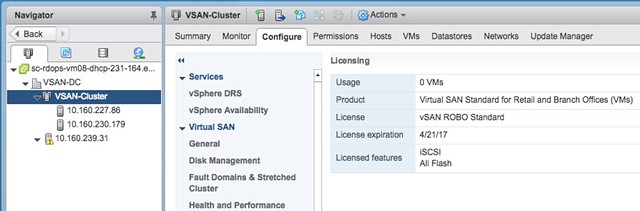

I was asked today if it was possible to create a 2 host stretched cluster using a vSAN Standard license or a ROBO Standard license. First of all, from a licensing point of view the EULA states you are allowed to do this with a Standard license:

A Cluster containing exactly two Servers, commonly referred to as a 2-node Cluster, can be deployed as a Stretched Cluster. Clusters with three or more Servers are not allowed to be deployed as a Stretched Cluster, and the use of the Software in these Clusters is limited to using only a physical Server or a group of physical Servers as Fault Domains.

I figured I would give it a go in my lab. Exec summary: worked like a charm!

Loaded up the ROBO license:

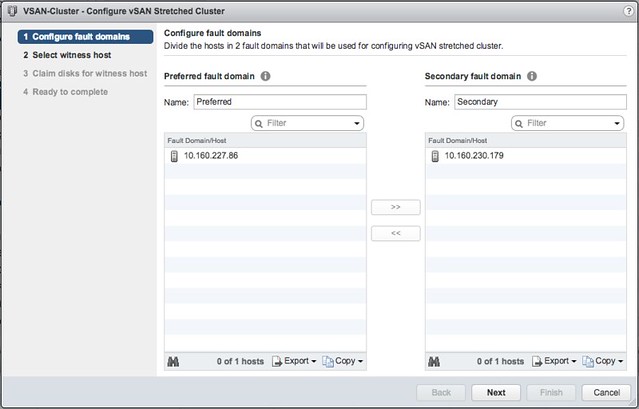

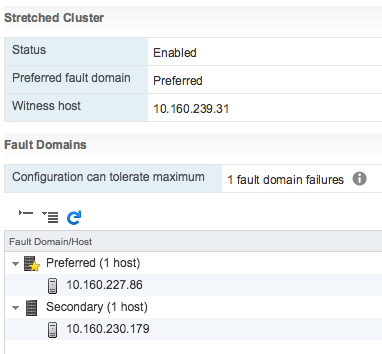

Go to the Fault Domains & Stretched Cluster section under “Virtual SAN” and click Configure. Add one host to the “preferred” and one to the “secondary” fault domain:

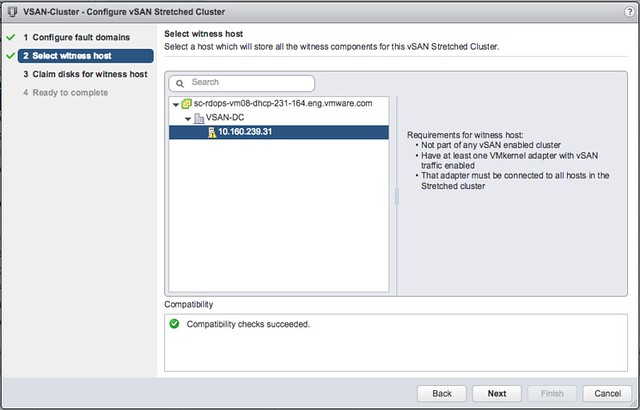

Select the Witness host:

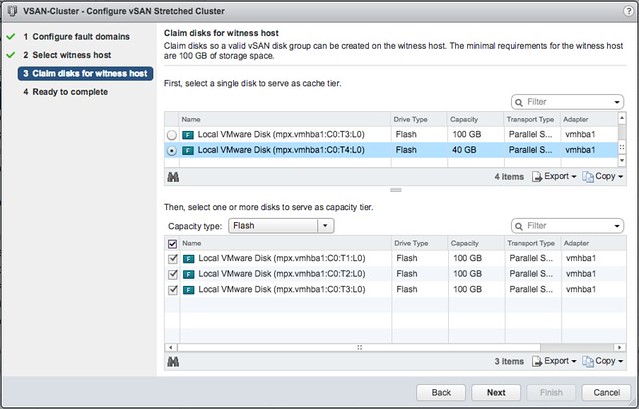

Select the witness disks for the vSAN cluster:

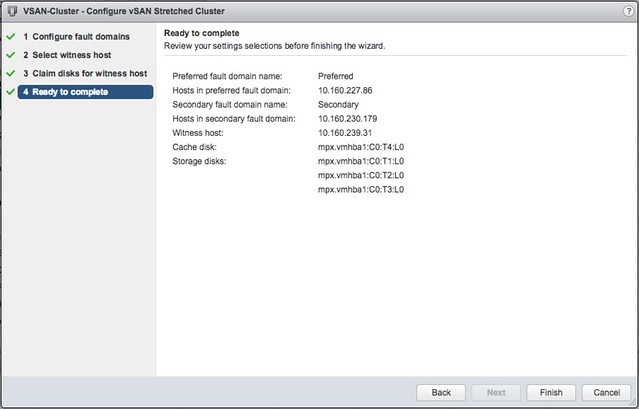

Click Finish:

And then the 2-node stretched cluster is formed using a Standard or ROBO license:

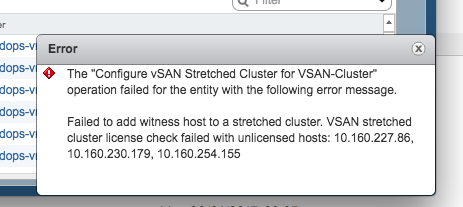

Of course I tried the same with 3 hosts, which failed as my license does not allow me to create a stretched cluster larger than 1+1+1. And even if it had succeeded, the EULA clearly states that you are not allowed to do so. You need Enterprise licenses for that.

There you have it. Two host stretched using vSAN Standard, nice right?!

How HA handles a VSAN Stretched Cluster Site Partition

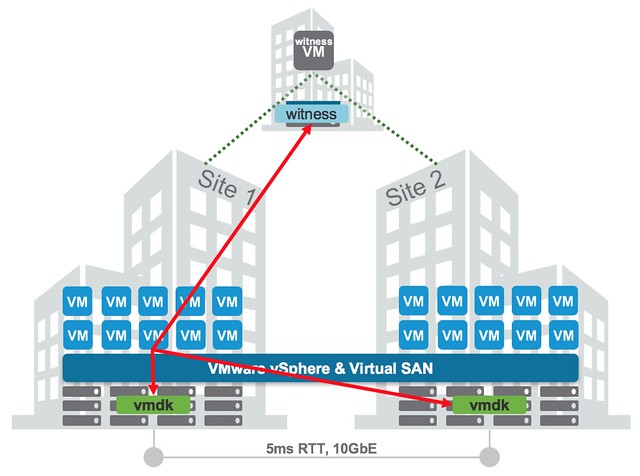

Over the past couple of weeks I have had some interesting questions from folks about different VSAN Stretched failure scenarios, in particular about what happens during a VSAN Stretched Cluster site partition and how HA and VSAN know which VMs to fail over and which VMs to power off. There are a couple of things I would like to clarify. First, let's start with a diagram that sketches a stretched scenario. In the diagram below you see 3 sites: two which are “data” sites and one which is used for the “witness”. This is a standard VSAN Stretched configuration.

The typical question now is: what happens when Site 1 is isolated from Site 2 and from the Witness Site? (While the Witness and Site 2 remain connected.) Is the isolation response triggered in Site 1? What happens to the workloads in Site 1? Are the workloads restarted in Site 2? If so, how does Site 2 know that the VMs in Site 1 are powered off? All very valid questions if you ask me, and if you read the vSphere HA deepdive on this website closely, letter for letter, you will find all the answers in there. But let's make it a bit easier for those who don’t have the time.

First of all, all the VMs running in Site 1 will be powered off. Let it be clear that this is not done by vSphere HA and is not the result of an “isolation”, as technically the hosts are not isolated but partitioned. The VMs are killed by a VSAN mechanism, and they are killed because they no longer have access to any of their components. (Local components are not accessible as there is no quorum.) You can disable this mechanism, by the way, through the advanced host settings, although I discourage you from doing so: set the advanced host setting called VSAN.AutoTerminateGhostVm to 0.

In the second site a new HA master node will be elected. That master node will validate which VMs are supposed to be powered on, it knows this through the “protectedlist”. The VMs that were on Site 1 will be missing, they are on the list, but not powered on within this partition… As this partition has ownership of the components (quorum) it will now be capable of powering on those VMs.

Finally, how do the hosts in Partition 2 know that the VMs in Partition 1 have been powered off? Well, they don’t. However, Partition 2 has quorum (meaning that it has the majority of the votes / components, 2 out of 3) and as such ownership. The hosts do know that this means it is safe to power on those VMs, as the VMs in Partition 1 will be killed by the VSAN mechanism.
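The reasoning above can be condensed into a small decision sketch. It assumes one vote each for Site 1, Site 2 and the Witness, which is a simplification, and the function names and outcome strings are invented for illustration. Nothing here reflects how vSphere HA or VSAN is actually implemented.

```python
VOTES = {"Site 1": 1, "Site 2": 1, "Witness": 1}


def has_quorum(partition):
    """A partition has quorum when it holds more than half of all votes."""
    return 2 * sum(VOTES[site] for site in partition) > sum(VOTES.values())


def vm_outcome(vm_site, partitions):
    """Describe what happens to a VM that was running in vm_site."""
    own_partition = next(p for p in partitions if vm_site in p)
    if has_quorum(own_partition):
        return "keeps running"
    # Without quorum the VM loses access to its components and is powered off.
    if any(has_quorum(p) for p in partitions):
        return "powered off, restarted in the partition with quorum"
    return "powered off, no partition can restart it"


# Site 1 is cut off; Site 2 and the Witness can still talk to each other.
partitions = [{"Site 1"}, {"Site 2", "Witness"}]
print(vm_outcome("Site 1", partitions))  # powered off, restarted in the partition with quorum
print(vm_outcome("Site 2", partitions))  # keeps running
```

Note that the partition with quorum never needs to ask the other side anything: holding 2 out of 3 votes is enough to decide that a restart is safe.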

I hope that helps. For more details, make sure to read the clustering deepdive, which can be downloaded here for free.

vMSC and Disk.AutoremoveOnPDL on vSphere 6.x and higher

I have discussed this topic a couple of times, and want to inform people about a recent change in recommendation. In the past, when deploying a stretched cluster (vMSC), it was recommended by most storage vendors and by VMware to set Disk.AutoremoveOnPDL to 0. This basically disabled the feature that automatically removes LUNs which are in a PDL (permanent device loss) state. Upon return of the device, a rescan would then allow you to use the device again. With vSphere 6.0, however, there has been a change to how vSphere responds to a PDL scenario: vSphere does not expect the device to return. To be clear, the PDL behaviour in vSphere was designed around the removal of devices. They should not stay in the PDL state and return for duty. This did work in previous versions, however, due to a bug.

With vSphere 6.0 and higher, VMware recommends setting Disk.AutoremoveOnPDL to 1, which is the default setting. If you are a vMSC / stretched cluster customer, please change your environment and design accordingly. But before you do, please consult your storage vendor and discuss the change. I would also like to recommend testing the change and behaviour to validate that the environment returns for duty correctly after a PDL! Sorry about the confusion.
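If you already export the advanced settings of your hosts, a quick check for hosts still carrying the old value could look like the sketch below. It works on a plain dictionary. How you collect the values is up to you, and the host names and the `non_compliant_hosts` helper are hypothetical.

```python
# Recommended value for vSphere 6.0 and higher, per the text above.
RECOMMENDED = {"Disk.AutoremoveOnPDL": 1}


def non_compliant_hosts(host_settings):
    """Return hosts whose settings differ from (or lack) the recommended values."""
    return sorted(
        host
        for host, settings in host_settings.items()
        if any(settings.get(key) != value for key, value in RECOMMENDED.items())
    )


# Hypothetical export: esx01 still has the old vMSC recommendation applied.
exported = {
    "esx01.lab.local": {"Disk.AutoremoveOnPDL": 0},
    "esx02.lab.local": {"Disk.AutoremoveOnPDL": 1},
}
print(non_compliant_hosts(exported))  # ['esx01.lab.local']
```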

KB article backing my recommendation was just posted: https://kb.vmware.com/kb/2059622. Documentation (vMSC whitepaper) is also being updated.