I am at VMware Partner Exchange this week and figured I would share some of the Virtual SAN related updates.

- On the 6th of March there is an online Virtual SAN event with Pat Gelsinger, Ben Fathi and John Gilmartin… Make sure to register for it!

- Ben Fathi (VMware CTO) stated that VSAN will be GA in Q1, more news in the upcoming weeks

- Maximum cluster size has been increased from 8 (beta) to 16 according to Ben Fathi. The VMware VSAN engineering team is ahead of schedule!

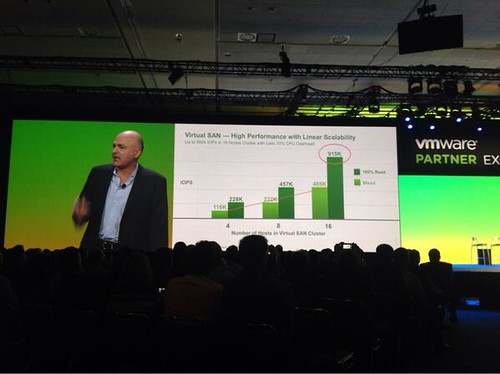

- VSAN has linear scalability, with close to a million IOPS with 16 hosts in a cluster (100% read, 4K blocks). Mixed IOPS are close to half a million. All of this with less than 10% CPU/Memory overhead. That is impressive if you ask me. Yeah yeah I know, numbers like these are just a part of the overall story… still it is nice to see that these kinds of performance numbers can be achieved with VSAN.

- I noticed a tweet by Chetan Venkatesh and it looks like Atlantis ILIO USX (in memory storage solution) has been tested on top of VSAN and they were capable of hitting 120K IOPS using 3 hosts, WOW. There is a white paper on this topic to be found here, interesting read.

- It was also reiterated that customers who sign up and download the beta will get a 20% discount on the first purchase of 10 VSAN licenses or more!

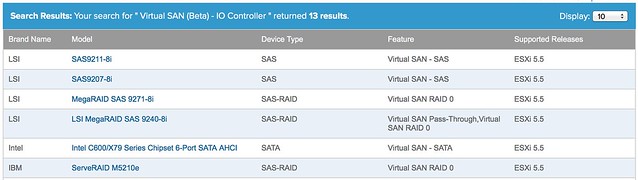

- Several hardware vendors announced support for VSAN. A nice short summary by Alberto can be found here.

I have been working on a slidedeck lately that explains how to build a hyper-converged platform using VMware technology. Of course it is heavily focusing on Virtual SAN as that is one of the core components in the stack. I created the slidedeck based on discussions I have had with various customers and partners who were looking to architect a platform for their datacenters that they could easily repeat. A platform which had a nice form factor and allowed them to scale out and up. Something that could be used in a full size datacenter, but also in smaller SMB type environments or ROBO deployments even.
