Someone at our internal field conference asked me why a full backup of a virtual machine on a VSAN datastore is slower than the same exercise for that virtual machine on a traditional storage array. Note that the test was conducted with a single virtual machine. The best way to explain why is to look at the architecture of VSAN. First, let me mention that the full backup on the traditional array was done on a storage system that had many disks backing the datastore on which the virtual machine was located.

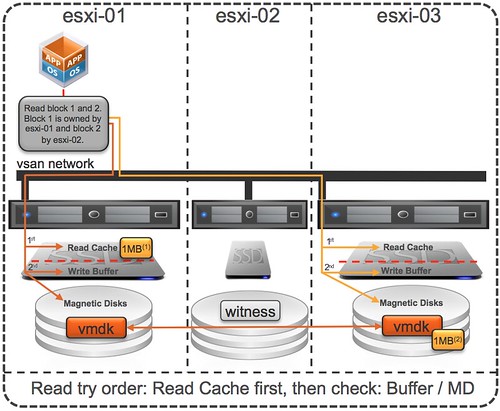

Virtual SAN, as hopefully all of you know, creates a shared datastore out of host-local resources. This datastore is formed out of disk and flash. Another thing to understand is that Virtual SAN is an object store. Each object is typically stored in a resilient fashion, and as such its data is mirrored across two hosts, with a witness component on a third host to break ties; hence 3 hosts is the minimum. By default the components of an object are not striped, which means that each component is in most cases stored on a single physical spindle. For an object this means, as you can see in the diagram, that the disk (object) has two components and, without stripes, is stored on two physical disks.
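The placement rules above boil down to simple arithmetic. Below is a minimal Python sketch, assuming a mirrored (RAID-1) policy where "number of failures to tolerate" (FTT) = 1 gives two replicas and "number of disk stripes per object" controls how many disks each replica is spread over. The function name `raid1_layout` and its parameters are hypothetical illustrations, not a VSAN API, and witness component counts are left out because they depend on placement.

```python
def raid1_layout(ftt: int = 1, stripe_width: int = 1) -> dict:
    """Rough replica, data-component and host counts for a mirrored object.

    Assumes FTT+1 full replicas, each replica striped across `stripe_width`
    capacity disks, and 2*FTT+1 hosts so that a witness can break ties.
    """
    if ftt < 0 or stripe_width < 1:
        raise ValueError("ftt must be >= 0 and stripe_width >= 1")
    replicas = ftt + 1
    return {
        "replicas": replicas,
        # Each stripe of each replica is its own component on its own disk.
        "data_components": replicas * stripe_width,
        "min_hosts": 2 * ftt + 1,
    }
```

With the defaults (FTT = 1, no striping) this gives two data components on two disks and a three-host minimum, matching the diagram; `raid1_layout(stripe_width=4)` spreads the same object over eight disks.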

Now let's get back to the original question. Why did the backup on VSAN take longer than with a traditional storage system? It is fairly simple to explain using the information above. With the traditional storage array you are reading from many disks (10+), but with VSAN you are only reading from 2 disks. As you can imagine, when reading from disk, the throughput you get depends on how many disks you are reading from and what those disks can deliver combined. In this test only a single virtual machine was being backed up, so VSAN had fewer disks (resources) at its disposal. On top of that, as the VM was new, no data was cached, so the flash layer was not used.

Depending on your workload you can of course decide to stripe the components, but when it comes to backup you can also decide to increase the number of concurrent backups. If you increase the number of concurrent backups, the results will get closer, as more disks are leveraged across all VMs. I hope that helps explain why results can differ, and that everyone understands that when you test things like this, parallelism is important, or you need to provide the right stripe width.
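To make the "number of disks" argument concrete, here is a toy Python model of a cold full read. It is a sketch under stated assumptions rather than a sizing tool: throughput is assumed to scale linearly with the number of spindles being read, the flash layer is assumed to contribute nothing (nothing is cached yet), and concurrent backups are assumed to land on different disks until the pool runs out. The function name `backup_minutes`, its parameters and all numbers used with it are hypothetical.

```python
def backup_minutes(vm_size_gb: float, vms: int, disks_per_vm: int,
                   total_disks: int, mb_s_per_disk: float) -> float:
    """Wall-clock minutes to fully read `vms` equally sized VMs in parallel.

    Toy model (assumption, not a measurement): each VM's data sits on
    `disks_per_vm` spindles, different VMs use different spindles until
    `total_disks` is exhausted, and the read cache contributes nothing.
    """
    if min(vm_size_gb, vms, disks_per_vm, total_disks, mb_s_per_disk) <= 0:
        raise ValueError("all arguments must be positive")
    # Spindles actually busy serving reads for this batch of backups.
    engaged = min(disks_per_vm * vms, total_disks)
    aggregate_mb_s = engaged * mb_s_per_disk
    total_mb = vm_size_gb * 1024 * vms
    return total_mb / aggregate_mb_s / 60
```

With made-up figures of 100 MB/s per spindle and a 100 GB VM, the model has a single VSAN backup reading from 2 disks take five times as long as the array reading from 10, while five concurrent backups (10 disks engaged on a hypothetical 12-disk VSAN cluster) finish in the same wall-clock time on both. Setting `disks_per_vm` to 8 mimics a stripe width of 4 on a mirrored object.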

Nice post. Simple and informative. 🙂

I think a lot of us forget that there are details like this which exist below the abstraction layer(s) and can have big effects on performance (or security, availability, manageability, etc.). As you mention, due to the fairly significant differences in design between Virtual SAN and traditional arrays, a benchmark that looks “the same” on both does not in fact account for all common use cases. There will always be corner cases that expose the weaknesses in any platform.

That said, if my use case involves backing up a single VM, or several VMs sequentially, it is good for me to understand the differences in performance between Virtual SAN and a traditional array so that I understand how my use case will be affected. That understanding provides valuable insight that helps me optimize my backup processes, possibly by introducing more parallelism. As you explain, in the case of VSAN, that parallelism may provide better performance because it spreads the load across more disk spindles rather than causing contention on the same set of spindles, as might be the case in a traditional array.

With all of the layers of cloud and virtualization, at some point, someone needs to care about how the “stuff” works.

I got asked by a customer, “Why is the first phase of a VMware View recompose (a full clone to build a replica) slower on VSAN?” We did notice that increasing the stripe size fixed this. Another thing that at least helps with replicas: if you’re doing a lot of recomposes (testing a new build and incrementing it), the data ends up in cache pretty quickly and the recompose is a lot faster.

Experts,

Do we need additional backup tools with a vSAN distributed RAID array? It is already RAID.

Don’t you always need a backup? Even with a regular storage array, which already provides RAID?

Does the backup process drastically affect the service life of the SSD cache drives in a VSAN environment? When a product like Veeam B&R uses the snapshot process to back up a VM, doesn’t all that data flow through the SSD cache?

If so, we’ll need to factor that into the total drive writes per day when selecting an SSD caching drive.

I’m looking for the best design to get the longest lifespan out of the SSDs. We were looking at SanDisk’s Lightning Ascend SSD drives, which I believe are what Dell rebrands and uses in its servers.
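Factoring backup traffic into the drive-writes-per-day (DWPD) budget is plain arithmetic once you have an estimate. The Python sketch below assumes you can estimate how many GB per day of backup traffic end up written to the cache tier; whether and how much that happens is exactly the open question above, so the sketch does not guess it. The function names `required_dwpd` and `rated_dwpd` are hypothetical helpers, and the TBW-to-DWPD conversion is the usual rating divided by capacity times warranty days.

```python
def required_dwpd(vm_writes_gb_per_day: float,
                  backup_cache_writes_gb_per_day: float,
                  cache_capacity_gb: float) -> float:
    """Drive writes per day the cache SSD would need to sustain.

    `backup_cache_writes_gb_per_day` is an estimate you must supply
    (assumption); this sketch only adds it to the normal VM write load.
    """
    if cache_capacity_gb <= 0:
        raise ValueError("cache_capacity_gb must be positive")
    total_gb = vm_writes_gb_per_day + backup_cache_writes_gb_per_day
    return total_gb / cache_capacity_gb


def rated_dwpd(tbw_tb: float, capacity_tb: float, warranty_years: float) -> float:
    """Convert a vendor TBW (terabytes written) rating into DWPD."""
    if capacity_tb <= 0 or warranty_years <= 0:
        raise ValueError("capacity_tb and warranty_years must be positive")
    return tbw_tb / (capacity_tb * warranty_years * 365)
```

A candidate drive is only a fit if its `rated_dwpd` stays comfortably above `required_dwpd` for your estimated workload; leaving headroom covers the uncertainty in the backup-traffic estimate.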