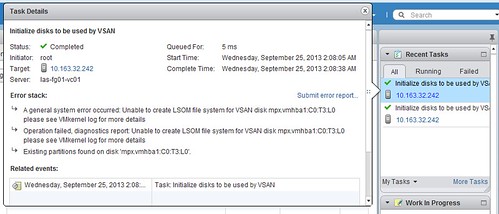

I’ve seen various people report the following: they create a disk group in Virtual SAN (VSAN), the task completes successfully, but no disks show up. Strange, right, given that it shows a green checkmark?! I ran into the exact same scenario today, and if you click the task, more details are revealed, as shown in the screenshot.

There are a couple of reasons why this can happen:

- No license has been assigned

  - VSAN licenses are applied at the cluster level. Open the Web Client, click your VSAN-enabled cluster, then click the “Manage” tab followed by “Settings”. Under “Configuration”, click “Virtual SAN Licensing” and then “Assign License Key”.

- Running nested, and the virtual disks used are too small

  - The SSD/HDD needs to be at least 4GB (I think; I have not tested this extensively though)

- An existing partition was found on the disk

  - Wipe the disk before using it; you can do this using partedUtil, for instance.
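For the partition case, here is a rough sketch of how you could work out which partitions to remove. It assumes the `partedUtil getptbl` output looks the way I remember it (a label line, a geometry line, then one line per partition starting with the partition number), so treat the parsing as an assumption and check it against your own host. The device name and the `SAMPLE` text are made up for illustration. The script only prints the `partedUtil delete` commands and does not run them, because deleting partitions is destructive.

```python
def parse_getptbl(output):
    """Parse `partedUtil getptbl` output into (label, partition numbers).

    Assumed layout: line 1 is the label (gpt, msdos or unknown),
    line 2 is the disk geometry, every later line is one partition
    whose first field is the partition number.
    """
    lines = [ln.strip() for ln in output.strip().splitlines() if ln.strip()]
    if not lines:
        return "unknown", []
    label = lines[0]
    # Skip the label and geometry lines; the rest are partition rows.
    partitions = [int(ln.split()[0]) for ln in lines[2:]]
    return label, partitions


def wipe_commands(device, output):
    """Build one `partedUtil delete` command per partition found."""
    _, partitions = parse_getptbl(output)
    path = "/vmfs/devices/disks/" + device
    return ["partedUtil delete {} {}".format(path, n) for n in partitions]


# Hypothetical output for a small nested disk with one VMFS partition.
SAMPLE = """gpt
1305 255 63 20971520
1 2048 20971486 AA31E02A400F11DB9590000C2911D1B8 vmfs 0
"""

if __name__ == "__main__":
    # "mpx.vmhba1:C0:T1:L0" is a placeholder device name.
    for cmd in wipe_commands("mpx.vmhba1:C0:T1:L0", SAMPLE):
        print(cmd)
```

On a real host you would feed it the output of `partedUtil getptbl /vmfs/devices/disks/<device>` and review the printed commands before running any of them.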

If you can’t figure out why it happens, make sure to check the task details, as they can give a pretty good hint. Even though the task looks like it was successful, errors could still be in there.