I have had this question multiple times by now. I wanted to answer it in the Virtual SAN FAQ, but I figured I would need some diagrams and probably more than 2 or 3 sentences to explain this. How are host or disk failures in a Virtual SAN cluster handled? I guess let's start at the beginning, and I am going to try to keep it simple.

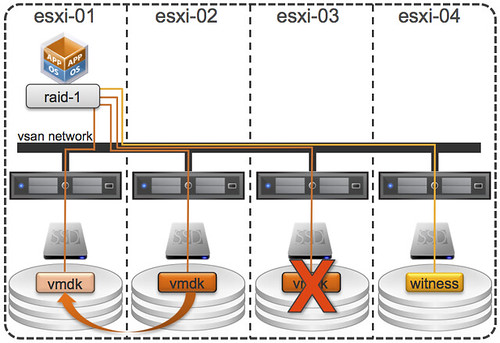

I explained some of the basics in my VSAN intro post a couple of weeks back, but it never hurts to repeat this. I think it is good to explain the IO path first before talking about the failures. Let's look at a 4 host cluster with a single VM deployed. This VM is deployed with the default policy, meaning a “stripe width” of 1 and “failures to tolerate” set to 1 as well. When deployed in this fashion the following is the result:

In this case you can see: 2 mirrors of the VMDKs and a witness. These VMDKs by the way are the same, they are an exact copy. What else did we learn from this (hopefully) simple diagram?

- A VM does not necessarily have to run on the same host as where its storage objects are sitting

- The witness lives on a different host than the components it is associated with in order to create an odd number of hosts involved for tiebreaking under a network partition

- The VSAN network is used for communication / IO etc
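To make the placement rules above a bit more concrete, here is a minimal Python sketch. It is not how VSAN actually implements placement; the function name `place_object`, the naive "take the first hosts" choice, and the assumption of one witness per tolerated failure are mine, purely for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    kind: str  # "mirror" or "witness"
    host: str


def place_object(hosts, failures_to_tolerate=1):
    """Place mirrors and witnesses, each on a different host.

    Assumption for this sketch: failures_to_tolerate + 1 mirrors and
    failures_to_tolerate witnesses, so the total is always odd.
    """
    mirrors = failures_to_tolerate + 1
    witnesses = failures_to_tolerate
    needed = mirrors + witnesses
    if len(hosts) < needed:
        raise ValueError(f"need at least {needed} hosts, got {len(hosts)}")
    # Naive choice: the first hosts in the list. Real placement would look
    # at capacity and more; that is outside the scope of this sketch.
    kinds = ["mirror"] * mirrors + ["witness"] * witnesses
    return [Component(kind, host) for kind, host in zip(kinds, hosts[:needed])]


if __name__ == "__main__":
    for component in place_object(["esxi-02", "esxi-03", "esxi-04"]):
        print(component.kind, "on", component.host)
```

With the default policy this gives two mirrors and one witness on three different hosts. Putting the witness on “esxi-04” is just what the example input produces, not something to read into the diagram.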

Okay, so now that we know these facts it is also worth knowing that VSAN will never place the mirror copies on the same host, for availability reasons. When a VM writes, the IO is mirrored by VSAN and will not be acknowledged back to the VM until all mirror writes have completed. Meaning that in the example above the acknowledgements from both “esxi-02” and “esxi-03” will need to have been received before the write is acknowledged to the VM. The great thing here though is that all writes will go to flash/SSD, this is where the write buffer comes into play. At some point in time VSAN will then destage the data to your magnetic disks, but this will happen without the guest VM knowing about it…
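The write path described above can be sketched in a few lines of Python. This is a toy model under my own assumptions: the `Replica` class, its dictionaries standing in for the flash write buffer and the magnetic disk, and the `mirrored_write` function are all hypothetical names, not VSAN internals.

```python
class Replica:
    """One mirror copy on one host (toy model)."""

    def __init__(self, host):
        self.host = host
        self.write_buffer = {}   # stands in for the flash write buffer
        self.magnetic_disk = {}  # stands in for the magnetic disks

    def write(self, block, data):
        # Writes land in the write buffer first and are acknowledged.
        self.write_buffer[block] = data
        return True

    def destage(self):
        # Later, and invisible to the VM, data moves to magnetic disk.
        self.magnetic_disk.update(self.write_buffer)
        self.write_buffer.clear()


def mirrored_write(replicas, block, data):
    """Acknowledge to the VM only when every mirror has acknowledged."""
    acks = [replica.write(block, data) for replica in replicas]
    return all(acks)


if __name__ == "__main__":
    mirrors = [Replica("esxi-02"), Replica("esxi-03")]
    print("acknowledged to VM:", mirrored_write(mirrors, block=1, data=b"hello"))
```

The point of the sketch is only the ordering: the VM gets its acknowledgement after both mirrors have the write in their buffer, and destaging happens separately afterwards.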

But let's talk about failure scenarios, as that is why I started writing this in the first place. Let's take a closer look at what happens when a disk fails. The following diagram depicts the scenario where the magnetic disk of “esxi-03” fails.

In this scenario you can see that the disk of “esxi-03” has failed. VSAN responds to this failure, depending on the type, by marking all impacted components (VMDK in this example) as “degraded” and immediately creating a new mirror copy. Of course, before it creates this mirror VSAN will validate whether there are sufficient resources to store this new copy. The great thing here is that the virtual machine will not notice this. Well, that is not entirely true of course… The VM could be impacted performance-wise if reads need to come from disk, as in this case there is only 1 disk left to serve them instead of the 2 before the failure.

One thing I found interesting was that if there are not enough resources to create that mirror copy, VSAN will just wait until resources are added. Once you have added a new disk, or even a host, the recovery will begin. In the meantime the VM can still do IO as mentioned before, so the VMs continue to operate as normal.
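As a rough illustration of the "rebuild now if there is room, otherwise wait" behaviour, here is a small Python sketch. The function `pick_rebuild_host`, the per-host free capacity numbers, and the "most free space wins" rule are assumptions of mine; the real resource check is certainly more involved.

```python
from typing import Dict, Optional, Set


def pick_rebuild_host(
    component_gb: int,
    free_gb_by_host: Dict[str, int],
    hosts_to_avoid: Set[str],
) -> Optional[str]:
    """Return a host for the new mirror copy, or None if VSAN has to wait.

    hosts_to_avoid would hold the host with the surviving mirror, since
    two mirrors never share a host.
    """
    candidates = {
        host: free
        for host, free in free_gb_by_host.items()
        if host not in hosts_to_avoid and free >= component_gb
    }
    if not candidates:
        return None  # component stays degraded until capacity is added
    # Assumed tie-break for this sketch: the host with the most free space.
    return max(candidates, key=candidates.get)


if __name__ == "__main__":
    free = {"esxi-01": 100, "esxi-02": 500, "esxi-03": 10}
    print(pick_rebuild_host(40, free, hosts_to_avoid={"esxi-02"}))
```

When the function returns `None`, nothing happens to the VM in this model either: it simply keeps running on the one remaining mirror until a disk or host is added and the call succeeds.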

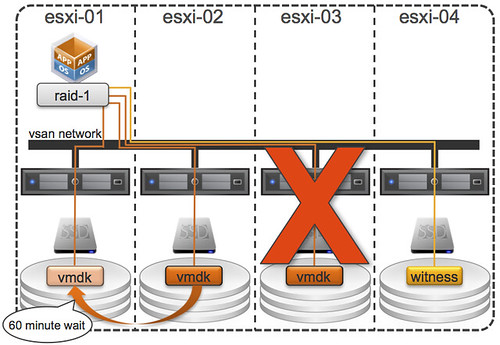

So now that we know how VSAN handles a disk failure, what if a host fails? Let's paint a picture again:

This scenario is slightly different to the “disk failure”. In the case of the disk failure VSAN knew what happened, it knew the disk wasn’t coming back… But in the case of a host failure it doesn’t. This failure state is called “absent”. As soon as VSAN realizes a component (VMDK in the example above) is absent, a timer of 60 minutes will start, as explained already in the VSAN FAQ. If the component comes back within those 60 minutes, VSAN will synchronize the mirror copies. If the component doesn’t come back, then VSAN will create a new mirror copy (component). Note that you can decrease this time-out value by changing the advanced setting called “VSAN.ClomRepairDelay”. (Please consult the manual or support if you want to change this value!) If for whatever reason the component returns after VSAN has started resyncing, then VSAN will try to assess whether it makes more sense to bring the existing but outdated component in sync or to continue the creation of the new component. On top of that, VSAN also has a “rebuild throttling / QoS” mechanism, which will throttle back replication traffic during a rebuild when this can impact virtual machine performance.
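The timer logic for an “absent” component can be summarized as a small decision function. This is only a sketch of the behaviour described above: the function name, its parameters and the returned action strings are made up for illustration, and the 60 minute default simply mirrors the time-out mentioned in the text.

```python
def absent_component_action(minutes_absent, returned, repair_delay_minutes=60):
    """Decide what to do with a component that went "absent".

    minutes_absent: how long the component has been gone.
    returned: True if the component has just come back.
    """
    if returned and minutes_absent < repair_delay_minutes:
        # Back within the window: just sync the mirror copies.
        return "resync-existing"
    if returned:
        # Back after the rebuild started: weigh catching up the old
        # component against finishing the new one.
        return "assess-resync-or-continue-rebuild"
    if minutes_absent >= repair_delay_minutes:
        # Timer expired and still gone: build a new mirror copy.
        return "create-new-mirror"
    return "wait"


if __name__ == "__main__":
    print(absent_component_action(10, returned=False))
    print(absent_component_action(10, returned=True))
    print(absent_component_action(75, returned=False))
    print(absent_component_action(75, returned=True))
```

Compare this with the disk failure case, where the component is marked “degraded” and there is no waiting period at all.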

Easy right? I know, these new concepts can be difficult to grasp at first… Hence the reason I may sound somewhat repetitive at times, but this is valuable to know in my opinion. In the next article I will explain how the Isolation / Partition scenario works and include some HA logic in it. Before I forget, I want to thank Christian Dickmann (VSAN Dev Team) for reviewing this article.

Great post.. that helps simplify it!

Thanks!

When you say that VSAN will wait for resources, does that mean the vm is paused until the recovery is complete (because of the policy of acknowledging writes after all replicas have confirmed)?

If it doesn’t pause, what happens with the IO policy? Is that restriction removed until recovery occurs? Can this policy be configured?

Thanks!

The VM notices nothing either way, so the VM isn’t paused or halted. It can do IO just like before, only there is just one mirror copy instead of two.

If an SSD fails what happens?

When the SSD fails then all components on that disk group will be marked as degraded.

In the example above, if the SSD in ESXi-03 fails and there is another SSD in ESXi-03, will that be used?

And if there is a 2nd SSD in ESXi-03, will another node be used for the SSD? What happens to the data on the HDD, will it be copied across? Also, will the cache in the new storage node be warmed with the cache on the remaining storage node, or will the SSD on the remaining node be invalidated?

A flash device is directly coupled to a group of disks (aka disk group). When the flash device fails, the disk group and all its components will be marked degraded. There is no such thing as a hot-spare SSD at the moment, so the answer is no, it will not be used.

New objects will be created when sufficient capacity is available, and once they exist and VMs read / write, the cache will be warmed. Read cache is only stored once, hence the need to warm up again.

At first I was thinking VSAN is wasting space by writing the VMDK twice, but then I realized I’m supposed to pass through the storage controller anyway. Which means, instead of a typical traditional RAID10 configuration, I am assuming VSAN is just using RAID0 stripes. Redundancy is accomplished through the VSAN host cluster versus the disk RAID. After munching on that information for a bit, it’s not so bad after all. Do you know off the top of your head if the HP P410i included in the HP DL380 is allowed for passthrough?

No, the P410i in the HP DL380 G7 doesn’t support pass-through.

What happens in your scenarios if one of the 4 hosts is in maintenance mode. Does the next failure shut down the environment? Are there cleanup procedures that start when a host/disk is back online?

Nothing will ever shut down the environment… However, VM storage might become unavailable when there are various failures.

Yes there is a cleanup process which will make sure disk capacity of a previously failed host becomes available again when there are already new copies instantiated.

Great post, and easy to understand. Thanks!

I have seen incidents where a power outage happens in the data center. With a conventional SAN or NAS, the data is retrieved (100% most of the time, though you may need to restart the SAN box in some cases). How do we handle that here with VSAN?

If a physical hard disk fails in one disk group which has two or more physical hard disks, what will happen?

Will this situation cause all components on that disk group be marked as degraded?

Only the components on the disks that have failed will be marked as degraded

So if the host (esxi-03) comes back after the 60 minutes have elapsed, what happens to the existing data (from the previous mirror)? Will VSAN automatically reformat the disk before adding it back to the disk group? And lastly, is there any way to manipulate the configuration of the witness object in case I would like to fall back to esxi-03?

Is it also possible to build a solution with only 3 hosts? VMware says you need at least 3 ESXi hosts running version 5.5.

3 host minimum

Hi Duncan,

Your IO path explanation is good and easy to understand. But you are talking only about write IO… What about reads? How does VSAN handle read IO when all is running fine? Is VSAN reading blocks from both nodes (from SSD or HDD) where the replicated copies are located? Or is VSAN reading from only one copy (only one node)?

If it is only one, is there a way to know which node it is?

Thanks !

It will read from both hosts, but it will differ per “block”. Depends on who “actively” owns what I guess. So let's say for “block 1” it will read from “host 1” and for “block 2” it will read from “block 2”. It will read from the read cache normally; if the block is not available there then it will try the write buffer, and if it isn’t in the write buffer either it will read it from disk.

Hope that helps,

I guess you meant: “So let's say for “block 1” it will read from “host 1” and for “block 2” it will read from “host 2””

So does it mean that if host 1 is failing (host, disk group or network failure) at the moment when VSAN is trying to read block 1, it will then try to read it from host 2? If yes, then it means that in this event read latency will increase for the VM…

Right ?

Correct.

How does this scenario play out with 3 ESXi hosts? Does VSAN write the VMDK file to the same host where the witness is stored, or does it not rebuild the VMDK file?

Thanks for Reply….

I’m trying to wrap my head around the VMDK and witness pieces. So it sounds like it creates multiple copies (3 in your example) of the entire VMDK on separate hosts? In a case where a VM has multiple disks, it would have 2, 3, 4 etc. Is 3 the max number of copies it’s keeping? 2 of the VMDKs and one witness? Which sounds like it’s just another VMDK? Are these full copies of the VMDKs that it keeps on the other hosts?

I find the title a little misleading. Host failure could lead to downtime if the VM is running on the Host that fails, leading to an HA event. I’d expect the host to hold one of the copies of the VM local for optimal performance, which may lead to both the active VM and the storage to fail over.

At least, that’s what I expect? Am I incorrect?

Nope, there is no such thing as data locality… so the bigger the cluster, the bigger the chance your VM is running on a host which is not carrying any of its objects!

Maybe you have already addressed this question. If you lose a server or even one or more disks inside the disk group, data is still available because of the mirror on another host. You are left with just one copy of the data until you replace the units and the software completes the re-mirroring, right? If you need more protection you have to triple-mirror the data, right? Any option for parity protection instead of mirrors in the future? Many thanks in advance!

That is correct indeed. The software will complete the re-mirroring when components are replaced OR the 60 minutes have passed OR, if VSAN knows that components are not coming back any time soon, it will instantly re-mirror to free disk space in the cluster.

You can have different levels of protection: N+1 / 2 / 3. No option for parity now, and I can’t talk about the future unfortunately.