After explaining how a disk or host failure works in a VSAN cluster, it only made sense to take the next step… How are isolations or partitions in a Virtual SAN cluster handled? Let's start at the beginning, and I am going to try to keep it simple. First, a recap of what we learned in the disk/host failures article.

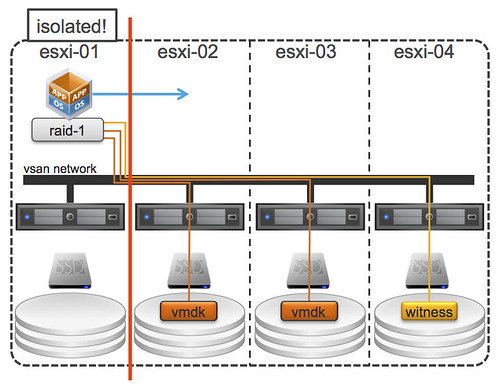

Virtual SAN (VSAN) has the ability to create mirrors of objects. This ability is defined within a policy (VM Storage Policy, aka Storage Policy Based Management). You can set an option called “failures to tolerate” to anywhere between 0 and 3 at the moment. By default this option is set to 1, which means you will have two copies of your data. On top of that, VSAN needs a witness / quorum to help figure out who takes ownership in the case of an event. So what does this look like? Note that in the diagram I used the terms “vmdk” and “witness” to simplify things; in reality this could be any type of component of a VM.

So what did we learn from this (hopefully) simple diagram?

- A VM does not necessarily have to run on the same host where its storage objects reside

- The witness lives on a different host than the components it is associated with in order to create an odd number of hosts involved for tiebreaking under a network partition

- The VSAN network is used for communication, IO and HA
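As a rough illustration of the recap above, the relationship between “failures to tolerate” and the number of data copies fits in a few lines of Python. The function name `copies_for_ftt` is made up for this sketch and is not part of any VMware tooling. It generalizes the statement "a value of 1 means two copies" to n + 1 copies and enforces the 0 to 3 range mentioned above.

```python
def copies_for_ftt(failures_to_tolerate: int = 1) -> int:
    """Return the number of mirrored data copies for a 'failures to tolerate' value.

    Hypothetical helper for illustration only; the default of 1 mirrors
    the default policy value described in the post.
    """
    if not 0 <= failures_to_tolerate <= 3:
        raise ValueError("failures to tolerate must be between 0 and 3")
    # Tolerating n failures requires n + 1 copies of the data.
    return failures_to_tolerate + 1


if __name__ == "__main__":
    for ftt in range(4):
        print(f"FTT={ftt}: {copies_for_ftt(ftt)} data copies")
```

The witness component is deliberately left out of this calculation; it holds no data and only helps decide ownership.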

Let's recap some of the HA changes for a VSAN cluster first, before we dive into the details:

- When HA is turned on in the cluster, FDM agent (HA) traffic uses the VSAN network and not the Management Network. However, when a potential isolation is detected HA will ping the default gateway (or specified isolation address) using the Management Network.

- When enabling VSAN ensure vSphere HA is disabled. You cannot enable VSAN when HA is already configured. Either configure VSAN during the creation of the cluster or disable vSphere HA temporarily when configuring VSAN.

- When there are only VSAN datastores available within a cluster, Datastore Heartbeating is disabled. HA will never use a VSAN datastore for heartbeating: the VSAN network is already used for network heartbeating, so using the datastore for heartbeating as well would not add anything.

- When changes are made to the VSAN network it is required to re-configure vSphere HA!

As you can see, the VSAN network plays a big role here, an even bigger one than you might realize, as it is also used by HA for network heartbeating. So what if the host on which the VM is running gets isolated from the rest of the network? The following would happen:

- HA will detect there are no network heartbeats received from “esxi-01”

- HA master will try to ping the slave “esxi-01”

- HA will declare the slave “esxi-01” is unavailable

- VM will be restarted on one of the other hosts… “esxi-02” in this case, but that could be any, depicted in the diagram below

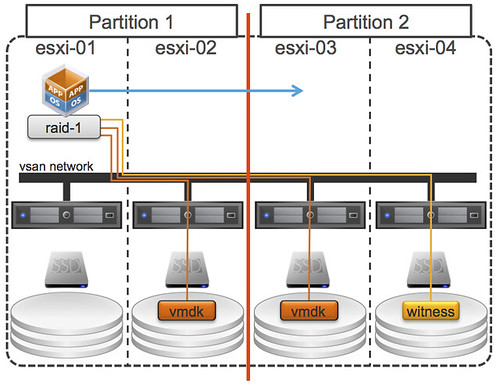

Simple, right? Before I forget, for these scenarios it is important to ensure that your isolation response is set to power-off. But I guess the question now arises… what if “esxi-01” and “esxi-02” were part of the same partition? What happens then? Well, that is where the witness comes into play. Let me show the diagram first, as that will make it a bit easier to understand!

Now this scenario is slightly more complex. There are two partitions: one partition is running the VM and holds one of its VMDKs, and the other partition has a VMDK and a witness. Guess what happens? Right, VSAN uses the witness to see which partition has quorum, and based on that fact one of the two will win. In this case Partition-2 has more than 50% of the components of this object and as such is the winner. This means that the VM will be restarted on either “esxi-03” or “esxi-04” by HA. Note that the VM in Partition-1 will not be powered off, even if you have configured the isolation response to do so, as the hosts in this partition would elect a new master and would still be able to see each other!

But what if “esxi-01” and “esxi-04” were isolated, what would happen then? This is what it would look like:

Remember that rule which I slipped into the previous paragraph? The winner is declared based on the percentage of components available within a partition. If the partition has access to more than 50%, it has won. This means that when “esxi-01” and “esxi-04” are isolated, either “esxi-02” or “esxi-03” can restart the VM, because two of the three components (66%) reside within this part of the cluster. Nice, right?!
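To make the "more than 50% of the components" rule concrete, here is a minimal Python sketch of both scenarios. It is a simplification and not how VSAN is implemented: every component simply counts equally, and the names `COMPONENTS`, `has_quorum` and `winning_partition` are made up for this example. The component placement is inferred from the scenarios in this post. Which of “esxi-03” and “esxi-04” holds the witness is an assumption, but the outcome does not depend on it.

```python
from typing import Iterable, Mapping, Optional, Set

# Assumed placement: one component per host, nothing on "esxi-01",
# which only runs the VM. Witness location is an assumption.
COMPONENTS: Mapping[str, str] = {
    "vmdk-replica-1": "esxi-02",
    "vmdk-replica-2": "esxi-03",
    "witness": "esxi-04",
}


def has_quorum(partition: Set[str],
               components: Mapping[str, str] = COMPONENTS) -> bool:
    """True if the partition can reach more than 50% of the components."""
    reachable = sum(1 for host in components.values() if host in partition)
    return reachable * 2 > len(components)


def winning_partition(partitions: Iterable[Set[str]],
                      components: Mapping[str, str] = COMPONENTS
                      ) -> Optional[Set[str]]:
    """Return the partition that has quorum, or None if no partition does."""
    for partition in partitions:
        if has_quorum(partition, components):
            return partition
    return None


if __name__ == "__main__":
    # Scenario 1: two partitions of two hosts each.
    two_partitions = [{"esxi-01", "esxi-02"}, {"esxi-03", "esxi-04"}]
    print(winning_partition(two_partitions))

    # Scenario 2: "esxi-01" and "esxi-04" are each isolated.
    two_isolated = [{"esxi-01"}, {"esxi-04"}, {"esxi-02", "esxi-03"}]
    print(winning_partition(two_isolated))
```

Because a strict majority is required, at most one partition can ever win, and if every host is isolated on its own the function returns `None`: no host has quorum, so nothing is restarted.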

I hope this makes isolations / partitions a bit clearer. I realize, though, that these concepts will be tough for the first weeks/months… I will try to explore some more (complex) scenarios in the near future.

Great post, this really helps.

I have one question. If esxi-01 and esxi-04 are isolated, with esxi-01/esxi-02 in partition 1 and esxi-03/esxi-04 in partition 2, then neither partition 1 nor partition 2 has quorum. Will the VM be moved but have no access to data, or will it be left alone?

Euuhm, hosts are either in a partition or they are isolated… not both 🙂 If you are asking what happens when all hosts are isolated, then the answer is: nothing. The VM will not be restarted, as none of the hosts will have quorum.

I understand the quorum idea but VSAN response in the second situation (two partitions) seems not optimal. VM will be restarted on esxi-03 or esxi-04 but this will cause downtime. It could keep running on esxi-01 as it still has access to vmdk on esxi-02 so there is no reason (except for the quorum) for restart. Or am I wrong?

So how would the hosts in Partition 2 know that the hosts in Partition 1 are not actually dead? Let's assume we would keep the VM running in Partition 1, and the hosts in Partition 2 restart the VM as well because they don’t see anything in P1… what would happen then?

I think this VM could keep running on esxi-01 if there were a method to make sure it has enough objects in Partition 1 to work normally.

You are right. I guess there is no better way (other than moving the witness outside the cluster which probably would not be the best solution)

Great post,

Could you answer some questions for me?

Picture 2: esxi-01 isolated

– How does the FDM agent make sure esxi-01 is isolated, partitioned or dead before restarting the VM, if it only uses network heartbeats and not datastore heartbeats?

– If FDM declares esxi-01 unavailable based on network heartbeats and restarts the VM on a new host, what about the old instance of this VM on esxi-01? Is it still running or will it be powered off? Do I need to configure the isolation response on the cluster?

– What happens if there is a replica on esxi-01?

Picture 3: esxi-01/02 and esxi-03/04 split into 2 partitions

– Are there differences if the HA master host is esxi-01 or esxi-03?

Thanks,

Hey

A short question about the scenario with two partitions.

Pre-VSAN, Partition 1 wouldn’t have triggered the isolation response because esx01 and esx02 would see each other’s election traffic. Has this changed with VSAN? Because otherwise the VM running on esx01 would not be shut down (which would be nasty if the VM network is not affected by the network problem)!?

Regards

Patrick

You raise a good point, I just noticed the mistake in the article. Partition 1 would get a new master and the VMs would keep on running.

Hey,

I also refer to your scenario with partition 1 and partition 2.

In partition 2 there are more than 50% of the VSAN components (vmdk + witness), so this partition will “win”. But doesn’t that mean that Partition 1 loses and the vmdk in this partition becomes inactive (and as a result, that the VM in partition 1 is unable to run anymore)?

Regards

Bastian