Back in March I wrote about a new and interesting storage vendor called Tintri, which had just released a new NAS appliance called VMstore. I wrote about their level of integration and the fact that their NAS appliance is virtual machine aware and allows you to define performance policies per virtual machine. I am not going to rehash the complete post, so for more details read it before you continue reading this article. During the briefing for that article we discussed some of the caveats with regards to their design and some possible enhancements. Tintri apparently is the type of company that listens to community input and can act quickly. Yesterday I had a briefing on some of the new features Tintri will announce next week. I’ve been told that none of this is under embargo, so I will go ahead and share with you what I feel is very exciting. Before I do though, I want to mention that Tintri now also has teams in APAC and EMEA. As some of you know, they started out only in North America but have now expanded to the rest of the world.

First of all, and this addresses what is probably the most heard complaint, the upcoming Tintri VMstore devices will be available in a dual controller configuration, which probably makes them more interesting to many of you. The more up-time sensitive environments in particular will appreciate this, and who isn’t sensitive about up-time these days? In a virtualized environment where many workloads share a single device, this improvement is more than welcome! The second thing which I really liked is how they enhanced their dashboard. Now this seems like a minor thing, but I can assure you that it will make your life a lot easier. Let me dump a screenshot first and then discuss what you are looking at.

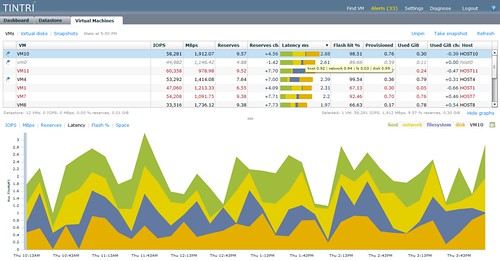

The screenshot shows the per VM latency statistics… Now what is exciting about that? Well, if you look at the bottom you will see the different colors, and each of those represents a specific type of latency. Let’s assume your VM experiences 40ms of latency and your customer starts complaining. The main thing to figure out is what causes this slowdown. (Or in many cases, who can I blame?) Is your network saturated? Is the host swamped? Is it your storage device? In order to identify these types of problems you would need a monitoring tool, and most likely multiple tools, to pinpoint the issue. Tintri decided to hook into vCenter, pull down the various metrics and use these to create the nice graph that you see in the screenshot. This allows you to quickly pinpoint the issue from a single pane of glass. And yes, you can also expect this as a new tab within vCenter.
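
To make the idea a bit more concrete, here is a minimal Python sketch of what such a breakdown boils down to: take the total latency of a VM, split it by where the time was spent and point at the biggest contributor. This is purely my own illustration and not Tintri's implementation. The class name, the three categories (host, network, storage) and the numbers are assumptions I made up for the 40ms example above.

```python
from dataclasses import dataclass


@dataclass
class LatencySample:
    """Hypothetical per-VM latency sample, split by source (milliseconds)."""
    host_ms: float
    network_ms: float
    storage_ms: float

    def total_ms(self) -> float:
        # End-to-end latency as the VM experiences it.
        return self.host_ms + self.network_ms + self.storage_ms

    def dominant_source(self) -> str:
        # The component that contributes the most to the total.
        parts = {
            "host": self.host_ms,
            "network": self.network_ms,
            "storage": self.storage_ms,
        }
        return max(parts, key=parts.get)


# Made-up numbers for the 40ms example: most of the time is spent on the network.
sample = LatencySample(host_ms=4.0, network_ms=30.0, storage_ms=6.0)
print(f"total={sample.total_ms()}ms, look at the {sample.dominant_source()} first")
```

In this made-up case the storage device only accounts for a small part of the 40ms, so blaming the array would be the wrong call. That is exactly the kind of conclusion the colored graph lets you draw at a glance.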

Another great feature which Tintri offers is the ability to realign your VMDKs. Tintri does this, unlike most solutions out there, from the “inside”, meaning that their solution is incorporated into their appliance and is not a separate tool which needs to run against each and every VM. A smart solution which can and will save you a lot of time.
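
For those wondering why alignment matters in the first place, the arithmetic is simple enough to sketch in a few lines of Python. Note that this only illustrates the general misalignment problem, not how Tintri realigns VMDKs. The 4 KiB block size and the function names are assumptions on my part. The start sector of 63 is the classic default of older guest operating systems, and 2048 is what newer ones typically use.

```python
SECTOR_BYTES = 512


def is_aligned(start_sector: int, block_bytes: int = 4096) -> bool:
    """True if the partition starts exactly on a storage block boundary."""
    return (start_sector * SECTOR_BYTES) % block_bytes == 0


def blocks_touched(offset_bytes: int, length_bytes: int, block_bytes: int = 4096) -> int:
    """Number of storage blocks a single I/O of the given length spans."""
    first = offset_bytes // block_bytes
    last = (offset_bytes + length_bytes - 1) // block_bytes
    return last - first + 1


# Compare the old default start sector with the newer 1 MiB offset.
for start_sector in (63, 2048):
    offset = start_sector * SECTOR_BYTES
    print(
        f"start sector {start_sector}: aligned={is_aligned(start_sector)}, "
        f"a 4 KiB guest I/O touches {blocks_touched(offset, 4096)} block(s)"
    )
```

With a start sector of 63, a single 4 KiB I/O from the guest straddles two blocks on the storage side, so the array does more work than it should for every such request. With a start sector of 2048 the same I/O touches exactly one block.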

It’s all great and amazing, isn’t it? Or are there any caveats? One thing I still feel needs to be addressed is replication. It is not available yet with this next release, but is that a problem now that SRM offers vSphere Replication? I guess that relieves some of the immediate pressure, but I would still like to see a native Tintri solution providing async and sync replication. Yes, it will take time, but I would expect that Tintri is working on this. I tried to persuade them to make a statement yesterday, but unfortunately they couldn’t say anything with regards to a timeline / roadmap.

Definitely a booth I will be checking out at VMworld.