I get this question on a regular basis and it has been explained many many times, I figured I would dedicate a blog to it. Now, Cormac has written a very lengthy blog on the topic and I am not going to repeat it, I will simply point you to the math he has provided around it. I do however want to provide a quick summary:

I get this question on a regular basis and it has been explained many many times, I figured I would dedicate a blog to it. Now, Cormac has written a very lengthy blog on the topic and I am not going to repeat it, I will simply point you to the math he has provided around it. I do however want to provide a quick summary:

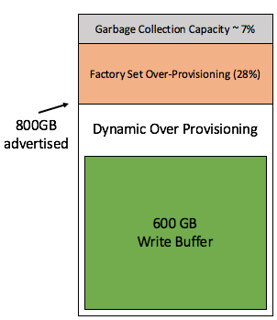

When you have an all-flash VSAN configuration the current write buffer limit is 600GB. (only for all-flash) As a result many seem to think that when a 800GB device is being used for the write buffer that 200GB will go unused. This simply is not the case. We have a rule of thumb of 10% cache to capacity ratio. This rule of thumb has been developed with both performance and endurance in mind as described by Cormac in the link above. The 200GB that is above the 600GB limit of the write buffer is actively used by the flash device for endurance. Note that an SSD usually is over-provisioned by default, most of them have extra cells for endurance and write performance. Which makes the experience more predictable and at the same time more reliable, the same applies in this case with the Virtual SAN write buffer.

The image at the top right side shows how this works. This SSD has 800GB as advertised capacity. The “write buffer” is limited to 600GB however the white space is considered “dynamic over provisioning” capacity as it will be actively used by the SSD automatically (SSDs do this by default). Then there is an additional x % of over provisioning by default on all SSDs, which in the example is 28% (typical for enterprise grade) and even after that there usually is an extra 7% for garbage collection and other SSD internals. If you want to know more about why this is and how this works, Seagate has a nice blog.

So lets recap, as a consumer/admin the 600GB write buffer limit should not be a concern. Although the write buffer is limited in terms of buffer capacity, the flash cells will not go unused and the rule of thumb as such remains unchanged: 10% cache to capacity ratio. Lets hope this puts this (non) discussion finally to rest.