I get this question on a regular basis and it has been explained many many times, I figured I would dedicate a blog to it. Now, Cormac has written a very lengthy blog on the topic and I am not going to repeat it, I will simply point you to the math he has provided around it. I do however want to provide a quick summary:

I get this question on a regular basis and it has been explained many many times, I figured I would dedicate a blog to it. Now, Cormac has written a very lengthy blog on the topic and I am not going to repeat it, I will simply point you to the math he has provided around it. I do however want to provide a quick summary:

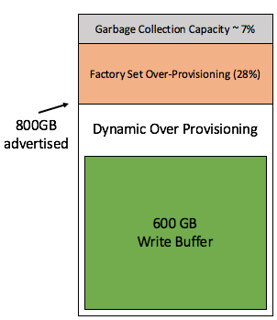

When you have an all-flash VSAN configuration the current write buffer limit is 600GB. (only for all-flash) As a result many seem to think that when a 800GB device is being used for the write buffer that 200GB will go unused. This simply is not the case. We have a rule of thumb of 10% cache to capacity ratio. This rule of thumb has been developed with both performance and endurance in mind as described by Cormac in the link above. The 200GB that is above the 600GB limit of the write buffer is actively used by the flash device for endurance. Note that an SSD usually is over-provisioned by default, most of them have extra cells for endurance and write performance. Which makes the experience more predictable and at the same time more reliable, the same applies in this case with the Virtual SAN write buffer.

The image at the top right side shows how this works. This SSD has 800GB as advertised capacity. The “write buffer” is limited to 600GB however the white space is considered “dynamic over provisioning” capacity as it will be actively used by the SSD automatically (SSDs do this by default). Then there is an additional x % of over provisioning by default on all SSDs, which in the example is 28% (typical for enterprise grade) and even after that there usually is an extra 7% for garbage collection and other SSD internals. If you want to know more about why this is and how this works, Seagate has a nice blog.

So lets recap, as a consumer/admin the 600GB write buffer limit should not be a concern. Although the write buffer is limited in terms of buffer capacity, the flash cells will not go unused and the rule of thumb as such remains unchanged: 10% cache to capacity ratio. Lets hope this puts this (non) discussion finally to rest.

Hmm, if your SSD is a 2T drive, you are pushing the 28% default enterprise grade overprovisioning to a largish 260%, and then your expected life will be out of synch with the capacity tier, which is the reason for the 10% tier cache size. So I would say is is not a non discussion. 🙂

Can’t say I have seen customers using 2TB drives for caching to be honest. Especially not as that would mean a 20TB disk group to stick to the ratio.

Great work once again – I keep coming back and I keep loving these blog posts! I use almost everyone. If I dont use them then I pass my newly found knowledge onto others!

Another thing to add is that its 600GB per disk group worth of write buffer. Considering you can have 5 disk groups in a host, that is 3TB of write buffer in a host, or 192TB in a VSAN cluster. Rather than deploy 2TB cache devices, breaking that up into 3 disk groups would increase performance quite a bit and break up fault domains internally.

If you need more write buffer than this I would point out that you likely need to be looking at the performance of your existing drives to increase the speed at which cache can de-stage. I have never seen anyone deploy a traditional storage array with cache at this scale/size.

But that would also have an impact on the dedupe and compression ratio as this is carried out at the disk group level

The 600GB write buffer per disk group is plenty – like John N said, that can scale up to 3TB/host. What I was stating on my blog is that above 600GB, VSAN doesn’t recognize the flash for a write buffer, but the SSD itself does still recognize (and uses) the extra flash. Micron optimized an SSD, the 600GB M510DC, to accommodate write buffer and provide the proper endurance.

indeed a great job by Micron…

Hi Duncan,

Really useful stuff.

So at the end of the day the 600 GB Write Buffer limit for all-flash is irrelevant as the tier is for endurance more than performance – is that correct?

So if you had 5×1.92TB SDDs in the capacity tier (9.6 TB in total) and you were using de-dupe and compression (expected 2:1 saving) you would need an SSD Write cache tier of 10% of 19.2 TB (i.e. 1.92 TB) – is that correct?

If this is purely about endurance then I assume there would not be any reason to split this into multiple disk groups each with a smaller cache SSD so you could increase the write buffer to 1,200 GB.

VxRail does no follow this rule as a 19.2 TB capacity tier only has an 800GB cache which is way short even before you take into account de-duplication and compression – any reason for this?

Also what is the reason behind the 600 GB limit and will it change in a future release?

Many thanks

Mark

Yes indeed that is correct, it is more endurance than performance, or better said: endurance and predictable performance.

With regards to your sizing question, yes that is true. However you can also look at the endurance factor, Cormac described that in a post I linked in the article above. Some 800GB SSDs have 2x or 3x the endurance than larger SSDs, so that needs to be taken in to account as well.

Also, when it comes to VxRail, I don’t know what their sizing looks like.

In terms of roadmap, yes we are looking in to increasing the limit. The limit we have today was all around finding a balance in terms of buffer size, memory usage and predictable performance / experience. This may change in the future indeed based on new learnings (and ever changing hardware specs)