At the Italian VMUG I was part of the “Expert panel” at the end of the event. One of the questions was about innovation in the world of IT: what should come next? I knew immediately what I was going to answer: backup/recovery >> data copy management. My main reason is that we haven’t seen much innovation in this space.

And yes, before some of my community friends go nuts and point at Veeam and some of the great stuff they have introduced over the last 10 years: I am talking more broadly here. Many of my customers are still using the same backup solution they used 10-15 years ago. Yes, it is probably a different version, but all the same concepts apply. Maybe tapes have been replaced by virtual tape libraries stored on a disk system somewhere, but that is about it. The world of backup/recovery hasn’t really evolved.

Over the last few years, though, we’ve been seeing a shift in the industry. This shift started with companies like Veeam, continued with companies like Actifio, and is now being accelerated by companies like Cohesity and Rubrik. What is different about what these companies offer compared with the more traditional backup solutions? Well, the difference is that all of them are more than backup solutions; they don’t focus on a single use case. They “simply” took a step back and looked at what kind of solutions are using your data today, who is using it, how, and of course what for. On top of that, where the data is stored is also a critical part of the puzzle.

In my mind Rubrik and Cohesity are leading the pack in this new wave: they’ve developed a solution that is a convergence of different products (Backup / Scale-out storage / Analytics / etc). I used “convergence” on purpose, as this is what it is to me: “converged data (copy) management”. Although not all use cases may have reached their full potential yet, the vision is pretty clear, and multiple layers have already converged, even if we only consider backup and scale-out storage.

I say “pretty clear” because the various startups have taken different messaging approaches. This became obvious during the last Storage Field Day, where Cohesity presented, which was a different story from the one Rubrik told during Virtualization Field Day, for instance. Rubrik typically leads with data protection and management, whereas Cohesity’s messaging appears to be more about being a “secondary storage platform”. In Cohesity’s case this led to discussions (during SFD) about what secondary storage is, how you get data onto the platform, and finally what you can do with it.

To me (and the folks at these startups may have completely different ideas about this), there are a couple of use cases that stand out for a converged data management platform, use cases I would expect to be the first targets. I will explain why in a second.

- Backup and Recovery (long retention capabilities)

- Disaster Recovery using point-in-time snapshots/replication (relatively short retention capabilities and low RPO)

Why are these the two use cases to go after first? Well, it is the easiest way to suck data into your system and make your system sticky. It is also the market where innovation is needed. On top of that, you need to have the data in your system before you can do anything with it, and before some of the other use cases start to make sense, like “data analytics”, creating clones for “test / dev” purposes, or spinning up DR instances, whether in your remote site or somewhere in the cloud.

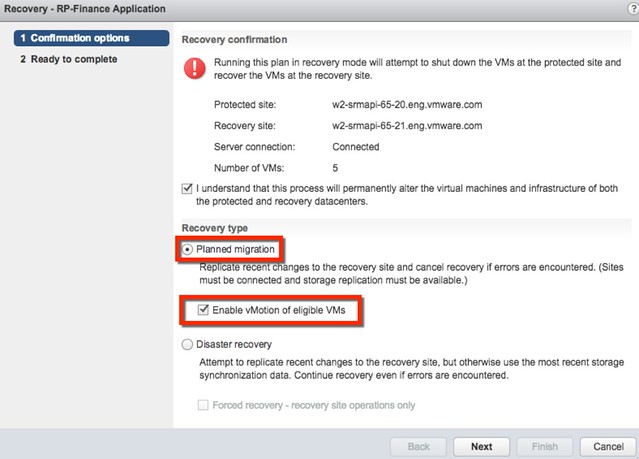

The first use case (backup and recovery) is something all of them are targeting; the second one, not so much at this point. In my opinion that is a shame, as it could be very compelling for customers to have these two data availability concepts combined, especially when some form of integration with an orchestration layer can be included (think Site Recovery Manager here) and protection of workloads is enabled through policy. Policy in this case allows you to specify an SLA for data recovery in the form of recovery point, recovery time and retention. Then, when needed, you as a customer have the choice of how you want to make your data available again: VM fail-over, VM recovery, live/instant recovery, file-granular or application/database object-level recovery, and so on. Not just that: from that point on you should be able to use your data for other use cases, the ones I mentioned earlier like analytics, test/dev copies, etc.
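To make the policy idea a bit more concrete, here is a minimal Python sketch of what such an SLA policy could capture. The `ProtectionPolicy` class, its methods and the two example policies are hypothetical and not taken from any vendor’s product; they only show how recovery point, recovery time and retention could be expressed and checked against a snapshot schedule.

```python
from dataclasses import dataclass
from datetime import timedelta


@dataclass(frozen=True)
class ProtectionPolicy:
    """Hypothetical SLA policy for illustration, not any vendor's actual API."""
    name: str
    rpo: timedelta        # recovery point: maximum tolerated data loss
    rto: timedelta        # recovery time: maximum tolerated time to restore
    retention: timedelta  # how long each point-in-time copy is kept

    def meets_rpo(self, snapshot_interval: timedelta) -> bool:
        # Copies must be taken at least as often as the RPO allows.
        return snapshot_interval <= self.rpo

    def meets_rto(self, estimated_restore: timedelta) -> bool:
        # The chosen recovery method (fail-over, instant recovery, ...)
        # must bring the workload back within the RTO.
        return estimated_restore <= self.rto

    def copies_retained(self, snapshot_interval: timedelta) -> int:
        # Number of point-in-time copies kept at steady state.
        return self.retention // snapshot_interval


# Two example policies mirroring the two use cases listed earlier:
# long retention for backup, low RPO and short retention for DR.
backup = ProtectionPolicy("backup", rpo=timedelta(hours=24),
                          rto=timedelta(hours=8), retention=timedelta(days=365))
dr = ProtectionPolicy("dr", rpo=timedelta(minutes=15),
                      rto=timedelta(minutes=30), retention=timedelta(days=2))

print(backup.meets_rpo(timedelta(hours=24)))      # True
print(dr.meets_rpo(timedelta(hours=1)))           # False
print(dr.meets_rto(timedelta(minutes=10)))        # True
print(dr.copies_retained(timedelta(minutes=15)))  # 192
```

Expressing the SLA this way states the desired outcome and leaves the mechanism (backup, snapshots or replication) open; how a given platform maps such a policy onto its own schedules is an assumption this sketch does not try to answer.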

We aren’t there yet, or rather we are far from there, but I do feel this is where we are headed… and some are closing in faster than others. I can’t wait for all of this to materialize, for us to take those next steps, and to see what new use cases become possible on converged data management platforms.