VMware Virtual SAN, or I should say VMware vSAN, has been around since August 2013. Back then it was indeed called Virtual SAN; today it is officially known as vSAN, which is what most people called it anyway. As this article keeps popping up in Google search results, I figured I would rewrite it and provide a better, more generic introduction to vSAN that is up to date and covers all that VMware vSAN is about, up to the current version at the time of writing: VMware vSAN 6.6.

VMware vSAN is a software-based distributed storage solution. Some will refer to it as hyper-converged, others will call it software-defined storage, and some even referred to it as hypervisor-converged at some point. The reason for this is simple: VMware vSAN is fully integrated with VMware vSphere. Those of you reading this who are vSphere administrators will have no problem configuring vSAN. If you know how to enable HA and DRS, then you know how to configure vSAN. Of course you will need a vSAN network, which you create by adding a VMkernel interface and enabling vSAN traffic on it. vSAN works with L2 and L3 networks, and as of vSAN 6.6 it no longer requires multicast to be enabled on the network. (If you want to know what changed with vSAN 6.6, read this article.)

Before we get a bit more into the weeds, what are the benefits of a solution like vSAN? What are the key selling points?

- Software defined – Use industry standard hardware, as long as it is on the HCL you are good to go!

- Flexible – Scale as needed and when needed. Just add more disks or add more hosts, yes both scale-up and scale-out are possible.

- Simplicity – Ridiculously easy to manage! Ever tried implementing or managing some of the storage solutions out there? If you did, you know what I am getting at.

- Automated – Per virtual machine and per virtual disk policy based management. Yes, even VMDK level granularity. No more policies defined on a per LUN/Datastore level, but at the level where you need it!

- Hyper-Converged – It allows you to create dense / building block style solutions!

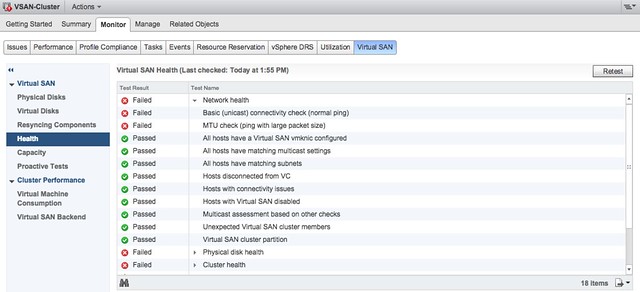

To me, “simplicity” is the key reason customers buy vSAN. Not just simplicity in configuring or installing, but even more so simplicity in management. Features like the vSAN Health Check provide a lot of value to the admin. At a glance you can see the status of your vSAN: is it healthy or not? If not, what is wrong?

Okay, that sounds great, right? But where does it fit in? What are the use cases for vSAN, and how are our 7000+ customers using it today?

- Production / Business Critical Workloads
  - Exchange, Oracle, SQL, basically anything… This is what the majority of customers use vSAN for.

- Management Clusters
  - Isolate management workloads completely and remove the dependency on your storage systems being available. Even when your enterprise storage system is down, you still have access to your management tools.

- DMZ
  - Where NSX helps isolate a DMZ from the rest of the world from a networking/security point of view, vSAN can do the same from a storage point of view. Create a separate cluster, avoid having your production storage go down during a denial-of-service attack, and avoid complex isolated SAN segments!

- Virtual desktops
  - Scale-out model: using predictable (performance etc.), repeatable infrastructure blocks lowers costs and simplifies operations. Note that vSAN is included with Horizon Advanced and Enterprise!

- Test & Dev
  - Avoids the acquisition of expensive storage (lower TCO), fast time to provision, easy to scale out and up when required!

- Big Data
  - Scale-out model with high-bandwidth capabilities; Hadoop workloads are not uncommon on vSAN!

- Disaster recovery target
  - Cheap DR solution, enabled through a feature like vSphere Replication, which allows you to replicate to any storage platform. Other options are of course VAIO-based replication mechanisms like Dell EMC RecoverPoint.

Yes, that is a long list of use cases, and I guess it is fair to say that vSAN fits everywhere and anywhere! Now, let's get a bit more technical, just a bit, as this is an introduction. For those who want to know more about specific features and settings, I have hundreds of vSAN articles on my blog. There is also a vSAN book available, and then there's of course the long list of articles by the likes of William Lam and Cormac Hogan.

When vSAN is enabled, a single shared datastore is presented to all hosts that are part of the vSAN-enabled cluster. Typically all hosts contribute performance (SSD) and capacity (magnetic disks or flash) to this shared datastore. This means that when your cluster grows from a compute perspective, your datastore will typically grow with it. (This is not a requirement; there can be hosts in the cluster that just consume the datastore!) Note that there are some requirements for hosts that want to contribute storage: each host requires at least one flash device for caching and one capacity device. From a clustering perspective, vSAN supports the same limit as vSphere: 64 hosts in a single cluster. The exception is a stretched cluster, where the limit is 31 hosts (15 per site, plus the witness host).
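The host and cluster rules in the paragraph above can be expressed as a small validation sketch. This is a hypothetical Python helper, not a VMware API: the `Host` class, the `validate_cluster` function and their field names are made up for illustration, the limits are the vSAN 6.6 numbers quoted above, and the stretched-cluster witness host is not modeled.

```python
from collections import Counter
from dataclasses import dataclass

# Limits quoted in the text for vSAN 6.6. The stretched-cluster witness host
# is deliberately not modeled in this sketch.
MAX_HOSTS = 64
MAX_HOSTS_PER_SITE = 15


@dataclass
class Host:
    name: str
    cache_devices: int = 0     # flash devices claimed for the cache tier
    capacity_devices: int = 0  # flash or magnetic devices for the capacity tier
    site: str = "default"      # only meaningful for stretched clusters


def contributes_storage(host: Host) -> bool:
    """A host with no claimed devices simply consumes the datastore."""
    return host.cache_devices > 0 or host.capacity_devices > 0


def validate_cluster(hosts: list[Host], stretched: bool = False) -> list[str]:
    """Return human-readable problems; an empty list means no rule was violated."""
    problems = []
    for h in hosts:
        # Contributing hosts need at least one cache and one capacity device.
        if contributes_storage(h) and (h.cache_devices < 1 or h.capacity_devices < 1):
            problems.append(f"{h.name}: needs at least 1 cache and 1 capacity device")
    if stretched:
        per_site = Counter(h.site for h in hosts)
        if len(per_site) != 2:
            problems.append(f"stretched cluster needs 2 data sites, found {len(per_site)}")
        for site, count in sorted(per_site.items()):
            if count > MAX_HOSTS_PER_SITE:
                problems.append(f"site {site}: {count} hosts exceeds {MAX_HOSTS_PER_SITE}")
    elif len(hosts) > MAX_HOSTS:
        problems.append(f"cluster: {len(hosts)} hosts exceeds {MAX_HOSTS}")
    return problems
```

For example, a three-host cluster in which `esx03` claims no disks passes the check, because that host simply consumes the datastore.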

As can be expected from any recent storage system, vSAN relies heavily on flash for performance. Every write I/O goes to the flash cache first and is eventually destaged to the capacity tier. vSAN supports a wide range of flash devices, the broadest support in the industry, from SATA SSDs to 3D XPoint NVMe-based devices. This goes for both the caching and the capacity tier. Note that for the capacity tier, vSAN of course also supports regular spinning disks, ranging from NL-SAS to SAS and from 7200 RPM to 15k RPM. Just check the vSAN Ready Node HCL or the vSAN Component HCL for what is supported and what is not.

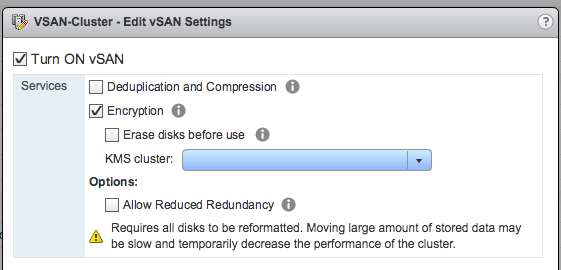

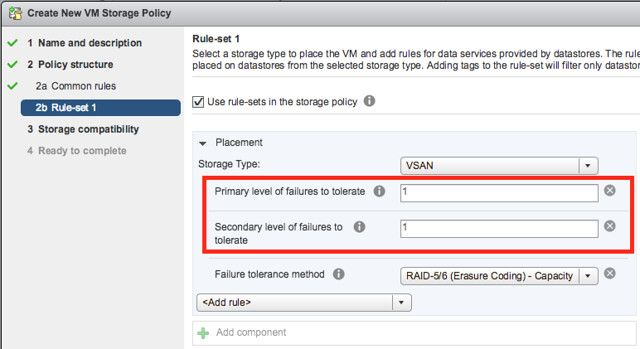
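To illustrate the write path described above (writes land in the flash cache first and are destaged to the capacity tier later), here is a toy Python model. It is a minimal sketch, assuming a fixed-size cache that destages its oldest blocks once it is full; vSAN's actual destaging heuristics, and the read cache that hybrid configurations also keep on the cache device, are not modeled, and `WriteBackTier` is a hypothetical name.

```python
from collections import OrderedDict
from typing import Optional


class WriteBackTier:
    """Toy two-tier model: writes land in a flash cache, then get destaged."""

    def __init__(self, cache_blocks: int):
        self.cache_blocks = cache_blocks
        # block number -> data, ordered from least to most recently written
        self.cache: OrderedDict[int, bytes] = OrderedDict()
        self.capacity_tier: dict[int, bytes] = {}

    def write(self, block: int, data: bytes) -> None:
        # Every write is absorbed by the cache first.
        self.cache[block] = data
        self.cache.move_to_end(block)
        # Assumption: destage the oldest blocks once the cache is full.
        overflow = len(self.cache) - self.cache_blocks
        if overflow > 0:
            self.destage(overflow)

    def destage(self, count: Optional[int] = None) -> None:
        """Move the `count` oldest cached blocks (default: all) to capacity."""
        if count is None:
            count = len(self.cache)
        for _ in range(min(count, len(self.cache))):
            block, data = self.cache.popitem(last=False)
            self.capacity_tier[block] = data

    def read(self, block: int) -> bytes:
        # Newest data wins: check the cache before the capacity tier.
        if block in self.cache:
            return self.cache[block]
        return self.capacity_tier[block]
```

The point of the model is that the guest only ever waits for the fast cache write; moving data to the slower capacity devices happens afterwards.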

As mentioned, you can set policies on a per virtual machine or even per virtual disk level. These policies define availability and performance aspects of your workloads, but they also allow you to specify, for instance, whether checksumming needs to be enabled. There are two key features that are not policy driven at this point: “Deduplication and Compression” and Encryption. Both of these are enabled at the cluster level. But let's get back to policy-based management. Before deploying your first VMs, you will typically create one or more policies. In a policy you define what the characteristics of the workload should be; for instance, as shown in the example below, how many failures the VM should be able to tolerate. In the example below, both the “primary” and “secondary” levels of failures to tolerate are set to 1. In this case that means the VM is stretched across 2 locations and, as the “Failure Tolerance Method” is also specified, protected by RAID-5 within each site.

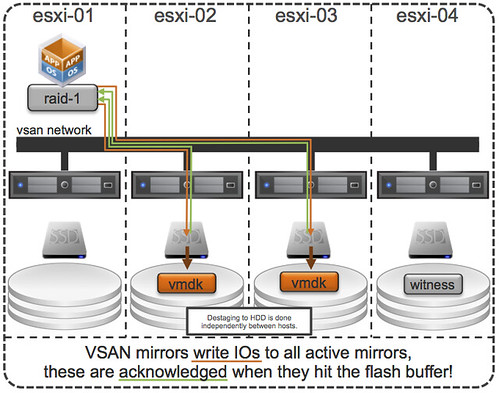
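As a rough worked example of what such a policy costs in capacity, the sketch below estimates the raw capacity a VMDK consumes. It assumes the usual multipliers: RAID-1 keeps FTT+1 full copies, RAID-5 is 3 data + 1 parity (about 1.33x), RAID-6 is 4 data + 2 parity (1.5x), and a stretched cluster with a primary level of 1 keeps a full copy in each site. Keep in mind that RAID-5/6 requires all-flash and at least 4 (RAID-5) or 6 (RAID-6) hosts, per site in a stretched cluster. The function names are hypothetical, and witness components, metadata, thin provisioning and deduplication/compression are ignored.

```python
from fractions import Fraction


def local_overhead(ftt: int, method: str) -> Fraction:
    """Raw-to-usable multiplier within one site for a given FTT and method."""
    if method == "RAID-1":
        return Fraction(ftt + 1)   # ftt + 1 full mirrors
    if method == "RAID-5/6":
        if ftt == 1:
            return Fraction(4, 3)  # RAID-5: 3 data + 1 parity
        if ftt == 2:
            return Fraction(3, 2)  # RAID-6: 4 data + 2 parity
        raise ValueError("RAID-5/6 supports only FTT=1 (RAID-5) or FTT=2 (RAID-6)")
    raise ValueError(f"unknown failure tolerance method: {method!r}")


def raw_capacity_gb(vmdk_gb: float, ftt: int, method: str, stretched: bool = False) -> float:
    """Approximate raw capacity consumed by one fully written VMDK.

    `ftt` is the in-site level (the "secondary" level in a stretched cluster).
    With `stretched=True`, a primary level of 1 keeps one full copy per site.
    Witness components, metadata and dedupe/compression are ignored.
    """
    site_copies = 2 if stretched else 1
    return float(Fraction(vmdk_gb) * local_overhead(ftt, method) * site_copies)
```

For the policy in the screenshot (primary and secondary level 1, RAID-5), `raw_capacity_gb(100, 1, "RAID-5/6", stretched=True)` comes to roughly 267 GB of raw capacity for a 100 GB disk, compared with 200 GB for a plain RAID-1 policy with one failure to tolerate in a regular cluster.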

The above is a rather complex example. A policy can be as simple as setting only “Failures to tolerate” to “1”, which in reality is what most people do. This means you will need 3 nodes at a minimum, and from a VM perspective you will have 2 copies of the data and 1 witness. vSAN is often referred to as a generic object-based storage platform, but what does that mean? A VM consists of objects (its home namespace, each virtual disk, and so on), and each copy of the data and the witness can be seen as components of such an object. Objects are placed and distributed across the cluster as specified in your policy. As such, vSAN does not require a local RAID set, just a bunch of local disks, which can be attached to a pass-through disk controller. Now, whether you define 1 host failure to tolerate or, for instance, 3 host failures to tolerate, vSAN will ensure enough replicas of your objects are created within the cluster. Is this awesome or what?
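The host-count arithmetic for a plain RAID-1 policy follows from every component needing its own host: FTT+1 data copies plus FTT witnesses means 2 × FTT + 1 hosts. The sketch below is a simplification: it assumes one witness per tolerated failure, whereas vSAN can place a different number of witnesses, for example for striped or very large objects. The function names are hypothetical.

```python
def raid1_layout(ftt: int) -> dict:
    """Simplified RAID-1 component layout for one object.

    Assumption: one witness per tolerated failure; vSAN may place a different
    number of witnesses (for example for striped or very large objects).
    """
    if not 0 <= ftt <= 3:
        raise ValueError("failures to tolerate must be between 0 and 3")
    return {
        "data_copies": ftt + 1,    # full replicas of the data
        "witnesses": ftt,          # quorum-only components, no data
        "min_hosts": 2 * ftt + 1,  # every component on a different host
    }


def can_place(ftt: int, hosts: int) -> bool:
    """True if a cluster of `hosts` nodes can satisfy the RAID-1 policy."""
    return hosts >= raid1_layout(ftt)["min_hosts"]
```

With this rule, `can_place(1, 3)` is `True`, while `can_place(2, 3)` is `False` because 2 failures to tolerate needs 5 hosts.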

Let's take a simple example to illustrate this, as I realize it is easy to get lost in all these technical terms. We have configured a policy that tolerates 1 host failure, and we create a new virtual disk. This results in vSAN creating 2 identical data components and a witness component. The witness is there in case something happens to your cluster, to help decide who takes control in case of a failure. Let that be clear: the witness is not a copy of your data component, it is just a quorum mechanism. Note that the number of hosts in your cluster can limit the number of “host failures to tolerate”. In other words, in a 3-node cluster you cannot create an object configured with 2 “host failures to tolerate”, as that would require vSAN to place components on 5 hosts at a minimum. (Cormac has a simple table for it here.) Difficult to visualize? Well, this is what it would look like at a high level for a virtual disk that tolerates 1 host failure:

First, let's point out that the VM, from a compute perspective, does not need to be aligned with the data components. To provide optimal performance, vSAN has an in-memory read cache that serves the most recently used blocks from memory. Blocks that are not in the memory cache need to be fetched from either of the two hosts that hold a data component. Note that a given block is always read from the same host; this is done to optimize the flash-based read cache. For writes it is straightforward: every write is synchronously pushed to the hosts that contain data components for that VM. Some may refer to this as replication or mirroring. With all this replication going on, are there networking requirements? At a minimum vSAN requires a dedicated 1Gbps NIC port for hybrid configurations and 10GbE for all-flash configurations. Needless to say, 10Gbps is definitely preferred with solutions like these, and you should always have an additional NIC port available for resiliency. There is no requirement from a virtual switch perspective: you can use either the Distributed Switch or the plain old vSwitch, and both will work fine. The Distributed Switch is recommended and comes included with the vSAN license.
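The read and write behavior described above, together with the witness acting as a tie-breaker, can be modeled with a toy FTT=1 object. This is a minimal Python sketch, not vSAN's implementation: it assumes reads are divided over the two data copies in fixed-size address ranges (the `chunk_blocks` parameter and the `Raid1Object` class are hypothetical), that every component carries one vote, and that an object stays accessible only while more than half of the votes and at least one data copy survive.

```python
class Raid1Object:
    """Toy model of one FTT=1 RAID-1 object: two data copies plus a witness."""

    def __init__(self, data_hosts, witness_host, chunk_blocks=256):
        self.data_hosts = list(data_hosts)  # hosts holding a full data copy
        self.witness_host = witness_host    # quorum only, stores no data
        self.chunk_blocks = chunk_blocks    # assumption: fixed-size read ranges
        self.stores = {host: {} for host in self.data_hosts}

    def read_host(self, block):
        """The same block always maps to the same data copy, so each host's
        read cache holds a distinct part of the address space."""
        chunk = block // self.chunk_blocks
        return self.data_hosts[chunk % len(self.data_hosts)]

    def write(self, block, data):
        # Synchronous mirroring: every data copy stores the write before it
        # is acknowledged back to the VM.
        for host in self.data_hosts:
            self.stores[host][block] = data
        return True

    def read(self, block):
        return self.stores[self.read_host(block)][block]

    def is_accessible(self, failed_hosts):
        """Simplified quorum: more than half of the votes (one per component)
        and at least one surviving data copy."""
        components = self.data_hosts + [self.witness_host]
        alive = [h for h in components if h not in failed_hosts]
        has_data = any(h in self.data_hosts for h in alive)
        return has_data and 2 * len(alive) > len(components)
```

With data copies on `esx01` and `esx02` and the witness on `esx03`, losing any single host keeps the object accessible. If `esx01` and `esx03` fail, the surviving copy on `esx02` holds only one of three votes; from its point of view this is indistinguishable from being partitioned off, so the object becomes inaccessible rather than risk a split-brain. That is exactly why the witness exists.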

So what else is there, well from a feature / functionality perspective there’s a lot. Let me list some of my favourite features:

- RAID-1 / RAID-5 / RAID-6

- Stretched Clustering

- All-Flash for all License options

- Deduplication and Compression

- vSAN Datastore Encryption

- iSCSI Targets (for physical machines)

That more or less covers the basics and is, I think, a decent introduction to vSAN. Hopefully it sparks your interest in this distributed storage platform that is deeply integrated with vSphere and enables convergence of compute and storage resources like never before. It provides virtual machine and virtual disk level granularity through policy-based management. It allows you to control availability, performance and security in a way I have never seen before: simple and efficient. And I haven't even spoken about features like the Health Check, Config Assist, Easy Install and any of the other cool features that are part of vSAN 6.6.

If there are any questions, find me on Twitter!

Hello Duncan,

Has VMware officially released vSphere 5.5?

Or typo? “beta of Virtual SAN with vSphere 5.5”

Not a typo, they just announced the public beta of Virtual SAN for vSphere 5.5. This means it is not available yet, but should be in the near future.

No. In the meantime you can try competitors' products. For example, Parallels has had a similar product in production for about a year:

http://www.parallels.com/products/pcs/cloud-storage/

Sounds promising…

But…

1- What if I don't have hardware RAID configured on the bunch of disks that are part of the VSAN datastore? Then who is going to save my life?

So if I got this correct:

Hardware disk failures will be handled by replicas and witness along with host failures?

Next… PURPOSE? Confused?

Why all these dual or triple writes of data blocks to replicas, engaging the witness, and keeping compute busier passing data through SSD to traditional disks? It increases read/write IO and turnaround time for data blocks, all just for the sake of “A SHARED DATASTORE”.

Is it worth bringing this concept into existence? I'm afraid I might not be getting this concept right.

Increasing complexity, IO and compute just to get a shared datastore.

No doubt I like the idea of a “hypervisor-based distributed platform”; maybe I need to give this some deeper thought.

Cheers

Parth

VSAN doesn’t require RAID, or better said… you don’t want to do RAID when you do VSAN as VSAN takes care of that by replicating objects when required. Hardware failures are handled by replicas and the witness indeed.

Not sure I am following your other comments to be honest.

I like that news. As I mentioned before, ever since I heard of Nutanix a year ago I thought VMware should buy them; little did I know that you guys had something similar brewing. Nutanix is still superior, but VSAN is a good step forward in the direction of compute and storage residing on the same host. The great part is that it is embedded in the hypervisor. Questions:

– Is it based on ZFS?

– Is it safe to assume that, like with Nutanix, the VM's VMDK storage will follow the VM wherever it is hosted to get the most storage performance?

– I use an enterprise HP 4GB SD card to store the hypervisor. Is there a problem if I use this feature? Is the SD card future-proof for installing the ESXi hypervisor?

1) not based on ZFS, fully in-house developed.

2) We don't move data around to follow the VM like Nutanix does. A 10GbE infrastructure will ensure low latency. Also, it is a distributed and resilient caching layer with multiple copies of the data, so there is no need to move stuff around, if you ask me.

3) I don’t see an issue with using that SD card.

Glad that this is finally built into vSphere and no longer just an appliance; it makes it much more convenient to use. The ability to have 8 servers in a cluster seems to make it a better option for businesses that may eventually need more than the 3-node cluster offered by the Storage Appliance.

8 nodes is just a testing limit; that constraint will be lifted at some point in the future.

VMware View in a box anyone? not for every use case obviously but perhaps for remote office.

Is the size of a single VM bound by the max size of the disks of a single host?

And where are the metadata of the virtual datastore saved? Thanks.

No the VM is not bound to the size of a disk or host.

Hey Duncan,

I build virtual server clusters using ASUS servers for my customers using VMware VSA. Will Virtual SAN replace the VSA? If so, will the HCL match the HCL for the VSA? What about upgrading from VSA to Virtual SAN; would it even be an upgrade? Will VMware keep the VSA as well, or will it be dropped? What are the performance differences between the two? Price differences? VSA is part of ESS Plus; will Virtual SAN be an extension of the VSA for customers wanting to scale past 3 hosts?

Any insight would be helpful.

Cheers!

Peter

There will be no direct upgrade path, it means move out and move in. It is a completely different product, which means that the HCL will also differ. For instance for now it will require a RAID controller that supports pass-through.

Hey Duncan – thanks for taking the time to respond to comments during vmworld!

Question related to VSAN vs VSA: if you've implemented that clunky product, what is its license/support path moving forward? Example: full support for VSA for X amount of time, but no SNS license upgrade path to VSAN on upgrading to 5.5 (when out of beta)? I understand there is no upgrade path for the software itself; I'm just checking whether my client will need to purchase a different virtual SAN solution moving forward.


Duncan,

As always, thanks for the useful info you provide! You are a credit to VMware. With that, we'll await the all-important ‘limitations and caveats’ article that needs to accompany this. There's a ton of questions floating around the community about some of the real-world implications of this technology.

Such as:

1. You mention “Every write I/O will go to SSD first, and eventually they will go to magnetic disks (SATA).”. Detail? When does this happen?

2. Obviously this brings RAID into question — Does this replace RAID? Many people will be very, VERY hesitant to go without RAID, so can this be used in conjunction with RAID? RAID still brings other performance benefits, not just redundancy of course…

3. Does this work or can this work with vFlash caching?

4. How ‘intelligently’ does this tier the data? Can vSphere differentiate between 7.2K/10K/15K disks? My only fear is that this may be an ‘all or nothing’ type approach to tiering, whereas many 3rd parties have been doing tiering for quite a while.

Overall, I'm really impressed with the announcements in 5.5. vSAN sounds very interesting. I'd be willing to bet that in 5 years you won't buy a server, hypervisor, and storage separately. Everything will basically be very Nutanix-esque.

With every single write going to SSD, will the HCL for SSD be fairly restrictive to models that have a higher number of write cycles? How will monitoring for SSD wear work?

I just wanted to say I appreciate your summary Duncan. It’s great to see the difference between the testing limit of 8 nodes and the goal of the product being beyond that – far beyond 8 nodes, I imagine.

We’re a little ways away from it, but I’m curious to see adoption in the SMB market and the layered play of ViPR + VSAN for larger implementations.

Cheers.

What is the new limit of LUNs per host in vSphere 5.5?

vSphere 5.5 configuration maximums: 256 LUNs

http://www.vmware.com/pdf/vsphere5/r55/vsphere-55-configuration-maximums.pdf

Duncan,

Tested it on real hardware. Three hosts, 1 SSD + DAS each.

I configured everything, but vSphere didn't use them for the datastore, even though it showed both hosts and disks as eligible.

Did you add the license key for VSAN?

If yes: go to your cluster -> Manage -> Virtual SAN -> Disk Management and try to “Claim disks” (the leftmost button below “Disk groups”) manually.

Thanks!

Yes, the issue was the missing license key. Now it works! Great post!

Just go lookup Nutanix.com where their core product does all of this out of the box (put more accurately, in their box…). VMware is late to this party and for the time being you’re better off getting your converged scale-out platform from folks that tailor their solutions around this concept, rather than offer it as another thing you COULD do with your virtual architecture.

I guess you just said it all: “in their box” << that is key here. VSAN is a software solution, not tied to hardware.

Anyway, there is a big market out there… And still much more to come.

VSAN looks to be very similar to Ceph. I'm excited to see how this offering grows over time. I'm also very interested to see how the big box storage companies respond to this over the next few years.

Hi Duncan, is vSAN a substitute for VSA? Does it work with local disks or shared storage? One more: what kind of datastore does it support? NFS, iSCSI, FC?

Thanks for sharing your knowledge.

1) no it is not a substitute for VSA (for now)

2) local disks only

3) it will surface up a shared datastore using a VMware proprietary protocol, so not NFS/iSCSI/FC

Hi, Any way to make this work without SSD? Thanks, Mickey

Yes, just fake an ssd using the “esxcli” commands described in various other posts on this blog.

Cool – thanks for the info!

Reading through the documentation centre at the moment and a lot of the diagrams are in a different language?

http://pubs.vmware.com/vsphere-55/index.jsp#com.vmware.vsa.doc/GUID-7FA669A2-400F-48E3-9800-3FF7EB0AE569.html

Different language?

Thank you Duncan for sharing your knowledge.

Thank you for the post Duncan. But could you please elaborate a bit on the role of the witness?

Did you take a look at the other articles I wrote on the topic of VSAN like for instance:

http://www.yellow-bricks.com/2013/09/18/vsan-handles-disk-host-failure/

I've been diving into this technology for a few days and I have many questions/remarks…

You need to create local disk groups with local disks. All these local disk groups will be combined to one datastore. (As far as I understand you can not create 1 disk group with disks from host 1 & host 2 or is this possible?)

Since the disk group is JBOD and not a raid set, how will the data be written to this disk group?

Will it start filling up first disk 1, then disk 2, disk 3 and so on? What happens if you delete a vm and create a new vm? This will lead to fragmentation?

Lets say you have 5 disks of 300GB and you have one VM of 930GB, then this vm will consume 4 disks.

There is no RAID configured here since the RAID is built at the virtual machine level.

So if there is one disk failure in this disk group, you will need to use the replicated VM on HOST2 in able to continue working. (Which means here that you need at least 8 disks for this vm only…)

It is mentioned that if one disk fails in the disk group, only the data residing on that disk needs to be rebuilt? So is vSAN intelligent enough to know which blocks it needs to replicate back from host 2 to host 1, or does the complete VM need to be replicated back?

Is the replication synchronous or asynchronous? Didn’t find any information about this

The maximum size of a VMDK is 2TB. Does the disk group need to be bigger, or can you place one VMDK across 2 smaller disk groups?

As hardware is so advanced nowadays, you can get predictive failures at the disk level in your storage box. You can replace the disk and you will have a performance impact during the rebuild, but you can continue working.

But when you have a predictive failure on a disk in vSAN, you can consider it a disk failure, as there is no RAID protection in your disk group… so you need to start working on the replicated VM on host 2.

As a start, I would recommend the other posts on the topic of VSAN, which can be found here: vmwa.re/vsan