As John Nicholson was traveling in and around New Zealand, I was asked by Pete if I could co-host the Virtually Speaking Podcast again. It is always entertaining to join; Pete is such a natural when it comes to these things. I euuh, well, I do my best to keep up with him :). Below you can find the latest episode on the topic of vSAN Customer Use Cases. It includes a lot of soundbites recorded at VMware World Wide Kick Off / Tech Summit, which is a VMware internal event for all Sales, Pre-Sales and Post-Sales field-facing people.

You can of course also subscribe on iTunes!

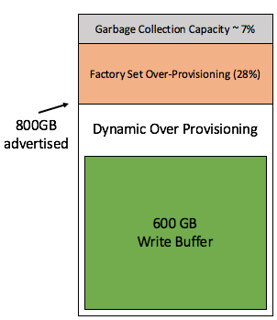

I got this question today and I thought I had already written something on the topic, but as I cannot find anything I figured I would write up something quick. The question was whether a disk controller for vSAN should have cache or not. It is a fair question, as many disk controllers these days come with 1GB, 2GB or 4GB of cache.

I was driving back home from Germany on the autobahn this week, thinking about the 5-6 conversations I have had over the past couple of weeks about performance tests for HCI systems. (Hence the pic on the right side being very appropriate ;-)) What stood out during these conversations is that many folks repeat the tests they once conducted on their legacy array and then compare the results 1:1 to their HCI system. Fairly often people even use a legacy tool like Atto disk benchmark. Atto is a great tool for testing the speed of the drive in your laptop, or maybe even a RAID configuration, but the name already more or less reveals its limitation: “disk benchmark”. It wasn’t designed to show the capabilities and strengths of a distributed / hyper-converged platform.

I get this question on a regular basis, and although it has been explained many, many times, I figured I would dedicate a blog to it. Now, Cormac has written a very lengthy blog on the topic and I am not going to repeat it, I will
