Last week the folks from Tintri reached out and asked me if I was interested in playing around with a lab they have running. They gave me a couple of hours of access to their Partner Lab. It had a couple of hosts, 4 different Tintri VMstore systems including their all-flash offering, and of course their management solution, Global Center. I have done a couple of posts on Tintri in the past, so if you want to know more about Tintri make sure to read those as well. (1, 2, 3, 4)

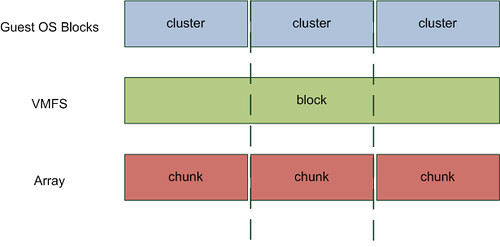

For those who have no clue whatsoever, Tintri is a storage company that sells “VM-Aware” storage. This basically means that all of the data services they offer can be enabled on a VM/VMDK level, and they give you visibility all the way down to that level as well. And not just for VMware, by the way: they support other hypervisors too. I’ve been having discussions with Tintri since 2011 and it is safe to say they have come a long way. Most of my engagements, however, were presentations and the occasional demo, so it was nice to actually go through the experience personally.

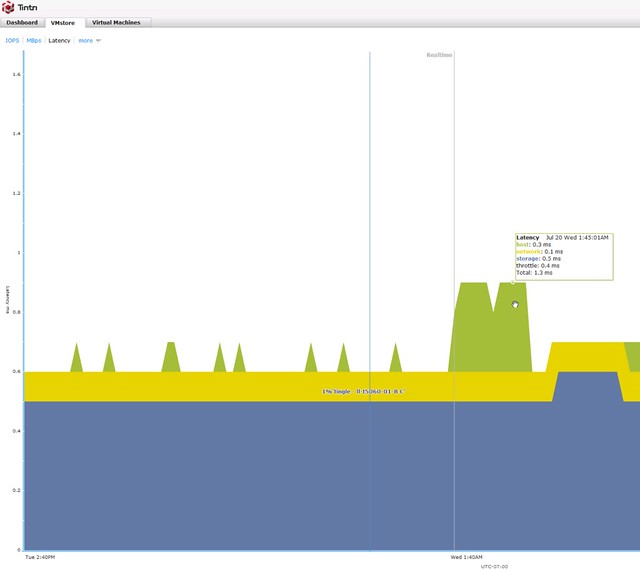

First of all, the storage system management interface. When you log in to one of the VMstores you are presented with all the info you would want to know: IOPS, bandwidth and latency. Latency is even split into network, host and storage latency, so if anything is misbehaving you will probably find out what and why relatively fast.
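To make the value of that latency split a bit more concrete, here is a minimal sketch of the kind of reasoning it enables. It is not Tintri's API or data model: the `LatencySample` type and `dominant_component` helper are hypothetical, and it assumes end-to-end latency can be treated as the sum of the network, host and storage components.

```python
from dataclasses import dataclass


@dataclass
class LatencySample:
    """Hypothetical per-VM latency breakdown in milliseconds (not a Tintri type)."""
    network_ms: float
    host_ms: float
    storage_ms: float

    @property
    def total_ms(self) -> float:
        # Assumption: end-to-end latency is the sum of the three components.
        return self.network_ms + self.host_ms + self.storage_ms


def dominant_component(sample: LatencySample) -> tuple[str, float]:
    """Return the component contributing the most latency and its share of the total."""
    parts = {
        "network": sample.network_ms,
        "host": sample.host_ms,
        "storage": sample.storage_ms,
    }
    name = max(parts, key=parts.get)
    share = parts[name] / sample.total_ms if sample.total_ms else 0.0
    return name, share


if __name__ == "__main__":
    s = LatencySample(network_ms=0.4, host_ms=3.1, storage_ms=0.9)
    name, share = dominant_component(s)
    print(f"{name}: {share:.0%} of {s.total_ms:.1f} ms")
```

With a breakdown like this, a slow VM whose latency is mostly on the host side points you away from the array and toward the hypervisor, which is exactly the "what and why" the interface helps you answer.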

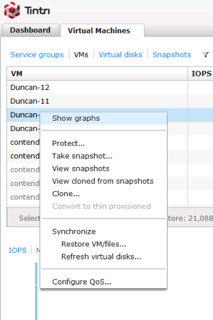

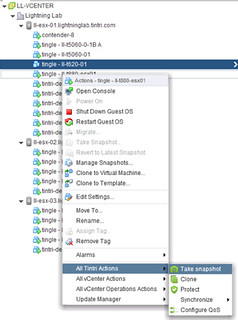

Not just that: if you look at the VMs running on your system from the array side, you can also take a storage snapshot, clone the VM, restore the VM, replicate it or set QoS for that VM. Very powerful, and by the way all of that is also available in vCenter through a plugin.

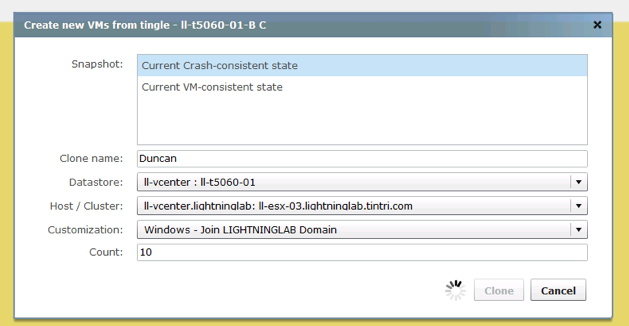

When you clone a VM you can also create many copies at once, pretty neat. If I ask for 10 clones with the name Duncan, I get 10 VMs called Duncan-01 through Duncan-10.
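The naming pattern from that bulk clone can be sketched as follows. This is only an illustration of the resulting names, not how Tintri implements it: `clone_names` is a hypothetical helper, and the behaviour beyond 99 clones (widening the zero padding) is my assumption, not something I tested in the lab.

```python
def clone_names(base: str, count: int) -> list[str]:
    """Generate names like Duncan-01 ... Duncan-10 for a batch of clones.

    Hypothetical helper: the index is zero-padded to at least two digits,
    and the padding widens if the count needs more digits (an assumption).
    """
    if count < 1:
        raise ValueError("count must be at least 1")
    width = max(2, len(str(count)))
    return [f"{base}-{i:0{width}d}" for i in range(1, count + 1)]


if __name__ == "__main__":
    print(clone_names("Duncan", 10))
```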

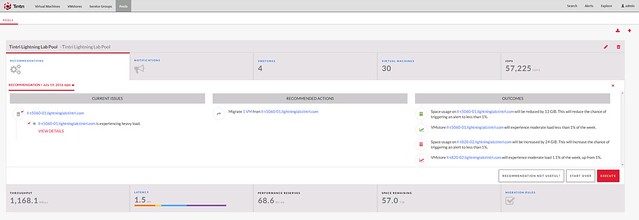

What I was most interested in was their central management solution, Tintri Global Center, as I had only seen it once in a demo. One thing I do have to point out: some have said that Tintri offers a scale-out solution, but the storage system itself is not a scale-out system. When Tintri refers to scale-out, they mean the ability to manage all storage systems through a single interface and the ability to group storage systems and load balance between them, which is done through Global Center and its “Pools” functionality. As I said in a previous post, Pools kind of feels like SDRS to me, and now that I have played with it a bit it definitely feels a lot like SDRS. While I was playing with the lab I received the following message.

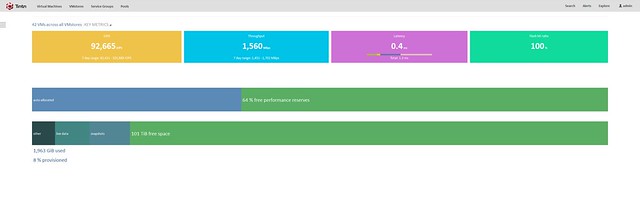

If you have used SDRS at some point in time and look at the screenshot, you know what I mean. Anyway, it is good functionality to have: pool different arrays and balance between them based on space and performance. Nothing wrong with that. But that is not the best thing about Global Center. Like I said, I like the simplicity of Tintri’s interfaces, and that also applies to Global Center. For instance, when you log in, this is the first thing you see:

I really like the simplicity. It gives a great overview of the state of the total environment, and at the same time it gives you the ability to dive deeper when needed. You can look at per-VMstore details and figure out where your capacity is going, for instance (snapshots, live data, etc.). You can also see basic trending of what your VMs are demanding from a storage performance and capacity point of view.

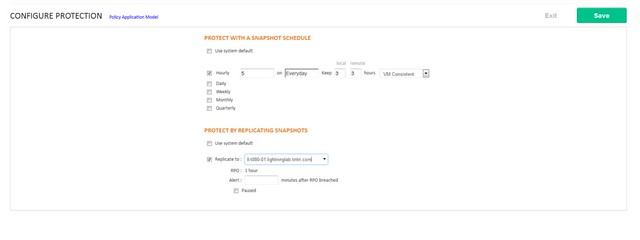

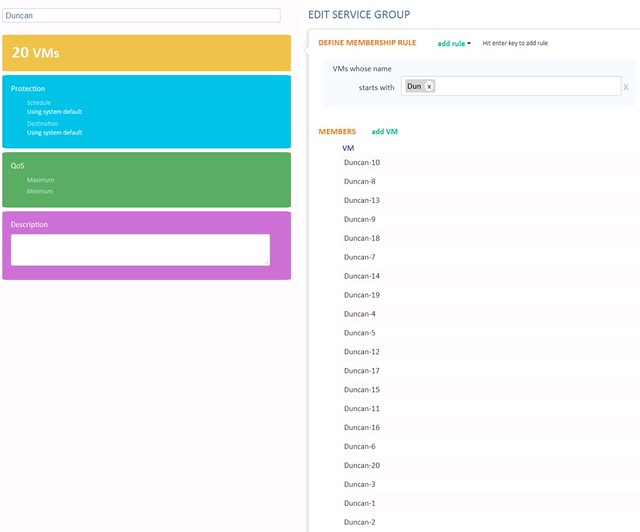

Having said all of that, there is one thing that bugs me. Yes, Tintri provides a VASA Provider, but this is the “old style” VASA Provider, which revolves around the datastore. If you look at VVols, it is all about the VM and which capabilities it needs. I would definitely welcome VVol support from Tintri. I can understand this is not a big priority for them, as they have “similar” functionality; it is just that for a VM-Aware storage system I would expect deep(er) integration from that perspective as well. But that is just me nitpicking I guess: as a VMware employee working for the BU that brought you VVols, it is safe to say I am biased when it comes to this.

Tintri does offer an alternative that makes it easy to manage groups of VMs, called Service Groups. It allows you to apply data services to a logical grouping, which is defined by a rule. I could for instance say that all VMs whose names start with “Dun” need to be snapshotted every 5 hours, and that this snapshot needs to be replicated, etc. Pretty powerful stuff, and fairly easy to use as well. Still, for consistency it would be nice to be able to do this through SPBM in vSphere, so that if I have other storage systems I can use the same mechanism to define services, all through the same interface.
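The rule-based idea behind Service Groups can be sketched roughly like this. It is a conceptual model only, not Tintri's configuration format or API: `ServiceGroupRule` and `members` are hypothetical names, and the case-sensitive prefix match is an assumption on my part.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class ServiceGroupRule:
    """Hypothetical model of a Service Group: a membership rule plus data services."""
    name_prefix: str
    snapshot_interval: timedelta
    replicate: bool

    def matches(self, vm_name: str) -> bool:
        # Membership is evaluated by rule, so a newly created "Dun..." VM joins
        # the group automatically. Assumption: the match is case-sensitive.
        return vm_name.startswith(self.name_prefix)

    def next_snapshot(self, last_snapshot: datetime) -> datetime:
        # The next snapshot is due one interval after the previous one.
        return last_snapshot + self.snapshot_interval


def members(rule: ServiceGroupRule, vm_names: list[str]) -> list[str]:
    """Return the VMs the rule currently applies to."""
    return [vm for vm in vm_names if rule.matches(vm)]


if __name__ == "__main__":
    rule = ServiceGroupRule("Dun", timedelta(hours=5), replicate=True)
    vms = ["Duncan-01", "Duncan-02", "web-01", "dunno"]
    print(members(rule, vms))
    print(rule.next_snapshot(datetime(2024, 1, 1, 8, 0)))
```

The nice part of defining membership by rule rather than by a static list is that policy follows the naming convention, which is also roughly what SPBM gives you with VM storage policies in vSphere.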

**Update:** I was just pointed to the fact that there is a VVol-capable VASA Provider, at least according to the VMware HCL. Unfortunately I have not seen the implementation or what is and is not exposed. I also just read the documentation, and VVols are indeed supported, with a caveat for some systems: Tintri OS 4.1 supports VMware vSphere APIs for Storage Awareness (VASA) 3.0 (VVOL 1.0). The Tintri vCenter Web Client Plugin is not required to run VVOLs on Tintri. VVOLs is not available for Tintri VMstore T540 or T445 systems. Also, the docs I’ve seen unfortunately don’t show the capabilities exposed through VVols.

Again, I really liked the simplicity of the solution. The overall user experience was great; taking a snapshot is dead simple. Replicating that snapshot? One click. Clone? One click. QoS? Three settings. Do I need to say more? Well done, Tintri. I am looking forward to what you will release next, and thanks for giving me the opportunity to play around in your lab. I hope I didn’t break anything.