This question keeps coming up lately: can I build a vSphere Metro Storage Cluster (vMSC) using Virtual SAN? (I guess the real question is “should you do it”.) It seems that Virtual SAN (VSAN) is getting more and more traction even though it is still in beta, and people are trying to come up with all sorts of interesting use cases. At VMworld various people asked during my sessions whether they could use VSAN to implement a vMSC solution, and over the last couple of weeks the question has kept coming up in emails as well.

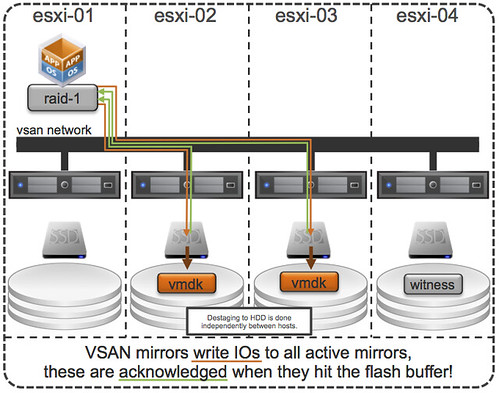

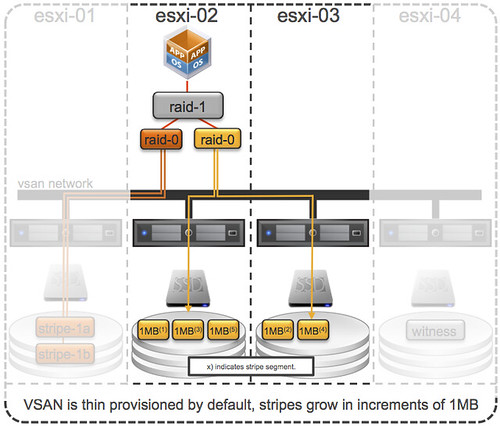

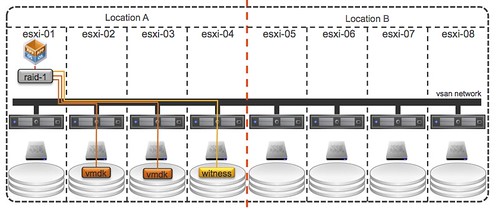

I guess if you look at what VSAN is and does, it makes sense for people to ask this question. It is a distributed storage solution with a synchronous distributed caching layer that allows for high resiliency. You can specify the number of copies required of your data and VSAN will take care of the magic for you; if a component of your cluster fails, VSAN can respond to it accordingly. This is what you would like to see I guess:

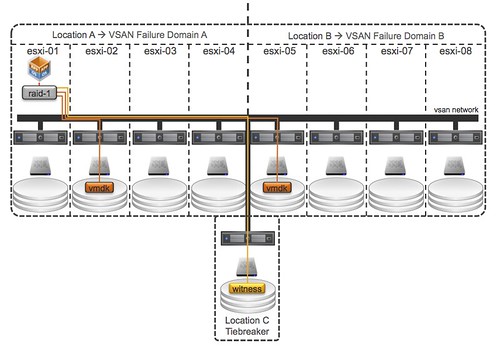

Now let it be clear: the above is what you would like to see in a stretched environment, but unfortunately it is not what VSAN can do in its current form. If you look at the following, it becomes clear why it might not be such a great idea to use VSAN for this use case at this point in time.

The problems here are:

- Object placement: You will want that second mirror copy to be in Location B, but you cannot control that today because you cannot define “failure domains” within VSAN at the moment.

- Witness placement: Essentially you want a third site that functions as a tiebreaker when there is a partition / isolation event, and today you cannot steer the witness to such a site.

- Support: No one has tested or certified VSAN over distance; in other words, it is not supported.
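To make the first two points a bit more tangible, here is a small toy model in Python. It is not VSAN's actual placement logic, just a sketch under a few assumptions: an object consists of two mirror copies plus one witness on three distinct hosts, it stays accessible only while a strict majority of those three components is reachable, and placement has no idea which site a host lives in. The host names and site labels are made up for the illustration.

```python
from itertools import permutations

# Hypothetical hosts and site labels, invented for this illustration.
TWO_SITES = {"esx01": "A", "esx02": "A", "esx03": "B", "esx04": "B"}
THREE_SITES = {**TWO_SITES, "witness01": "C"}


def survives(placement, failed_site, hosts):
    """True if a strict majority of the components is outside the failed site."""
    alive = sum(1 for host in placement if hosts[host] != failed_site)
    return alive * 2 > len(placement)


def count_surviving(hosts, site_failures):
    """Count site-unaware placements that survive every listed site failure.

    Each placement is (mirror 1, mirror 2, witness) on three distinct hosts.
    The failures in site_failures are considered one at a time, not together.
    Returns (surviving placements, total placements).
    """
    placements = list(permutations(hosts, 3))
    ok = [
        p for p in placements
        if all(survives(p, site, hosts) for site in site_failures)
    ]
    return len(ok), len(placements)


if __name__ == "__main__":
    # Loss of site A only: how many random placements keep the object available?
    print(count_surviving(TWO_SITES, ["A"]))
    # Loss of either site, with only two sites to place components in.
    print(count_surviving(TWO_SITES, ["A", "B"]))
    # One mirror per site plus a witness in a third site.
    ideal = ("esx01", "esx03", "witness01")
    print(all(survives(ideal, site, THREE_SITES) for site in "ABC"))
```

In this model only half of the possible placements (12 out of 24) survive the loss of site A, and with just two sites no placement at all survives the loss of either site, because three components can never have a majority in both locations. The hand-picked placement with one mirror per site and the witness in a third site does survive any single site failure, which is exactly the layout you cannot enforce without failure domains and control over witness placement.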

For now, the answer to the question “can I use Virtual SAN to build a vSphere Metro Storage Cluster?” is: No, it is not supported to span a VSAN cluster over distance. The feedback and requests from many of you have been heard loud and clear by our developers and PM team. At VMworld one of the developers already mentioned that he was intrigued by the use case and would be looking into it in the future. Of course, there was no mention of when this would happen, or even if it would ever happen.