I’ve been working on a whole bunch of VSAN diagrams… I’ve shared a couple already via Twitter and in various blog articles, but I liked the following two very much and figured I would share them with you as well. Hopefully they make sense to everyone. Also, if there are any other VSAN concepts you would like to see visualized, let me know and I will see what I can do.

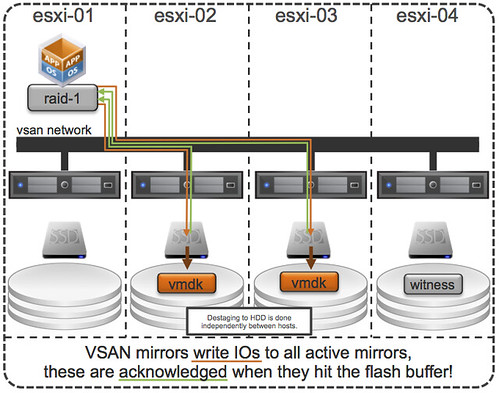

The first one shows how VSAN mirrors writes to two active mirror copies. Writes need to be acknowledged by all active copies, but note they are acknowledged as soon as they hit the flash buffer! De-staging from buffer to HDD is a completely independent process; even between the two hosts, it happens independently.
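To make the acknowledgement rule concrete, here is a minimal Python sketch of the behavior described above. It is a toy model, not VSAN code: the `MirrorCopy` class, the `mirrored_write` function, and the host names `host-a` and `host-b` are all hypothetical names made up for illustration.

```python
class MirrorCopy:
    """Toy model of one active mirror copy: a flash write buffer in front of HDD."""

    def __init__(self, host):
        self.host = host          # hypothetical host name
        self.flash_buffer = []    # writes land here first
        self.hdd = []             # writes reach HDD only after de-staging

    def buffer_write(self, block):
        self.flash_buffer.append(block)
        return True  # this copy acknowledges once the block is in flash

    def destage(self):
        # Runs per host, independently of the other mirror copy.
        self.hdd.extend(self.flash_buffer)
        self.flash_buffer.clear()


def mirrored_write(copies, block):
    """The write is acknowledged only when every active copy has buffered it."""
    acks = [copy.buffer_write(block) for copy in copies]
    return all(acks)


copies = [MirrorCopy("host-a"), MirrorCopy("host-b")]
acked = mirrored_write(copies, "block-A")

# host-a de-stages on its own schedule; host-b has not de-staged yet.
copies[0].destage()
print(acked, copies[0].hdd, copies[1].hdd, copies[1].flash_buffer)
```

The point of the sketch is that `acked` is already true before any block reaches HDD, and that one host de-staging says nothing about the state of the other.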

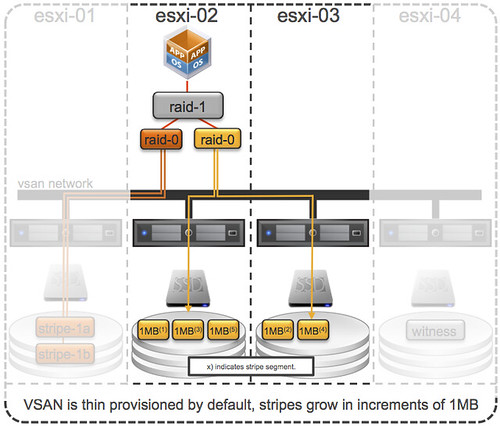

The second diagram is all about striping. When the stripe width is defined using VM Storage Policies, objects will grow in increments of 1MB. In other words: stripe segment 1 will go to esxi-02, stripe segment 2 will go to esxi-03, and so on.
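As a rough illustration of the 1MB increments, the sketch below maps a byte offset within an object to a stripe component. It assumes a stripe width of 2 and simple round-robin placement that wraps back to the first host, which is one reading of the "and so on" above; the function name `stripe_component` is hypothetical.

```python
STRIPE_SEGMENT_BYTES = 1024 * 1024  # 1MB stripe segment, as described in the post


def stripe_component(offset_bytes, stripe_width):
    """Return the index of the stripe component holding this offset.

    Assumes round-robin placement of 1MB segments across components.
    """
    segment = offset_bytes // STRIPE_SEGMENT_BYTES
    return segment % stripe_width


hosts = ["esxi-02", "esxi-03"]  # host names taken from the diagram description

# Placement of the first four 1MB segments of an object.
placement = [
    hosts[stripe_component(mb * STRIPE_SEGMENT_BYTES, len(hosts))]
    for mb in range(4)
]
print(placement)
```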

Just a little something I figured was nice to share on a Friday, some nice light pre-weekend/VMworld content 🙂

Duncan.

The default VSAN protection policy is “Protect all VMs by making sure a replica VM resides on another node”. Is this correct?

If so, does this not reduce the total pooled capacity of the available storage on the VSAN, the same “gotcha” we had on the VSA?

It is the same gotcha you have with every storage system when you require any level of resiliency I would say.

Thank you for the quick reply Duncan.

What edition of vSphere 5.5 is VSAN included in? Standard, Enterprise, or Enterprise Plus?

@IP Did you expect to be protected from thin air?!

Duncan,

you wrote: “but note they are acknowledged as soon as they hit the flash buffer!”.

Does this mean that they are acknowledged after they hit the bufferS of all mirrors or after they hit the buffer of ONE mirror?

Ack from all write buffers, to guarantee consistency.

I’m late to this party, but wait a sec:

“Writes need to be acknowledged by all active copies, but note they are acknowledged as soon as they hit the flash buffer! De-staging from buffer to HDD is a completely independent process, even between the two hosts this happens independently from each other.”

Writes are acked by SSD? I thought those were supposed to be ‘write through’, like vFRC: acked by disk but cached in SSD. If writes are acked by SSD (which doesn’t seem like programmatic rocket science, and would be of obvious benefit from a latency perspective, with N+x SSDs serving the redundancy requirement), then can it be said that a single 7200 RPM disk backed by an SSD (a la your El Reg counterpoint) can do 20,000ish IOPS, at least for bursts up to the % of the SSD allocated to write capacity? That would be the tell, right? Whether the SSD is acking writes? That would be huge, man. Can you confirm?

Writes are ACK’d, and 30% is how much of the flash disk is reserved for writes (this is mirrored between two systems). What you adjust is how much of the 70% of the disk used for reads can be hard reserved for a VMDK. In the 1.0 release you can’t change this mix (not that you would really want to). I have a deployment being racked right now with 2 x 1TB NL-SAS drives + a 400GB SSD in each host. This is going to yield 133GB of write cache per node, or (minus the mirroring penalty) ~65GB of sustained burst per node (in the case with 4 nodes, 266GB for the cluster).
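The split described in this comment can be worked through with a few lines of Python. This is only a sketch of the arithmetic: the function name `flash_split_gb` is hypothetical, and halving the write buffer for mirroring follows the reasoning in the comment rather than any documented formula. Taken literally, 30% of a 400GB SSD is 120GB; a one-third split would give roughly 133GB.

```python
def flash_split_gb(ssd_gb, write_percent=30):
    """Split a flash device into write-buffer and read-cache capacity (GB).

    write_percent=30 is the figure quoted in the thread for the 1.0 release.
    """
    write_gb = ssd_gb * write_percent / 100
    read_gb = ssd_gb - write_gb
    return write_gb, read_gb


write_gb, read_gb = flash_split_gb(400)

# Following the comment's reasoning: mirrored writes occupy buffer space on
# two hosts, so the unique burst capacity per node is about half.
unique_burst_gb = write_gb / 2

print(write_gb, read_gb, unique_burst_gb)
```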

That’s so huge, I don’t know what to say, and I can’t believe VMware doesn’t advertise this more, unless I’m missing something. Do you have 4k IOmeter stats and latency numbers at all? So 2 x 1TB SAS would give you what, 160-220 IOPS without SSD acceleration. Any IOmeter 70/30 read/write, 4k, whatever level random should be in the 5 digits, right? Is that what you’re seeing?

As I said, writes are acknowledged by SSD; they will be sitting there for a while and get destaged when VSAN thinks it makes sense.

I have 2 questions on VSAN.

First: can disks where ESXi is installed also be part of a VSAN disk group? For example, a host has 8 * 300 GB disks. Usually ESXi is installed as RAID 1 on 2 disks. So in this case, will the VSAN disk group consist of the remaining 6 disks, or can we use all 8 disks?

Second: how many datastores can we create on VSAN, and what is the maximum datastore size?

1) no, disks on which ESXi is installed cannot be part of the VSAN datastore. So if you do RAID1 for ESXi you lose 2 disks.

2) it is always just 1 datastore, and the max size is based on number of disks * disk size. It does not have the traditional VMFS limit.

I recommend reading my VSAN intro post!

Thanks for the reply, Duncan. It has cleared up a lot of doubts. Just one more question from a design point of view, straight from the virtualization trenches.

I have a cluster of eight HP DL380 G8 servers. Each server has an 8-disk configuration.

The server has a RAID controller which can run multiple RAID sets simultaneously.

2* 146 GB 15K in Raid 1 for ESXi Installation

5* 1 TB 7.2K for VSAN

1* 600 GB Solid State Hard Disk for VSAN

Do I need to create another RAID set here, let’s say RAID 5 on the 5 * 1 TB disks, or will VSAN discover the five 1 TB disks and the one solid state disk automatically?

Second, and the most important question: what will be the usable space of the datastore if we tolerate 2 host failures? (The raw space is 8 * 5 * 1 TB = 40 TB. The 8 SSDs are separate.)

RAID 1 and disks for ESXi? Normally we use an SD card these days. Is there a reason you’re not using embedded installs?

Usable space depends on the policies you apply to VMs. If you set the policy to tolerate no failures, then it’s the RAW capacity of the disks. If it’s 1 host, then it’s 50% of RAW; if it’s set to 2 hosts, then 33% of RAW, and so forth. You don’t do RAID 5 in the hosts (in fact RAID 5 with 1TB disks is a bad idea, but that’s another argument). It’s basically RAID 0, 10, 1 type designs. Ideally the disks are passed through RAW and not abstracted by a RAID controller, and all this logic is handled by VSAN and the policies you set. With VSAN you are not layering RAID like traditional VSA systems. Think more like IBM XIV RAID-X, or other scale-out mirror-based systems.
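The percentages above follow a simple pattern: tolerating N host failures means keeping N+1 copies, so usable space is roughly RAW divided by N+1. Here is a small Python sketch applied to the 40 TB example from the question. It assumes every VM uses the same policy and ignores witness components and any other overhead; the function name `usable_capacity_tb` is hypothetical.

```python
def usable_capacity_tb(raw_tb, failures_to_tolerate):
    """Approximate usable capacity when every object keeps N+1 mirror copies."""
    copies = failures_to_tolerate + 1
    return raw_tb / copies


raw_tb = 8 * 5 * 1  # 8 hosts * 5 disks * 1 TB, from the question above

usable = {ftt: usable_capacity_tb(raw_tb, ftt) for ftt in (0, 1, 2)}
for ftt, tb in usable.items():
    print(f"tolerate {ftt} host failure(s): ~{tb:.1f} TB usable")
```

Because the policy is set per VM, a real cluster will land somewhere between these figures, depending on the mix of policies in use.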

As mentioned, the intro blog post clears up these questions:

http://www.yellow-bricks.com/2013/08/26/introduction-vmware-vsphere-virtual-san/

🙂

Thanks, John, for the clarification. You mean to say usable space depends on the policies applied to each VM and not on a cluster-level VSAN setting. So we can apply different options for different VMs: for one VM we say 1 host failure, for another VM 2 host failures. Is that what you mean?

http://www.yellow-bricks.com/2013/08/26/introduction-vmware-vsphere-virtual-san/

That is exactly what VSAN does. For some VMs you can select “N+1”, for others you select “N+2”. It fully depends on your requirements etc.

Thanks, Duncan, for the reply. These clarifications are turning me into a VSAN expert.

When VSAN was introduced, many people including me dismissed it as just an SMB product not suitable for enterprise workloads, and thought it might go the way of VSA, which didn’t find much uptake even in the SMB market.

But now I am getting warmed up to VSAN and I think it has tremendous potential. Now we need to look into appropriate use cases for VSAN. One can be VDI, where virtual desktops are on the VSAN datastore and user data or user home directories are on SAN. Perhaps you can highlight additional use cases in your post.

When are the performance and scaling numbers to be released? I get the management and architecture, but when a certain workload requires certain storage performance capacity, we need real world numbers to best implement vSAN.

What are the disadvantages of increasing the stripe number?

I know it increases performance for destaging and missed cache reads, but why wouldn’t you just set your policy to 12 (the max?)

It uses more object chunks, for which technically there is a limit (1000?) per host.

There is a 3000-component limit indeed. So the higher you set this, the more likely you will hit this number, which lowers the max number of VMs you can run.

Also, it will make placement of the component more difficult as it will be mandatory to stripe.
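To get a feel for how stripe width eats into the component limit, here is a rough Python sketch. It counts only data components, assuming one full stripe set per mirror copy (stripe width times number of copies), and does not count witness components, so the real numbers will be higher. The function names are hypothetical, the 3000 figure is the per-host limit quoted above, and the 8-host cluster is borrowed from the earlier example in this thread.

```python
COMPONENT_LIMIT_PER_HOST = 3000  # limit quoted in the thread


def data_components(stripe_width, failures_to_tolerate):
    """Data components for one object: one stripe set per mirror copy.

    Witness components are NOT counted, so this is a lower bound.
    """
    return stripe_width * (failures_to_tolerate + 1)


def max_objects(hosts, stripe_width, failures_to_tolerate):
    """Upper bound on objects that fit in the cluster-wide component budget."""
    budget = hosts * COMPONENT_LIMIT_PER_HOST
    return budget // data_components(stripe_width, failures_to_tolerate)


for width in (1, 4, 12):
    print(width, data_components(width, 1), max_objects(8, width, 1))
```

Even in this optimistic count, going from a stripe width of 1 to 12 cuts the number of objects the cluster can hold by a factor of twelve.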