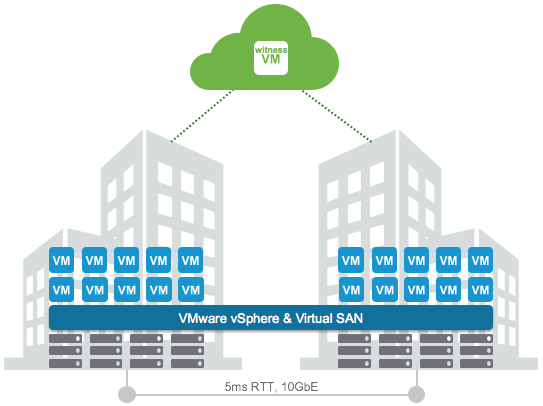

This week I was talking to a customer in Germany who had deployed a VSAN stretched cluster within a single building. Because everything was in one building (with extremely low latency) and they wanted a very simple operational model, they decided not to implement any VM/Host rules. By default, when a stretched cluster is deployed in VSAN (ROBO uses the same workflow), “site locality” is implemented for caching. This means that a VM will have its read cache on the host that holds its components in the same site as the VM.

This is great, of course, as it avoids incurring a latency hit for reads. In some cases, however, you may not want this behaviour, for instance in the situation above, where there is an extremely low latency connection between the different rooms in the same building. Also, because no VM/Host rules are implemented, a VM can freely roam around the cluster. When a VM moves between VSAN Fault Domains in this scenario, its cache will need to be rewarmed, as it only reads locally. Fortunately, you can easily disable this behaviour through the advanced setting DOMOwnerForceWarmCache:

```
[root@esxi-01:~] esxcfg-advcfg -g /VSAN/DOMOwnerForceWarmCache
Value of DOMOwnerForceWarmCache is 0
[root@esxi-01:~] esxcfg-advcfg -s 1 /VSAN/DOMOwnerForceWarmCache
Value of DOMOwnerForceWarmCache is 1
```
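`esxcfg-advcfg` changes the value only on the host where it runs, so for a cluster-wide change you would presumably repeat it on every host. The following is a minimal sketch of doing that from an admin workstation. It assumes SSH is enabled on the ESXi hosts and that you can log in as root; the function name `set_force_warm_cache` and the host names are hypothetical placeholders, and the `SSH` variable exists only so the loop can be dry-run (for example with `SSH=echo`).

```shell
#!/bin/sh
# Sketch: set /VSAN/DOMOwnerForceWarmCache on every host given on the command line.
# Assumptions (not from the original post): SSH is enabled on each ESXi host,
# root login works, and the host names used below are placeholders.

# Command used to reach each host; override with SSH=echo for a dry run.
SSH="${SSH:-ssh}"

# Usage: set_force_warm_cache VALUE HOST [HOST...]
set_force_warm_cache() {
    value="$1"
    shift
    for host in "$@"; do
        echo "== $host"
        # Same esxcfg-advcfg call as in the session above, executed remotely.
        # Stops at the first host where the remote command fails.
        "$SSH" "root@$host" esxcfg-advcfg -s "$value" /VSAN/DOMOwnerForceWarmCache || return 1
    done
}

# Example (hypothetical host names):
# set_force_warm_cache 1 esxi-01 esxi-02 esxi-03 esxi-04
```

Looping over hosts serially keeps the output readable and makes it obvious which host failed if one of them is unreachable.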

In a stretched cluster you will see that this setting is set to 0; set it to 1 to disable this site-local read caching behaviour. In a ROBO environment VM migrations are uncommon, but if they do happen on a regular basis you may also want to look into changing this setting.
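If you script this change, it can be handy to touch only hosts that are still at the default of 0. Below is a minimal sketch, meant to run in the ESXi shell of a single host. It reads the current value, parses the `Value of DOMOwnerForceWarmCache is N` line shown in the session above, and only calls `-s` when the value differs. The function names and the `ADVCFG` override are hypothetical, added only so the logic can be tried without a real host; the sketch also assumes `awk` is available in the shell.

```shell
#!/bin/sh
# Sketch: make sure DOMOwnerForceWarmCache has the wanted value on this host.
# Relies on the "Value of DOMOwnerForceWarmCache is N" output format.
# ADVCFG is a hypothetical override for testing; it defaults to the real tool.
ADVCFG="${ADVCFG:-esxcfg-advcfg}"
OPTION=/VSAN/DOMOwnerForceWarmCache

# Print the current value as a bare number (last word of the "Value of" line).
current_value() {
    "$ADVCFG" -g "$OPTION" | awk '/^Value of/ { print $NF }'
}

# Usage: ensure_force_warm_cache [VALUE]   (VALUE defaults to 1)
ensure_force_warm_cache() {
    want="${1:-1}"
    have="$(current_value)"
    # An empty result means the -g call failed or its output was not recognised.
    [ -n "$have" ] || return 1
    if [ "$have" = "$want" ]; then
        echo "unchanged: already $have"
    else
        "$ADVCFG" -s "$want" "$OPTION" >/dev/null || return 1
        echo "changed: $have -> $want"
    fi
}
```

Running `ensure_force_warm_cache 1` twice on the same host should report a change the first time and `unchanged` the second, which makes it safe to re-run against hosts you may already have updated.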