Last week I was asked whether vSAN 2-node Direct Connect still requires a crossover cable, or whether a regular CAT6 cable (CAT5e works as well) can be used. I knew the answer and figured this would be documented somewhere, but it doesn’t appear to be. To be honest, many websites that talk about the need for crossover cables are blatantly wrong. And yes, I also spotted some incorrect recommendations in VMware’s own documentation, so I requested those entries to be updated. Just to be clear: with vSAN 2-node Direct Connect, or vMotion, or any other service for that matter, you can use a regular CAT6 cable. Combined with 10GbE (or faster) NICs, this gives you great performance without the cost of a 10GbE (or faster) switch. I can’t recall having seen a NIC in the past 10 years that does not have Auto MDI/MDI-X implemented, even though it was an optional feature in the 1000BASE-T standard. In other words, there’s no need to buy a crossover cable, or make one; just use a regular cable.
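To illustrate why Auto MDI/MDI-X makes the cable type irrelevant, here is a minimal sketch. It is a deliberately simplified model based on the two-pair 10BASE-T/100BASE-TX pin layout (transmit on pins 1/2, receive on pins 3/6 for MDI, the reverse for MDI-X). Gigabit and faster links use all four pairs, so treat this as an illustration of the idea only. The function names are made up for this example.

```python
# Simplified two-pair model: which pins a port transmits and receives on.
MDI = {"tx": (1, 2), "rx": (3, 6)}
MDIX = {"tx": (3, 6), "rx": (1, 2)}

# How each cable type maps a pin on one end to a pin on the other end.
STRAIGHT = {1: 1, 2: 2, 3: 3, 6: 6}
CROSSOVER = {1: 3, 2: 6, 3: 1, 6: 2}


def link_ok(a_mode, b_mode, cable):
    """A link works when each side's transmit pins land on the other side's receive pins."""
    a_to_b = tuple(cable[pin] for pin in a_mode["tx"]) == b_mode["rx"]
    b_to_a = tuple(cable[pin] for pin in b_mode["tx"]) == a_mode["rx"]
    return a_to_b and b_to_a


def link_ok_with_auto_mdix(fixed_mode, cable):
    """One end is fixed; the other end supports Auto MDI/MDI-X and may pick either mode."""
    return any(link_ok(fixed_mode, candidate, cable) for candidate in (MDI, MDIX))


if __name__ == "__main__":
    # Two fixed MDI ports (no auto-crossover) need a crossover cable.
    print("MDI<->MDI, straight cable: ", link_ok(MDI, MDI, STRAIGHT))
    print("MDI<->MDI, crossover cable:", link_ok(MDI, MDI, CROSSOVER))
    # With Auto MDI/MDI-X on one end, either cable works.
    print("Auto MDI-X, straight cable: ", link_ok_with_auto_mdix(MDI, STRAIGHT))
    print("Auto MDI-X, crossover cable:", link_ok_with_auto_mdix(MDI, CROSSOVER))
```

In this model the crossover cable only matters when both ports are locked to the same mode. As soon as one port can swap its pairs, a regular cable is enough.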

Hyper-Converged is here, but what is next?

Last week I was talking to a customer and they posed some interesting questions: what excites me in IT (why do I work for VMware), and what is next for hyper-converged? I thought they were interesting questions and very relevant. I am guessing many customers have that same question (what is next for hyper-converged, that is). They see this shiny thing out there called hyper-converged, but if they take those steps, where does the journey end? I truly believe that those who went the hyper-converged route simply took the first steps on an SDDC journey.

Hyper-converged, I think, is a term which was hyped and over-used, just like “cloud” a couple of years ago. Let’s break down what it truly is: hardware + software. Nothing really groundbreaking. It is different in terms of how it is delivered. Sure, it is a different architectural approach, as you utilize a software-based, server-side scale-out storage solution which sits within the hypervisor (or on top, for that matter). Still, that hypervisor is something you were already using (most likely), and I am sure that “hardware” isn’t new either. Then the storage aspect must be the big differentiator, right? Wrong. The fundamental difference, in my opinion, is how you manage the environment and the way it is delivered and supported. But does it really need to stop there, or is there more?

There definitely is much more if you ask me. That is one thing that has always surprised me. Many see hyper-converged as a complete solution; the reality is, though, that in many cases essential parts are missing: networking, security, automation/orchestration engines, logging/analytics engines, BC/DR (and orchestration of it), etc. These are many different aspects and components which seem to be overlooked. Just look at networking: even including a switch is not something you see too often, and what about the configuration of a switch, or overlay networks, firewalls and load balancers? None of it appears to be part of hyper-converged systems. Funny thing is, though, if you are going on a software-defined journey, if you want an enterprise-grade private cloud that allows you to scale in a secure but agile manner, these components are a requirement. You cannot go without them. You cannot extend your private cloud to the public cloud without any type of security in place, and one would assume that you would like to orchestrate everything from that same platform and have the same networking and security capabilities at your disposal in both private and public.

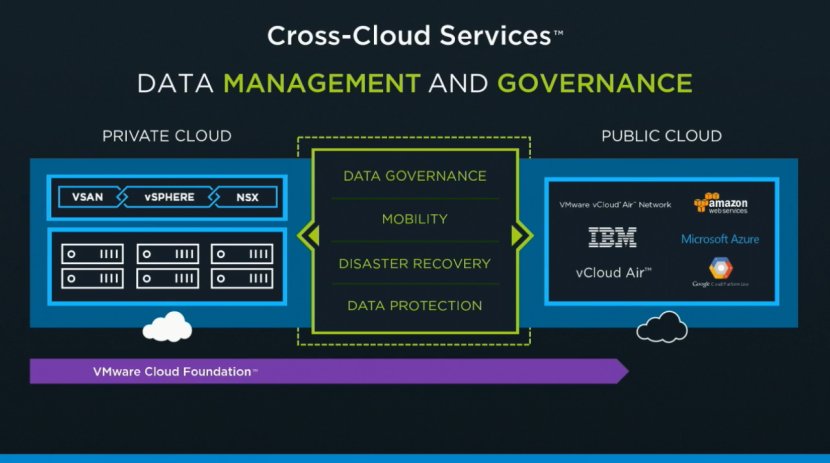

That is why I was so excited about the VMworld US keynote. Cross Cloud Services on top of hyper-converged, leveraging all the tools VMware provides today (vSphere, VSAN, NSX), will allow you to do exactly what I describe above. Whether that is to IBM, vCloud Air or any other of the mega clouds listed in the slide is even beside the point. Extending your datacenter services into public clouds is what we have been talking about for a while: a hybrid approach which could bring (dare I say) elasticity. This is a fundamental aspect of SDDC, of which a hyper-converged architecture is simply a key pillar.

Hyper-converged by itself does not make a private cloud. Hyper-converged does not deliver a full SDDC stack; it is a great step in the right direction, however. But before you take that (necessary) hyper-converged step, ask yourself what is next on the journey to SDDC. Networking? Security? Automation/Orchestration? Logging? Monitoring? Analytics? Hybridity? Who can help you reach full potential, who can help you take those next steps? That’s what excites me, that is why I work for VMware. I believe we have a great opportunity here, as we are the only company who holds all the pieces to the SDDC puzzle. And with regards to what is next? Deliver all of that in an easy-to-consume manner, that is what is next!

E1000 VMware issues

Lately I’ve noticed that various people have been hitting my blog through the search string “e1000 VMware issues”. I want to make sure people end up in the right spot, so I figured I would write a quick article that points people there. I’ve hit the issues described in the various KB articles myself, and I know how frustrating it can be. The majority of problems seen with the E1000 and E1000E drivers have been solved in the newer releases. I always run the latest and greatest version, so it isn’t something I encounter any longer, but you may potentially witness the following:

- vmkernel.log entries with “Heap netGPHeap already at its maximum size. Cannot expand.”

- PSOD with “E1000PollRxRing@vmkernel#nover+”

- vmware.log entries with “[msg.ethernet.e1000.openFailed] Failed to connect ethernet0.”

These problems are seen with vSphere 5.1 U2 and earlier, and patches have been released to mitigate them. If you are running one of those versions, either apply the patch or, preferably, upgrade: to vSphere 5.1 Update 3 at a minimum if you are still running 5.0 or 5.1, or move up to the latest 5.5 release.
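If you want to check quickly whether a collected log contains any of the three signatures listed above, a small script along these lines could help. This is a minimal sketch: the signature strings are taken from the list above, while the function name, the short labels and the sample log lines are made up for illustration and are not real log output.

```python
# Signature substrings taken from the symptoms listed above.
# The dictionary keys are hypothetical labels chosen for this example.
SIGNATURES = {
    "heap_exhausted": "Heap netGPHeap already at its maximum size",
    "psod_rx_ring": "E1000PollRxRing@vmkernel#nover+",
    "connect_failed": "[msg.ethernet.e1000.openFailed]",
}


def scan_lines(lines):
    """Count how many lines contain each known E1000 signature."""
    hits = {name: 0 for name in SIGNATURES}
    for line in lines:
        for name, needle in SIGNATURES.items():
            if needle in line:
                hits[name] += 1
    return hits


if __name__ == "__main__":
    # Made-up sample lines, only here to show how the function is used.
    sample = [
        "WARNING: Heap netGPHeap already at its maximum size. Cannot expand.",
        "some unrelated log line",
        "[msg.ethernet.e1000.openFailed] Failed to connect ethernet0.",
    ]
    for name, count in scan_lines(sample).items():
        print(f"{name}: {count}")
```

To scan a real file, pass an open file object instead of the sample list, for example `scan_lines(open(path, errors="replace"))`, where `path` points at a copy of vmkernel.log or vmware.log.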

KB articles with more details can be found here:

Virtualization networking strategies…

I was asked a question on LinkedIn about the different virtualization networking strategies from a host point of view. The question came from someone who recently had 10GbE infrastructure introduced into his data center. The network was originally architected with 6 x 1Gbps carved up in three bundles of 2 x 1Gbps, with three types of traffic each using their own pair of NICs: Management, vMotion and VM. 10GbE was added to the current infrastructure, and the question which came up was: should I use 10GbE while keeping my 1Gbps links for things like management, for instance? The classic model has a nice separation of network traffic, right?

Well, I guess from a visual point of view the classic model is nice, as it provides a lot of clarity around which type of traffic uses which NIC and which physical switch port. However, in the end you typically still end up leveraging VLANs, so on top of the physical separation you also provide a logical separation. This logical separation is the most important part if you ask me. Especially when you leverage Distributed Switches and Network IO Control, you can create a great, simple architecture which is fairly easy to maintain and implement, both from a physical and a virtual point of view. Yes, from a visual perspective it may be a bit more complex, but I think the flexibility and simplicity that you get in return definitely outweigh that. I definitely would recommend, in almost all cases, to keep it simple. Converge physically, separate logically.
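To give a feel for what "separate logically" means on a converged link, here is a minimal sketch of proportional share-based allocation, the general idea behind Network IO Control shares. The share values and the function name are hypothetical and chosen purely for illustration. Shares only matter under contention, and only traffic types that are actively sending compete for bandwidth.

```python
def bandwidth_under_contention(link_gbps, shares, active):
    """Split a saturated link between the active traffic types in proportion to their shares."""
    total = sum(shares[name] for name in active)
    if total == 0:
        return {}
    return {name: link_gbps * shares[name] / total for name in active}


if __name__ == "__main__":
    # Hypothetical share values for the three traffic types from the question.
    shares = {"management": 20, "vmotion": 50, "vm": 100}

    # All three traffic types compete for a single 10GbE uplink.
    for name, gbps in bandwidth_under_contention(10, shares, list(shares)).items():
        print(f"{name}: {gbps:.2f} Gbps")

    # When no vMotion is running, its portion is available to the others.
    for name, gbps in bandwidth_under_contention(10, shares, ["management", "vm"]).items():
        print(f"{name}: {gbps:.2f} Gbps")
```

The point of the sketch is that no traffic type is tied to a dedicated NIC pair: each gets a guaranteed slice when the link is busy, and can use whatever is free when it is not.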

Book: Networking for VMware Administrators

Fellow blogger Chris Wahl just announced the availability of an awesome book titled Networking for VMware Administrators, which he authored with Steve Pantol. The book is published via VMware Press and is a must-read if you ask me. I am going to order it for sure, as it is an area that I can definitely brush up on. The book is 368 pages and covers everything from the networking models to switching, but of course heavily focuses on the virtual side and dives into the standard vSwitch, distributed switch and the Cisco Nexus 1000v!

Knowing Chris, this book is going to be worth it; his blog material has always been excellent and I expect nothing less. Congrats Chris and Steve, awesome work, and I am looking forward to reading it.