I started digging into this yesterday after receiving a comment on my Metro Cluster article. I found it very challenging to get through the vSphere Metro Storage Cluster HCL details, so I decided to write an article about it, which might help you as well when designing or implementing a solution like this.

First things first, here are the basic rules for a supported environment:

(Note that the rules below are taken from the “important support information”, which you can see in the screenshot, call out 3.)

- Only array-based synchronous replication is supported; asynchronous replication is not.

- Storage Array types FC, iSCSI, SVD, and FCoE are supported.

- NAS devices are not supported with vMSC configurations at the time of writing.

- The maximum supported latency between sites on the ESXi Ethernet networks is 10 milliseconds RTT.

- Note that 10 ms of latency for vMotion is only supported with Enterprise Plus licenses (Metro vMotion).

- The maximum supported latency for synchronous storage replication is 5 milliseconds RTT (or higher depending on the type of storage used, please read more here.)
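To make these rules a bit more tangible, here is a minimal Python sketch that checks a planned design against them. It is only an illustration of the list above, not an official VMware tool: `VmscConfig`, `check_vmsc_rules` and all field names are made up for this example, and the 5 ms threshold for vMotion without Metro vMotion is my own assumption (it is not stated in the support information quoted here). The storage RTT limit is a parameter because, as noted above, it can be higher depending on the storage used.

```python
from dataclasses import dataclass

# Storage array types listed as supported in the rules above.
SUPPORTED_STORAGE_TYPES = {"FC", "iSCSI", "SVD", "FCoE"}

# Maximum RTT between sites on the ESXi Ethernet networks (from the rules above).
MAX_NETWORK_RTT_MS = 10.0


@dataclass
class VmscConfig:
    """Hypothetical description of a planned vMSC design."""
    storage_type: str
    synchronous_replication: bool
    network_rtt_ms: float
    storage_rtt_ms: float
    enterprise_plus: bool = False


def check_vmsc_rules(cfg, max_storage_rtt_ms=5.0, standard_vmotion_rtt_ms=5.0):
    """Return a list of rule violations; an empty list means no rule was broken.

    max_storage_rtt_ms defaults to the 5 ms from the rules above.
    standard_vmotion_rtt_ms is an ASSUMPTION, not taken from the article.
    """
    problems = []
    if not cfg.synchronous_replication:
        problems.append("replication must be synchronous")
    if cfg.storage_type not in SUPPORTED_STORAGE_TYPES:
        problems.append(f"storage type {cfg.storage_type!r} is not supported")
    if cfg.network_rtt_ms > MAX_NETWORK_RTT_MS:
        problems.append("ESXi network RTT exceeds 10 ms")
    elif cfg.network_rtt_ms > standard_vmotion_rtt_ms and not cfg.enterprise_plus:
        problems.append("this network RTT requires Enterprise Plus (Metro vMotion)")
    if cfg.storage_rtt_ms > max_storage_rtt_ms:
        problems.append(f"storage replication RTT exceeds {max_storage_rtt_ms} ms")
    return problems


if __name__ == "__main__":
    design = VmscConfig("NAS", synchronous_replication=False,
                        network_rtt_ms=12.0, storage_rtt_ms=6.0)
    for problem in check_vmsc_rules(design):
        print("NOT SUPPORTED:", problem)
```

Passing this check does not make a design supported; the array still has to be listed on the HCL, which is what the rest of this article is about.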

You might ask yourself: how do I know if the array / solution I am looking at is supported, and what are the constraints / limitations? This is the path you should walk to find out:

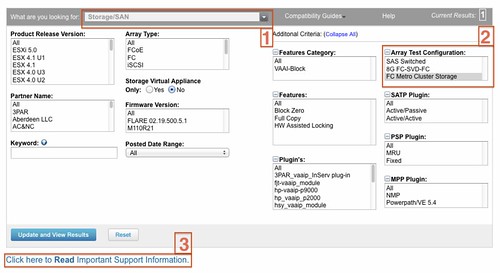

- Go to: http://www.vmware.com/resources/compatibility/search.php?deviceCategory=san (see screenshot, call out 1)

- In the “Array Test Configuration” section, select the appropriate configuration type, for instance “FC Metro Cluster Storage” (see screenshot, call out 2). Note that there is no other category at the time of writing.

- Hit the “Update and View Results” button

- This will result in a list of supported configurations for FC-based metro cluster solutions. Currently only EMC VPLEX is supported.

- Click the name of the model (in this case VPLEX) and note all the details listed

- Unfold the “FC Metro Cluster Storage” solution for the footnotes as they will provide additional information on what is supported and what is not.

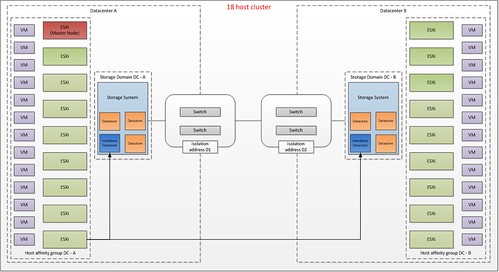

- In the case of our example, VPLEX, it says “Only Non-uniform host access configuration is supported” but what does this mean?

- Go back to the Search Results and click the “Click here to Read Important Support Information” link (See screenshot, call out 3)

- Halfway down, it provides details under “vSphere Metro Cluster Storage (vMSC) in vSphere 5.0”

- It states that “non-uniform” means that ESXi hosts are only connected to the storage node(s) in the same site. Paths presented to ESXi hosts from storage nodes are limited to the local site.

- Note that in this case not only is “non-uniform” a requirement, you will also need to adhere to the latency and replication type requirements as listed above.
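The “non-uniform” definition above can be expressed as a simple check: every path from an ESXi host must end at a storage node in the host's own site. The sketch below assumes you have an inventory of hosts, storage nodes and paths in plain Python structures; the function names, host names and site labels are all hypothetical and are not pulled from any VMware or EMC API.

```python
def cross_site_paths(host_sites, node_sites, paths):
    """Return every (host, storage_node) path that crosses sites.

    host_sites: dict mapping ESXi host name -> site label
    node_sites: dict mapping storage node name -> site label
    paths:      iterable of (host, storage_node) tuples
    """
    return [(host, node) for host, node in paths
            if host_sites[host] != node_sites[node]]


def is_non_uniform(host_sites, node_sites, paths):
    """True when all paths stay within the local site, as in the definition above."""
    return not cross_site_paths(host_sites, node_sites, paths)


if __name__ == "__main__":
    # Hypothetical two-site inventory.
    hosts = {"esx-a1": "site-A", "esx-b1": "site-B"}
    nodes = {"node-a": "site-A", "node-b": "site-B"}
    paths = [("esx-a1", "node-a"), ("esx-b1", "node-b"), ("esx-a1", "node-b")]
    print(is_non_uniform(hosts, nodes, paths))
    print(cross_site_paths(hosts, nodes, paths))
```

In a real environment you would still have to collect the path information yourself; this only shows the rule that is being applied.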

Yes, I realize this is not a perfect way of navigating through the HCL, and I have already reached out to the people responsible for it.