I started digging into this yesterday after I received a comment on my Metro Cluster article. I found it very challenging to get through the vSphere Metro Storage Cluster HCL details and decided to write an article about it, which might help you as well when designing or implementing a solution like this.

First things first, here are the basic rules for a supported environment:

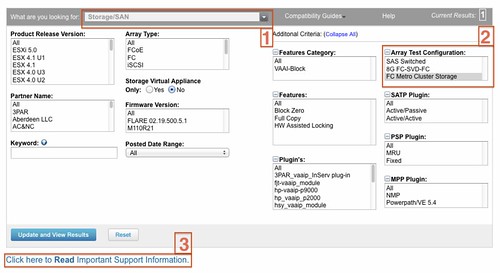

(Note that the below is taken from the “important support information”, which you see in the “screenshot, call out 3”.)

- Only array-based synchronous replication is supported and asynchronous replication is not supported.

- Storage Array types FC, iSCSI, SVD, and FCoE are supported.

- NAS devices are not supported with vMSC configurations at the time of writing.

- The maximum supported latency on the ESXi Ethernet networks between sites is 10 milliseconds RTT.

- Note that 10ms of latency for vMotion is only supported with Enterprise Plus licenses (Metro vMotion).

- The maximum supported latency for synchronous storage replication is 5 milliseconds RTT (or higher depending on the type of storage used, please read more here.)
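To make the rules above a bit more tangible, here is a minimal sketch in Python that checks a proposed design against them. The class, field, and function names are made up for illustration; the 5 ms standard vMotion figure comes from a comment further down this thread rather than from the list above, and the storage replication limit is a parameter because the list says it can be higher depending on the storage used. Treat it as a checklist in code form, not as an official validation tool.

```python
from dataclasses import dataclass

# Array types listed as supported in the rules above (NAS is not supported).
SUPPORTED_ARRAY_TYPES = {"FC", "iSCSI", "SVD", "FCoE"}


@dataclass
class StretchedDesign:
    """Hypothetical description of a proposed stretched cluster design."""
    array_type: str          # e.g. "FC", "iSCSI", "NAS"
    replication: str         # "synchronous" or "asynchronous"
    network_rtt_ms: float    # RTT on the ESXi networks between sites
    storage_rtt_ms: float    # RTT for synchronous storage replication
    enterprise_plus: bool    # Enterprise Plus licensing (Metro vMotion)


def check_design(design, storage_rtt_limit_ms=5.0):
    """Return a list of rule violations; an empty list means none were found."""
    problems = []
    if design.array_type not in SUPPORTED_ARRAY_TYPES:
        problems.append(f"array type {design.array_type} is not supported")
    if design.replication != "synchronous":
        problems.append("only array-based synchronous replication is supported")
    if design.network_rtt_ms > 10.0:
        problems.append("network RTT exceeds 10 ms")
    elif design.network_rtt_ms > 5.0 and not design.enterprise_plus:
        # Assumption: 5 ms is the limit without Metro vMotion (per the comments).
        problems.append("network RTT above 5 ms requires Enterprise Plus")
    if design.storage_rtt_ms > storage_rtt_limit_ms:
        problems.append(f"storage RTT exceeds {storage_rtt_limit_ms} ms")
    return problems


if __name__ == "__main__":
    design = StretchedDesign("FC", "synchronous", 8.0, 3.0, enterprise_plus=False)
    for problem in check_design(design):
        print(problem)
```

Passing a higher `storage_rtt_limit_ms` is how you would reflect a storage vendor that documents a more relaxed replication latency.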

How do I know if the array / solution I am looking at is supported, and what are the constraints / limitations, you might ask yourself? This is the path you should walk to find out:

- Go to : http://www.vmware.com/resources/compatibility/search.php?deviceCategory=san (See screenshot, call out 1)

- In the “Array Test Configuration” section select the appropriate configuration type, for instance “FC Metro Cluster Storage” (See screenshot, call out 2; note that there’s no other category at the time of writing)

- Hit the “Update and View Results” button

- This will result in a list of supported configurations for FC based metro cluster solutions; currently only EMC VPLEX is supported

- Click the name of the Model (in this case VPLEX) and note all the details listed

- Unfold the “FC Metro Cluster Storage” solution for the footnotes as they will provide additional information on what is supported and what is not.

- In the case of our example, VPLEX, it says “Only Non-uniform host access configuration is supported” but what does this mean?

- Go back to the Search Results and click the “Click here to Read Important Support Information” link (See screenshot, call out 3)

- Halfway down it will provide details for “vSphere Metro Cluster Storage (vMSC) in vSphere 5.0”

- It states that in a “Non-uniform” configuration ESXi hosts are only connected to the storage node(s) in the same site. Paths presented to ESXi hosts from storage nodes are limited to the local site.

- Note that in this case not only is “non-uniform” a requirement, you will also need to adhere to the latency and replication type requirements as listed above.
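The “non-uniform” definition boils down to a simple property: no path from an ESXi host should end at a storage node in the other site. Below is a small sketch of that check. The host names, node names, and the shape of the inventory data are all hypothetical; in a real environment you would have to build these mappings from your own inventory.

```python
def cross_site_paths(host_site, node_site, paths):
    """Return the (host, storage_node) paths that cross from one site to the other."""
    return [(host, node) for host, node in paths
            if host_site[host] != node_site[node]]


def is_non_uniform(host_site, node_site, paths):
    """True when every path stays within the host's own site."""
    return not cross_site_paths(host_site, node_site, paths)


if __name__ == "__main__":
    # Hypothetical inventory: two hosts and two storage nodes across two sites.
    host_site = {"esxi-a1": "A", "esxi-b1": "B"}
    node_site = {"node-a": "A", "node-b": "B"}
    paths = [("esxi-a1", "node-a"), ("esxi-b1", "node-b"), ("esxi-b1", "node-a")]

    for host, node in cross_site_paths(host_site, node_site, paths):
        print(f"{host} has a path to {node} in the other site")
```

Returning the offending paths rather than a bare true/false makes it easier to see which zoning or masking entry needs attention.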

Yes I realize this is not a perfect way of navigating through the HCL and have already reached out to the people responsible for it.

Worth mentioning on this topic are the VMware KBs for stretched clusters: KB2000948 (IBM SVC Split IO), KB1001783 (NetApp Metro), KB1026692 (EMC VPLEX Metro).

Keep in mind that some of these are not for vSphere 5.0 currently, though 🙂

Disclosure – EMCer here.

It’s worth noting that the VPLEX KB article has an update specifically for vMSC and vSphere 5.

http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=2007545

There have been (for some time now) a set of stretched cluster solutions at the storage level (NetApp Metrocluster, EMC VPLEX, HP P-series aka Lefthand, IBM SVC etc) – several of which (mostly EMC, NetApp and HP – the SVC one has some real funky bits) have customers using them in the stretched vSphere cluster use case.

Some also have long KB articles, and storage-vendor documentation about how to use them.

The point of vMSC is that these configurations have been historically:

– relatively complex

– have many, MANY hidden “gotchas”, mostly related to VM HA behavior, but also split-brain and APD conditions on storage partition. Lee Dilworth and I did a VMworld 2011 session (BCO2479) which you can find online (google “virtual geek BCO2479”)

– most importantly – VMware never tested (and therefore never explicitly supported) all the additional failure conditions (they tested them as “normal” storage HCL test harnesses) that Duncan points out in this post.

Remember, I’m not saying solutions like yours don’t work, they certainly do. How I would characterize it is that vMSC and the explicit VMware support is an important step to mainstreaming these sorts of stretched cluster configurations (with more steps to come).

vMSC would not be possible without the changes in vSphere 5, and loads, and loads of testing.

I fully expect Lefthand to join quickly (their stretched cluster model supports the design model closely, while as you would expect, I would argue vehemently that EMC VPLEX is superior), and I’m sure that NetApp will join eventually (a little harder, as it’s a stretched storage aka Uniform Access model).

Thanks for the follow-up post Duncan, navigating through the HCL and KBs definitely wasn’t very intuitive and left me confused. This does clear up most of the questions I had.

One final question I had… According to the HCL, VPLEX is supported on ESX 4.1, but not ESXi 4.1 (only 5.0), any ideas why ESX 4.1 is supported but not ESXi 4.1?

This probably means it has not been explicitly tested, but I am not sure. Maybe Chad can fill us in.

@Craig – disclosure, EMCer here

Your vSphere 4.1 observation re VPLEX is like some of the other comments in this thread… With vSphere 4.x and VPLEX, the core question is one of what has been tested, by whom, and where VMware’s support begins and ends.

Like NetApp Metrocluster, Lefthand, and other examples, there are plenty of EMC VPLEX happy, working customers in stretched configurations that aren’t running vSphere 5, but are on vSphere 4.1. Some of those customers use Host Affinity groups, some don’t.

Like those cases, there are earlier VMware KB articles, and vendor-provided documentation (in this case the vendor is EMC), that describe how to use it and the failure conditions.

Until vSphere 5, and the vMSC, VMware didn’t explicitly test (for themselves) the failure conditions that are associated with the stretched cluster use case. The HCL test harness for stretched storage configurations was the same as for a normal storage target.

That means that if you follow the “letter of the law”, VMware doesn’t explicitly support vSphere 4.1 and VPLEX stretched cluster configurations, but they also don’t explicitly support ANY stretched cluster configurations. REMEMBER: that doesn’t mean “doesn’t work”, but the customer needs to understand that VMware will redirect to the storage vendor in certain circumstances.

This is what Lee Dilworth @ VMware and I were talking about in BCO2479 at VMworld about “hardening” the stretched cluster use case…. vSphere 5, and its changes to VM HA, changes to path failure handling, addition to the HCL (building the automated test harness was a TON of work, as an example, as the failure modes are a lot broader than a traditional non-stretched cluster) for this specific use case – these are all the first steps of making this use case (stretched clusters) more mainstream (with more stuff to come, like surfacing “sidedness” for automated placement of VMs and more)

Hope that helps!

I am just wondering why you said NetApp MetroCluster is not supported on vSphere 5. NetApp MetroCluster had been in place for years, even before EMC started to talk about VPLEX.

Is this part of the certification process for VMware to certify it?

From your previous post, if we compare the technology behind both VPLEX and NetApp MetroCluster, the back-end architectures are two different things. That is why I raised my question in your previous post: does the stretched metro cluster you mentioned only apply to a specific brand? I do not believe all storage vendors will develop the same architecture to achieve the same result. Let’s see what the response from NetApp is on this.

Currently only EMC VPLEX is officially certified. The only response NetApp can have is: “that is correct, we are undergoing the same process and hope to join EMC soon.”

Read my other article on what is supported/certified and what not for more details: http://www.yellow-bricks.com/2011/10/07/vsphere-metro-storage-cluster-solutions-what-is-supported-and-what-not/

As Chad explained, normal rules don’t apply when designing metro clusters, i.e. isolation response, split brain, etc. Hopefully VMware will bring out more material on metro clusters and HA options even if it does eat into their SRM sales. Datastore heartbeating will help but it’s not the total solution.

To all those people thinking of doing it: go for it, the results are excellent and it isn’t as complex as you’d think. We’ve had complete datacentre scheduled outages with no downtime to users. It’s great.

Our Lefthand multi-site cluster runs 200 VMs and about 50 TB of storage with no issues NOW…..but earlier ;). Hopefully HP can get on the certified list soon.

What other material, beyond what is presented above, would you like to see? To be honest, most of the weight is on the storage side of things and not the vSphere side…

Steve, would you mind sharing some information in your configuration? I am just in the process of implementing 16 P4500 boxes and some real-world input would be nice.

Is it likely that HP EVAs will become supported? Specifically, two EVAs using standard HP Continuous Access without HP SVSP or an equivalent 3rd party storage virtualisation product.

With HP CA a LUN failover is initialized manually so in the event of a site failure (i.e. hosts and storage down) the VMs would fail to HA recover.

I have tested a LUN failover and the WWN on the source and destination LUN remains the same so just a datastore rescan is required.

I’m currently using the classic two site SRM configuration but considering a design change with vSphere 5.

It is up to HP to test and certify the configuration, to be honest.

Yes it is.

My question really is in order for a storage platform to be certified does the failover for a synchronous mirrored datastore have to be automated? As mentioned without a storage virtualisation layer the EVA LUNs require a manual failover.

VPLEX is EMC’s heterogeneous storage virtualization product. The question was asked if HP EVA is supported… VPLEX can sit in front of many vendors storage. VPLEX has a HCL which details which storage is supported.

You recommend Storage DRS in manual mode for vMSC. Why not automatic within two datastore clusters that match VPLEX consistency groups?

“Only array-based synchronous replication is supported and asynchronous replication is not supported.”

By this did you mean that vSphere Replication is not supported? Apologies for my ignorance, I am still a newbie when it comes to vMSC.

vSphere Replication doesn’t offer vMSC capability at all. So not really relevant in this discussion.

Duncan, if you create two datastore clusters from storage in two VPLEX consistency groups (one defaulting to Site A, one to Site B), can you use automatic SDRS? The VMs would only be able to move within datastores on their default site. There would be additional WAN traffic, but it’s unlikely that VMs would be migrated that often.

Also, have you seen this VMware KB where it seems to suggest SDRS shouldn’t be used on VPLEX distributed virtual volumes (the foundation of a vMSC!):

http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=2042596

thanks for your help

Are you able to help, Duncan?

thanks

Let me look in to it.

Were you able to find out any more information on using SDRS with VPLEX?

Is it something Chad Sakac may be able to help with, or should I just log an SR?

@Graham http://kb.vmware.com/kb/2042596

Any of you Cisco Nexus experts out there know if it would be possible to run the vMSC over FCoE? I’m thinking 5K at each site, dark fiber connecting them, FC connections from each 5K to the storage at the respective site.

How do you have the FCF on each side talk to each other with an ISL trunk of sorts over FCoE?

Storage[FC]—[FC]Nexus5K[FCoE]—————————-[FCoE]Nexus5K[FC]Storage

How far are the two sites apart? I don’t think you can use FCoE as a data centre interconnect due to the buffer limitations. You can use FCIP though, and I’m sure you can use VPLEX with FCIP routers as we looked at doing it before there was a native 10GbE option.

Graham

Hello Duncan,

Thanks a lot for this article.

Currently, I have a datacore solution.

If you don’t know much about datacore, here’s a diagram that explains it:

http://www.storagenewsletter.com/images/public/sites/StorageNewsletter.com/articles/icono7/datacore_sansymphonyv.jpg

We have two redundant lines, running 10 Gb/s IP and 8 Gb/s FC.

Maximum length of the line is 7 km (roughly 4.3 miles).

My question: is metrocluster supported for a Datacore solution?

Also, what would be the requirements? I saw in your documentation that 5 ms RTT is required. How would I measure something like that with Datacore?

In Datacore there are no active-standby nodes, only a preferred path. Datacore is a uniform solution. I don’t know how this would fit inside the metro storage cluster…

Please help,

Thank you 🙂

Datacore is not on the vMSC HCL at the moment as far as I can tell. But I am not part of the certification team for vMSC so cannot say much about it other than: contact a Datacore representative.

Thank you, Duncan.

First point, as Duncan says, I don’t believe Datacore is on the vMSC HCL. You can check the HCL here:

http://www.vmware.com/resources/compatibility/search.php?deviceCategory=san

The latency you refer to needs to be measured between the Datacore nodes, so across the fabrics/networks you are going to use to connect them. The actual requirement will be based on whatever Datacore requires. ESXi has latency requirements for vMotion which are less than 5ms, or less than 10ms if you’re using Enterprise Plus licensing.
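As a rough sanity check before measuring anything, you can estimate the propagation part of the RTT from the fibre length alone. The sketch below assumes light travels through fibre at about 200,000 km/s (roughly 5 microseconds per km one way), which is a common rule of thumb and not a figure from this article. It ignores switches, routers, and the storage nodes themselves, so the measured RTT will always be higher than this number.

```python
# Assumption: signal speed in optical fibre is roughly 200,000 km/s,
# which works out to 200 km per millisecond.
FIBRE_KM_PER_MS = 200.0


def propagation_rtt_ms(distance_km):
    """Estimate the round-trip propagation delay in milliseconds for a fibre run."""
    return 2 * distance_km / FIBRE_KM_PER_MS


if __name__ == "__main__":
    # The 7 km line mentioned earlier in this thread.
    rtt = propagation_rtt_ms(7)
    print(f"Estimated propagation RTT over 7 km: {rtt:.2f} ms")
```

For the 7 km line discussed above this gives about 0.07 ms of pure propagation delay, so distance alone is unlikely to be the limiting factor at that range; the equipment in the path and the storage solution's own requirements matter more.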

If/when you speak to Datacore, I would ask them about their LUN ownership model as my knowledge of their solution (which I fully accept may be out of date) was that there wasn’t a concept of site awareness. This means that if the intersite links fail, you need to understand fully how the two nodes prevent a split brain scenario. Other vMSC solutions use the concept of a 3rd site witness or quorum server to aid this situation, but I’m not sure Datacore do.
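To illustrate why a witness helps, here is a toy model of third-site arbitration. It is not how Datacore, VPLEX, or any other product actually behaves; the function name, the `preferred` parameter, and the rule that both sites suspend I/O when neither can reach the witness are assumptions made purely for this sketch. The point is only that when the inter-site link fails, at most one site is allowed to keep serving the mirrored volume.

```python
def sites_serving_io(link_up, witness_sees_a, witness_sees_b, preferred="A"):
    """Toy arbitration model: return the set of sites allowed to keep serving I/O."""
    if link_up:
        # Replication between the sites is healthy, so both can serve I/O.
        return {"A", "B"}

    # Inter-site link is down: the witness lets exactly one reachable site continue.
    candidates = [site for site, seen in (("A", witness_sees_a), ("B", witness_sees_b))
                  if seen]
    if not candidates:
        # Assumption: without witness contact both sites suspend I/O
        # rather than risk the two copies diverging.
        return set()
    winner = preferred if preferred in candidates else candidates[0]
    return {winner}


if __name__ == "__main__":
    # Link failure while the witness can still reach both sites.
    print(sites_serving_io(link_up=False, witness_sees_a=True, witness_sees_b=True))
    # Site A is completely down, so the witness only sees site B.
    print(sites_serving_io(link_up=False, witness_sees_a=False, witness_sees_b=True))
```

The question to put to any vendor is what replaces the witness in this picture, and what each node does when it cannot tell a link failure from a failure of the other site.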

Graham

Thanks Graham,

Already in touch with Datacore team to find out what’s the best scenario for this…

Will keep you updated.

Ahmed