I had a discussion via email about metro clusters and HA last week and it made me realize that HA’s new architecture (as part of vSphere 5.0) might be confusing to some. I started re-reading the article which I wrote a while back about HA and metro / stretched cluster configurations and most actually still applies. Before you read this article I suggest reading the HA Deepdive Page as I am going to assume you understand some of the basics. I also want to point you to this section in our HCL which lists the certified configurations for vMSC (vSphere Metro Storage Cluster). You can select the type of storage like for instance “FC Metro Cluster Storage” in the “Array Test Configuration” section.

In this article I will take a single scenario and explain the different types of failures and how HA handles them underneath. I guess the most important part in these scenarios is why HA did or did not respond to a failure.

Before I explain the scenario I want to briefly explain the concept of a metro / stretched cluster, which can be carved up into two different types of solutions. The first solution is where a synchronous copy of your datastore is available on the other site; this mirror copy will be read-only. In other words there is a read-write copy in Datacenter-A and a read-only copy in Datacenter-B. This means that your VMs in Datacenter-B located on this datastore will do I/O on Datacenter-A since the read-write copy of the datastore is in Datacenter-A. The second solution is what EMC calls “write anywhere”. In this case VMs always write locally. The key point here is that each of the LUNs / datastores has a “preferred site” defined, which is also sometimes referred to as “site bias”. In other words, if anything happens to the link in between, then the storage system on the preferred site for a given datastore will be the only one left with read-write access to it. This is of course to avoid any data corruption in the case of a failure scenario.

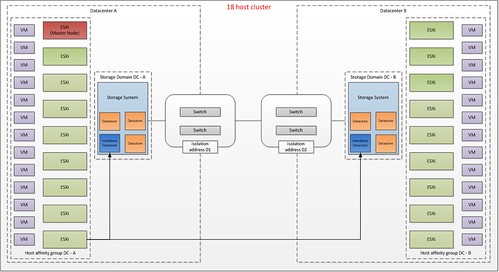

In this article we will use the following scenario:

- 2 sites

- 18 hosts

- 50KM in between sites

- Preferred (aka “Should”) VM-Host affinity rule to create “Datacenter Affinity – I/O Locality”

- Designated heartbeat datastores (read this article for more details on heartbeat datastores)

- Synchronous mirrored datastores

(Please note that I have not depicted the “mirror” copy just to simplify the diagram)

This is what it will look like:

What do you see in this diagram? Each site will have 9 hosts. The HA master is located in Datacenter-A. Each host will use the designated “heartbeat datastore” in each of the datacenters, note that I only drew the line for the lower left ESXi host just to simplify the diagram.

There are many failures which can occur but HA will be unaware of many of these. I will not discuss these as they are explained in-depth in the storage vendor’s documentation. I will however discuss the following “common” failures:

- Host failure in Datacenter-A

- Storage Failure in Datacenter-A

- Loss of Datacenter-A

- Datacenter Partition

- Storage Partition

Host failure in Datacenter-A

When a host fails in Datacenter-A this is detected by the HA master node as network heartbeats from it are no longer received. When the master has detected that network heartbeats are missing it will start monitoring for datastore heartbeats. As the host has failed there will be no datastore heartbeats issued. During this time a third liveness check will be done, which is pinging the management address of the failed host. If all of these liveness checks are unsuccessful, the master will declare the host dead, and will attempt to restart all the protected virtual machines that were running on the host before the master lost contact with it. The rules defined on a cluster level are “preferred rules” and as such the virtual machines can be restarted on the other site. If DRS is enabled then it will attempt to correct any sub-optimal placements HA made during the restart of your virtual machines. This same scenario also applies to a situation where all hosts fail in one site without storage being affected.
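The order of the liveness checks described above can be sketched as a small decision function. This is an illustrative model only, not how HA is actually implemented; the `HostObservation` type, the `classify_host` function and the state labels are hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass
class HostObservation:
    """What the master currently observes for one host (hypothetical model)."""
    network_heartbeat: bool    # network heartbeats are still being received
    datastore_heartbeat: bool  # the heartbeat datastore is still being updated
    ping_response: bool        # the management address answers a ping


def classify_host(obs: HostObservation) -> str:
    """Return an illustrative state label for a host, as seen by the master."""
    if obs.network_heartbeat:
        # Datastore heartbeats are only consulted once network heartbeats stop.
        return "alive"
    if obs.datastore_heartbeat or obs.ping_response:
        # At least one liveness check succeeded, so the host is not declared dead.
        return "unreachable-but-alive"
    # All three liveness checks failed: the master declares the host dead
    # and attempts to restart its protected virtual machines.
    return "dead"


failed_host = HostObservation(False, False, False)
print(classify_host(failed_host))
```

Note that a host which still sends network heartbeats is never checked any further in this model, which is also why a short loss of the datastore heartbeat alone does not cause HA to act.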

Storage Failure in Site-A

In this scenario only the storage system fails in Site-A. This failure does not result in downtime for your VMs. What will happen? Simply said, the read-only mirror copy of your datastore will become read-write and be presented with the same identifier, and as such the hosts will be able to write to these volumes without the need to resignature. (This sounds very simple of course, but do note I am describing it on an extremely high level and in most solutions manual intervention is required to indicate a failure has occurred.) In most cases however, from a VM’s perspective this happens seamlessly. It should be noted that all I/O will now go across your link to the other site. Note that HA is not aware of this failure. Although the storage heartbeat might be lost for a second, HA will not take action, as an HA master agent only checks for the storage heartbeat when the network heartbeat has not been received for three seconds.

Loss of Datacenter-A

This is basically a combination of the first and the second failure we’ve described. In this scenario, the hosts in Datacenter-B will lose contact with the master and elect a new one. The new master will access the per-datastore files HA uses to record the set of protected VMs, and so determine the set of HA protected VMs. The master will then attempt to restart any VMs that are not already running on its host and the other hosts in Datacenter-B. At the same time, the master will do the liveness checks noted above, and after 50 seconds, report the hosts of Datacenter-A as dead. From a VM’s perspective the storage fail-over could occur seamlessly. The mirror copy of the datastore is promoted to read-write and the hosts in Datacenter-B will be able to access the datastores which were local to Datacenter-A.

Datacenter Partition

This is where most people feel things will become tricky. What happens if your link between the two datacenters fails? Yes, I realize that the chances of this happening are slim as you would typically have redundancy on this layer, but I do think it is an interesting one to explain. The main thing to realize here is that with these types of failures VM-Host affinity, or should I call it VM-Datacenter affinity, suddenly becomes very important, but we will come back to that in a second. Let’s explain the scenario first.

In this case a distinction could be made between the two types of metro cluster as briefly explained before. There is a solution, which EMC likes to call “write anywhere”, which basically presents a virtual datastore across datacenters and allows writes on both sites. On the other hand there is the traditional stretched cluster solution where there is only one site actively handling I/O and a “passive” site which will be used in case a fail-over needs to occur. In the case of “write anywhere” a so-called site bias or preference is defined per datastore. Both scenarios however are similar in that a given datastore will, in the case of a failure, only be accessible on one site.

If the link between the sites should fail the datastore would become active on just one of the sites. What would happen to the VM that is running on Datacenter-B but has its files stored on a datastore which was configured with site bias for Datacenter-A?

In the case where a VM is running on Datacenter-B, the VM would have its storage “yanked” out from underneath it. The VM would more than likely keep running and keep retrying the I/O. However, as the link between the sites has been broken, HA in Datacenter-A will try to restart the workload. Why is that?

- The network heartbeat is missing because the link dropped

- The datastore heartbeat is missing because the link dropped and the datastore becomes inaccessible from Datacenter-B

- A ping to the management address of the host fails because the link is missing

- The master for Datacenter-A knows the VM was powered on before the failure, and since it can’t communicate with the VM’s host in Datacenter-B after the failure, it will attempt to restart the VM.

What happens in Datacenter-B? In Datacenter-B a master is elected. This master will determine the VMs that need to be protected. Next it will attempt to restart all those that it knows are not already running. Any VMs biased to this site that are not already running will be powered on. However, any VMs biased to the other site won’t be, as their datastore is inaccessible. HA will report a restart failure for the latter since it does not know that the VMs are (still) running in the other site.
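To make the partition behaviour easier to follow, here is a minimal sketch that models what happens to a single VM depending on where it runs and which site its datastore is biased to. It is a simplified illustration of the description above, not HA logic; `PartitionOutcome`, `partition_outcome` and the field names are hypothetical. For a VM running on the non-preferred site the sketch records no restart failure, which is a simplification of mine since the article does not describe one for that case.

```python
from dataclasses import dataclass
from typing import Optional

SITES = ("Datacenter-A", "Datacenter-B")


@dataclass
class PartitionOutcome:
    active_copy_site: str                # where the VM runs with access to its files
    stale_copy_site: Optional[str]       # where a copy keeps retrying I/O, if any
    restart_failure_site: Optional[str]  # where HA reports a failed restart, if any


def partition_outcome(running_site: str, datastore_bias: str) -> PartitionOutcome:
    """Model one VM's fate when the link between the two sites fails."""
    other_site = SITES[1] if datastore_bias == SITES[0] else SITES[0]
    if running_site == datastore_bias:
        # The VM keeps access to its datastore and keeps running. The master
        # elected in the other site cannot see it, tries to restart it, and
        # fails because the datastore is inaccessible there.
        return PartitionOutcome(running_site, None, other_site)
    # The VM runs on the non-preferred site and loses its storage. It keeps
    # retrying I/O there while the master in the preferred site restarts it.
    return PartitionOutcome(datastore_bias, running_site, None)


for site in SITES:
    print(site, partition_outcome(site, "Datacenter-A"))
```

The second case is the one that leads to two copies of the same VM once the link returns, which is why keeping VMs on the preferred site of their datastore matters.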

Now you might wonder what will happen if the link returns? This is the classic “VM split brain scenario”. For a short period of time you will have two active copies of the VM on your network, both with the same MAC address. However, only one copy will have access to the VM’s files and HA will recognize this. As soon as this is detected the VM copy that has no access to the VMDK will be powered off.

I hope all of you understand why it is important to know what the preferential site is for your datastore, as it can and probably will impact your up-time. Also note that although we defined VM-Host affinity rules, these are preferential / should rules and can be violated by both DRS and HA.

Storage Partition

This is the final scenario. In this scenario only the storage connection between Datacenter-A and Datacenter-B fails. What happens in this case? This scenario is very straightforward. As the HA master is still receiving network heartbeats, it will unfortunately not take any action at this point in time. This is very important to realize. Also, HA is not aware of the VM-Host affinity rules. HA will restart virtual machines wherever it feels it should; DRS however should move the virtual machines to the correct location based on these rules. That is, if and when correctly defined of course!

Summarizing

Stretched Clusters are in my opinion great solutions to increase resiliency in your environment. There is however always a lot of confusion around failure scenarios and the different types of responses from both the vSphere layer and the storage layer. In this article I have tried to explain how vSphere HA responds to certain failures in a stretched / metro cluster environment. I hope this will help everyone get a better understanding of vSphere HA. I also fully realize that things can be improved by a tighter integration between HA and your storage systems; for now all I can say is that this is being worked on. I want to finish with a quick summary of some vSphere 5.0 HA design considerations for stretched cluster environments:

- Das.isolationaddress per Datacenter!

  Having multiple isolation addresses will help your hosts understand whether they are isolated or whether the master has been isolated in the case of a network failure.

- Designated heartbeat datastores per Datacenter!

  Each site will need a designated heartbeat datastore to ensure each site can at a minimum update the heartbeat region of the site local storage.

- If there are multiple storage systems on each site it is recommended to increase the number of heartbeat datastores to four, two for each site.

- Define VM-Host affinity rules as they can lower the impact during a failure and can help keep I/O local
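As a rough illustration of the first consideration, the sketch below shows why one isolation address per datacenter helps: a host only considers itself isolated when it sees no HA traffic and none of its isolation addresses respond. The addresses are placeholder documentation addresses, the numbered `das.isolationaddress0` / `das.isolationaddress1` option names are my assumption of how two addresses would be specified (verify them against the documentation for your version), and `host_is_isolated` is a hypothetical helper, not an HA API.

```python
from typing import Dict

# Hypothetical advanced options: one isolation address per datacenter.
ADVANCED_OPTIONS = {
    "das.isolationaddress0": "192.0.2.1",     # placeholder, Datacenter-A
    "das.isolationaddress1": "198.51.100.1",  # placeholder, Datacenter-B
}


def host_is_isolated(sees_ha_traffic: bool, ping_results: Dict[str, bool]) -> bool:
    """Illustrative isolation check for a single host.

    ping_results maps each isolation address to whether it answered a ping.
    """
    if sees_ha_traffic:
        # Heartbeats or election traffic from other hosts: not isolated.
        return False
    # Isolated only if every configured isolation address is unreachable.
    return not any(ping_results.values())


# Inter-site link cut, seen from a host in Datacenter-A: the local address
# still answers, the remote one does not.
link_cut = {
    ADVANCED_OPTIONS["das.isolationaddress0"]: True,
    ADVANCED_OPTIONS["das.isolationaddress1"]: False,
}
print(host_is_isolated(False, link_cut))
```

With only the remote address configured, the same host would have no reachable isolation address after the link failure and would wrongly conclude it is isolated.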

Thanks for taking the time to read this far, and don’t hesitate to leave a comment if you have any questions or feedback / remarks.

<edit> completely coincidentally Chad Sakac posted an article about Stretched Clusters and the new HCL Category. Read it!</edit>

** Disclaimer: This article contains references to the words master and/or slave. I recognize these as exclusionary words. The words are used in this article for consistency because it’s currently the words that appear in the software, in the UI, and in the log files. When the software is updated to remove the words, this article will be updated to be in alignment. **

Excellent article Duncan.

When talking about a storage failure (you used Storage Failure in Site-A), it is important to know whether your storage fabrics are cross-connected, meaning hosts in both datacenters are zoned to see the arrays in both datacenters. Some implementations of stretched clusters do not cross-connect the storage fabric, which would cause downtime for your VMs in Site-A should the array fail.

How would you manually handle that? Can you perform a vMotion to a host in Datacenter-B?

It would definitely be cool to see HA handle this type of failure in the future

Actually that would not be a supported configuration with vSphere 5.0. As stated in the HCL “Paths presented to ESXi hosts are stretched across distance.”

The next section in the HCL is “Non-Uniform host access configuration” and it states “Paths presented to ESXi hosts from storage nodes are limited to local site”. To me it reads that separate fabrics are supported, am I missing something?

I don’t know who wrote it, but it should be rephrased to be clear what is and what is not supported. I’ll ask for an update. Now I get it… that refers to the note in the support statement for the specific array. So in the case of the VPLEX it is only supported in Non-Uniform mode apparently.

Awesome article and great timing (for me)! I need to read up on VPLEX and such storage technologies, but this will certainly be helpful. Thanks Duncan!

Hugo

Is there any specific storage you are referring to here, for example NetApp MetroCluster or EMC VPLEX?

Or should it work with any brand of storage that supports synchronous replication?

I tried to keep it as generic as possible as my focus was on the HA side of things.

Thanks for an excellent article, Duncan. It’s great to see there is almost no chance for a split-brain disaster in a DC partition scenario (assuming you get the storage setup right ;). VMware did a great job with vSphere 5.

However, the networking component is missing in your picture. No problems with any non-partition failure scenario, but when you do get DC partition, you’ll also get split subnets and lots of the external end-user traffic will get forwarded into the wrong DC (resulting in lost user sessions).

I hope you don’t mind if I include a link to a blog post describing the split subnet issues (it also has a few great comments from fellow networking engineers)

http://blog.ioshints.info/2011/04/distributed-firewalls-how-badly-do-you.html

Kind regards

Ivan

I know it was missing. I also did not go too deep into the storage layer as my focus for this article was vSphere and HA.

Thanks for the link,

Is it true that I can failover a mirrored Datastore without taking the VMs offline or forcibly killing them? I am doing some testing here, and I saw that ESX won’t mount a failed-over VMFS if it still ‘remembers’ the ‘old’ paths and the ‘old’ SAN-UUID of it. I had to reboot ESX and do an esxcfg-volume -M to get the VMFS mounted.

I am just testing this. I created a clone of the LUN, failed the original one with one VM running on it and presented the cloned one. Here’s what I get:

~ # esxcfg-volume -l

VMFS UUID/label: 4e8b2a0d-90b3f8cc-d82e-101f742f0252/VC

Can mount: No (the original volume is still online)

Can resignature: Yes

Extent name: naa.6006016006b12d00a621a1b93cf0e011:1 range: 0 – 61183 (MB)

I cannot unmount the original (now inactive) VMFS, because it’s busy. How do I deal with that?

With solutions like NetApp you would need to initiate a “takeover”. With which type of storage did you test this?

I have two VNX5300 (block) here. I can e.g. promote the mirror, but as long as I have a VM running on the dead datastore, my ESX won’t let me mount the replicated (now online) VMFS. As long as a VM is running on the lost datastore I cannot unmount it, because it’s busy. I have to power off the VM and unregister it from vCenter, and after that I can mount the replica and re-register the VM.

It’s as stated here: http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=2004684 (quite at the end, “An unplanned PDL occurs…”).

Will Netapp take over the SAN-UUID of the volume?

Yes NetApp will allow for that. But what you are trying to achieve is not really what we are discussing here to be honest.

Duncan, I understand that we were actually not talking about the same thing. But I would suggest to make that a little more clear in your “Storage Failure in Site-A” scenario. When you talk about the “same identifier” I initially thought you meant the VMFS-identifier. In a synchronous replication with e.g. MirrorView/S the VMFS-identifier surely stays the same, but the LUN-identifier changes. And in that case the failover is not transparent.

After reading the various comments it has become absolutely clear why one needs a storage replication solution that supports vMSC. EMC² MirrorView and HP EVA CA don’t, EMC² VPLEX and HP P4000 do.

Thank you for this great article, it will be very handy in customer discussions!

We actually do this already in vSphere 4.1 🙂 By stretching the cluster between two datacenters connected with dark fiber and using Network RAID 10 with HP P4300/P4500.

Yes I know. But I wrote this article because the way HA behaves is different.

With Lefthand you have a completely different behaviour, because it keeps the LUNs UUID the same. You don’t have a failover like in replicated environments. This makes site failover much simpler with lefthand.

@Ollfried – Disclosure, I’m an EMCer.

There have been (for some time now) a set of stretched cluster solutions at the storage level (NetApp Metrocluster, EMC VPLEX, HP P-series aka Lefthand, etc) – several of which (mostly those three) have customers using them in the stretched vSphere cluster use case.

Some also have long KB articles, and storage-vendor documentation about how to use them.

The point of vMSC is that these configurations have been historically:

– relatively complex

– have many, MANY hidden “gotchas”, mostly related to VM HA behavior, but also split-brain and APD conditions on storage partition. Lee Dilworth and I did a VMworld 2011 session (BCO2479) which you can find online (google “virtual geek BCO2479”)

– most importantly – VMware never tested (and therefore never explicitly supported) all the additional failure conditions (they tested them as “normal” storage HCL test harnesses) that Duncan points out in this post.

Remember, I’m not saying solutions like yours don’t work, they certainly do. How I would characterize it is that vMSC and the explicit VMware support is an important step to mainstreaming these sorts of stretched cluster configurations (with more steps to come).

vMSC would not be possible without the changes in vSphere 5, and loads, and loads of testing.

I fully expect Lefthand to join quickly (their stretched cluster model supports the design model closely, while as you would expect, I would argue vehemently that EMC VPLEX is superior), and I’m sure that NetApp will join eventually (a little harder, as it’s a stretched storage aka Uniform Access model).

Chad, thank you for your input! I only have experience with HP P4000, HP EVA and EMC CX4 and VNX; unfortunately I have never worked with VPLEX. These products will become more and more important in the next few years, because customers want transparent business continuity even in case of disaster. MirrorView and SRM are charming, but VPLEX and P4000 are even more so. I look forward to VMware supporting more than one vCPU in an FT-VM – that will bring us to a whole new level.

Annex A: I hope the SRA for MirrorView will be out soon.

Annex B: HP P-series is no longer only Lefthand. Lefthand is the P4000 series; P6000, for example, is EVA.

Hi Duncan,

I have a misunderstanding. As I understand it, in your solution you use one cluster stretched across two DCs, so how is it possible to use different isolation addresses for different groups of hosts?

Das.isolationaddress per Data center!

Just specify two isolation addresses, one for datacenter-A and one for datacenter-B. If the link is cut, then isolation response will not be triggered as one of the isolation addresses is still reachable.

If in VPLEX vMSC the fabric is not cross-connected, it means that in the scenario in which a storage controller (the whole cluster) in either of the sites fails, the I/O won’t go across the datacenters.

Instead, the ESX servers (supposedly not booted from SAN) in the failed site will lose access to the array, but this will go undetected by HA and will therefore require manual intervention by the administrator in order to get VMs up and running on the healthy site.

Or am I missing something..?

This is where the title of the article is important. People should be aware of what a storage cluster solution is, and it is not storage replication 🙂

Duncan, question about the DC partition scenario with a traditional (NetApp) metrocluster, assuming all of the affinity rules are setup correctly:

If all of the hosts see LUNs from both sides, and the connection (that carries both regular network and FC) goes down, would this cause an APD scenario for both sides? Also, confused about whether isolation response is triggered on a host or hosts that are network partitioned (if they see election traffic from their buddies on their respective side, would they still try and ping the isolation address?) Thanks.

Hi Stacy,

1) if the connection between the hosts and LUNs is gone then ESX will label this as an “APD” indeed.

2) as soon as the host doesn’t receive a heartbeat it will ping the isolation address. when election traffic is seen and a new master is elected the pinging will stop.

Hope that helps,

Thanks, Duncan, for the clarification. So the hosts would not start to become unresponsive due to the APDs on LUNs if site affinity is correct?

Not sure if the isolation addresses/default gateways on each side would be pingable in the event of a complete link failure, but it seems like it would be overkill to specify more than two isolation addresses.

That depends Stacy:

1) if the connection between the hosts and all storage paths are gone –> yes will become unresponsive

2) if you still have access to the “local array” and the affinity is set correctly… no problem

As long as there is traffic possible on each site, and as such a new master will be elected… it shouldn’t be a problem.

I have one query on the heartbeat datastores. If you do have synchronously mirrored storage, should the heartbeat datastores also be mirrored, or should they be stand alone ones, one (or two) in each datacenter?

Hi,

I have one question ,

In a cluster, one of the hosts is grayed out but all the guest VMs are working (on right-click no options are enabled).

1. Is this possible?

2. How do I move all the guests to another host?

Thanks in advance

Great article Duncan. I have seen and read a few articles about stretched clusters. I am wondering, can I still have a single cluster with 32 hosts in a stretched cluster?

What would be the correct behavior if I have a datastore using a stretched VPLEX Metro when there is a WAN-COM disconnection between the two VPLEX clusters? Currently I am seeing that on the ESX host that sees the losing side, the datastore still shows as inactive after the WAN-COM problem has been fixed. I have Disk.AutoremoveOnPDL = 0.

Thanks for the great articles… It’s really helpful