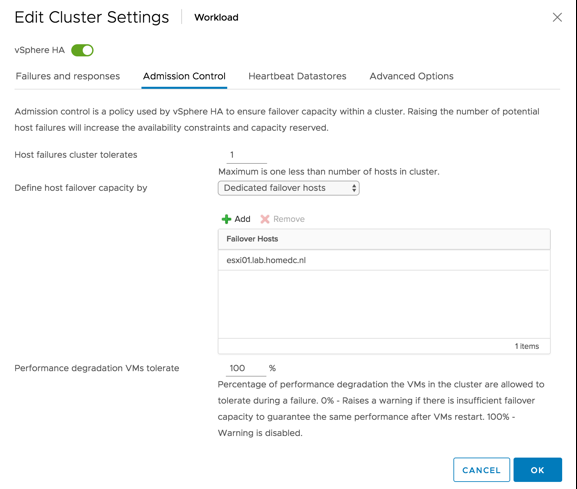

I’ve had this question over a dozen times now, so I figured I would add a quick pointer to my blog. What causes the error “vSphere HA agent on this host could not reach isolation address” to pop up on a 2-node Direct Connect vSAN cluster? The answer is simple: when vSAN is enabled, HA uses the vSAN network for communication. In a 2-node Direct Connect configuration the vSAN network is not connected to a switch, so the only reachable IP addresses on it are those of the vSAN VMkernel interfaces.

When HA tests whether the isolation address (by default, the default gateway of the management interface) is reachable, it does so over the vSAN network, and as a result the ping fails. You can solve this simply by disabling the isolation response, as described in this post here.
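
To make the reasoning concrete, here is a toy model in Python of why the check fails. It is only a sketch and not how HA is implemented: the IP addresses and the function names (`reachable_over_direct_connect`, `isolation_address_check`) are made up for illustration, and "reachable" is reduced to "is one of the endpoints on the back-to-back link".

```python
from ipaddress import ip_address

# Hypothetical addresses, chosen only for illustration.
VSAN_VMK_ADDRESSES = {ip_address("172.16.0.1"), ip_address("172.16.0.2")}
MGMT_DEFAULT_GATEWAY = ip_address("192.168.1.1")


def reachable_over_direct_connect(target, endpoints=VSAN_VMK_ADDRESSES):
    """Without a switch, only the vSAN VMkernel IPs on the link can answer."""
    return ip_address(str(target)) in endpoints


def isolation_address_check(isolation_address):
    """Simplified stand-in for the ping HA sends over the vSAN network."""
    if reachable_over_direct_connect(isolation_address):
        return "reachable"
    return "could not reach isolation address"


if __name__ == "__main__":
    # The default isolation address is the management gateway,
    # which does not exist on the direct connect link.
    print(isolation_address_check(MGMT_DEFAULT_GATEWAY))
```

In this model the other node's vSAN VMkernel address would answer, but the management default gateway never can, which is why the check fails.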