This week I received some questions on the topic of HA Admission Control. A customer had a cluster configured with the dedicated failover host admission control policy and they had no clue why. This cluster had been around for a while and had been configured by a different admin, who had since left the company. As they upgraded the environment they noticed that it was configured with an admission control policy they never used anywhere else, but why? Well, of course, the design was documented, but no one had documented the design decision, so that didn’t really help. So they came to me and asked what exactly it does and why you would use it.

Let’s start with that last question: why would you use it? Normally you would not, and you should not. Forget about it, unless you have a specific use case, which I will discuss later. So what does it do?

When you designate hosts as failover hosts, they will not participate in DRS and you will not be able to run VMs on these hosts! Not even in a two-host cluster when placing one of the two in maintenance mode. These hosts are literally reserved for failover situations. HA will attempt to use these hosts first to fail over the VMs. If, for whatever reason, this is unsuccessful, it will attempt a failover on any of the other hosts in the cluster. For example, when two hosts fail, including the host designated as the failover host, HA will still try to restart the impacted VMs on the host that is left. Although this host was not a designated failover host, HA will use it to limit downtime.
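To make that ordering concrete, here is a minimal Python sketch of the "failover hosts first, then anything else" behavior described above. It is a toy model, not how HA is implemented: the `Host` class, the single `free_mb` capacity number, and the `pick_restart_host` function are all hypothetical names made up for this illustration.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    is_failover_host: bool  # designated in the admission control policy
    is_alive: bool
    free_mb: int            # hypothetical single "capacity" number


def pick_restart_host(hosts, vm_mb):
    """Return the name of the host a VM would be restarted on, or None."""
    alive = [h for h in hosts if h.is_alive]
    # Designated failover hosts are tried first, then every other host.
    ordered = ([h for h in alive if h.is_failover_host]
               + [h for h in alive if not h.is_failover_host])
    for host in ordered:
        if host.free_mb >= vm_mb:
            host.free_mb -= vm_mb
            return host.name
    return None


hosts = [
    Host("esx01", is_failover_host=False, is_alive=False, free_mb=0),
    Host("esx02", is_failover_host=False, is_alive=True, free_mb=8192),
    Host("esx03", is_failover_host=True, is_alive=True, free_mb=65536),
]
print(pick_restart_host(hosts, 4096))  # the designated failover host is used

hosts[2].is_alive = False              # now the failover host fails as well
print(pick_restart_host(hosts, 4096))  # falls back to the remaining host
```

The second call shows the fallback: with the failover host gone, the VM still lands on the one host that is left.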

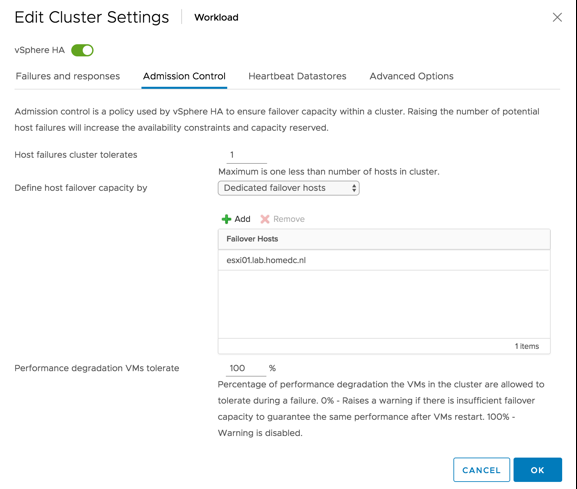

As can be seen above, you select the correct admission control policy and then add the hosts. As mentioned earlier, the hosts added to this list will not be considered by DRS at all. This means that the resources go to waste unless there’s a failure. So why would you use it?

- If you need to know where a VM runs all the time, this admission control policy dictates where the restart happens.

- There is no resource fragmentation, as a full host's worth of resources (or multiple hosts' worth) will be available to restart VMs on, instead of one host's worth of resources spread across multiple hosts.

In some cases, the above may be very useful. Knowing where a VM is at all times could be required for regulatory compliance, or could be needed for licensing reasons, for instance when you run Oracle.
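The fragmentation argument is easy to show with a bit of arithmetic. The sketch below is a simplified illustration, assuming memory is the only constraint and using a plain first-fit placement that is my own stand-in, not the algorithm HA uses. The function name `can_restart_all` and the VM sizes are made up for the example.

```python
def can_restart_all(free_gb_per_host, vm_sizes_gb):
    """First-fit check (largest VM first): can every VM be placed on some host?"""
    free = list(free_gb_per_host)
    for vm in sorted(vm_sizes_gb, reverse=True):
        for i, capacity in enumerate(free):
            if capacity >= vm:
                free[i] -= vm
                break
        else:
            # No single host has enough room left for this VM.
            return False
    return True


vms = [24, 16, 16, 8]  # 64 GB of VMs to restart

# One dedicated failover host with 64 GB free.
print(can_restart_all([64], vms))

# The same 64 GB of spare capacity, but spread across four hosts.
print(can_restart_all([16, 16, 16, 16], vms))
```

Both clusters have the same total spare capacity, yet only the first can place the 24 GB VM, because no single host in the second cluster has 24 GB free.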

And what if you are not using reservations for VMs at all? Is it good to use admission control based on %?

I would almost always use the Percentage Based option, and if you have 6.5 or higher I would combine it with the “performance degradation tolerated” feature as well, as this ensures you have similar performance after a failure has occurred.

But for the Percentage Based option to work you need to use reservations on VMs, which also lowers ROI. I have seen a lot of people using Percentage Based admission control without setting up reservations on VMs, thinking they are safe.

That was the point I was alluding to: using % admission control without reservations on VMs doesn’t provide protection, IMHO. Performance degradation tolerated is just a warning, which will not stop an admin from powering on more VMs. On the other hand, it’s been said that reservations should be avoided whenever possible if not explicitly required, e.g. when there are enough resources.
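The concern raised in the comments above can be illustrated with a deliberately simplified model. The sketch below assumes admission control only looks at CPU reservations; the real calculation also accounts for things like memory overhead, which are left out here. The function name `admits_power_on` and all numbers are hypothetical.

```python
def admits_power_on(cluster_mhz, reserved_failover_pct, reservations_mhz, new_vm_mhz):
    """Simplified check: would powering on one more VM keep enough
    unreserved CPU capacity free for failover?"""
    reserved = sum(reservations_mhz) + new_vm_mhz
    free_pct = 100 * (cluster_mhz - reserved) / cluster_mhz
    return free_pct >= reserved_failover_pct


cluster_mhz = 100_000  # hypothetical cluster capacity

# 30 VMs with a 2,500 MHz reservation each: the 31st power-on is refused.
print(admits_power_on(cluster_mhz, 25, [2_500] * 30, 2_500))

# 500 VMs without reservations: nothing is counted, so it is still admitted.
print(admits_power_on(cluster_mhz, 25, [0] * 500, 0))
```

In this model, a cluster full of VMs without reservations always looks empty to admission control, which is exactly why the percentage alone says little about performance after a failure.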

Moreover, some sources say that we should avoid using reservations if not necessary. On the other hand, admission control based on % or slots relies on reservations, which might not provide the expected results in case of a failover. So should we use % in that case?

Hello Duncan, you said everything! No fragmentation. Nice post! Dedicated failover hosts is the most robust admission control policy ever, but it requires solid requirements to justify it, because the decrease in ROI is huge! For sure it can be called old-school active-passive, and it must remain available in future releases.

That is good feedback. Do you use the policy itself anywhere? And what if we provide a new option where we can guarantee VMs can be powered on and get similar performance after a failure?

Never had a production use case for the dedicated failover hosts policy, Duncan! Having a new admission control policy that is able to forecast cluster resources to guarantee similar performance would be revolutionary! If this function can run on ESXi and be independent of vCenter, it will rock! Maybe the master node can handle this work 😉

Good to see you back from vacation. It’s a nice post.

Just want to add: If we use dedicated failover hosts admission control and designate multiple failover hosts, DRS does not attempt to enforce VM-VM affinity rules for virtual machines that are running on failover hosts.

We had a customer whose maintenance support contracts lapsed for a number of hosts. While getting that straightened out, we designated those hosts as HA failover hosts so they were available in case of emergency, but were not running workloads during normal operations.

I’ve used it for a production SQL cluster: a VMware cluster where you are licensing Microsoft SQL Server at the host core level. If you have a dedicated standby host that has no active VMs on it, you do not have to license its cores for SQL Server, but it can provide the vSphere HA recoverability needed to satisfy N+1. I checked with our VAR and their assessment was that this was OK from a Microsoft licensing standpoint.