Two weeks ago I tweeted about All-Flash HCI taking over fast. Maybe I should have said All-Flash vSAN, as I am not sure every vendor is seeing the same trend. The reason, of course, is that the price of flash keeps dropping while capacity goes up. At the same time, with vSAN 6.5 we introduced “all-flash for everyone” by moving the “all-flash” license option down to vSAN Standard.

I love getting these emails about huge vSAN environments… this week alone 900TB and 2PB raw capacity in a single all-flash vSAN cluster

— Duncan Epping (@DuncanYB) November 10, 2016

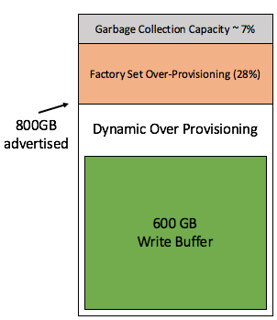

So the question naturally came: can you share what these customers are deploying and using? I shared those details later via tweets, but I figured it would make sense to share them here as well. When it comes to vSAN there are two layers of flash: one for capacity and the other for caching (a write buffer, to be more precise). For the write buffer I am starting to see a trend: the 800GB and 1600GB NVMe devices are becoming more and more popular. Write-intensive SAS-connected SSDs are also often used. Which one you pick largely depends on budget, I guess, but needless to say NVMe has my preference when budget allows for it.

For the capacity tier there are many different options; most people I talk to are looking at the read-intensive 1.92TB and 3.84TB SSDs. SAS-connected devices are a typical choice for these environments, but they do come at a price. The SATA-connected S3510 1.6TB (available at under 1 euro per GB even) seems to be a choice many people with a tighter budget make, as these devices are relatively cheap compared to the SAS-connected devices. The downside is the shallow queue depth, but if you are planning on having multiple devices per server then this probably isn’t a problem. (Something I would like to see at some point is a comparison between SAS and SATA-connected drives with similar performance capabilities, under real-life workloads, to see if there actually is an impact.)
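To make the "multiple devices per server" argument a bit more concrete, here is a minimal back-of-the-envelope sketch. The queue depth values (32 for SATA, 254 for SAS) are common figures I am assuming here, not numbers from any specific device datasheet, and the host and device counts are purely hypothetical. It also ignores the controller's own queue depth, which can cap the total in practice.

```python
from dataclasses import dataclass


@dataclass
class CapacityDevice:
    name: str
    size_gb: int       # raw capacity per device, in GB
    queue_depth: int   # per-device queue depth (assumed, not from a datasheet)


def per_host_totals(device: CapacityDevice, devices_per_host: int) -> tuple[int, int]:
    """Return (raw GB, summed device queue depth) for a single host."""
    raw_gb = device.size_gb * devices_per_host
    total_queue_depth = device.queue_depth * devices_per_host
    return raw_gb, total_queue_depth


def cluster_raw_gb(device: CapacityDevice, devices_per_host: int, hosts: int) -> int:
    """Raw capacity-tier size of the whole cluster, in GB."""
    return device.size_gb * devices_per_host * hosts


# Hypothetical device definitions; the queue depths are assumptions.
sata = CapacityDevice("SATA 1.6TB", size_gb=1600, queue_depth=32)
sas = CapacityDevice("SAS 1.92TB", size_gb=1920, queue_depth=254)

if __name__ == "__main__":
    for dev in (sata, sas):
        raw_gb, qd = per_host_totals(dev, devices_per_host=8)
        print(f"{dev.name}: {raw_gb} GB raw per host, summed queue depth {qd}")
    print(f"32 hosts of SATA: {cluster_raw_gb(sata, 8, 32)} GB raw")
```

With these assumed numbers, eight SATA devices in a host add up to roughly the same number of outstanding commands as a single SAS device, which is the intuition behind spreading the capacity tier over multiple devices. Whether that translates into comparable real-life performance is exactly the comparison I would still like to see.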

With prices still coming down and capacity still going up, it will be interesting to see how the market shifts in the upcoming 12-18 months. If you ask me, hybrid is almost dead. Of course there are still situations where it may make sense (low $ per GB requirements), but in most cases all-flash just makes more sense these days.

I would be interested in hearing from you as well: if you are doing all-flash HCI/vSAN, what are the specs, and why are you selecting specific devices/controllers/types?

I was driving back home from Germany on the autobahn this week, thinking about 5-6 conversations I have had over the past couple of weeks about performance tests for HCI systems. (Hence the pic on the right side being very appropriate ;-)) What stood out during these conversations is that many folks repeat the tests they once conducted on their legacy array and then compare the results 1:1 to their HCI system. Fairly often people even use a legacy tool like Atto disk benchmark. Atto is a great tool for testing the speed of the drive in your laptop, or maybe even a RAID configuration, but the name already more or less reveals its limitation: “disk benchmark”. It wasn’t designed to show the capabilities and strengths of a distributed / hyper-converged platform.

I get this question on a regular basis, and although it has been explained many, many times, I figured I would dedicate a blog to it. Now, Cormac has written a very lengthy blog on the topic and I am not going to repeat it, I will
