Yes, finally… the Virtual SAN 6.2 release has just been announced. Needless to say, I am very excited about this release. This is the release that I have personally been waiting for. Why? Well, I think the list of new functionality will make that obvious. There are a couple of clear themes in this release, and I think it is fair to say that data services / data efficiency is the most important one. Let's take a look at the list of what is new first and then discuss the items one by one.

- Deduplication and Compression

- RAID-5/6 (Erasure Coding)

- Sparse Swap Files

- Checksum / disk scrubbing

- Quality of Service / Limits

- In-memory read caching

- Integrated Performance Metrics

- Enhanced Health Service

- Application support

That is indeed a good list of new functionality, just 6 months after the previous release that brought you Stretched Clustering, 2-node ROBO, etc. I've already discussed some of these as part of the Beta announcements, but let's go over them one by one so we have all the details in one place. By the way, there is also an official VMware paper available here.

Deduplication and Compression has probably been the number one customer feature request for Virtual SAN since version 1.0. Deduplication and Compression can be enabled on all-flash configurations only. The two always go hand-in-hand and are enabled at the cluster level. Note that Deduplication and Compression are referred to as nearline dedupe / compression, which basically means that deduplication and compression happen during destaging from the caching tier to the capacity tier.

Now let's dig a bit deeper. Deduplication happens first, at a 4KB granularity, and is then followed by an attempt to compress the unique block. This block will only be stored compressed when it can be compressed down to 2KB or smaller. The domain for deduplication is the disk group in each host. Of course the question then remains: what kind of space savings can be expected? The answer is: it depends. Our environments and testing have shown space savings between 2x and 7x, where 7x is full clone desktops (the optimal situation) and 2x is a SQL database. In other words, results will depend on your workload.
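To make the order of operations concrete, here is a toy model of one disk group destaging data: fingerprint each 4KB block, store only unique blocks, and keep a compressed copy only when it fits in 2KB. This is a minimal sketch, not how VSAN is implemented. The `DiskGroup` class is hypothetical, and SHA-1 (for fingerprints) and zlib (for compression) are assumptions chosen only because they ship with Python.

```python
import hashlib
import zlib

BLOCK_SIZE = 4096          # deduplication granularity (4KB)
COMPRESS_THRESHOLD = 2048  # keep the compressed form only if it is 2KB or smaller


class DiskGroup:
    """Hypothetical toy model of one disk group, the deduplication domain."""

    def __init__(self):
        self.store = {}  # fingerprint -> (is_compressed, payload)
        self.refs = {}   # fingerprint -> number of logical references

    def destage(self, data: bytes) -> list:
        """Destage data in 4KB blocks; return one fingerprint per logical block."""
        fingerprints = []
        for offset in range(0, len(data), BLOCK_SIZE):
            block = data[offset:offset + BLOCK_SIZE]
            fp = hashlib.sha1(block).hexdigest()  # assumption: SHA-1 fingerprint
            if fp not in self.store:
                # Dedup first; only a unique block gets a compression attempt.
                compressed = zlib.compress(block)  # assumption: zlib as the codec
                if len(compressed) <= COMPRESS_THRESHOLD:
                    self.store[fp] = (True, compressed)
                else:
                    self.store[fp] = (False, block)
            self.refs[fp] = self.refs.get(fp, 0) + 1
            fingerprints.append(fp)
        return fingerprints

    def read(self, fp: str) -> bytes:
        is_compressed, payload = self.store[fp]
        return zlib.decompress(payload) if is_compressed else payload

    def physical_bytes(self) -> int:
        return sum(len(payload) for _, payload in self.store.values())
```

Writing two identical, highly compressible 4KB blocks followed by one incompressible block leaves two physical entries: one small compressed block referenced twice, and one block stored uncompressed because its compressed form does not fit in 2KB.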

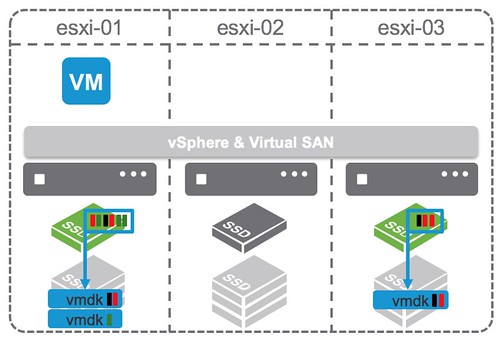

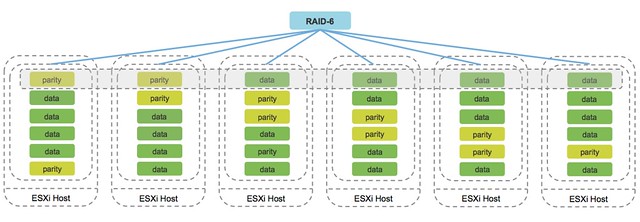

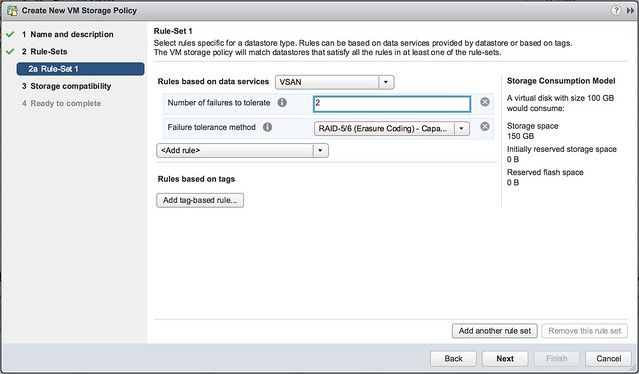

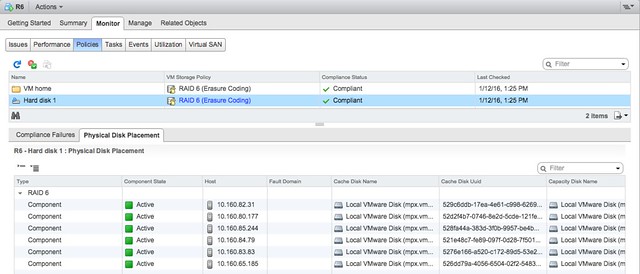

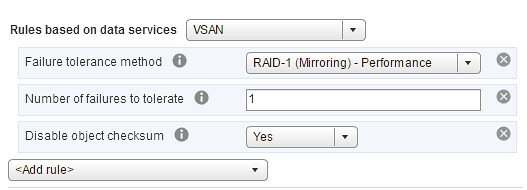

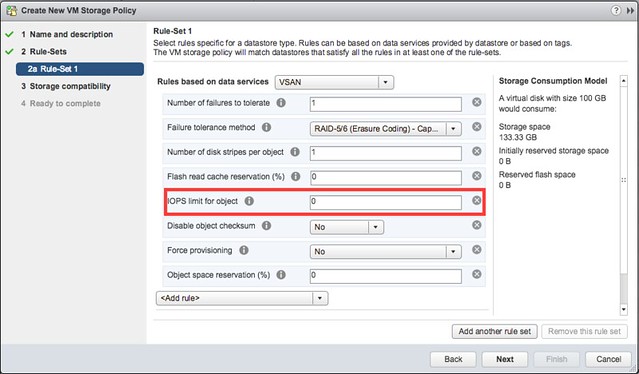

Next on the list is RAID-5/6, or Erasure Coding as it is also referred to. In the UI, by the way, this is configured through VM Storage Policies by defining the "Fault Tolerance Method" (FTM). When you configure this you have two options: RAID-1 (Mirroring) and RAID-5/6 (Erasure Coding). When RAID-5/6 is selected, the layout depends on how FTT (failures to tolerate) is configured: you end up with a 3+1 (RAID-5) configuration for FTT=1 and a 4+2 (RAID-6) configuration for FTT=2.

Note that "3+1" means you will have 3 data blocks and 1 parity block; in the case of 4+2 this means 4 data blocks and 2 parity blocks. Note again that this functionality is only available for all-flash configurations. There is a huge benefit to using it, by the way:

Let's take the example of a 100GB disk:

- 100GB disk with FTT=1 & FTM=RAID-1 set –> 200GB disk space needed

- 100GB disk with FTT=1 & FTM=RAID-5/6 set –> 133.33GB disk space needed

- 100GB disk with FTT=2 & FTM=RAID-1 set –> 300GB disk space needed

- 100GB disk with FTT=2 & FTM=RAID-5/6 set –> 150GB disk space needed
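The numbers above follow from simple arithmetic: RAID-1 stores FTT+1 full copies, while RAID-5/6 stores (data + parity) / data times the original size. A minimal sketch, assuming only the 3+1 and 4+2 layouts described here and ignoring witness components and on-disk metadata; the function name is hypothetical.

```python
def raw_capacity_gb(size_gb: float, ftt: int, ftm: str) -> float:
    """Raw capacity consumed by a virtual disk (witnesses and metadata ignored)."""
    if ftm == "RAID-1":
        # Mirroring keeps FTT+1 full copies of the data.
        return size_gb * (ftt + 1)
    if ftm == "RAID-5/6":
        layouts = {1: (3, 1), 2: (4, 2)}  # FTT -> (data blocks, parity blocks)
        if ftt not in layouts:
            raise ValueError("RAID-5/6 supports FTT=1 or FTT=2 only")
        data, parity = layouts[ftt]
        return size_gb * (data + parity) / data
    raise ValueError(f"unknown fault tolerance method: {ftm}")


for ftt, ftm in [(1, "RAID-1"), (1, "RAID-5/6"), (2, "RAID-1"), (2, "RAID-5/6")]:
    print(f"FTT={ftt}, FTM={ftm}: {raw_capacity_gb(100, ftt, ftm):.2f}GB")
```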

As demonstrated, the space savings are enormous; especially with FTT=2, the 2x savings can and will make a big difference. That said, do note that the minimum number of hosts required also changes: 4 for RAID-5 (remember 3+1) and 6 for RAID-6 (remember 4+2). The following two screenshots demonstrate how easy it is to configure and what the layout of the data looks like in the Web Client.
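Why does a 3+1 layout need four hosts and survive one failure? Each of the four components sits on a different host, and any one missing component can be recomputed from the other three. The sketch below uses textbook single-parity XOR to illustrate the RAID-5 case; it is an assumption for illustration, not a description of VSAN's on-disk encoding, and RAID-6's second parity block needs a different code that is not shown here.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


def raid5_stripe(data_blocks):
    """Build a 3+1 stripe: three data components plus one parity component."""
    if len(data_blocks) != 3:
        raise ValueError("a 3+1 stripe needs exactly three data blocks")
    return list(data_blocks) + [xor_blocks(data_blocks)]


def rebuild(stripe, lost_index):
    """Recompute the component at lost_index from the three survivors."""
    survivors = [block for i, block in enumerate(stripe) if i != lost_index]
    return xor_blocks(survivors)


stripe = raid5_stripe([b"host", b"data", b"here"])
# Losing any single host (data or parity) is recoverable.
print(all(rebuild(stripe, i) == stripe[i] for i in range(4)))
```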

Sparse Swap Files is a new feature that can only be enabled through an advanced setting. It is one of those features that is a direct result of a customer feature request for cost optimization. As most of you hopefully know, when you create a VM with 4GB of memory, a 4GB swap file is created on a datastore at the same time. This ensures memory pages can be assigned to that VM even when you are overcommitting and there is no physical memory available. With VSAN this file is created "thick", at 100% of the memory size. In other words, a 4GB swap file will take up 4GB which can't be used by any other object/component on the VSAN datastore. When you have a handful of VMs there is nothing to worry about, but if you have thousands of VMs this adds up quickly. By setting the advanced host setting "SwapThickProvisionDisabled" the swap file will be provisioned thin and disk space will only be claimed when the swap file is consumed. Needless to say, we only recommend using this when you are not overcommitting on memory. Having no space for swap while needing to write to swap wouldn't make your workloads happy.
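A quick way to see why this adds up: thick swap claims the full configured memory of every VM, while thin swap claims only what has actually been swapped out. This minimal sketch uses a hypothetical helper; it works with logical sizes only, ignores memory reservations (which shrink the swap file), and does not model the extra copies created by the swap object's FTT policy.

```python
GiB = 1024 ** 3


def swap_space_claimed(vms, thin: bool) -> int:
    """vms: iterable of (configured_memory_bytes, swapped_bytes) per VM."""
    total = 0
    for memory, swapped in vms:
        if swapped > memory:
            raise ValueError("a VM cannot swap more than its configured memory")
        # Thick: 100% of memory claimed up front. Thin: only what is consumed.
        total += swapped if thin else memory
    return total


fleet = [(4 * GiB, 0)] * 1000  # 1000 VMs with 4GB each, none swapping
print(swap_space_claimed(fleet, thin=False) // GiB, "GiB claimed thick")
print(swap_space_claimed(fleet, thin=True) // GiB, "GiB claimed thin")
```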

Next up is the Checksum / disk scrubbing functionality. As of VSAN 6.2, a 5-byte checksum is calculated for every 4KB write and stored separately from the data. Note that this happens even before the write reaches the caching tier, so even SSD corruption would not impact data integrity. On a read, the checksum is of course validated, and if there is a checksum error it will be corrected automatically. Also, to ensure that stale data does not decay over time in any shape or form, there is a disk scrubbing process which reads the blocks and corrects them when needed. Intel crc32c is leveraged to optimize the checksum process. Note that checksumming is enabled by default for ALL virtual machines as of this release, but if desired it can be disabled through policy for VMs which do not require this functionality.
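The sketch below shows the idea: compute a CRC-32C per 4KB block at write time, keep the checksums apart from the data, and have a scrub pass re-read every block and flag mismatches. It is a minimal, pure-Python illustration under stated assumptions: it stores a plain 4-byte CRC (the 5-byte on-disk format is not modeled), it only detects corruption rather than repairing it from another copy, and the helper names are hypothetical. Hardware (Intel SSE4.2) computes the same CRC-32C far faster than this table-driven loop.

```python
BLOCK_SIZE = 4096
_POLY = 0x82F63B78  # reflected Castagnoli polynomial used by CRC-32C


def _build_table():
    table = []
    for i in range(256):
        crc = i
        for _ in range(8):
            crc = (crc >> 1) ^ _POLY if crc & 1 else crc >> 1
        table.append(crc)
    return table


_TABLE = _build_table()


def crc32c(data: bytes) -> int:
    """Table-driven CRC-32C."""
    crc = 0xFFFFFFFF
    for byte in data:
        crc = _TABLE[(crc ^ byte) & 0xFF] ^ (crc >> 8)
    return crc ^ 0xFFFFFFFF


def write_with_checksums(data: bytes):
    """Split data into 4KB blocks and keep one checksum per block, separately."""
    blocks = [bytearray(data[o:o + BLOCK_SIZE]) for o in range(0, len(data), BLOCK_SIZE)]
    checksums = [crc32c(bytes(block)) for block in blocks]
    return blocks, checksums


def scrub(blocks, checksums):
    """Re-read every block; return indices whose checksum no longer matches."""
    return [i for i, (block, crc) in enumerate(zip(blocks, checksums))
            if crc32c(bytes(block)) != crc]
```

Flipping a single byte in the second block of a three-block write makes `scrub` report index 1, which is the point where a real system would fetch a good copy and rewrite the block.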

Another big ask, primarily from service providers, was Quality of Service functionality. There are many aspects to QoS, but one of the major asks was definitely the capability to limit VMs or Virtual Disks to a certain number of IOPS through policy. This is simply to prevent a single VM from consuming all available resources of a host. One thing to note is that when you set a limit of 1000 IOPS, VSAN by default counts IOs in normalized 32KB blocks. This means that when pushing 64KB writes, the 1000 IOPS limit is effectively 500. When you are doing 4KB writes (or reads for that matter), however, each IO still counts as a full 32KB block, as this is a normalized value. Keep this in mind when setting the limit.
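The effect of the 32KB normalization on a limit can be worked out as follows. This is a minimal sketch with hypothetical helper names; rounding up for IO sizes that are not a multiple of 32KB (for example 48KB counting as 2) is an assumption, since only the 4KB and 64KB cases are described above.

```python
import math

NORMALIZED_IO_BYTES = 32 * 1024  # default normalization size


def normalized_ios(io_size_bytes: int) -> int:
    """How many normalized IOs one IO of this size counts as (rounding up assumed)."""
    if io_size_bytes <= 0:
        raise ValueError("IO size must be positive")
    return math.ceil(io_size_bytes / NORMALIZED_IO_BYTES)


def effective_iops(limit: int, io_size_bytes: int) -> int:
    """Real IOs per second a VM can issue at this IO size before hitting the limit."""
    return limit // normalized_ios(io_size_bytes)


print(effective_iops(1000, 64 * 1024))  # 64KB IOs count double
print(effective_iops(1000, 4 * 1024))   # 4KB IOs still count as one 32KB IO
```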

When it comes to caching there was also a nice "little" enhancement. As of 6.2, VSAN also has a small in-memory read cache. Small in this case means 0.4% of a host's memory capacity, up to a maximum of 1GB. Note that this in-memory cache is a client-side cache, meaning that the blocks of a VM are cached on the host where the VM is located.
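The sizing rule is a simple minimum of two values, which means the 1GB cap kicks in at roughly 250GB of host memory. A minimal sketch with a hypothetical function name; treating "1GB" as 1GiB is an assumption.

```python
GiB = 1024 ** 3
CACHE_PER_MILLE = 4        # 0.4% of host memory
CACHE_CAP_BYTES = 1 * GiB  # assumption: the 1GB cap is interpreted as 1GiB


def in_memory_read_cache_bytes(host_memory_bytes: int) -> int:
    """Per-host in-memory read cache size: 0.4% of memory, capped at 1GB."""
    return min(host_memory_bytes * CACHE_PER_MILLE // 1000, CACHE_CAP_BYTES)


for mem_gib in (128, 256, 512):
    size = in_memory_read_cache_bytes(mem_gib * GiB)
    print(f"{mem_gib}GiB host -> {size / GiB:.3f}GiB read cache")
```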

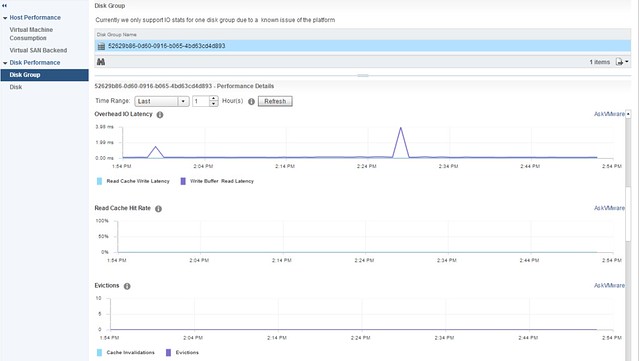

Besides all these great performance and efficiency enhancements, of course a lot of work has also been done around the operational aspects. As of VSAN 6.2 you as an admin no longer need to dive into VSAN Observer; you can just open up the Web Client to see all the VSAN performance statistics you want. It provides a great level of detail, ranging from how a cluster is behaving down to the individual disk. What I personally find very interesting about this performance monitoring solution is that all the data is stored on VSAN itself. When you enable the performance service you simply select the VSAN storage policy and you are set. All data is stored on VSAN and all the calculations are done by your hosts. Yes indeed, a distributed and decentralized performance monitoring solution, where the Web Client simply displays the data it is provided.

Of course all new functionality, where applicable, has health check tests. This is one of those things I got used to very quickly and already take for granted. The Health Check will make your life as an admin so much easier, not just through the regular tests but also through the proactive tests which you can run whenever you desire.

Last but not least, I want to call out the work that has been done around application support; I think the support for core SAP applications especially stands out!

If you ask me (though of course I am heavily biased), this release is the best release so far and contains all the functionality many of you have been asking for. I hope that you are as excited about it as I am, and will consider VSAN for new projects or when current storage is about to be replaced.

Thanks for another great post! One question regarding the all-flash config requirement for dedup/compression. I guess there are technical reasons for this? Or can I use a VSAN Advanced license and spinning disks?

No you cannot use a hybrid configuration and then use dedupe/compression. This has only been certified and tested for all-flash.

Hi,

Can you clarify something here? So, if we do a RAID-1 and have one copy on host 1 and one copy on host 2, and host 3 is hosting the witness file, what would happen if we lose host 2 and 3 at the same time?

Is the VM going to be orphaned since less than 50% of quorum is available or be smart enough to figure out there is enough data available to run it but without redundancy?

Also, any news on an HTML5-based web client? Sorry, but the Flash-based management for vSAN sucks.

Thank you

There are dozens of posts on VSAN basics that explain RAID-1 behaviour, just look here: vmwa.re/vsan

Great info and improvements, but it’s very disappointing that erasure coding is only supported for all-flash configurations. This was the new feature I was most looking forward to but I won’t actually be able to use it in my hybrid config.

Good news!!!

Wait…no… Dedup and Erasure Coding only for all flash configs?

I am not really happy with this decision, what is the technical reason not supporting it on Hybrid VSANs?

Duncan, is the RAID 6 option based on a Reed-Solomon (or equivalent) erasure code or a diagonal parity?

https://blogs.vmware.com/virtualblocks/2016/02/12/the-use-of-erasure-coding-in-virtual-san-6-2/

You mention “the support for core SAP applications” does that mean full HANA support and certification?

I was under the impression the swap was already thin provisioned by default in version 6.0. In the version 6.0 design document they say, “The virtual machine swap object also has its own default policy, which is to tolerate a single failure. It has a default stripe width value, is thinly provisioned, and has no read cache reservation.”

Can you explain how this is different in 6.2?

It is provisioned with 100% space reservation, that is what this advanced feature allows you to disable.

Great news for the minority who use all-flash and not so great news for the majority who don't. Kind of bummed, since I was hoping for this change to come to hybrid as well.

Some great work from you, and all the VSAN team! Looking forward to sampling these wares 🙂

How do fault domains work with erasure coding in both RAID5 and RAID6?

Regarding the in-memory read cache, is there any way to enable/disable it? Is it enabled by default?

Yes, as of 6.2 it is enabled by default. It uses 0.4% of host memory, up to a maximum of 1GB per host. Not sure why you would want to disable it, considering how little it consumes?

Hi Duncan, as I understood so far VSAN was architected with the design principle in mind that data locality (having host local read access) shouldn’t (and shall not) matter. From that perspective why has this principle been abandoned with v6.2? What were the reasons behind this decision?

Hi Duncan,

Has VMware produced any assessment methods for analyzing a workload as-is and assess the likely data reduction results on vSAN?

Best,

Magnus

I'm looking at working with vSAN for an internal lab prior to purchase, and I was wondering: if I were to install the VCSA, on what storage would I store it, given that I would need the VCSA installed before vSAN? I assume I would need some form of local or external storage, then Storage vMotion the VCSA to the vSAN datastore after configuration? What's best practice here, should I be leaving the VCSA on the vSAN datastore?

Thanks,