I was reviewing a document today and noticed something I’ve seen a couple of times already. I wrote about Active/Standby setups for EtherChannels a year ago, but this is a slightly different variant. Frank also wrote a more extensive article on it a while ago, and I just want to re-stress this.

Scenario:

- Two NICs

- 1 Etherchannel of 2 links

- Both Management and VMkernel traffic on the same switch

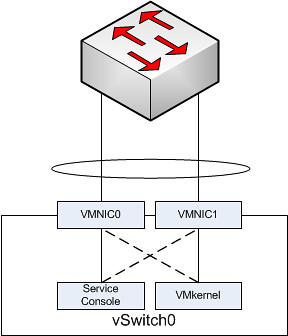

I created a simple diagram to depict this:

In the above scenario each “portgroup” is configured as active/standby. Take the Service Console, for example: it has VMNIC0 as active and VMNIC1 as standby. The physical switch, however, is configured with both NICs active in a single channel.

Because of the hashing algorithm EtherChannels use, the physical switch can send inbound traffic down either of the two VMNICs. The Service Console, however, will only send outbound traffic via VMNIC0. Even worse, the Service Console isn’t actively listening on VMNIC1 for incoming traffic, because it was placed in Standby mode. Standby mode means it will only be used when VMNIC0 fails. In other words, your physical switch will think it can use VMNIC1 for your Service Console, but your Service Console will not see the traffic coming in on VMNIC1 because it is configured in Standby mode on the vSwitch. Or, to quote from Frank’s article…

it will sit in a corner, lonely and depressed, wondering why nobody calls it anymore.
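To make the mismatch concrete, here is a minimal Python sketch. It assumes the physical switch picks a channel member with a simplified source/destination IP hash (XOR of the last octets, modulo the number of links). Real switch hash algorithms vary by platform and load-balancing setting. The vSwitch side only listens on the active uplink. All IP addresses are hypothetical.

```python
import ipaddress

def channel_link(src: str, dst: str, num_links: int) -> int:
    """Simplified src-dst IP hash: XOR of the last octets, modulo the link count.
    Illustrative assumption only; real switch hash algorithms vary by platform."""
    s = int(ipaddress.ip_address(src)) & 0xFF
    d = int(ipaddress.ip_address(dst)) & 0xFF
    return (s ^ d) % num_links

# Physical switch: both links bundled in a single channel.
channel = ["vmnic0", "vmnic1"]
# vSwitch: Service Console portgroup with vmnic0 active and vmnic1 standby.
sc_active = {"vmnic0"}

service_console_ip = "192.168.1.10"                  # hypothetical address
clients = [f"192.168.1.{n}" for n in range(20, 30)]  # hypothetical clients

unseen = []
for client in clients:
    # The switch chooses the inbound link for this client -> Service Console flow.
    link = channel[channel_link(client, service_console_ip, len(channel))]
    if link not in sc_active:
        # Arrives on the standby uplink, which the portgroup is not listening on.
        unseen.append(client)

print(f"{len(unseen)} of {len(clients)} client flows arrive on the standby uplink")
```

With these made-up addresses, every client with an odd last octet hashes to VMNIC1. That is half of the flows, and the Service Console never sees them.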

I’ve come across a similar scenario and would appreciate any feedback. Based on Duncan’s, Frank’s, and Ken Cline’s postings, I think the proper configuration is clear, but I thought I’d bounce it off the readers here…

Scenario:

+ 2 NICs (2 additional unused NICs are available)

+ 1 Etherchannel of 2 links (cross-stack using 2 3750s)

+ NFS VMkernel portgroup with IPHash

+ Storage targets have multiple IP addresses (aliases) for load balancing

Desire:

Keep the NFS setup as is and add iSCSI portgroups with load balancing, preferably with NMP and RR to utilize multiple TCP sessions and drive traffic down 2 paths. Maintain isolated storage vSwitches (IP storage portgroups only).
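A rough sketch of how Round Robin spreads I/O over two paths may help with the “NMP and RR” goal. It assumes the `VMW_PSP_RR` default of switching to the next path after 1,000 I/Os. The path names are hypothetical, and each one stands for a bound VMkernel port pinned to its own vmnic.

```python
from collections import Counter

def round_robin_paths(paths, total_ios, iops_limit=1000):
    """Yield the path used for each I/O, moving to the next path after every
    `iops_limit` I/Os (simplified model of NMP Round Robin path switching)."""
    for io in range(total_ios):
        yield paths[(io // iops_limit) % len(paths)]

# Hypothetical paths: one per bound VMkernel port, each pinned to its own vmnic.
paths = ["vmk1/vmnic2", "vmk2/vmnic3"]
usage = Counter(round_robin_paths(paths, total_ios=5000))
print(dict(usage))
```

With 5,000 I/Os the first path carries 3,000 and the second 2,000. Over a sustained workload both uplinks stay busy, which a single VMkernel port (one TCP session) would not achieve.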

Solution I’ve come up with:

Since multiple TCP sessions for iSCSI require creating a VMkernel portgroup for each vmnic on the vSwitch and binding each portgroup to only 1 vmnic, I don’t believe this would work well on a vSwitch set up for IPHash load balancing like the above scenario. The reason would be similar to Duncan’s postings on Active/Standby setups for EtherChannels, only this would be an Active/Unused setup for the iSCSI portgroups. Wouldn’t there be some iSCSI traffic sitting in a corner, lonely and depressed?

If IPHash is desired for NFS and multiple TCP sessions are desired for iSCSI in this scenario, I believe the correct solution would be to create a 2nd vSwitch for iSCSI. Set it up with the default port ID load balancing, with no EtherChannel on the switch stack, and dedicate the 2 unused NICs to that vSwitch. Then proceed with setting up iSCSI port binding, etc.

I’m aware there are multiple ways to effectively combine NFS and iSCSI on 1 vSwitch, but if IPHash/EC is desired for NFS and multiple TCP sessions are desired for iSCSI in a setup with a 3750 stack, aren’t 2 vSwitches, as described, required?
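Leif’s reasoning can be written down as a check. Each portgroup bound for iSCSI needs exactly one active uplink and no standby. Following the commenter’s concern, bound portgroups also should not sit on an IP hash / EtherChannel team, where the switch may deliver traffic to uplinks they leave unused. This is a plain-Python model with hypothetical portgroup, vmnic, and policy labels, not a vSphere API.

```python
from dataclasses import dataclass, field

@dataclass
class PortGroup:
    name: str
    active: list
    standby: list = field(default_factory=list)
    iscsi_bound: bool = False  # True if bound to the software iSCSI adapter

@dataclass
class VSwitch:
    name: str
    load_balancing: str  # "ip_hash" or "port_id" (hypothetical labels)
    portgroups: list

def port_binding_problems(vs: VSwitch) -> list:
    """List reasons this vSwitch is a poor fit for iSCSI port binding."""
    bound = [pg for pg in vs.portgroups if pg.iscsi_bound]
    problems = []
    if bound and vs.load_balancing == "ip_hash":
        # The commenter's concern: the physical channel may deliver traffic
        # on uplinks that a bound portgroup leaves unused.
        problems.append(f"{vs.name}: bound portgroups on an IP hash / EtherChannel team")
    for pg in bound:
        if len(pg.active) != 1 or pg.standby:
            problems.append(f"{pg.name}: needs exactly one active uplink and no standby")
    return problems

shared = VSwitch("vSwitch1", "ip_hash", [
    PortGroup("NFS", active=["vmnic0", "vmnic1"]),
    PortGroup("iSCSI-A", active=["vmnic0"], iscsi_bound=True),
    PortGroup("iSCSI-B", active=["vmnic1"], iscsi_bound=True),
])
dedicated = VSwitch("vSwitch2", "port_id", [
    PortGroup("iSCSI-A", active=["vmnic2"], iscsi_bound=True),
    PortGroup("iSCSI-B", active=["vmnic3"], iscsi_bound=True),
])
print(port_binding_problems(shared))
print(port_binding_problems(dedicated))
```

The shared vSwitch is flagged, and the dedicated second vSwitch with port ID load balancing comes back clean. This matches the two-vSwitch answer given below.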

Duncan – Correct me if I’m wrong, but couldn’t you fix the above scenario by just not creating the EtherChannel? You could have two uplinks from the virtual switch and put the physical switch ports you are connecting to in trunk mode, assuming you are running SC and VMkernel on different VLANs per best practice.

This would give you two uplinks, both active, one carrying one VLAN (SC) and the other carrying the other VLAN (VMkernel). They would fail over to each other as needed, and you would set failback to Yes to make sure traffic moves back when the link comes back.

Would that be the best solution to this scenario? Sure, you are limited to 1Gb per link (assuming you are on 1Gb links), but it prevents the above scenario.

Thanks!
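Aaron’s alternative amounts to an explicit failover order per portgroup: each VLAN prefers a different uplink and keeps the other as standby. The sketch below is a simplified model of that behavior, including the failback setting. The VLAN-to-vmnic mapping is hypothetical, and this is not how ESX implements it internally.

```python
def select_uplink(failover_order, link_up, current=None, failback=True):
    """Pick the uplink a portgroup uses under an explicit failover order.

    failover_order: uplinks in priority order (first = active, rest = standby).
    link_up: dict mapping uplink name -> link state (True = up).
    current: uplink in use before this evaluation (only matters if failback=False).
    """
    healthy = [nic for nic in failover_order if link_up[nic]]
    if not healthy:
        return None
    if not failback and current in healthy:
        # Without failback, stay on the current uplink while it remains healthy.
        return current
    # With failback, always return to the highest-priority healthy uplink.
    return healthy[0]

# Hypothetical layout: SC prefers vmnic0, VMkernel prefers vmnic1.
order = {"SC": ["vmnic0", "vmnic1"], "VMkernel": ["vmnic1", "vmnic0"]}

links = {"vmnic0": True, "vmnic1": True}
print({pg: select_uplink(o, links) for pg, o in order.items()})
links["vmnic0"] = False  # vmnic0 fails: SC moves over to vmnic1
print({pg: select_uplink(o, links) for pg, o in order.items()})
links["vmnic0"] = True   # link restored: with failback, SC returns to vmnic0
print({pg: select_uplink(o, links) for pg, o in order.items()})
```

Because each portgroup uses only one uplink at a time and the physical ports are plain trunks, no inbound traffic can land on an uplink the portgroup is ignoring.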

@Aaron: Correct. I guess the reason people do this is simply that they misunderstand the concept.

@Leif: The best way would indeed be to create multiple vSwitches. Not only will you avoid the issues mentioned above, but from an Ops perspective it also makes it clearer which NICs are doing what. 2 vSwitches is most definitely the way to go in a scenario like that.

I agree with Aaron. I don’t think an IP Hash configuration with only one active adapter makes any sense at all. A normal active/standby configuration is good enough in this situation, I think…

It doesn’t, and you understand why, but some don’t. During a health check I have also seen this setup with 3 links, where 2 were active and 1 wasn’t.
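The misconfigurations in this exchange can be flagged mechanically: an IP hash team with only one active adapter, or with some channel members left standby or unused. The sketch below uses hypothetical vmnic names. Its rules are simplified from the discussion here, not taken from a VMware tool, and it assumes every listed uplink is a member of the same physical channel.

```python
def ip_hash_warnings(active, standby=(), unused=()):
    """Flag teaming choices that contradict an IP hash / EtherChannel setup.
    Assumes every listed uplink is a member of the same physical channel.
    Simplified rules for illustration, not an official VMware check."""
    warnings = []
    if len(active) < 2:
        warnings.append("fewer than two active uplinks: nothing for IP hash to balance across")
    for state, nics in (("standby", standby), ("unused", unused)):
        if nics:
            # The switch hashes over all channel members, including these.
            warnings.append(f"{state} uplinks {list(nics)}: the switch may still send traffic to them")
    return warnings

# The health-check case: three links in the channel, two active and one not.
print(ip_hash_warnings(active=["vmnic0", "vmnic1"], standby=["vmnic2"]))
# A single active adapter with IP hash, as discussed above.
print(ip_hash_warnings(active=["vmnic0"], standby=["vmnic1"]))
```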

Help me with this please. Am I overthinking this?

Goal: As much redundancy as I can get plus performance.

The host has 2 4-port NICs, for a total of 8 ports.

Connect host to 2 different Cisco switches. Cisco switches are not stacked or grouped as a single logical switch.

vSwitch0 uses 2 ports from each nic (vmnic0,1,4,5).

2 ports on nic1 (vmnic0,1) use etherchannel to Cisco1 for performance

2 ports on nic2 (vmnic4,5) use etherchannel to Cisco2 for redundancy

vSwitch0 has all 4 nics Active.

Portgroup1 (vlan 300) has (0,1) active and (4,5) standby.

Portgroup2 (vlan 300) has (4,5) active and (0,1) standby.

This allows me to split hosts between portgroups for maximum bandwidth utilization plus redundancy. If I lose either a switch or a NIC, I am covered. If I lose a single port, though, I have traffic problems, because neither Cisco nor VMware allows me to automatically shut down the matching port in the EtherChannel and fail both ports over to the other channel. A single port failure causes the traffic to be split between the 2 EtherChannels on 2 different switches, and that does not work well.

Is there a perfect option or a better tweak that I am missing? Is there anything wrong that I haven’t found yet?

OK, I should have followed the above link to the previous article. I see that there is still no good solution.
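The single-port failure described in the question above can be modelled roughly as follows. The model assumes the vSwitch promotes one healthy standby uplink, in failover order, for each failed active uplink. The vmnic-to-switch mapping comes from the comment; the promotion rule is a simplification.

```python
def active_set(active, standby, link_up):
    """Return the uplinks carrying traffic: surviving actives, topped up with
    healthy standbys (in order) until the original active count is restored."""
    result = [nic for nic in active if link_up[nic]]
    for nic in standby:
        if len(result) >= len(active):
            break
        if link_up[nic]:
            result.append(nic)
    return result

# Mapping from the comment: vmnic0/1 channel to Cisco1, vmnic4/5 to Cisco2.
switch_of = {"vmnic0": "Cisco1", "vmnic1": "Cisco1", "vmnic4": "Cisco2", "vmnic5": "Cisco2"}
links = dict.fromkeys(switch_of, True)

links["vmnic0"] = False  # a single port failure in Cisco1's channel
pg1 = active_set(["vmnic0", "vmnic1"], ["vmnic4", "vmnic5"], links)
print(pg1, sorted({switch_of[nic] for nic in pg1}))
```

Portgroup1 ends up hashing across vmnic1 and vmnic4, one member on each of two unstacked switches. The same source MAC then shows up on both switches, which is the MAC flapping mentioned in the reply.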

Don’t go there; it will cause MAC flapping and your switches will literally go nuts. Why would you want to use EtherChannels in the first place? What type of traffic is this for? If it is pure VM traffic, do you have VMs that require more than 1Gb?

Hi all, great post. I have a related question:

I have 1 vSwitch with VMs (4 portgroups on different VLANs) and the SC on it (other vSwitches handle iSCSI and vMotion). This vSwitch has four 1Gb NICs (spread between cards etc. as you described above). What I would like to do is use two of the 4 NICs as standby NICs so I can cable them into a second LAN switch in case of switch failure (actually power failure to the main LAN switch, but that is another story!).

My plan was to move two of the NICs to standby status, cable them into the second physical switch (HP), trunk the two ports and tag the VLANs, so that if power drops to the main physical switch, the standby NICs would light up and everything would keep working through the second physical switch.

When I went to move two of the four active NICs to standby, I got an error saying “The IP Hash based Load Balancing does not support Standby uplink physical adapters. Change all standby uplinks to active status.”

I need IP Hash load balancing to play nicely with my inbound trunks on the HP switches, but I also want standby NICs for my second switch.

Would it be advisable to have two NICs trunked to pswitch1, two NICs trunked to pswitch2, and all four NICs active? The two pswitches are connected by a two-port LACP trunk using 1Gb ports. I figured there might be issues…

Any advice on either how to achieve what I want, or why I can’t achieve this? Perhaps a different load-balancing policy, if the ProCurves will let me?

Thanks and keep up the great blogs!

I just found a thread on VMware Communities that covers my exact question:

http://communities.vmware.com/thread/279965?tstart=30
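The four-NIC layout asked about above can be checked with one rule: every active uplink in an IP hash team must terminate on the same logical switch. That means a single switch, or a stack presenting one channel like the cross-stack 3750 setup earlier in the thread. The sketch below uses the switch names from the question. The vmnic names and the `stacks` parameter are hypothetical.

```python
def single_logical_switch(active, switch_of, stacks=()):
    """True if all active uplinks land on one switch or on one stack.
    stacks: iterable of sets of switch names that act as one logical switch."""
    switches = {switch_of[nic] for nic in active}
    if len(switches) == 1:
        return True
    return any(switches <= set(stack) for stack in stacks)

# Hypothetical vmnic names; switch names from the question.
switch_of = {"vmnic0": "pswitch1", "vmnic1": "pswitch1",
             "vmnic2": "pswitch2", "vmnic3": "pswitch2"}
# An LACP trunk between pswitch1 and pswitch2 does not make them one
# logical switch, so no stack is declared here.
print(single_logical_switch(["vmnic0", "vmnic1", "vmnic2", "vmnic3"], switch_of))
```

This prints `False` for the proposed layout. Two NICs on a single switch, or all four on a genuinely stacked pair, would pass.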