I just noticed that the link for the VROps VSAN Content Pack has changed, and it isn’t easy to find through Google either. I figured I would post it quickly so that it at least gets indexed and is easier to find. I am also including the LogInsight VSAN content pack link for your convenience:

- LogInsight VSAN content pack: https://solutionexchange.vmware.com/store/products/vmware-vsan

- VROps VSAN content pack: https://solutionexchange.vmware.com/store/products/vrealize-operations-management-pack-for-storage-devices

This week I had the pleasure to have a chat with  for the capacity tier. It all makes sense to me. For current projects, NVMe-based flash by Intel is being explored, and I am very curious to see what Marc’s experience will be in terms of performance, reliability and the operational aspects compared to Fusion-IO.

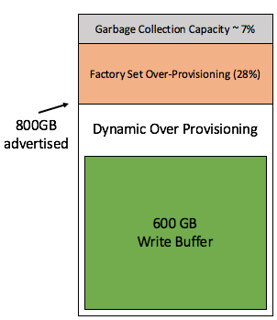

I get this question on a regular basis, and although it has been explained many, many times, I figured I would dedicate a blog post to it. Now, Cormac has written a very lengthy blog on the topic and I am not going to repeat it, I will