I got the question today whether you can use a custom TCP/IP stack for the vSAN network, and it is not the first time I have been asked. It usually comes from customers with a stretched cluster, as a stretched cluster forces them to define static routes in an L3 network. Especially with a large number of hosts this is cumbersome and error prone, unless you have everything automated end to end, but we are not all William Lam or Alan Renouf, right? 🙂

Unfortunately, the answer is no: we do not support custom TCP/IP stacks for vSAN, and there is no dedicated vSAN TCP/IP stack either. The default TCP/IP stack has to be used. This is documented on storagehub.vmware.com in the excellent vSAN Networking guide written by my colleagues Paudie and Cormac. Of course, for things like vMotion you can use the vMotion TCP/IP stack while using vSAN; that is fully supported.

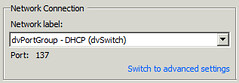

Currently, vSphere does not include a dedicated TCP/IP stack for vSAN traffic, nor does it support creating a custom vSAN TCP/IP stack. To ensure vSAN traffic in Layer 3 network topologies leaves over the vSAN VMkernel network interface, administrators need to add the vSAN VMkernel network interface to the default TCP/IP stack and define static routes for all of the vSAN cluster members.
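Because those static routes have to be defined on every host, generating them is a natural first step toward automating this. Below is a minimal Python sketch that produces the `esxcli network ip route ipv4 add` commands for each host. It assumes a simplified layout where each site (two data sites plus a witness site) has its own vSAN subnet with a single local gateway, and every host needs a route to the vSAN subnet of every other site. The `Site` class, the helper functions, and all site names, host names, subnets, and gateways are hypothetical; check the vSAN Networking guide for the exact routing requirements of your topology.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Site:
    """Hypothetical description of one site: its vSAN subnet and local gateway."""
    name: str
    vsan_network: str  # CIDR of the vSAN VMkernel subnet at this site
    gateway: str       # L3 gateway on that subnet, used to reach other sites


def route_commands(sites: list[Site], host_site: str) -> list[str]:
    """Return the esxcli commands a host at `host_site` needs: one static route
    (on the default TCP/IP stack) to the vSAN subnet of every other site."""
    local = next(s for s in sites if s.name == host_site)
    return [
        f"esxcli network ip route ipv4 add --gateway {local.gateway} "
        f"--network {remote.vsan_network}"
        for remote in sites
        if remote.name != host_site
    ]


def all_host_commands(sites: list[Site], hosts: dict[str, str]) -> dict[str, list[str]]:
    """Map each host name to its route commands, given a host -> site mapping."""
    return {host: route_commands(sites, site) for host, site in hosts.items()}


if __name__ == "__main__":
    # Hypothetical stretched cluster: two data sites and a witness site.
    sites = [
        Site("site-a", "172.16.10.0/24", "172.16.10.1"),
        Site("site-b", "172.16.20.0/24", "172.16.20.1"),
        Site("witness", "192.168.30.0/24", "192.168.30.1"),
    ]
    hosts = {"esx01": "site-a", "esx02": "site-b", "witness01": "witness"}
    for host, cmds in all_host_commands(sites, hosts).items():
        print(f"# {host}")
        for cmd in cmds:
            print(cmd)
```

The output is just a list of commands per host, which keeps the sketch independent of how you actually push them out (SSH, PowerCLI, or whatever tooling you already use). With many hosts, generating the routes from a single description of the sites is what removes most of the error-prone manual typing.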