When I joined VMware, I read a white paper on memory reclamation techniques a dozen times. I was still left with a bunch of questions, so I emailed the engineer who had written it back in the day. I asked him a couple of "simple" questions and received a one-page email full of answers. Even that email I had to read twice, not because it was insanely complex, but because there was so much information in it that it was impossible to digest in one go. That engineer was Carl Waldspurger. I'd seen some of his talks when he was still at VMware, but he had gone "dark" for a while.

Carl joined CloudPhysics in the early stages of the company. He has been working on various projects there, and one of them is called SHARDS. I hadn't seen the results until a couple of weeks ago, when I watched the presentation. Excellent presentation skills, but more importantly, amazing research with a very important result. Some people may have been wondering what you can do with a platform like CloudPhysics and what you can harvest from the data. I think it is fair to say that this is one of the results of all the hard data-mining work done over the last few years. Here is the abstract with a link to the online presentation. I deliberately didn't share everything here, to drive some traffic to Usenix as a show of support. Before you watch the video, a warning: this isn't a high-level overview, it's a serious deep dive.

Efficient MRC Construction with SHARDS

Reuse-distance analysis is a powerful technique for characterizing temporal locality of workloads, often visualized with miss ratio curves (MRCs). Unfortunately, even the most efficient exact implementations are too heavyweight for practical online use in production systems.
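To make "reuse distance" and "miss ratio curve" concrete, here is a minimal sketch of the classic exact approach (Mattson's LRU stack algorithm), not the method from the talk. The reuse distance of a reference is the number of distinct other locations touched since the previous reference to the same location; an LRU cache of size c hits exactly when that distance is smaller than c. The function names are hypothetical, and this naive version costs O(M) per reference for M distinct locations, which illustrates why exact analysis gets heavy on production-sized traces.

```python
from collections import Counter


def reuse_distances(trace):
    """Reuse distance of each reference, or None for a first (cold) reference.

    Uses a naive LRU stack: index 0 holds the most recently used location,
    so a location's index equals the number of distinct locations used since.
    """
    stack = []
    dists = []
    for loc in trace:
        if loc in stack:
            d = stack.index(loc)
            stack.pop(d)
            dists.append(d)
        else:
            dists.append(None)
        stack.insert(0, loc)
    return dists


def miss_ratio_curve(dists, max_size):
    """Miss ratio of an LRU cache for every cache size 1..max_size."""
    total = len(dists)
    hist = Counter(d for d in dists if d is not None)
    mrc = []
    hits = 0
    for size in range(1, max_size + 1):
        # A reference with distance size-1 first becomes a hit at this size.
        hits += hist[size - 1]
        mrc.append(1 - hits / total)
    return mrc


if __name__ == "__main__":
    d = reuse_distances(list("abacba"))
    print(d)                       # [None, None, 1, None, 2, 2]
    print(miss_ratio_curve(d, 3))  # sizes 1, 2, 3 -> 1.0, ~0.833, 0.5
```

Because every cache size is read off one histogram, a single pass over the trace yields the whole curve rather than one simulation per cache size.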

We introduce a new approximation algorithm that employs uniform randomized spatial sampling, implemented by tracking references to representative locations selected dynamically based on their hash values. A further refinement runs in constant space by lowering the sampling rate adaptively. Our approach, called SHARDS (Spatially Hashed Approximate Reuse Distance Sampling), drastically reduces the space and time requirements of reuse-distance analysis, making continuous, online MRC generation practical to embed into production firmware or system software. SHARDS also enables the analysis of long traces that, due to memory constraints, were resistant to such analysis in the past.
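The core idea of hash-based spatial sampling can be sketched in a few lines. This is a rough illustration under stated assumptions, not the authors' implementation: it keeps a location L only when hash(L) mod P < T (so the sampling rate is R = T/P), feeds every reference to a kept location into an ordinary LRU stack, and scales each measured distance by 1/R, since roughly only R of the intervening distinct locations were tracked. The choice of BLAKE2b as the hash, the value of P, and the function names are all hypothetical, and the constant-space refinement that lowers the rate adaptively is not shown.

```python
import hashlib
from collections import Counter

P = 1 << 24  # modulus for the sampling test (hypothetical value)


def _hash(loc):
    """Deterministic 64-bit hash of a location (BLAKE2b is an assumed choice)."""
    digest = hashlib.blake2b(str(loc).encode(), digest_size=8).digest()
    return int.from_bytes(digest, "big")


def shards_mrc(trace, rate, max_size):
    """Approximate LRU miss ratio curve using fixed-rate spatial sampling."""
    if not 0 < rate <= 1:
        raise ValueError("rate must be in (0, 1]")
    threshold = int(rate * P)  # keep location L iff hash(L) mod P < threshold
    stack = []                 # LRU stack of sampled locations only
    hist = Counter()
    sampled = 0
    for loc in trace:
        if _hash(loc) % P >= threshold:
            continue  # location not sampled: all of its references are skipped
        sampled += 1
        if loc in stack:
            d = stack.index(loc)
            stack.pop(d)
            # Only ~rate of the distinct locations are tracked, so scale up.
            hist[int(d / rate)] += 1
        stack.insert(0, loc)
    if sampled == 0:
        return [1.0] * max_size  # nothing sampled: no hit evidence at all
    mrc, hits = [], 0
    for size in range(1, max_size + 1):
        hits += hist[size - 1]
        mrc.append(1 - hits / sampled)
    return mrc


if __name__ == "__main__":
    trace = [i % 50 for i in range(2000)]  # cyclic scan over 50 blocks
    print(shards_mrc(trace, 1.0, 60)[48:51])
    print(shards_mrc(trace, 0.1, 60)[35:45])
```

Sampling by location rather than by reference is what keeps the estimate sound: once a location is chosen, every one of its references is seen, so its reuse distances are measured consistently, while the stack only ever holds about R times as many entries as an exact run would.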

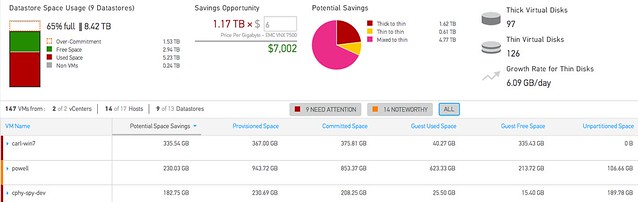

We evaluate SHARDS using trace data collected from a commercial I/O caching analytics service. MRCs generated for more than a hundred traces demonstrate high accuracy with very low resource usage. MRCs constructed in a bounded 1 MB footprint, with effective sampling rates significantly lower than 1%, exhibit approximate miss ratio errors averaging less than 0.01. For large traces, this configuration reduces memory usage by a factor of up to 10,800 and run time by a factor of up to 204.
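The abstract quotes accuracy as an average miss ratio error. It doesn't define the metric here, so the sketch below assumes the mean absolute error between an exact and an approximate MRC, taken point by point over the same list of cache sizes. The function name is hypothetical.

```python
def mean_absolute_error(exact, approx):
    """Average |exact - approx| over matching cache sizes of two MRCs."""
    if len(exact) != len(approx) or not exact:
        raise ValueError("curves must be non-empty and of equal length")
    return sum(abs(e - a) for e, a in zip(exact, approx)) / len(exact)


if __name__ == "__main__":
    # Per-size errors of 0.1, 0.0 and 0.05 average out to 0.05.
    print(mean_absolute_error([1.0, 0.5, 0.25], [0.9, 0.5, 0.3]))
```

On that scale, an average below 0.01 means the sampled curve typically sits within one percentage point of the exact miss ratio.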

You can find the slides, the paper, and the video below as downloads.

Enjoy 🙂