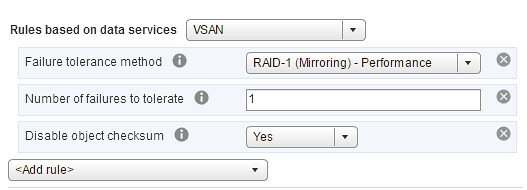

I’ve been talking to a lot of customers over the past 12-18 months, and if one thing stood out, it is that about 98% of our customers used Failures To Tolerate (FTT) = 1. This means that one host or disk can die or disappear without losing data. When we talked about availability, most of these customers indicated that they would prefer to use FTT=2, but the cost was simply too high.

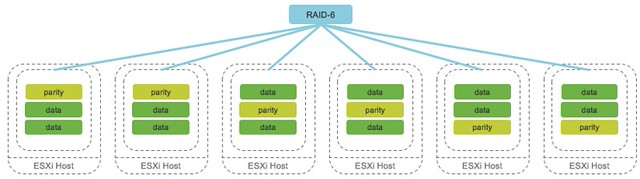

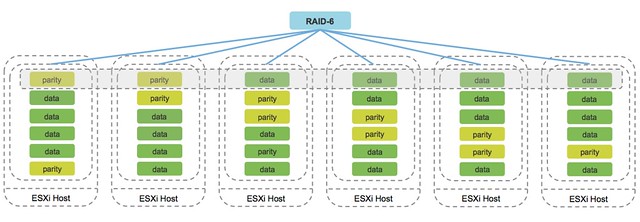

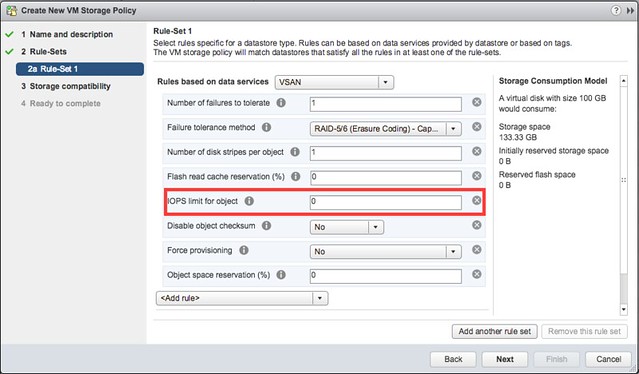

With VSAN 6.2 all of this will change. Today a 100GB disk with FTT=1 requires 200GB of disk capacity. With FTT=2 the same virtual machine requires 300GB of disk capacity, which is 50% more than with FTT=1. For most people, the risk did not appear to justify that cost. With RAID-5 and RAID-6 the math changes, and so does the cost of extra availability.

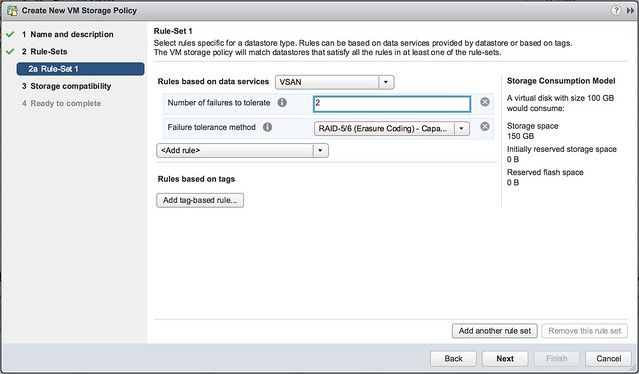

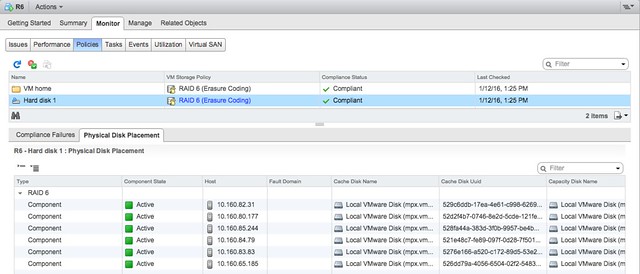

The 100GB disk we just mentioned, with FTT=1 and the Failure Tolerance Method (FTM) set to “RAID-5/6” (only available for all-flash), requires 133GB of capacity. That is already a saving of 67GB compared to “RAID-1”. The saving is even bigger with FTT=2, where that 100GB disk requires 150GB of disk capacity. This is less than FTT=1 with RAID-1 today, and literally half of FTT=2 with FTM=RAID-1. On top of that, the delta between FTT=1 and FTT=2 is tiny: for an additional 17GB of disk space you can now tolerate 2 failures. Let’s put that into a table, so it is a bit easier to digest.

| FTT | FTM | Overhead | VM size | Capacity required |
|---|---|---|---|---|
| 1 | RAID-1 | 2x | 100GB | 200GB |
| 1 | RAID-5/6 | 1.33x | 100GB | 133GB |
| 2 | RAID-1 | 3x | 100GB | 300GB |
| 2 | RAID-5/6 | 1.5x | 100GB | 150GB |
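If you want to redo this math for your own VM sizes, the arithmetic can be sketched in a few lines of Python. This is only an illustration: the function name `required_capacity_gb` and the `OVERHEAD` lookup are made up for this example, and the exact fractions behind 1.33x and 1.5x assume a 3 data + 1 parity layout for FTT=1 and a 4 data + 2 parity layout for FTT=2.

```python
from fractions import Fraction

# Capacity multipliers per (FTT, FTM). RAID-1 keeps FTT + 1 full copies.
# The RAID-5/6 fractions are an assumption: 3 data + 1 parity for FTT=1
# and 4 data + 2 parity for FTT=2, which give the 1.33x and 1.5x
# overheads quoted in the post.
OVERHEAD = {
    (1, "RAID-1"): Fraction(2),
    (1, "RAID-5/6"): Fraction(4, 3),
    (2, "RAID-1"): Fraction(3),
    (2, "RAID-5/6"): Fraction(3, 2),
}


def required_capacity_gb(vm_size_gb, ftt, ftm):
    """Return the raw capacity in GB (rounded) a disk consumes under a policy."""
    return round(vm_size_gb * OVERHEAD[(ftt, ftm)])


if __name__ == "__main__":
    # Print one line per policy for a 100GB disk.
    for (ftt, ftm), factor in OVERHEAD.items():
        capacity = required_capacity_gb(100, ftt, ftm)
        print(f"FTT={ftt} {ftm:9} {float(factor):.2f}x {capacity}GB")
```

Swapping the 100 for your own disk size shows quickly how much capacity FTT=2 with RAID-5/6 costs compared to what you are running today.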

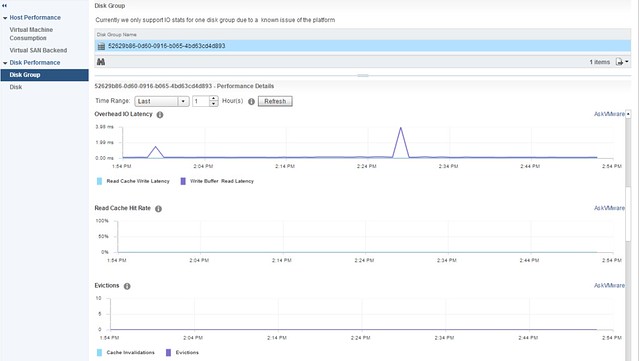

Of course you need to ask yourself whether your workload requires it. Does it make sense for desktops? For most desktops it probably doesn’t. But for your Exchange environment maybe it does, and the same goes for your databases, your file servers, your print servers, and even your web farm. That is why I feel that the standard “FTT” setting is going to change slowly, and will (should) become FTT=2 in combination with FTM set to “RAID-5/6”. Now let it be clear: there is a performance difference between FTT=2 with FTM=RAID-1 and FTT=2 with FTM=RAID-6 (the same applies to FTT=1), and of course there is a CPU resource cost as well. Make sure to benchmark what the “cost” is for your environment and make an educated decision based on that. I believe that in the majority of cases the extra availability will outweigh the cost and overhead, but it is still up to you to determine and decide. What is great about VSAN, in my opinion, is that we offer you the flexibility to decide per workload what makes sense.