Whenever I talk to customers about Virtual SAN, the question that usually comes up is: why Virtual SAN? Some of you may expect the answer to be performance, or the scale-out aspect, or the resiliency… In my opinion none of those is the biggest differentiator; management truly is. Or should I say the fact that you can literally forget about it after you have configured it? Yes, of course that is something you would expect every vendor to say about their own product. That is why I think the reply from one of the users during the VSAN Chat held last week is the best testimony I can provide: “VSAN made storage management a non-issue for this first time vSphere cluster admin!” (see tweet below).

@vmwarevsan VSAN made storage management a non-issue for this first time vSphere cluster admin! #vsanchat http://t.co/5arKbzCdjz

— Aaron Kay (@num1k) September 22, 2015

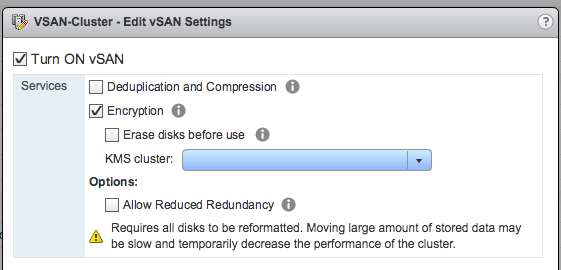

When we released the first version of Virtual SAN I strongly believed we had a winner on our hands. It was so simple to configure: you don’t need to be a VCP to enable VSAN, it takes two clicks. Of course there is a bit more to VSAN than just the tick box at the cluster level that says “enable”. You want to make sure it performs well, that all driver/firmware combinations are certified, that the network is correctly configured, etc. Fortunately we have a solution for that as well, and it isn’t a manual process.

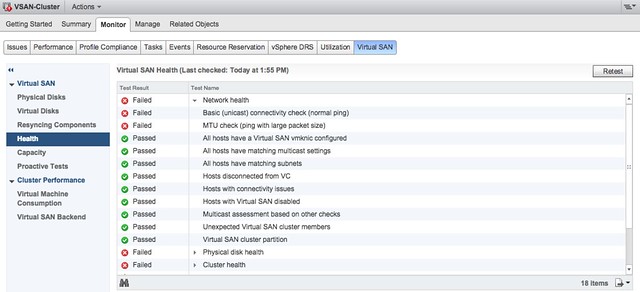

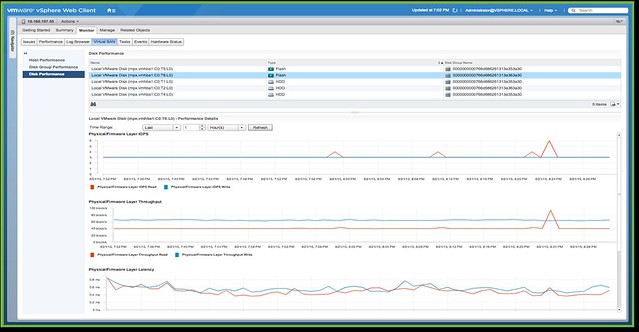

No, you simply go to the VSAN health check section on your VSAN cluster object and validate that everything is green. Besides looking at those green checks, you can also run certain proactive tests that let you test, for instance, multicast performance, VM creation, VSAN performance, etc. It all comes as part of vCenter Server as of the 6.0 U1 release. On top of that, there is more planned. At VMworld we already hinted at advanced performance management inside vCenter, based on a distributed and decentralized model. You can expect that at some point in the near future, and of course we have the vROps pack for Virtual SAN if you prefer that!

No, if you ask me, the biggest differentiator definitely is management. Simplicity is the key theme, and I guarantee that things will only improve with each release.