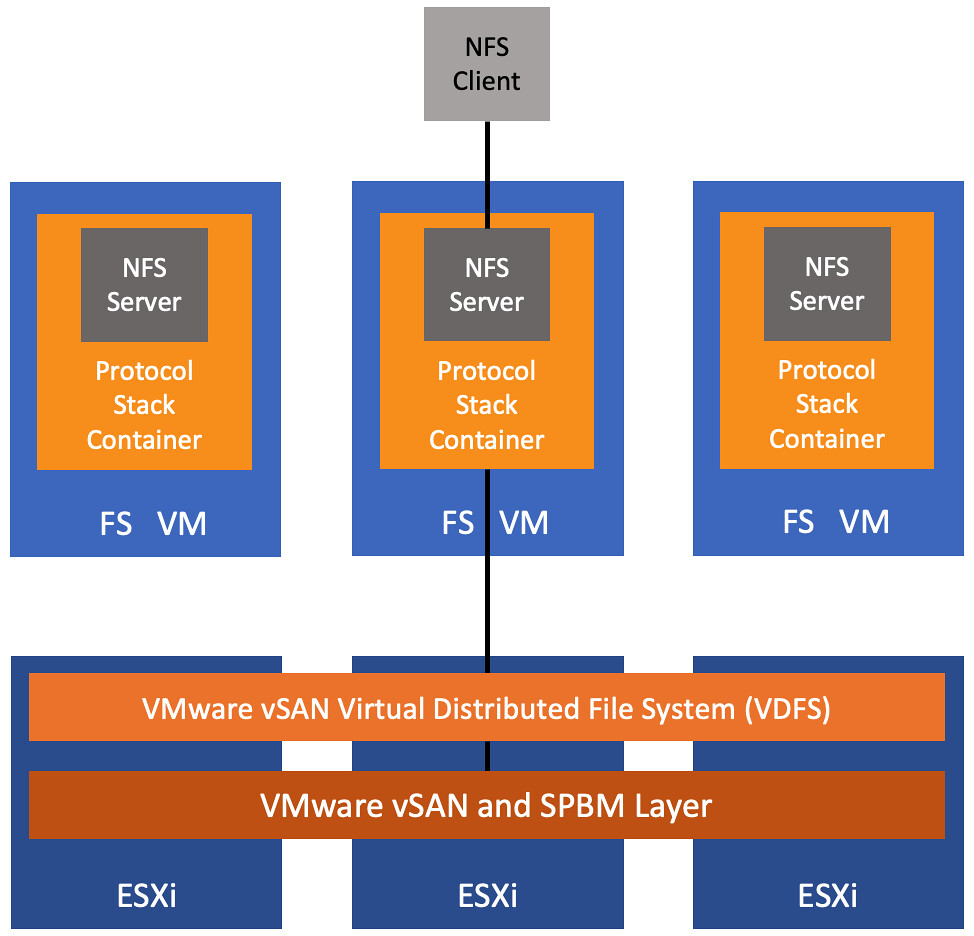

Last week I had a question about vSAN File Services and an imbalance between protocol stack containers and FS VMs. I had personally witnessed the same thing and wasn’t sure what to expect. So what does this even mean, an imbalance? Well, as I have already explained, every host in a vSAN cluster which has vSAN File Services enabled will have a File Services VM (FS VM). Within these VMs you will have protocol stack containers running, up to a maximum of 8 protocol stack containers per cluster. Just look at the diagram below.

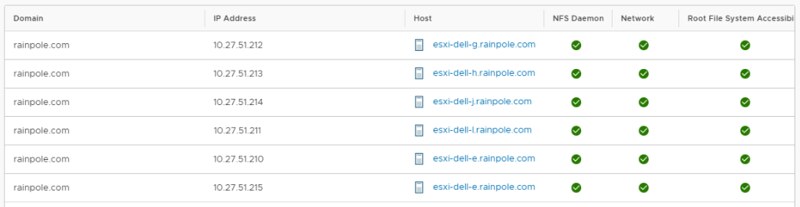

Now this means that if you have 8 hosts or fewer in your cluster, by default every FS VM in your cluster will have a protocol stack container running. But what happens when you place a host into maintenance mode? The protocol stack container moves to a different FS VM, so you end up in a situation where you have 2 (or even more) protocol stack containers running within 1 FS VM. That is the imbalance I just mentioned: more than 1 protocol stack container in one FS VM, while another FS VM has 0 protocol stack containers. If you look at the screenshot below, I have 6 protocol stack containers, but as you can see the bottom two are on the same ESXi host, and there’s no protocol stack container on host “dell-f”.
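To make the definition of "imbalance" a bit more concrete, here is a minimal Python sketch of my lab situation. This is just a model for illustration, not something vSAN exposes: the container names and all host names other than “dell-f” are made up, and `containers_per_host` and `is_imbalanced` are hypothetical helpers.

```python
from collections import Counter

# Host names other than "dell-f" are made up for this illustration.
hosts = ["dell-a", "dell-b", "dell-c", "dell-d", "dell-e", "dell-f"]

# Hypothetical container names, mapped to the host whose FS VM runs them.
placement = {
    "c1": "dell-a",
    "c2": "dell-b",
    "c3": "dell-c",
    "c4": "dell-d",
    "c5": "dell-e",
    "c6": "dell-e",  # moved here when "dell-f" went into maintenance mode
}

def containers_per_host(placement, hosts):
    """Count protocol stack containers per host, including hosts with none."""
    counts = Counter(placement.values())
    return {host: counts[host] for host in hosts}

def is_imbalanced(placement, hosts):
    """True when one FS VM runs 2+ containers while another runs 0."""
    counts = containers_per_host(placement, hosts)
    return max(counts.values()) > 1 and min(counts.values()) == 0

print(containers_per_host(placement, hosts))
print(is_imbalanced(placement, hosts))
```

With six containers on six hosts, the check flags the placement because one host runs two containers while “dell-f” runs none.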

How do you even this out? Well, it is simple: it takes some time. vSAN File Services will look at the distribution of protocol stack containers every 30 minutes. Do mind, it takes the number of file shares associated with the protocol stack containers into consideration. If you have 0 file shares associated with a protocol stack container then vSAN isn’t going to bother balancing it, as there’s no point at that stage. However, if you have a number of shares and each protocol stack container owns one or more shares, then balancing will happen automatically. Which is what I witnessed in my lab. Within 30 minutes I saw the situation changing as shown in the screenshot below, a nice evenly balanced file services environment! (Protocol stack container ending with .215 moved to host “dell-f”.)
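If it helps, this is roughly how I picture a single balancing pass, written as a small Python sketch. It is my mental model only, not the actual vSAN File Services algorithm: the function `rebalance_once`, the container names, the share counts, and the host names other than “dell-f” are all assumptions. The one rule taken from the behavior described above is that a container with 0 file shares is left where it is.

```python
# Assumed interval, based on the 30 minute check described above.
CHECK_INTERVAL_MINUTES = 30

def rebalance_once(placement, shares, hosts):
    """Return a new placement after one hypothetical balancing pass.

    Moves at most one share-owning container from each crowded host
    (2+ containers) to a host whose FS VM currently runs none.
    """
    by_host = {host: [] for host in hosts}
    for container, host in placement.items():
        by_host[host].append(container)

    empty = [h for h in hosts if not by_host[h]]
    crowded = [h for h in hosts if len(by_host[h]) > 1]

    new_placement = dict(placement)
    for target, source in zip(empty, crowded):
        # Containers without file shares are not worth moving.
        movable = [c for c in by_host[source] if shares.get(c, 0) > 0]
        if movable:
            new_placement[movable[-1]] = target
    return new_placement

# Made-up lab layout: "c6" shares a host with "c5", "dell-f" runs nothing.
hosts = ["dell-a", "dell-b", "dell-c", "dell-d", "dell-e", "dell-f"]
placement = {"c1": "dell-a", "c2": "dell-b", "c3": "dell-c",
             "c4": "dell-d", "c5": "dell-e", "c6": "dell-e"}
shares = {name: 1 for name in placement}  # every container owns one share

print(rebalance_once(placement, shares, hosts))
```

Running the same pass with every share count set to 0 returns the placement unchanged, which matches the "no point at that stage" behavior.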