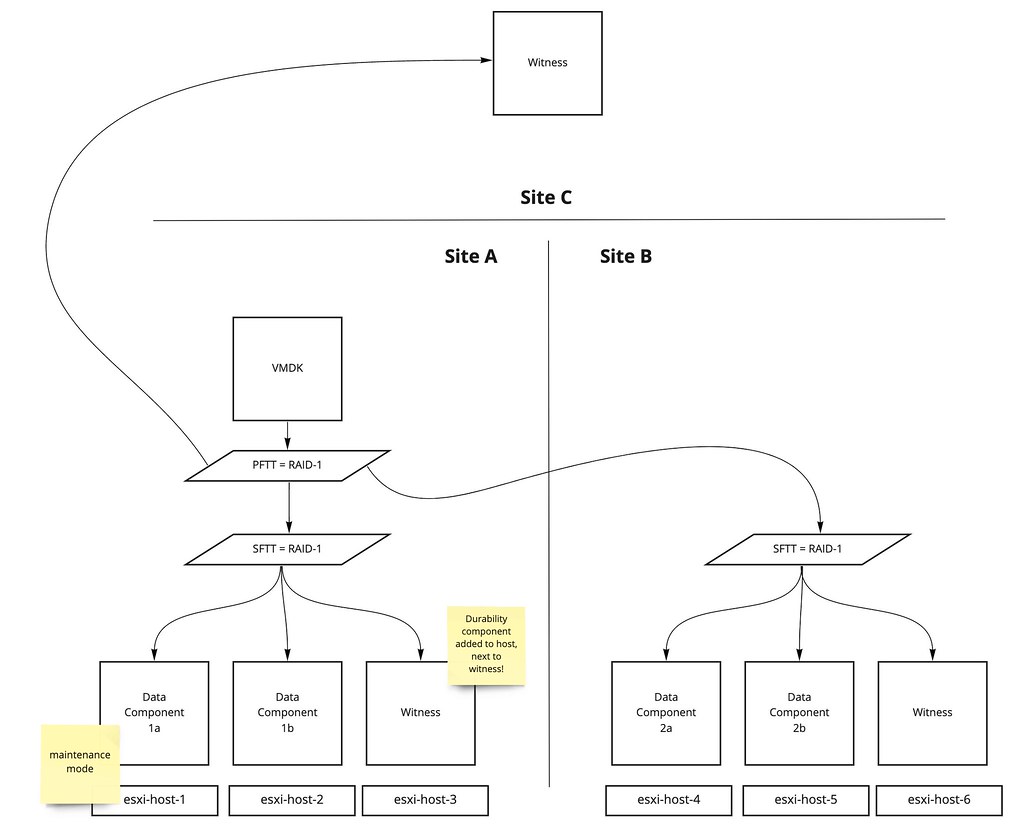

This question has come up multiple times now, so I figured I would write a quick post about it: do you need two isolation addresses with a (vSAN) stretched cluster for vSphere HA? The question comes up because the documentation includes best practices for configuring HA isolation addresses in stretched clusters. The documentation (both for vSAN and for traditional stretched storage) states that you need two reliable addresses, one in each location.

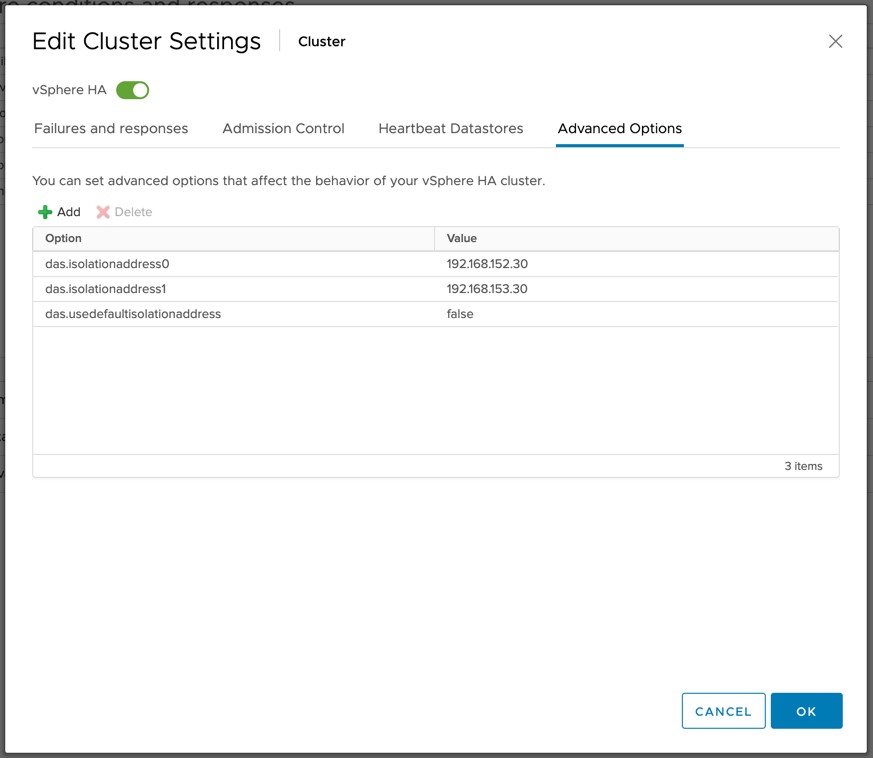

I have had this question multiple times because some folks mentioned that with Cisco ACI they can use a gateway address that remains accessible in both locations, even when there is a partition caused by, for instance, an ISL failure. If that is the case, and the IP address is indeed available in both locations during those types of failure scenarios, then a single IP address suffices as your isolation address.

You will, however, need to make sure that the IP address is reachable over the vSAN network when using vSAN as your stretched storage platform. (When vSAN is enabled, vSphere HA uses the vSAN network for communications.) If it is reachable, you can simply define the isolation address by setting the advanced setting “das.isolationaddress0”. It is also recommended to disable the use of the default gateway of the management network by setting “das.usedefaultisolationaddress” to false for vSAN based environments.
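To make the two variants concrete, here is a minimal Python sketch that only builds the key/value pairs you would enter as vSphere HA advanced options. It does not talk to vCenter. The helper name `build_isolation_options` is hypothetical, the IP addresses are documentation placeholders, and the limit of ten addresses (`das.isolationaddress0` through `das.isolationaddress9`) is an assumption you should verify against the documentation for your vSphere version.

```python
import ipaddress


def build_isolation_options(addresses, use_default_gateway=False):
    """Return vSphere HA advanced options for the given isolation addresses.

    Hypothetical helper: it only assembles the settings as a dict.
    """
    # Assumption: HA accepts das.isolationaddress0 .. das.isolationaddress9.
    if not 1 <= len(addresses) <= 10:
        raise ValueError("expected between 1 and 10 isolation addresses")

    options = {}
    for index, address in enumerate(addresses):
        # Raises ValueError if the string is not a valid IPv4/IPv6 address.
        ipaddress.ip_address(address)
        options[f"das.isolationaddress{index}"] = address

    # For vSAN, stop HA from pinging the management network's default gateway.
    options["das.usedefaultisolationaddress"] = (
        "true" if use_default_gateway else "false"
    )
    return options


# One address that stays reachable in both sites during a partition
# (placeholder IP, must be reachable over the vSAN network).
single = build_isolation_options(["192.0.2.1"])

# One address per site, as the documentation currently recommends
# (placeholder IPs).
per_site = build_isolation_options(["192.0.2.1", "198.51.100.1"])

for name, value in single.items():
    print(f"{name} = {value}")
```

Whichever variant you choose, the values still need to be entered in the cluster's vSphere HA advanced options, and it is worth testing that the address really responds from both sites during a simulated ISL failure.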

I have requested that the vSAN stretched clustering documentation be updated to reflect this.