I just posted the slide decks that I presented at VMworld on Virtual SAN up on SlideShare. The recording and the slides will probably also show up on vmware.com at some point, but as I had many requests from people to share the material I figured I would do that straight after the event. If you have any questions, don’t hesitate to ask.

Software Defined

NexentaConnect for VSAN for free? Get it now!

I was at VMworld last week and bumped into the Nexenta team. They told me about a great limited-time promotion they are running for NexentaConnect. NexentaConnect provides NFS storage on top of VSAN; Cormac wrote an article about it a while back, which I suggest reading. The promotion gives you NexentaConnect for $0, and that includes:

- NexentaConnect for VMware Virtual SAN license(s)

- Unlimited raw storage capacity to match VMware VSAN Licenses

- Nexenta technical support for first 12 months included

Now I have seen many companies giving away software for free, but can’t recall having seen anyone include free support for 12 months. If you are a Virtual SAN user, or about to deploy/implement Virtual SAN, and looking to include some form of file services on top of it, then NexentaConnect may just be what you are looking for. I definitely recommend giving it a try, it is free and comes with support… what more can you ask for?

Have fun!

VMworld Session: VSAN – Software Defined Storage Platform of the Future #STO6050

Unfortunately I haven’t been able to attend many sessions, only 2 so far. This is one I didn’t want to miss, as it was all about what VMware is working on for VSAN and the layers that could sit on top of VSAN. Rawlinson and Christos first spoke about where VSAN is today, mainly discussing the use cases (monolithic apps like Exchange, SQL etc.) and the simplicity VSAN brings. After that came an explanation of the VSAN object/component model, which was the lead-in to the future.

We are in the middle of an evolution towards cloud native applications, Christos said. Cloud native apps scale in a different way than traditional apps, and their requirements differ. They usually have no need for HA and DRS, as they contain this functionality within their own framework. What does this result in for the vSphere layer?

VMware vSphere Integrated Containers and VMware Photon Platform enable these new types of applications. But how do we enable these from a storage point of view? What kind of scale will we require? Will we need different data services? Will we need different tools? What about performance?

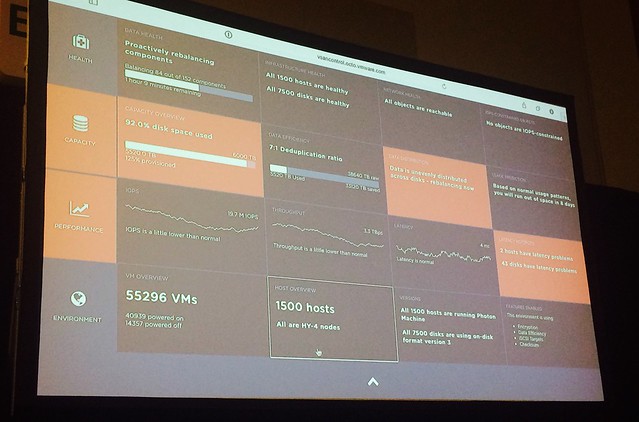

The first project discussed is the Performance Service, which will come as part of the Health Check plugin. It provides insights at the cluster level, host level, disk group level and disk level. The Performance Service architecture is very interesting and is not a “standard vCenter Server service”. Providing deep insights using per-host traces through a central service is not possible, as it would not scale. Instead a distributed, decentralized model is proposed: each host can collect data, each cluster can roll this up, and this can be done for many clusters. Data is both processed and stored in a distributed fashion. The cost for a solution like this should be around 10% of 1 core on a server. Just think what a vCenter Server would look like if it had to carry that same cost centrally: a 1000 host environment could easily result in a 100 vCPU requirement, which is not realistic.

Rawlinson demos a potential solution for this. In this scenario we are talking about 1000s of hosts for which data is gathered, analyzed and presented in what appears to be an HTML5 interface. The solution doesn’t just provide details on problems in the environment, it also allows you to mitigate them. Note that this is a prototype of an interface that may or may not at some point in time be released. If you like what you see though, make sure to leave a comment, as I am sure that helps in making this prototype a reality!
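To make the scaling argument a bit more concrete, here is a minimal Python sketch of the idea as I understood it from the session. It is not the actual Performance Service; the `Summary` type, the function names and the latency metric are all made up for illustration. Each host reduces its own raw samples to a small summary, and clusters only merge summaries. The last function is simply the 10%-of-a-core arithmetic from above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Summary:
    """Compact roll-up of latency samples (hypothetical metric), cheap to ship upstream."""
    count: int
    total_ms: float
    max_ms: float

    def merge(self, other: "Summary") -> "Summary":
        # Merging two summaries never needs the raw samples.
        return Summary(self.count + other.count,
                       self.total_ms + other.total_ms,
                       max(self.max_ms, other.max_ms))


def summarize_host(samples_ms):
    """Runs on each host: reduce raw samples to one small summary."""
    return Summary(len(samples_ms), sum(samples_ms), max(samples_ms, default=0.0))


def roll_up(summaries):
    """Runs per cluster, or across clusters: merge summaries only."""
    result = Summary(0, 0.0, 0.0)
    for summary in summaries:
        result = result.merge(summary)
    return result


def central_vcpus(hosts, percent_of_core_per_host=10):
    """vCPUs needed if every host's processing cost landed on one central server."""
    return hosts * percent_of_core_per_host / 100
```

With 1000 hosts at 10% of a core each, `central_vcpus(1000)` comes out at 100, which is the number mentioned in the session. Spread across the hosts, that same work is a tenth of a core per server.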

Discussed next is the potential to leverage VSAN not just for virtual machines but also for containers, by having the capability to store files on top of VSAN. A distributed file system for cloud native apps is now introduced. Some of the requirements for a distributed file system would be a scalable data path, clones at massive scale, multi-tenancy and multi-purpose use.

VMware is also prototyping a distributed file system and has it running in its labs. It sits on top of VSAN, leverages that scalable data path and uses it to store its data and metadata. Rawlinson demonstrates how he can create 2000 clones of a file in under a second across 1000 hosts and run his application. Note that this application isn’t copied to those 1000 hosts; it is a simple mountpoint on 1000 hosts, a truly distributed filesystem with extremely scalable clone and snapshot technology.

Christos wraps up. The key point is that VSAN will be the enabler of future storage solutions, as it provides extreme scale at a low resource overhead. Awesome session, great peek into the future.

Virtual SAN beta coming up with dedupe and erasure coding!

Something I am personally very excited about is the fact that there is a beta coming up soon for an upcoming release of Virtual SAN. This beta is all about space efficiency and in particular will contain two new great features which I am sure all of you will appreciate testing:

- Erasure Coding

- Deduplication

My guess is that many people will get excited about dedupe, but personally I am also very excited about erasure coding. As it stands with VSAN today, when you deploy a 50GB VM and have failures to tolerate (FTT) defined as 1, you will need to have ~100GB of capacity available. With Erasure Coding the required capacity will be significantly lower. What you will be able to configure is a 3+1 or a 4+2 configuration, not unlike RAID-5 and RAID-6. This means that from a capacity stance you will need ~1.33x the space of a given disk when 3+1 is used, or 1.5x the space when 4+2 is used. That is a significant improvement over the 2x needed when using FTT=1 in today’s GA release. Do note of course that in order to achieve 3+1 or 4+2 you will need more hosts than you would normally need with FTT=1, as we will need to guarantee availability.
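The capacity math above is easy to check. Below is a small Python sketch of it; the function names are my own, and the minimum host count assumes that every data and parity fragment has to live on its own host, which is my assumption and not something confirmed for the beta.

```python
from fractions import Fraction


def mirror_overhead(ftt):
    """Mirroring keeps ftt + 1 full copies of the data."""
    return Fraction(ftt + 1)


def erasure_overhead(data, parity):
    """A data+parity layout stores (data + parity) fragments per data fragments."""
    return Fraction(data + parity, data)


def raw_capacity_gb(vm_size_gb, overhead):
    """Raw capacity consumed by a VM of the given size."""
    return float(vm_size_gb * overhead)


def min_hosts_erasure(data, parity):
    """Assumption: each fragment is placed on a separate host."""
    return data + parity


if __name__ == "__main__":
    for label, overhead in [("FTT=1 mirror", mirror_overhead(1)),
                            ("3+1", erasure_overhead(3, 1)),
                            ("4+2", erasure_overhead(4, 2))]:
        print(f"{label}: {float(overhead):.2f}x, "
              f"{raw_capacity_gb(50, overhead):.1f} GB for a 50 GB VM")
```

For the 50GB VM from the example this works out to 100GB with mirroring, roughly 66.7GB with 3+1 and 75GB with 4+2.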

Dedupe is the second feature that you can test with the upcoming beta. I don’t think I really need to explain what it is. I think it is great we may have this functionality as part of VSAN at some point in the future. Deduplication will be applied on a “per disk group” basis. Of course the results of deduplication will vary, but with the various workloads we have tested we have seen up to 8x improvements in usable capacity. Again, this will highly depend on your use case and may end up being lower or higher.

And before I forget, there is another nice feature in the beta, which is end-to-end checksums (not just on the device). This will protect you not only against driver and firmware bugs anywhere in the stack, but also against bit rot on the storage devices. And yes, it will have a scrubber constantly running in the background. The goal is to protect against bit rot, network problems, and software and firmware issues. The checksum will use CRC32c, which utilizes special CPU instructions thanks to Intel, for the best performance. These software checksums will complement the hardware-based checksums available today. This will be enabled by default and of course will be compatible with the current and future data services.

If you are interested in being considered for the beta (and apologies in advance that we will not be able to accommodate all requests), then you can submit your information at www.vmware.com/go/vsan6beta.
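For those who want to see what a CRC32c checksum and a scrubber boil down to, here is a minimal sketch in plain Python. It is a table-driven software implementation of CRC-32C (Castagnoli), not the hardware-accelerated code VSAN will use, and the `scrub` function with its list of (data, checksum) pairs is a made-up stand-in for whatever the real scrubber does.

```python
# Reflected form of the Castagnoli polynomial used by CRC-32C.
CRC32C_POLY_REFLECTED = 0x82F63B78


def _build_table():
    table = []
    for byte in range(256):
        crc = byte
        for _ in range(8):
            crc = (crc >> 1) ^ CRC32C_POLY_REFLECTED if crc & 1 else crc >> 1
        table.append(crc)
    return table


_TABLE = _build_table()


def crc32c(data: bytes) -> int:
    """Compute the CRC-32C checksum of data, one byte at a time."""
    crc = 0xFFFFFFFF
    for b in data:
        crc = _TABLE[(crc ^ b) & 0xFF] ^ (crc >> 8)
    return crc ^ 0xFFFFFFFF


def scrub(blocks):
    """Hypothetical scrubber pass: return indexes of blocks whose data
    no longer matches the checksum stored when they were written."""
    return [i for i, (data, stored) in enumerate(blocks)
            if crc32c(data) != stored]


if __name__ == "__main__":
    good = b"virtual san block"
    rotted = bytes([good[0] ^ 0x01]) + good[1:]   # flip a single bit
    blocks = [(good, crc32c(good)), (rotted, crc32c(good))]
    print(scrub(blocks))  # the second block is reported as corrupted
```

The standard check value for CRC-32C is `0xE3069283` for the input `b"123456789"`, which is a handy way to validate any implementation.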

Go VSAN

What is new for Virtual SAN 6.1?

It is VMworld, and of course there are many announcements being made, one of which is Virtual SAN 6.1, which will come as part of vSphere 6.0 Update 1. Many new features have been added, but there are a couple which stand out if you ask me. In this post I am going to talk about what are, in my opinion, the key new features. Let’s list them first and then discuss some of them individually.

- Support for stretched clustering

- Support for 2 node ROBO configurations

- Enhanced Replication

- Support for SMP-FT

- New hardware options

  - Intel NVMe

  - Diablo Ultra Dimm

- Usability enhancements

  - Disk Group Bulk Claiming

  - Disk Claiming per Tier

  - On-Disk Format Upgrade from UI

- Health Check Plug-in shipped with vCenter Server

- Virtual SAN Management Pack for VR Ops

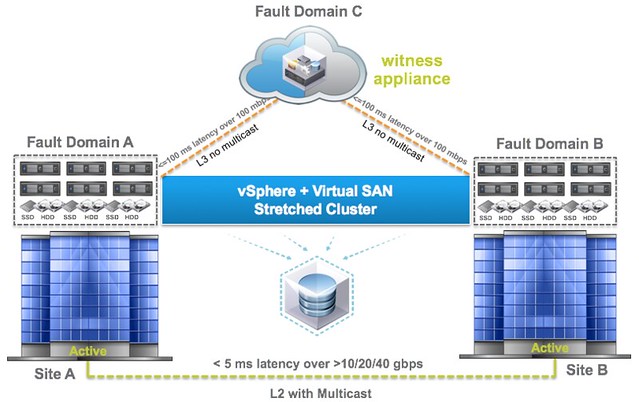

When explaining the Virtual SAN architecture and concepts there is always one question that comes up: what about stretched clustering? I guess the key reason is the way Virtual SAN distributes objects across multiple hosts for availability, and people can easily see how that would work across datacenters. With Virtual SAN 6.1 we now fully support stretched clustering. But what does that mean, and what does that look like?

As you can see in the diagram above it starts with 3 failure domains, two of which will be “hosting data” and one of which will be a “witness site”. All of this is based on the Failure Domains technology that was introduced with 6.0, and those who have used it know how easy it is. Of course there are requirements when it comes to deploying in a stretched fashion and the key requirements for Virtual SAN are:

- 5ms RTT latency max between data sites

- 200ms RTT latency at most from data sites to witness site

Worth noting from a networking point of view is that from the data sites to the witness site there is no requirement for multicast routing and it can be across L3. On top of that the Witness can be nested ESXi, so no need to dedicate a full physical host just for witness purposes. Of course the data sites can also connect to each other over L3 if that is desired, but personally I suspect that VSAN over L2 will be a more common deployment and it is also what I would recommend. Note that between the data sites there is still a requirement for multicast.
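If you want to sanity-check a design against the two latency requirements listed above, something like the following Python sketch would do. The function name and the way measurements are passed in are purely illustrative; only the 5ms and 200ms limits come from the requirements themselves.

```python
MAX_DATA_SITE_RTT_MS = 5      # between the two data sites
MAX_WITNESS_RTT_MS = 200      # from each data site to the witness site


def check_stretched_cluster(data_sites_rtt_ms, witness_rtt_ms_by_site):
    """Return a list of human-readable violations (empty means OK).

    data_sites_rtt_ms: measured RTT between the two data sites.
    witness_rtt_ms_by_site: mapping of data site name -> RTT to the witness.
    """
    problems = []
    if data_sites_rtt_ms > MAX_DATA_SITE_RTT_MS:
        problems.append(
            f"data sites: {data_sites_rtt_ms}ms RTT exceeds {MAX_DATA_SITE_RTT_MS}ms")
    for site, rtt in sorted(witness_rtt_ms_by_site.items()):
        if rtt > MAX_WITNESS_RTT_MS:
            problems.append(
                f"{site} to witness: {rtt}ms RTT exceeds {MAX_WITNESS_RTT_MS}ms")
    return problems


if __name__ == "__main__":
    print(check_stretched_cluster(3, {"site-a": 120, "site-b": 150}))
    print(check_stretched_cluster(8, {"site-a": 120, "site-b": 250}))
```

You would feed this with RTT numbers you measured yourself, for instance with a ping between the VSAN network interfaces of each site.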

When it comes to deploying virtual machines on a stretched cluster not much has changed. Deploy a VM, and VSAN will ensure that there is one copy of your data in Fault Domain A and one copy in Fault Domain B, with your witness in Fault Domain C. Makes sense, right? If one of the data sites fails then the other can take over. If the VM is impacted by a site failure then HA can take action… It is not rocket science and it is dead simple to set up. I will have a follow-up post with some more specifics in a couple of weeks.
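The placement described above can be sketched in a few lines of Python. This is a deliberately simplified model and not how VSAN is implemented: it assumes one component per fault domain, and it assumes an object stays accessible as long as at least one data copy survives and more than half of the components are still available.

```python
# One component per fault domain, as in the stretched cluster example.
PLACEMENT = {"A": "replica", "B": "replica", "C": "witness"}


def object_accessible(failed_domains):
    """True if the object survives the given set of failed fault domains.

    Simplified rule (an assumption for this sketch): at least one replica
    must survive AND more than 50% of all components must be available.
    """
    surviving = {domain: kind for domain, kind in PLACEMENT.items()
                 if domain not in failed_domains}
    has_replica = "replica" in surviving.values()
    has_quorum = len(surviving) * 2 > len(PLACEMENT)
    return has_replica and has_quorum


if __name__ == "__main__":
    for failure in [set(), {"A"}, {"C"}, {"A", "C"}, {"A", "B"}]:
        label = ", ".join(sorted(failure)) or "none"
        print(f"failed: {label:5} -> accessible: {object_accessible(failure)}")
```

Losing any single site, whether a data site or the witness site, leaves the object accessible. Losing two out of three does not, and that is why the witness has to live in a third location.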

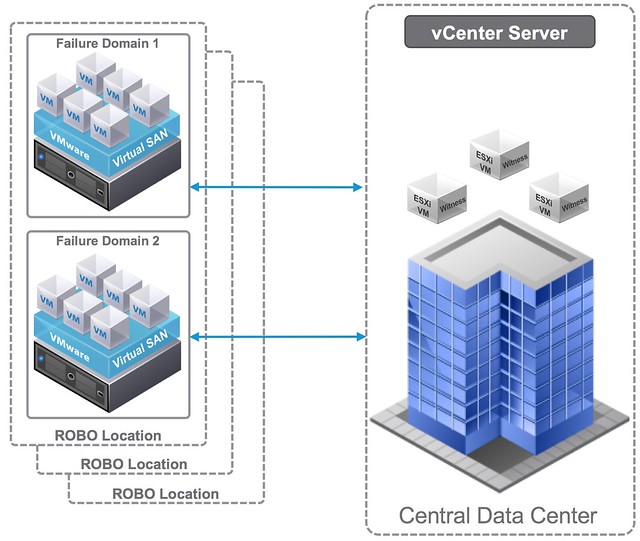

Besides stretched clustering Virtual SAN 6.1 also brings a 2 node ROBO option. This is based on the same technique as the stretched clustering feature. It basically allows you to have 2 nodes in your ROBO location and a witness in a central location. The max latency (RTT) in this scenario is 500ms, which should accommodate almost every ROBO deployment out there. Considering the low number of VMs typically found in these scenarios, you are usually okay with 1GbE networking in the ROBO location as well, which further reduces the cost.

When it comes to disaster recovery, work has also been done to reduce the recovery point objective (RPO) for vSphere Replication. By default this is 15 minutes, but for Virtual SAN this has now been certified for 5 minutes. Just imagine combining this with a stretched cluster; that would be a great disaster avoidance and disaster recovery solution. Sync replication between active sites and then async to wherever it needs to go.

But that is not it in terms of availability; support for SMP-FT has also been added. I never expected this to be honest, but I have had many customers asking for this in the past 12 months. Another common request I have seen is support for super fast flash devices like Intel NVMe and Diablo Ultra Dimm, and 6.1 delivers exactly that.

Another big focus in this release has been usability and operations. Many enhancements have been made to make life easier. I like the fact that the Health Check plugin is now included with vCenter Server and that you can do things like upgrading the on-disk format straight from the UI. And of course there is the VR Ops Management Pack, which will enrich your VR Ops installation with all the details you will ever need about Virtual SAN. Very, very useful!

All of this makes Virtual SAN 6.1 definitely a release to check out!