I wrote a vSAN File Services Considerations post earlier this year and recently updated it to include some of the changes that were introduced with vSAN 7.0 U1. Considering vSAN HCI Mesh, aka Datastore Sharing, is also a brand new feature, I figured I would do a similar post. In this post, I am not going to do a deep-dive of the architecture; I simply want to go over some of the considerations and best practices for implementing vSAN HCI Mesh. I collected these recommendations and requirements from our documentation and some VMworld sessions.

First of all, for those who don’t know, vSAN HCI Mesh allows you to mount a remote vSAN Datastore to a vSAN Cluster. In other words, if you have two (or more) vSAN Clusters, one cluster can remotely consume the storage capacity of another. Why would you want to? Well, imagine that one cluster is running out of disk space, for instance. Or, you may have a hybrid cluster and an all-flash cluster and want a VM to run on the hybrid cluster from a compute perspective, while its storage sits on the all-flash cluster. By using Datastore Sharing, you can now mount the other vSAN Datastore and use it as if it were a local datastore.

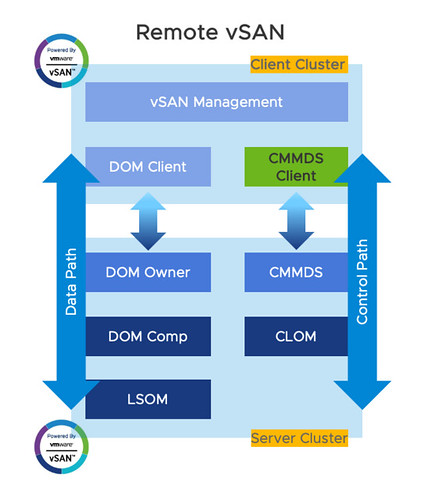

Let’s take a look at the architecture of the solution, which is displayed in the diagram below. The big difference between a “local” vSAN datastore and HCI Mesh is that the DOM Client and the DOM Owner have been split up. So instead of having the “DOM Owner” locally, it now lives on the remote cluster; a pretty smart implementation if you ask me. As you can imagine, this has some impact on your network requirements and design.
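To make the split a bit more concrete, here is a toy model in Python of where the two DOM roles land, at cluster granularity. It is purely illustrative: the `Placement` class and `placement()` function are hypothetical names, and the model only encodes what is described above, namely that the DOM Client stays with the cluster running the VM, while with HCI Mesh the DOM Owner lives on the cluster that provides the datastore.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Placement:
    """Hypothetical model: which cluster hosts each DOM role for one VM's storage."""
    dom_client_cluster: str  # the cluster whose host runs the VM
    dom_owner_cluster: str   # the cluster that provides the vSAN datastore

    @property
    def client_to_owner_is_remote(self) -> bool:
        # True when DOM Client -> DOM Owner traffic has to cross between clusters.
        return self.dom_client_cluster != self.dom_owner_cluster


def placement(compute_cluster: str, datastore_cluster: str) -> Placement:
    # The DOM Client follows the VM; the DOM Owner stays with the datastore's cluster.
    return Placement(dom_client_cluster=compute_cluster, dom_owner_cluster=datastore_cluster)


local = placement("all-flash", "all-flash")  # "normal" vSAN: VM and datastore in one cluster
mesh = placement("hybrid", "all-flash")      # HCI Mesh: VM on hybrid, storage on all-flash
print(local.client_to_owner_is_remote)  # False
print(mesh.client_to_owner_is_remote)   # True
```

Whenever `client_to_owner_is_remote` is true, the traffic between the DOM Client and the DOM Owner crosses the network between the two clusters, which is why the NIC, hop-count, and load-balancing guidance in the list that follows matters.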

Let’s list out all of the recommendations, considerations, and requirements for those who are planning on using HCI Mesh.

- Datastore Sharing requires vSAN 7.0 Update 1 at a minimum.

- Starting with vSAN 7.0 Update 2, Datastore Sharing also supports “compute-only” client clusters, meaning the client cluster does not need to run vSAN!

- The compute-only client cluster does not need a vSAN license either!

- The vSAN cluster needs a vSAN Enterprise license.

- Starting with vSAN 8.0 U1, Datastore Sharing also supports stretched clusters and can mount remote datastores across vCenter Server instances for the Original Storage Architecture (OSA).

- Starting with vSAN 8.0 U1, Datastore Sharing is also supported in combination with vSAN ESA.

- A vSAN Datastore can be mounted by a maximum of 10 vSAN Client Clusters with vSAN 8.0 U1.

- A vSAN cluster can mount a maximum of 5 remote vSAN datastores with vSAN 8.0 U1.

- A vSAN Datastore can be mounted by up to 64 vSAN hosts with 7.0 U1, and 128 hosts with 7.0 U2.

  - This includes the “local hosts” for that vSAN Datastore.

- 10Gbps NICs are the minimum required for hosts.

- 25Gbps NICs are recommended for hosts.

- Use of vDS and Network IO Control is recommended.

- L2 and L3 connectivity are both supported.

- RDMA is not supported.

- IPv6 needs to be enabled on the hosts; otherwise, you may hit the following error while mounting a remote datastore: “Failed to run the remote datastore mount pre-checks”.

- Network Load Balancing: LACP, Load-based teaming, and active/standby are all supported.

- Network Load Balancing: dual VMkernel (air-gapped) configurations are explicitly not supported.

- Keep the number of hops between clusters at a minimum.

- Data-in-transit encryption is not supported; data-at-rest encryption is supported!

- VMs cannot span datastores. In other words, you cannot store the first VMDK of a VM locally and the second VMDK of the same VM remotely.

- Remote provisioning (on a mounted remote vSAN datastore) of vSAN File Shares, iSCSI volumes, and/or CNS persistent volumes is not supported!

- It is supported to mount an All-Flash datastore from a Hybrid cluster, and the other way around.

  - In other words, from a storage point of view, you can provision VMs on a remote datastore with capabilities they would not have locally!
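If you are planning a larger deployment, the numeric limits in this list lend themselves to a quick pre-flight check. The following is a minimal Python sketch, not a VMware tool: the `Cluster` record and `check_plan()` function are hypothetical, the limits are hard-coded from the list above (cluster counts as stated for 8.0 U1, the 128-host limit as stated for 7.0 U2), and nothing is queried from vCenter Server or vSAN. It checks one server cluster's datastore against the client-cluster, host-count, remote-mount, and NIC-speed guidance.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Limits hard-coded from the considerations list (assumed values for illustration,
# not read from any vSAN API).
MAX_CLIENT_CLUSTERS_PER_DATASTORE = 10  # vSAN 8.0 U1
MAX_REMOTE_DATASTORES_PER_CLUSTER = 5   # vSAN 8.0 U1
MAX_HOSTS_PER_DATASTORE = 128           # vSAN 7.0 U2, includes the local hosts
MIN_NIC_GBPS = 10
RECOMMENDED_NIC_GBPS = 25


@dataclass
class Cluster:
    """Hypothetical planning record for one cluster (not a vSphere API object)."""
    name: str
    hosts: int
    nic_gbps: int
    # Names of the server clusters whose vSAN datastores this cluster mounts.
    mounts: list[str] = field(default_factory=list)


def check_plan(server: Cluster, clients: list[Cluster]) -> list[str]:
    """Return findings for the server's datastore; an empty list means no issues found."""
    findings = []
    mounting = [c for c in clients if server.name in c.mounts]

    if len(mounting) > MAX_CLIENT_CLUSTERS_PER_DATASTORE:
        findings.append(
            f"ERROR {server.name}: mounted by {len(mounting)} client clusters "
            f"(max {MAX_CLIENT_CLUSTERS_PER_DATASTORE})"
        )

    # The host limit counts the server cluster's own (local) hosts as well.
    total_hosts = server.hosts + sum(c.hosts for c in mounting)
    if total_hosts > MAX_HOSTS_PER_DATASTORE:
        findings.append(
            f"ERROR {server.name}: {total_hosts} hosts access the datastore "
            f"(max {MAX_HOSTS_PER_DATASTORE})"
        )

    for c in mounting:
        if len(c.mounts) > MAX_REMOTE_DATASTORES_PER_CLUSTER:
            findings.append(
                f"ERROR {c.name}: mounts {len(c.mounts)} remote datastores "
                f"(max {MAX_REMOTE_DATASTORES_PER_CLUSTER})"
            )

    # NIC guidance applies to every host involved: 10Gbps minimum, 25Gbps recommended.
    for c in [server, *mounting]:
        if c.nic_gbps < MIN_NIC_GBPS:
            findings.append(f"ERROR {c.name}: {c.nic_gbps}Gbps NICs (min {MIN_NIC_GBPS})")
        elif c.nic_gbps < RECOMMENDED_NIC_GBPS:
            findings.append(
                f"WARN {c.name}: {c.nic_gbps}Gbps NICs (recommended {RECOMMENDED_NIC_GBPS})"
            )

    return findings


all_flash = Cluster("all-flash", hosts=8, nic_gbps=25)
hybrid = Cluster("hybrid", hosts=6, nic_gbps=10, mounts=["all-flash"])
for finding in check_plan(all_flash, [hybrid]):
    print(finding)  # WARN hybrid: 10Gbps NICs (recommended 25)
```

The sketch only covers requirements that are simple numbers; items such as IPv6, RDMA, encryption, and VMs spanning datastores are deliberately left out.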

One last thing I want to mention is HA, as this works differently than in a “normal” vSAN cluster. When the connection between the local and the remote cluster fails and a VM is impacted as a result, this triggers an APD (All Paths Down) response. The APD is declared after 60 seconds, after which the APD response (a restart) is triggered automatically 180 seconds later. Mind that this differs from the APD response with traditional storage, where it takes 140 seconds before the APD is declared. Note that this does mean you need to have VMCP, aka “Datastore with APD”, configured in HA.
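The timing above boils down to simple addition. The Python sketch below (the constant and function names are hypothetical) adds the APD declaration time to the response delay, reading the 180 seconds as counted from the moment the APD is declared. The post only gives the 140-second declaration time for traditional storage; reusing the same 180-second response delay for that case is an assumption made purely for comparison.

```python
# Timings from the HA paragraph. APD_RESPONSE_DELAY_S is stated for HCI Mesh;
# applying it to traditional storage as well is an assumption for comparison only.
HCI_MESH_APD_DECLARED_S = 60
TRADITIONAL_APD_DECLARED_S = 140
APD_RESPONSE_DELAY_S = 180


def seconds_until_restart(apd_declared_s: int, response_delay_s: int = APD_RESPONSE_DELAY_S) -> int:
    """Time from losing the connection until HA triggers the VM restart."""
    return apd_declared_s + response_delay_s


print(seconds_until_restart(HCI_MESH_APD_DECLARED_S))     # 240 (4 minutes)
print(seconds_until_restart(TRADITIONAL_APD_DECLARED_S))  # 320, under the stated assumption
```

In other words, with HCI Mesh you should expect roughly four minutes between losing the connection to the remote cluster and HA restarting the impacted VM.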

Hope that helps. I will be updating the page when I find new considerations.

Correction: is this VMCP or VCMP?

My bad, typo. VMCP of course.

Hey Duncan, thanks for the write-up, really really useful. I had a question regarding vCenter and vSAN HCI Mesh: do the local and remote clusters need to be in the same VC, can they be separate within the same Enhanced Linked Mode domain, or can they be completely separate? Also, is there any guidance on NSX-T Segments for networking (either converged VDS or N-VDS)? Thanks!

Both need to be managed by the same vCenter Server; linked mode is not supported. I have seen no guidance around NSX so far.