As most of you have seen from the explosion of tweets, Virtual SAN 6.5 was just announced. Some may wonder how 6.5 can be announced so soon after the beta announcement. Well, this is not the same release. This release has a couple of key new features, so let's look at those:

- Virtual SAN iSCSI Service

- 2-Node Direct Connect

- Witness Traffic Separation for ROBO

- 512e drive support

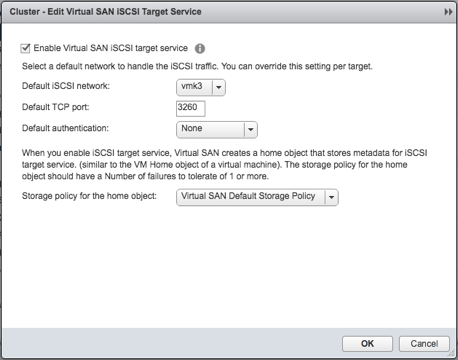

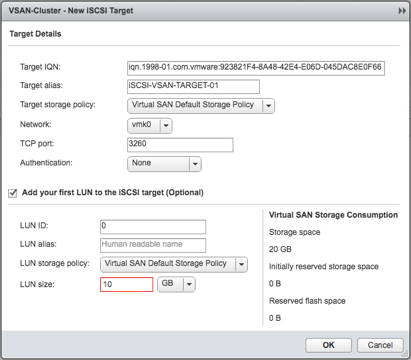

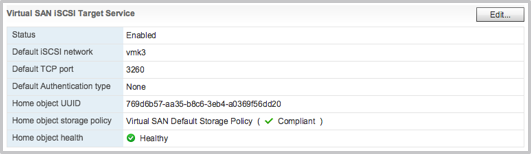

Let's start with the biggest feature of this release, at least in my opinion: Virtual SAN iSCSI Service. This provides what you would think it provides: the ability to create iSCSI targets and LUNs and expose those to the outside world. These LUNs, by the way, are just VSAN objects, and these objects have a storage policy assigned to them. This means that you get iSCSI LUNs with the ability to change performance and/or availability on the fly. All of it through the interface you are familiar with, the Web Client. Let me show you how to enable it:

- Enable the iSCSI Target Service

- Create a target, and a LUN in the same interface if desired

- Done

How easy is that? Note that there are some restrictions in terms of use cases. It is primarily targeting physical workloads and Oracle RAC. It is not intended for connecting to other vSphere clusters, for instance. Also, you can have at most 1024 LUNs and 128 targets per cluster. The LUN capacity limit is 62TB.
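To make those limits concrete, here is a minimal sketch of a sanity check for a planned iSCSI layout. The function name `check_iscsi_plan` and its arguments are hypothetical and not part of any VMware tooling; only the three limits come from the text above, and the check handles whole numbers only.

```shell
#!/bin/sh
# Limits quoted above for the Virtual SAN 6.5 iSCSI service.
MAX_TARGETS=128   # targets per cluster
MAX_LUNS=1024     # LUNs per cluster
MAX_LUN_TB=62     # capacity per LUN, in TB

# Hypothetical helper: check_iscsi_plan <targets> <luns> <largest-lun-tb>
# Prints "ok" if the plan fits, otherwise names the first limit exceeded.
check_iscsi_plan() {
    if [ "$1" -gt "$MAX_TARGETS" ]; then
        echo "too many targets: $1 > $MAX_TARGETS"
        return 1
    fi
    if [ "$2" -gt "$MAX_LUNS" ]; then
        echo "too many LUNs: $2 > $MAX_LUNS"
        return 1
    fi
    if [ "$3" -gt "$MAX_LUN_TB" ]; then
        echo "LUN too large: ${3}TB > ${MAX_LUN_TB}TB"
        return 1
    fi
    echo "ok"
}

# Example: 16 targets, 200 LUNs, largest LUN 10TB
check_iscsi_plan 16 200 10
```

A plan of 16 targets, 200 LUNs and a largest LUN of 10TB fits comfortably; a single 63TB LUN would be rejected.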

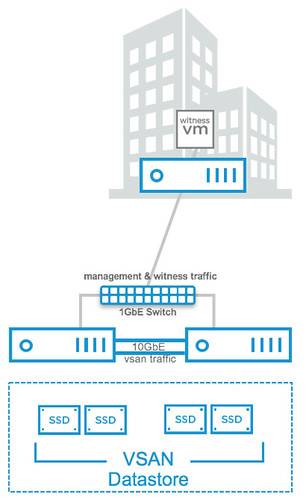

Next up on the list is 2-node direct connect. What does this mean? Well, it basically means you can now cross-connect two VSAN hosts with a simple Ethernet cable, as shown in the diagram on the right. The big benefit, of course, is that you can equip your hosts with 10GbE NICs and get 10GbE performance for your VSAN traffic (and vMotion, for instance) without incurring the cost of a 10GbE switch.

This can make a huge difference when it comes to total cost of ownership. In order to achieve this, though, you will also need to set up a separate VMkernel interface for witness traffic. And this is the next feature I wanted to mention briefly. For 2-node configurations it will be possible as of VSAN 6.5 to separate witness traffic by tagging a VMkernel interface as a designated witness interface. There’s a simple “esxcli” command for it. Note that `<X>` needs to be replaced with the number of the VMkernel interface:

`esxcli vsan network ip set -i vmk<X> -T=witness`
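If you tag more than one host, a small dry-run wrapper can help avoid typos in the interface number. This is just a sketch: `witness_tag_cmd` is a hypothetical helper name, and it only prints the command quoted above instead of running it on a host.

```shell
#!/bin/sh
# Hypothetical helper: prints the witness-tagging command for a given
# VMkernel interface number. It does not execute anything on the host.
witness_tag_cmd() {
    case "$1" in
        ''|*[!0-9]*)
            # Reject empty or non-numeric input
            echo "usage: witness_tag_cmd <vmk-number>" >&2
            return 1
            ;;
    esac
    echo "esxcli vsan network ip set -i vmk$1 -T=witness"
}

# Example: print the command for vmk1
witness_tag_cmd 1
```

After running the printed command on the host, `esxcli vsan network list` should let you check which traffic type each VMkernel interface carries.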

Then there is the support for 512e drives. Note that 4K native is not supported at this point. Not sure what more to say about it than that… It speaks for itself.

Oh and one more thing… All-Flash has been moved down from a licensing perspective to “Standard”. This means anyone can now deploy an all-flash configuration at no additional licensing cost. Considering how fast the world is moving to all-flash, I think this only makes sense. Note though that data services like dedupe/compression/RAID-5/RAID-6 are still part of VSAN Advanced. Nevertheless, I am very happy about this positive licensing change!

Hi Duncan,

Do the physical servers connected to VSAN iSCSI devices require a VSAN license?

No, they do not. They just connect over iSCSI; no additional licenses are needed for that.

Hi Duncan,

Is it now possible to configure erasure coding when using multiple fault domains?

No, that feature was announced for the upcoming beta…

Hi Duncan,

Do you think these volumes can be mapped directly to a VM, like an RDM?

That could be useful when you have a legacy Microsoft failover cluster that needs shared disks.

Not like an RDM, but you can create an in-guest iSCSI-based solution. So you connect to the iSCSI LUN from within Windows, which then becomes a new block device for your VM and can be used as a quorum. This was one of the key reasons to develop this feature.

Thanks Duncan!

Is it possible to test it in the Hands-on Labs?

No NFS Love?

What use case do you have in mind? It is not in the current release…

Hi Duncan,

Why can you not use the iSCSI target with vSphere – this seemed like a great use case to me (i.e. you had some legacy hosts or hosts with minimal storage requirements)?

Many thanks

Mark

It is not the intended use case for this feature. This may or may not change over time depending on feedback and requests.

I assume it would work with vSphere, but it would not be supported – is that correct?

I can’t say at this point, these details will be made available at the time of release.

Does ROBO now support all flash?

Yes it does, and also a new ROBO Advanced Pack will be made available for those who want to use dedupe and compression.

Will vSphere 6.5 include a dedicated TCP/IP stack for the Virtual SAN traffic?

Makes sense to configure the direct connection as jumbo frames (MTU 9000).

Hi, Thanks for sharing.

Do you know the release date?

So will VSAN be supported in the Essentials Plus license? As this would make for a killer SMB setup.

Just want to verify that this does not connect iSCSI storage, just allows iSCSI LUNs to be created and shared to outside devices… sort of a scale-out model I would guess?

Correct, it is an option to create iSCSI LUNs from direct-attached storage that is managed by vSAN.

Thanks!

Hello Duncan,

Can we upgrade the 2-node direct connect configuration to more nodes without reinstallation and downtime?

Philippe

Hi,

Quick question about your great post.

For a ROBO deployment, does the link between the ROBO cluster and the witness appliance need to be 10G, or can I go with 1G?

My concern was about performance impact.

Thanks.