About 18 months ago I was asked to be part of a very small team to build a prototype. Back then it was one developer (Dave Shanley) who did all the development, including the user experience aspect. I worked on the architectural aspects, and we had an executive sponsor (Mornay van der Walt). After a couple of months we had something to show internally. In March of 2013, after showing the first prototype, we received the green light and the team expanded quickly. A small team within the SDDC Division’s Emerging Solutions Group was tasked with building something completely new, to enter a market where VMware had never gone before, to do something that would surprise many. The team was given the freedom to operate somewhat like a startup within VMware: run fast and hard, prototype, iterate, pivot when needed, with the goal of delivering a game-changing product by VMworld 2014. Today I have the pleasure to introduce this project to the world: VMware EVO:RAIL™.

EVO:RAIL – What’s in a name?

EVO represents a new family of ‘Evolutionary’ Hyper-Converged Infrastructure offerings from VMware. RAIL represents the first product within the EVO family that will ship during the second half of 2014. More on the meaning of RAIL towards the end of the post.

The Speculation is finally over!

Over the past 6-plus months there was a lot of speculation over Project Mystic and Project MARVIN. I’ve been wanting to write about this for so long now, but unfortunately couldn’t talk about it. The speculation is finally over with the announcement of EVO:RAIL, and you can expect multiple articles on this topic here in the upcoming weeks! So just to be clear: MARVIN = Mystic = EVO:RAIL

What is EVO:RAIL?

Simply put, EVO:RAIL is a Hyper-Converged Infrastructure Appliance (HCIA) offered by VMware and qualified EVO:RAIL partners, which include Dell, EMC, Fujitsu, Inspur, Net One Systems and SuperMicro. This impressive list of partners will ensure EVO:RAIL has a global market reach from day one, as well as the assurance of the world-class customer support and services these partners are capable of providing. For those who are not familiar with hyper-converged infrastructure offerings: they combine Compute, Network and Storage resources into a single unit of deployment. In the case of EVO:RAIL this is a 2U unit which contains 4 independent physical nodes.

But why a different type of hardware platform? What will EVO:RAIL bring to you as a customer? In my opinion EVO:RAIL has several major advantages over traditional infrastructure:

- Software-Defined

- Simplicity

- Highly Resilient

- Customer Choice

Software-Defined

EVO:RAIL is a scalable Software-Defined Data Center (SDDC) building block that delivers compute, networking, storage, and management to empower private/hybrid-cloud, end-user computing, test/dev, remote and branch office environments, and small virtual private clouds. Building on the proven technology of VMware vSphere®, vCenter Server™, and VMware Virtual SAN™, EVO:RAIL delivers the first hyper-converged infrastructure appliance 100% powered by VMware software.

Simplicity Transformed

EVO:RAIL shortens time to value: the first VM can be up and running within minutes once the appliance is racked, cabled and powered on. VM creation is radically simplified via the EVO:RAIL management user interface, which offers easy VM deployment, one-click non-disruptive patches and upgrades, and simplified management and scale-out.

Highly Resilient by Design

Resilient appliance design starting with four independent hosts and a distributed Virtual SAN datastore ensures zero VM downtime during planned maintenance or during disk, network, or host failures.

Customer Choice

EVO:RAIL is delivered as a complete appliance solution with hardware, software, and support through qualified EVO:RAIL partners; customers choose an EVO:RAIL appliance from their preferred EVO:RAIL partner. This means a single point of contact to buy new equipment (single SKU includes all components), and a single point of contact for support.

So what will each appliance provide you with in terms of hardware resources? Each EVO:RAIL appliance has four independent nodes with dedicated compute, network, and storage resources and dual, redundant power supplies.

Each of the four EVO:RAIL nodes has (at a minimum):

- Two Intel E5-2620 v2 six-core CPUs

- 192GB of memory

- One SLC SATADOM or SAS HDD as the ESXi™ boot device

- Three SAS 10K RPM 1.2TB HDD for the VMware Virtual SAN™ datastore

- One 400GB MLC enterprise-grade SSD for read/write cache

- One Virtual SAN-certified pass-through disk controller

- Two 10GbE NIC ports (configured for either 10GBase-T or SFP+ connections)

- One 1GbE IPMI port for remote (out-of-band) management

All of this leads to a combined total of at least 100GHz of CPU resources, 768GB of memory resources, 14.4TB of storage capacity and 1.6TB of flash capacity used by Virtual SAN for storage acceleration services. Never seen one of these boxes before? Well, this is what they tend to look like; in this example you see a SuperMicro Twin configuration. As you can see from the rear view, there are 4 individual nodes with 2 power supplies, and in the front you see all the disks, which are connected in groups of 6 to each of the nodes!
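The combined totals follow directly from the per-node minimums listed above. Here is a minimal sketch of that arithmetic. The 2.1GHz base clock is an assumption taken from Intel's published specification for the E5-2620 v2 (it is not stated in this post), and the function and constant names are purely illustrative.

```python
# Per-node minimums from the hardware list above.
NODES = 4
CPUS_PER_NODE = 2
CORES_PER_CPU = 6
BASE_CLOCK_GHZ = 2.1        # assumption: Intel's published base clock for the E5-2620 v2
MEMORY_GB_PER_NODE = 192
HDDS_PER_NODE = 3
HDD_TB = 1.2
SSD_GB_PER_NODE = 400


def appliance_totals(nodes=NODES):
    """Return the aggregate minimum resources of one appliance."""
    return {
        "cpu_ghz": round(nodes * CPUS_PER_NODE * CORES_PER_CPU * BASE_CLOCK_GHZ, 1),
        "memory_gb": nodes * MEMORY_GB_PER_NODE,
        "hdd_tb": round(nodes * HDDS_PER_NODE * HDD_TB, 1),
        "flash_tb": round(nodes * SSD_GB_PER_NODE / 1000, 1),
    }


totals = appliance_totals()
print(totals)
```

With these inputs the sketch yields roughly 100.8GHz, 768GB, 14.4TB and 1.6TB, which lines up with the figures quoted above.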

For those of you who read this far and are still wondering why RAIL, and whether it is an acronym, the short answer is “No, not an acronym”. The RAIL in EVO:RAIL simply represents the ‘rail mount’ attached to the 2U/4-node server platform that allows it to slide easily into a datacenter rack. One RAIL for one EVO:RAIL HCIA, which represents the smallest unit of measure with respect to compute, network, storage and management within the EVO product family.

By now you are probably all anxious to know what EVO:RAIL looks like. Before I show you, one more thing to know about EVO:RAIL… the user interface uses HTML-5! So it works on any device, nice right!

If you prefer a video over screenshots, make sure to visit the EVO:RAIL product page on vmware.com!

What do we do to get it up and running? First of all rack the appliance, cable it up and power it on! Next, hit up the management interface on https://<ip-address>:7443
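If you script your rack-and-stack checklist, the management address can be derived from the appliance IP. This is only a small sketch: the port 7443 comes from the URL above, while the helper name and the example address are made up for illustration.

```python
import ipaddress

EVO_RAIL_PORT = 7443  # port taken from the URL quoted in the post


def management_url(address, port=EVO_RAIL_PORT):
    """Build the management URL; raises ValueError if the address is not valid IPv4."""
    ip = ipaddress.IPv4Address(address)
    return f"https://{ip}:{port}"


# 192.168.10.200 is a placeholder, not a documented default.
print(management_url("192.168.10.200"))
```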

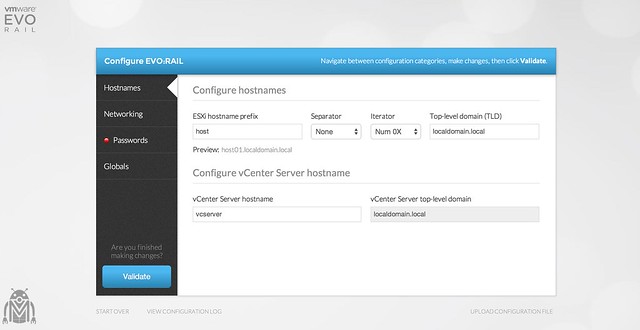

Next you start entering the details of your environment; look at the following screenshot to get an idea of how easy it is! You can even define your own naming scheme and it will automatically apply that to joining hosts (both the current set and any additional appliances added in the future).

Besides a naming scheme, EVO:RAIL allows you to configure the following:

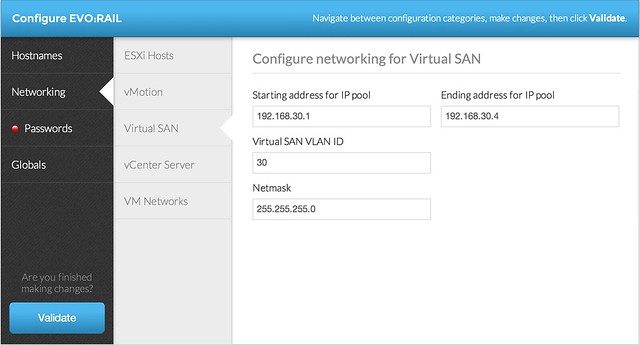

- IP addresses for Management, vMotion, Virtual SAN (by specifying a pool per traffic type, see screenshot below)

- vCenter Server and ESXi passwords

- Globals like: Time Zone, NTP Servers, DNS Servers, Centralized Logging (or configure Log Insight), Proxy

Believe me when I say that it does not get easier than this. Specify your IP ranges and globals once and never think about it any more.
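To make the idea of "a naming scheme plus one IP pool per traffic type" concrete, here is a rough sketch of how such input could be expanded into per-host settings. This is not EVO:RAIL's actual implementation or configuration format. The function names, the hostname pattern and the address ranges are all hypothetical.

```python
import ipaddress


def expand_pool(first, last):
    """Expand an inclusive IPv4 range into a list of address strings."""
    start = ipaddress.IPv4Address(first)
    end = ipaddress.IPv4Address(last)
    if end < start:
        raise ValueError(f"pool end {last} is before pool start {first}")
    return [str(ipaddress.IPv4Address(n)) for n in range(int(start), int(end) + 1)]


def plan_hosts(name_pattern, pools, host_count=4):
    """Assign a hostname and one address per traffic type to each host."""
    for traffic, addresses in pools.items():
        if len(addresses) < host_count:
            raise ValueError(f"{traffic} pool has fewer than {host_count} addresses")
    plan = []
    for i in range(host_count):
        host = {"hostname": name_pattern.format(index=i + 1)}
        for traffic, addresses in pools.items():
            host[traffic] = addresses[i]
        plan.append(host)
    return plan


# Hypothetical example values for a single four-node appliance.
pools = {
    "management": expand_pool("10.0.0.11", "10.0.0.14"),
    "vmotion": expand_pool("10.0.1.11", "10.0.1.14"),
    "vsan": expand_pool("10.0.2.11", "10.0.2.14"),
}
plan = plan_hosts("esx-{index:02d}.example.local", pools)
for host in plan:
    print(host)
```

In this sketch, pools larger than four addresses would leave room for hosts from appliances added later, which is the "specify once" idea described above.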

When you are done, EVO:RAIL will validate the configuration for you and then, when you are ready, apply it. Along the way it will show the current stage and how far along the configuration is.

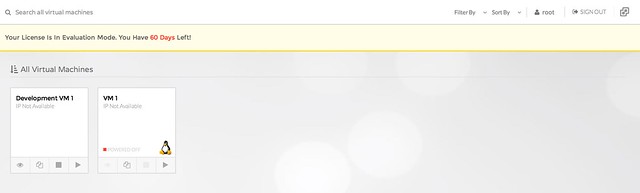

When it is done it will point you to the management interface and from there you can start deploying workloads. Just to be clear, the EVO:RAIL interface is a simplified interface. If for any reason at all you feel the interface does not bring you the functionality required you can switch to the vSphere Web Client as that is fully supported!

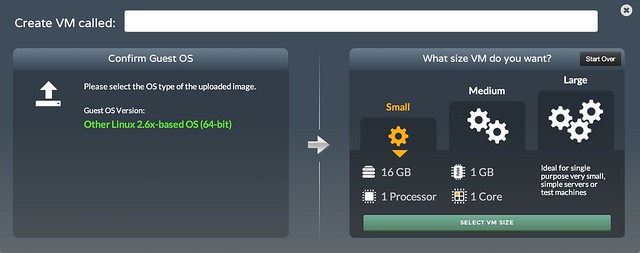

The interface will allow you to manage virtual machines in an easy way. It has pre-defined virtual machine sizes (small / medium / large) and even security profiles that can be applied to the virtual machine configuration!

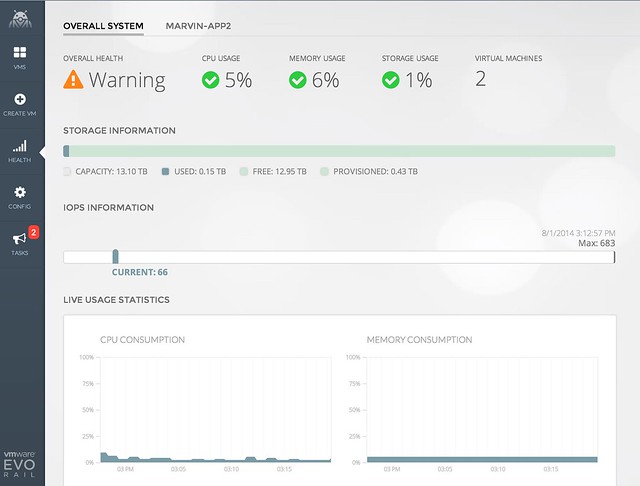

Of course, EVO:RAIL provides you with monitoring capabilities, in the same easy fashion as everything else: a simple overview of what is there, what the usage is and what the state is.

With that I think it is time to conclude this already lengthy blog post. I will, however, follow up on this shortly with a series that looks a bit more in-depth at some of the details around EVO:RAIL, together with a couple of core team members. I think it is fair to say that EVO:RAIL is an exciting development in the space of datacenter infrastructure and more specifically in the world of hyper-convergence! If you are at VMworld and want to know more, visit the EVO:RAIL booth, the EVO:RAIL pavilion or one of the following sessions:

- Software-Defined Data Center through Hyper-Converged Infrastructure (SDDC4245) Monday 25th August, 2:00 PM – 3:00 PM – Moscone South, Gateway 103 with Mornay van der Walt and Chris Wolf

- VMware and Hyper-Converged Infrastructure (SDDC2095) Monday 25th of August, 4:00 PM – 5:00 PM – Moscone West, Room 3001 with Bryan Evans

- VMware EVO:RAIL Technical Deepdive (SDDC1337) Tuesday 26th of August – 11:00 AM – Marriott, Yerba Buena Level, Salon 1 with Dave Shanley and Duncan Epping

- VMware Customers Share Experiences and Requirements for Hyper-Converged (SDDC1818) – Tuesday 26th of August, 12:30 PM – 1:30 PM – Moscone West, Room 3014 with Bryan Evans and Michael McDonough

- VMware EVO:RAIL Technical Deepdive (SDDC1337) Wednesday 27th of August – 11:30 AM – Marriott, Yerba Buena Level, Salon 7 with Dave Shanley and Duncan Epping

Isn’t that a bit similar to what Nutanix is already offering? Would love to see a deeper description of how this product differentiates itself from the existing competition in terms of compatibility, integration, performance and/or other aspects.

Great blog, keep it up! 🙂

Very good article as always Duncan. Is there any place where the cost ($) of this solution can be found to be compared with other hyper-converged competitors?

The price point is determined by the OEM partners. I am guessing that it will show up as soon as they have their websites updated etc.

That will be the interesting part for sure. Great job at the ask the experts session on Day 1. I really enjoyed it.

Great post and a great product. This is almost a N.U.C. of VMware.

Questions

– As always: is the host licensing part of the SKU? I.e., does a single order code really get you up and running?

– I take it vSphere licensing is kept separate for ease of configuration?

ALL licenses required are included! Single SKU containing:

vCenter + ESXi + Log Insight + VSAN + EVO + ALL HARDWARE + ALL Support etc!

I didn’t realize that. Now that would be nice. Simplified licensing for once 🙂

Great Post! This is going to give the big competition to Nutanix and Simplivity

Not yet. It is a good direction, however the product itself needs more time (maybe the next release or the release after that) to become a real competitor to Nutanix.

BTW, Duncan, congrats on launching a new product and welcome to the hyper-converged family 🙂

I am pretty sure, Artur, that that is not up to you to decide… but up to our customers 🙂

Cannot agree more 🙂

But I thought he was speaking as a “customer”. Gee what an ego.

Is there something wrong with the screenshot for the pre-defined virtual machine screen?

I can see all screenshots fine?

Oh. I just thought

Small = ideal for simple servers and test machines

Large = 16GB + 1 Processor

Not the other way around?

Hi,

Since this is sold under a single SKU as a single block on pre-defined hardware to ensure functionality during installation and long-term evolution, I was wondering if the EVO:RAIL engine will also manage firmware upgrades as part of the upgrade process (assuming the right level of firmware will be present on day 1)? Firmware and vSphere versions are becoming more and more critical for some vendors; HP, for example, provides cookbooks for matching hardware against vSphere versions. I was wondering if this is part of the upgrade process today and, if not, whether it is planned for the future or has been considered. Maybe my question is not relevant to this platform. Also, HP may not be the best reference these days, as we have had many issues with this lately.

Thanks!

It is not part of the current version, however we do understand that this is important. I am not in a position, however, to make public roadmap statements on my website. If you would like to know more about the roadmap, I recommend contacting the local VMware SE team for a session under NDA.

Nice… Now it explains why your blog has been awfully quiet… I can’t help but compare it to Nutanix, obviously, like everyone else… The HTML web interface looks impressive and well thought out. If it is going to compete with the best and be marketed for VDI, VSAN will need good dedupe and compression features, which I would assume are at the top of the list the VSAN team is working on… Very excited about this form factor; congrats on a very good project. I am interested in the detailed licensing…

Great Article and good time to include such an offering in the portfolio, considering the success of companies like nutanix.

I have looked into Nutanix as well as these quad SuperMicro units, but it seems odd to me that people would go with quad systems as opposed to a dual system.

The quad systems can only have 192GB RAM per node.

We know VMware typically has high demand on RAM, so more RAM and less CPU seems the way to go.

I have looked at SuperMicro Twin units which allow more RAM. Yes this would be 2U to have 4 nodes but the RAM can go to 512GB per node. 1TB RAM per 2U.

Thanks for your site, it is great.

Not sure which systems you have looked at. There are many different “TWIN” systems by Supermicro. Some take up to 1TB of memory.

Anyway, 3 is the bare minimum for VSAN. Doing just 3 hosts would put VMs immediately at risk when 1 host fails. We wanted to provide additional “insurance” and as such made 4 the minimum for EVO:RAIL.

@ANeil – the min. recommended cluster size for VSAN is 4 nodes so that could be the reason.

Hello @Enrico. only 3 nodes are required for VSAN.

http://kb.vmware.com/selfservice/microsites/search.do?language=en_US&cmd=displayKC&externalId=2058424

Host requirements:

A minimum of three ESXi 5.5 hosts, all contributing local disks (1 SSD and 1 HDD).

Each host must have a minimum of 6 GB of RAM.

The host must be managed by vCenter Server 5.5 and configured as a Virtual SAN cluster.

See my comment above

One SLC SATADOM or SAS HDD as the ESXi™ boot device

Three SAS 10K RPM 1.2TB HDD for the VMware Virtual SAN™ datastore

One 400GB MLC enterprise-grade SSD for read/write cache

How does this result in 13TB usable (seen in other posts)?

It is 13TB RAW. Usable will depend on your FTT defined.
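As a rough illustration of raw versus usable capacity: the sketch below assumes Virtual SAN mirroring, where a "failures to tolerate" (FTT) value of n keeps n + 1 copies of the data and needs at least 2n + 1 hosts. It ignores witness components, slack space and other overhead, so treat the results as upper bounds. Reading "13TB" as 14.4TB expressed in binary units (TiB) is one plausible interpretation, not something confirmed in this thread. The function names are illustrative.

```python
RAW_TB = 4 * 3 * 1.2  # four nodes, three 1.2TB HDDs each (decimal terabytes)


def tb_to_tib(tb):
    """Convert decimal terabytes (10^12 bytes) to tebibytes (2^40 bytes)."""
    return tb * 10**12 / 2**40


def usable_with_mirroring(raw, ftt):
    """Upper bound on usable capacity when every object keeps ftt + 1 copies."""
    if ftt < 0:
        raise ValueError("ftt must be zero or greater")
    return raw / (ftt + 1)


def min_hosts(ftt):
    """Minimum number of hosts needed to tolerate ftt failures with mirroring."""
    return 2 * ftt + 1


print(round(RAW_TB, 1), "TB raw is about", round(tb_to_tib(RAW_TB), 1), "TiB")
for ftt in (0, 1):
    print("FTT =", ftt, "->", round(usable_with_mirroring(RAW_TB, ftt), 1),
          "TB usable at most, needs", min_hosts(ftt), "host(s)")
```

Under these assumptions, FTT=1 gives at most about 7.2TB usable and requires three hosts, which matches the three-host minimum mentioned earlier in the comments.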

Very Nice…And here we are in hyper-converged market…Although I think scale out option should be 4+ to suit large enterprise.