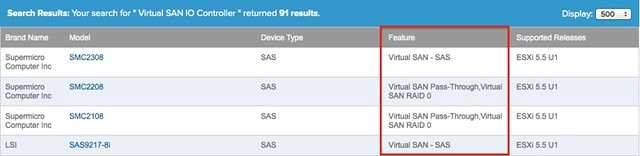

This was completely unclear to me as well, so I started a thread on it on our internal social platform and figured I would share the outcome with you. When you go through the exercise of selecting a disk controller for VSAN using the VMware Compatibility Guide (vmwa.re/vsanhcl), you will see that there are four “features” listed. These four features describe how you can use your disk controller to manage the disks in your host. This is important, as selecting the wrong disk controller could lead to unwanted side effects.

Let me list the four features and explain what they actually mean:

- Virtual SAN – SAS

- Virtual SAN – SATA

- Virtual SAN Pass-Through

- Virtual SAN RAID 0

Virtual SAN – SAS / SATA and Pass-Through are essentially the same thing. Not entirely, as they are implemented in different ways, but the result is the same: the disks are served straight up to the hypervisor. This functionality literally passes the disk through to ESXi and avoids the need to create a RAID set or volume for your disks. This is by far the easiest way to pull your disks into a VSAN datastore, if you ask me.

Virtual SAN RAID 0 means that in order to use the disks you will need to create a single-disk RAID 0 set for each disk in your system. The downside of this approach is that things like hot-swap will be impossible, as your disk (ID) is bound to the RAID 0 set. There is also an upside: many of these disk controllers support things like encryption of data at rest, and if your disks support this as well you could potentially use it. It should be noted, however, that as far as I know this functionality has not been tested (extensively) today, and support could be an issue. Still, I can see why one would want to buy a controller that offers this functionality to be future-proof.

Then there is another aspect that I have been asked about a couple of times already: the performance capability of the controller. As far as I have seen, the HCL today consists of 3Gbps and 6Gbps controllers. In most cases there is little to no cost difference, so if supported I would always recommend going with the faster controller. But there is another thing here that is often overlooked, and that is the queue depth. Before you pull the trigger and decide to buy controller-A over controller-B, you may want to verify the queue depth of both of them. In some cases, especially with the cheaper disk controllers, the queue depth is low (32), where others offer 256 and higher. Especially when you are building an environment where a lot of IO is expected, these are things to take into consideration. You wouldn’t want to buy a screaming fast SSD and then find out your bottleneck is the queue depth of your disk controller, right?
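To get a feel for why queue depth matters, here is a rough back-of-the-envelope sketch based on Little's Law (throughput = outstanding IOs / latency). The function name and the 0.5 ms latency figure are my own assumptions for illustration, not measured values or anything taken from the HCL, and real-world numbers depend on many other factors.

```python
def max_iops(queue_depth: int, latency_ms: float) -> float:
    """Rough IOPS ceiling from Little's Law: outstanding IOs divided by latency."""
    if queue_depth <= 0 or latency_ms <= 0:
        raise ValueError("queue_depth and latency_ms must be positive")
    # Convert per-IO latency from milliseconds to IOs per second per queue slot.
    return queue_depth * 1000.0 / latency_ms


# Assumed average per-IO service latency in milliseconds (illustrative only).
ASSUMED_LATENCY_MS = 0.5

for depth in (32, 256):
    ceiling = max_iops(depth, ASSUMED_LATENCY_MS)
    print(f"queue depth {depth:>3}: at most {ceiling:,.0f} IOPS")
```

Under that assumed latency, a queue depth of 32 caps the controller at 64,000 IOPS while 256 allows up to 512,000, which shows how a shallow queue can become the bottleneck in front of a fast SSD.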

<update>A very good point made by Tom Fenton: if you have selected a controller and are at the point of rolling out VSAN, make sure you validate the firmware and the driver used. If you click on the “Model” you will be able to see those details. This also applies to SSDs and HDDs!</update>
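As a minimal sketch of that validation step, the snippet below compares an installed firmware version against the version listed for the controller. The function names and the two version strings are hypothetical placeholders; take the real values from the HCL entry for your model and from your own hardware. I am assuming an exact match is required, which may be stricter or looser than what a given listing actually states.

```python
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn a dotted version such as '18.00.00.00' into (18, 0, 0, 0)."""
    return tuple(int(part) for part in version.strip().split("."))


def firmware_is_supported(installed: str, supported: str) -> bool:
    """Return True only when the installed firmware equals the listed version."""
    return parse_version(installed) == parse_version(supported)


# Hypothetical values for illustration; look up the real ones before rollout.
SUPPORTED_FIRMWARE = "18.00.00.00"
INSTALLED_FIRMWARE = "14.00.00.00"

if firmware_is_supported(INSTALLED_FIRMWARE, SUPPORTED_FIRMWARE):
    print("Firmware matches the listed version.")
else:
    print(
        f"Firmware {INSTALLED_FIRMWARE} differs from listed "
        f"{SUPPORTED_FIRMWARE}; update before rollout."
    )
```

The same comparison could be repeated for the driver version and for each SSD and HDD model in the host.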

I hope that helps,

Duncan,

I’m working on a VSAN brick design and wanted to get your input without completely spilling the beans on everything publicly just yet. Are you able to see my email address from this comment?

One also needs to be aware of the firmware on the device.

For example, the LSI SAS2308 is only supported with firmware 18.00.00.00.

Mine is on FW 14; it worked fine with the beta bits, but with the GA release, not so well.

Very valid point, I should have called that out.

FYI, the link to the VSAN HCL looks incorrectly formatted, so it’s not working…

Thanks, fixed it.

Does that mean in Pass-Through mode you still have the ability to hot swap failed disks, provided it’s supported on the server?

Failed magnetic disks: yes.

Duncan,

Can you tell me if the Dell PERC H730 will be added to the VSAN HCL? All the other Dell PERC controllers are listed, but this is their newest one and has only been out about a month or so.

Has there been any testing done regarding the effect of the controller’s onboard memory? E.g. does a controller with 2 GB onboard outperform an identical controller with only 1 GB?