Something that I have seen popping up multiple times now is the discussion around VSAN and spindles for performance. Someone mentioned on the community forums that they were going to buy 20 x 600GB SAS drives for each of the 3 hosts in their VSAN environment. These were 10K RPM SAS disks, which obviously outperform 7200 RPM SATA or NL-SAS drives. I figured I would do some math first:

- Server with 20 x 600GB 10K SAS = $9,369.99 per host

- Server with 3 x 4TB Nearline SAS = $4,026.91 per host

So that is a difference of well over 4,300 dollars. Note that I did not spec out the full server; it was a base model without any additional memory etc., just to illustrate the performance vs. capacity point. Now, as mentioned, the 20 spindles would of course deliver additional performance: after all, you have more spindles and better-performing spindles. So let's do the math on that one, taking some average numbers into account:

- 20 x 10K RPM SAS with 140 IOps each = 2800 IOps

- 3 x 7200 RPM NL-SAS with 80 IOps each = 240 IOps

That is a whopping 2560 IOps difference in total. That does sound like an awful lot, doesn’t it? To a certain extent it is a lot, but will it really matter in the end? Well, the only correct answer here is: it depends.
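The spindle math above is simple multiplication, sketched below with the post's average per-disk figures (140 IOps per 10K SAS disk, 80 IOps per 7200 RPM NL-SAS disk). It assumes every disk is busy and IO is spread evenly, which real workloads rarely do; the function name is just for illustration.

```python
# Naive aggregate IOPS: assumes every spindle is busy and IO is spread evenly.
# Per-disk figures are the post's "average numbers", not measurements.

def aggregate_iops(disk_count: int, iops_per_disk: int) -> int:
    """Total random IOPS a set of identical spindles could deliver in theory."""
    return disk_count * iops_per_disk


sas_10k_iops = aggregate_iops(20, 140)   # 20 x 10K RPM SAS
nl_sas_iops = aggregate_iops(3, 80)      # 3 x 7200 RPM NL-SAS
difference = sas_10k_iops - nl_sas_iops

print(f"20 x 10K SAS : {sas_10k_iops} IOPS")
print(f"3 x NL-SAS   : {nl_sas_iops} IOPS")
print(f"Difference   : {difference} IOPS")
```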

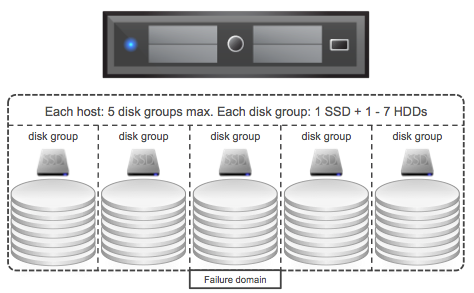

I mean, if we were talking about a regular RAID-based storage system it would be clear straight away… the 20 disks would win for sure. However, we are talking VSAN here, and VSAN leans heavily on SSD for performance: each disk group is fronted by an SSD, and that SSD is used for both read caching (70% of its capacity) and write buffering (30%), as illustrated in the diagram above.
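To make the 70/30 split concrete, here is a small sketch applying it to one SSD. The 400GB size is borrowed from the Intel S3700 mentioned further on; the function and its parameter names are hypothetical and only do the percentage arithmetic described above.

```python
def split_cache(ssd_gb: float, read_fraction: float = 0.7) -> tuple[float, float]:
    """Split one disk group's SSD into read cache and write buffer (70/30 by default)."""
    read_cache = ssd_gb * read_fraction
    write_buffer = ssd_gb - read_cache
    return read_cache, write_buffer


read_gb, write_gb = split_cache(400)   # one 400GB SSD fronting a disk group
print(f"400GB SSD -> {read_gb:.0f}GB read cache, {write_gb:.0f}GB write buffer")
```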

The real question is: what is your expected IO pattern? Will most IO come from read cache? Do you expect a high data change rate, and could de-staging therefore become problematic when backed by just 3 spindles? On top of that, how and when will data be de-staged? If data sits in the write buffer for a while, it could change 3 or 4 times before being de-staged, so only the final version needs to hit the slow spindles. It all depends on your workload, your IO pattern and your particular use case. Looking at the difference in price, it makes sense to ask yourself what $4,300 could buy you.
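The effect of data changing several times while it sits in the write buffer can be illustrated with a toy model. This is explicitly not VSAN's de-staging algorithm (the post does not describe it); it is a hypothetical FIFO write-back buffer where a rewrite of a block that is still buffered costs no spindle IO, and the oldest block is de-staged only when room is needed.

```python
from collections import OrderedDict


def simulate_write_buffer(writes: list[int], buffer_blocks: int) -> tuple[int, int]:
    """Toy write-back buffer (not VSAN's actual algorithm).

    A rewrite of a block that is still buffered is absorbed; a new block evicts
    (de-stages) the oldest buffered block when the buffer is full. Whatever is left
    is flushed at the end. Returns (writes received, writes that reached disk)."""
    buffer = OrderedDict()
    destaged = 0
    for block in writes:
        if block in buffer:
            continue                      # overwrite absorbed in flash
        if len(buffer) >= buffer_blocks:
            buffer.popitem(last=False)    # de-stage the oldest dirty block
            destaged += 1
        buffer[block] = True
    destaged += len(buffer)               # final flush
    return len(writes), destaged


hot = [0, 1, 2, 3] * 4            # 4 blocks, each rewritten 4 times
cold = list(range(16))            # 16 distinct blocks, never rewritten
print(simulate_write_buffer(hot, buffer_blocks=8))    # (16, 4)
print(simulate_write_buffer(cold, buffer_blocks=8))   # (16, 16)
```

Same number of incoming writes in both cases, but the "hot" pattern sends a quarter of them to the spindles, which is why the IO pattern matters more than the raw spindle count.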

Well, for instance, 3 x 400GB Intel S3700 SSDs, capable of delivering 75K read IOps and 35K write IOps (~800 dollars per SSD). That is extra cache: the server with 20 disks would still need its own SSDs as well, and since the rule of thumb is roughly 10% of your disk capacity, you can see what the savings or the performance benefits could be. In other words, you can double up on the cache without any additional cost compared to the 20-disk server. Personally, I would try to balance it a bit: I would go for higher-capacity drives, but probably not all the way up to 4TB. It also depends on the server type you are buying: will it have 2.5″ or 3.5″ drive slots? How many drive slots will you have, and how many disks will you need to hit the capacity requirements? Are there any other requirements? This particular user, for instance, mentioned that he expected extremely high sustained IO and potentially daily full backups; as you can imagine, that could impact the number of spindles desired or required to meet performance expectations.
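A quick sketch of the 10% rule of thumb for both configurations. It assumes "disk capacity" means raw magnetic capacity in decimal GB (4TB = 4000GB), and reuses the 400GB size and ~$800 price quoted above; the helper names are hypothetical.

```python
import math

SSD_GB = 400          # Intel S3700 size mentioned above
SSD_PRICE_USD = 800   # approximate per-SSD price mentioned above


def cache_needed_gb(disk_count: int, disk_gb: int, cache_percent: int = 10) -> int:
    """Rule-of-thumb flash: roughly 10% of the raw magnetic disk capacity."""
    return disk_count * disk_gb * cache_percent // 100


def ssds_for(cache_gb: int, ssd_gb: int = SSD_GB) -> int:
    """Whole SSDs required to provide at least cache_gb of flash."""
    return math.ceil(cache_gb / ssd_gb)


configs = {"20 x 600GB 10K SAS": (20, 600), "3 x 4TB NL-SAS": (3, 4000)}
for name, (count, size_gb) in configs.items():
    cache = cache_needed_gb(count, size_gb)
    n = ssds_for(cache)
    print(f"{name}: ~{cache}GB flash -> {n} x {SSD_GB}GB SSD (~${n * SSD_PRICE_USD})")
```

Both configurations come to 12TB raw, so under this reading they need the same ~1.2TB of flash; the price difference can go toward doubling it instead.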

The question remains, what should you do? To be fair, I cannot answer that question for you… I just wanted to show that these are all things one should think about before buying hardware.

Just a nice little fact: today a VSAN host can have 5 disk groups with 7 disks each, so 35 disks in total. With 32 hosts in a cluster that is 1120 disks… That is some serious capacity with the 4TB disks available today.
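The cluster-maximum arithmetic, sketched below. Raw capacity ignores replicas, witnesses and free-space slack; the "usable" figure simply divides by the number of full copies (two copies is what a failures-to-tolerate setting of 1 means for mirrored objects) and is a rough upper bound, not a sizing result.

```python
def raw_capacity_tb(hosts: int, groups_per_host: int, disks_per_group: int, disk_tb: float) -> float:
    """Raw magnetic-disk capacity: no replication, witnesses or free-space slack."""
    return hosts * groups_per_host * disks_per_group * disk_tb


def usable_estimate_tb(raw_tb: float, replicas: int) -> float:
    """Very rough usable capacity when every object keeps `replicas` full copies."""
    return raw_tb / replicas


disks_per_cluster = 32 * 5 * 7                 # 1120 disks
max_raw = raw_capacity_tb(32, 5, 7, 4)         # 4480 TB raw
print(f"{disks_per_cluster} disks, {max_raw:.0f} TB raw")
print(f"~{usable_estimate_tb(max_raw, 2):.0f} TB with two copies of everything")

# Same arithmetic for 3 hosts with the earlier 6-disk-per-group limit:
print(f"3 hosts: {raw_capacity_tb(3, 5, 6, 4):.0f} TB raw")
```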

I also want to point out that a tool is being developed as we speak which will help you make certain decisions around hardware, cache sizing, etc. Hopefully more news on that soon.

** Update, as of 26/11/2013 the VSAN Beta Refresh allows for 7 disks in a disk group… **

I have yet to see any real-world benchmarks. Are there any?

AFAIK, at the moment there’s an NDA on benchmarks in the VSAN Beta. They’re hard to find on the http://www...

No official benchmarks have been published. I have seen internal numbers, but cannot share them for now.

The only published benchmark so far is a VDI one – there is more detail here: http://blogs.vmware.com/performance/2013/10/vdi-benchmarking-using-view-planner-on-vmware-virtual-san-vsan.html

In addition to SSDs (10%), you also need a certain controller type and potentially disks for the OS (if it was local before). The performance/price should be compared against the storage you are using today for virtual machine hosting. In our assessment, a VSAN-configured host is approaching tier-one price per TB when compared to our block-based storage (not including licensing).

Really comparable? What did you compare it with, would be interesting to know… as I found it very difficult to make a 1:1 comparison.

I guess what needs to be factored in is that you can use the hosts both for storage and compute.

Good post that sums it up. I would love it if you went into the “it depends” a little more and showed different use cases with benchmarks.

Considering VSAN currently does not support the use of SAN/NAS datastores, could this algorithm change once, say, an external ZFS NAS comes into play, which can have significantly more IOPs?

@Duncan

vSAN looks very interesting… I suppose my greatest hesitation in even trying out the beta is what the cost will look like. I wonder if VMware will want something like 50 points/month per physical CPU for the vSAN feature, or something on the order of $5000 for 3 hosts, like with the VSA. In that case, the beta would have been a waste of time, since more EqualLogic or NetApp capacity would clearly be a better value, due to similar cost and the greater software functionality of a non-virtual SAN environment.

@Erik Krogstad

I think a ZFS NAS is another SAN competitor, not something that makes sense for VSAN to be consuming as a backend disk, as it would double up on the latency.

The major performance advantage of triple-mirrored disk pools in ZFS is that ZFS is essentially a write-optimized filesystem: with an SSD-backed ZIL and sufficient free disk space, there is essentially no such thing as a “random” write or partial stripe write. That is useful, because random writes are expensive. And a large read cache in fast SDRAM is more effective and safer.

If the licensing were 5000 for 3 hosts… those 3 hosts can provide roughly 360TB of storage. On top of that, you can run your VMs on them. I am pretty sure the price per GB would be very good. I don’t know how EQL or NetApp compares though; I am not involved with those kinds of projects any longer.

PS: I am not involved in any way with pricing and packaging… so I have no clue what it will look like.

Not sure I am following with regards to your comment around algorithm.

James, I agree with you regarding triple mirroring with RAIDZ3 on that front, but if you were to use RAIDZ1, since it doesn’t have to be super redundant, you could get the performance out of the lower-end disks and put the ZIL on a separate partition locally. I was really just thinking about how to tinker with VSAN and lower-end disks to bump up the IOPs.

Just thinking even more outside the box for a moment: one of the main selling points for VSAN is scalability, in that you can hot-add disks. If you were to add FreeBSD ZFS pools via iSCSI, it would be a matter of adding new pools, which you could then do ZSYNCs off of (almost like a cheap SRM) and distribute across a back end or hot site for further redundancy.

Again just thinking outside the box to get the most bang for the buck out of lower end disks.

If you want to bump up the IOps, use more cache instead… That makes more sense to me than making your environment 2 or 3 times as complex by adding an additional layer in between, plus more latency. You are creating a hyperconverged host-local storage system and then adding network overhead for more IOps?

I think this Nutanix article describes it very well: http://www.nutanix.com/blog/2013/11/14/there-was-a-big-flash-and-then-the-dinosaurs-died/

I completely understand that server-side cache will without a doubt be faster on the local host than the workaround I described above. Where I was going with it was “how to make the 10k SAS drives more efficient and get as close as possible to the IO pattern you talked about above” for VSAN. If I had intended to actually implement this solution (which I really wouldn’t do in production), I would have started out with a ZFS flash array. Here is a ZFS-on-flash array model that I would have introduced rather than my FreeBSD idea: http://www.tegile.com/blog/george_tintri.

I also get the network overhead part, but a VSAN cluster creates one scale-out datastore across participating hosts, and I looked at that as a networking model. I figured that since the cluster was really over the network anyway, I could put the SAS drives in between the hosts and bump up their performance and redundancy using ZFS. It was the first thing I thought about when I saw your image with 1 SSD and the rest hard drives.

Either way, I was just attempting an outside-the-box concept for making the most of cheaper drives.

On one hand, we took the price of one of our servers today. Then we modified that server spec to be vSAN capable (removed HBAs, added disks, a certain controller, etc.). We then took the difference in price and worked out the price per TB from that. The outcome for us was a price comparable to the tier 1 block-based storage we use today.

What kind of storage are you running then? I find that hard to believe, to be honest…

And I completely agree that this makes really good sense for a smaller shop that perhaps hasn’t purchased shared storage yet, etc.

I can’t see 3 x 4TB spindles and an SSD cache being good for real-world virtualization workloads. Yes, it is better than 3 x 4TB spindles alone, since the IOPS that the SSD can take care of don’t go to the HDDs.

Things should be great if your workload is just reads and the working set is smaller than the SSD, with plenty of hot spots and few cold spots; cache hit rates such as 99% with few writes are an idealistic workload, though. Real-world applications are mixed.

On the other hand, if you have workloads that are ever write-heavy, or ever change, all of those I/Os have to get to the platters, and on average they can’t arrive at a faster rate than those platters can write them….
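The point about sustained writes can be put into numbers: if writes arrive faster than the spindles can drain them, the write buffer fills at the difference between the two rates. The sketch below is a deliberately simplified model (no coalescing, no replica copies, one de-stage IO per incoming 4KB write); the 2000 write IOPS burst is hypothetical, while the 120GB buffer (30% of a 400GB SSD) and 240 IOPS (3 NL-SAS disks) come from the post.

```python
import math


def seconds_until_buffer_full(buffer_gb: float, incoming_write_iops: float,
                              destage_iops: float, io_kb: float = 4) -> float:
    """Time until a write buffer fills when writes arrive faster than spindles drain them.
    Simplified: no coalescing, no replica writes, one de-stage IO per incoming IO."""
    net_iops = incoming_write_iops - destage_iops
    if net_iops <= 0:
        return math.inf  # spindles keep up; the buffer never fills
    buffer_kb = buffer_gb * 1024 * 1024
    return buffer_kb / (net_iops * io_kb)


# Hypothetical burst: 2000 write IOPS of 4KB into a 120GB buffer drained by 3 NL-SAS disks.
t = seconds_until_buffer_full(buffer_gb=120, incoming_write_iops=2000, destage_iops=240)
print(f"Buffer full after ~{t / 3600:.1f} hours; in this model, writes are then throttled to ~240 IOPS")
```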

I believe best practice here for Tier 1 workloads is to size the number of platters for your applications’ sustained I/O patterns and expected growth, and to use cache exclusively to help with performance during peak times.

3 massive spindles and an SSD cache are a major risk point for potential storage unreliability once applications change, or during occasional workload shifts.

What happens when everything’s fine… your workload leans on cache, but at 2:00 PM a minor change occurs in the application access pattern, and the VM goes down because the storage can’t take it? Ooops…..

Our per-TB cost for the vSAN config came to ~$2700/TB (3-factor replication). I’d consider that tier one pricing. Hit me up by email if you want to discuss more.

Tier1 storage is much more than $2700/TB if you are buying between 1 and 20 TB and you don’t have controllers/heads. Tier1 arrays start at a minimum of $50,000, including no SSDs (storage vendor branded SSDs are ~$2000+ each) and just 7200 RPM SATA.

I figure a 3-node ESXi cluster (dual socket, 256GB of RAM) itself costs about $36,000 with hardware and host licensing, unless you buy refurbished hardware.

Say you buy a 20TB Tier1 array at $45k.

($45,000 + $36,000) / 20TB = ~$4,050/TB (Storage + Compute)

You can get 16 x 3 = 48 2TB SAS nearline spindles, for approximately $150 each, add $2400 in SAS HBAs, and let’s just say $15k in extra licensing, and $3000 in SSDs; you have…

$63600 = ~$3200/TB (Storage + Compute)
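The comment’s cost comparison can be re-run as plain arithmetic. The comment does not state the divisor for the ~$3200/TB figure; dividing both options by the same 20TB as the array is an assumption (it reproduces roughly $3,180/TB), even though 48 x 2TB is considerably more raw capacity.

```python
COMPUTE_USD = 36_000       # 3-node ESXi cluster incl. host licensing (from the comment)
COMPARED_TB = 20           # assumption: both options divided by the 20TB array size

array_total = 45_000 + COMPUTE_USD
vsan_total = (
    48 * 150      # 48 x 2TB nearline SAS at ~$150 each
    + 2_400       # SAS HBAs
    + 15_000      # extra licensing (the comment's guess)
    + 3_000       # SSDs
    + COMPUTE_USD
)

for name, total in (("Tier 1 array + compute", array_total), ("VSAN build", vsan_total)):
    print(f"{name}: ${total:,} -> ~${total / COMPARED_TB:,.0f}/TB")
```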

Expandability: as long as you picked servers with a SAS backplane that has enough hot-plug ports, it’s $450 for 3 more 2TB HDDs. When full, scale by adding more spindles to an external SAS HBA, or spend ~$3000 to add 3 more SAS expanders to 3 servers to add 16 more HDDs.

Hi Duncan – Makes me wonder what EMC is thinking?

Will each disk group on the host have its own dedicated SSD, or will the SSD be shared between disk groups on the host?

@vsoftinfotech: the vSAN design and sizing guide may be helpful for you: http://www.vmware.com/files/pdf/products/vsan/VSAN_Design_and_Sizing_Guide.pdf

See [5.] Limits: for the beta, there is a minimum of 1 SSD and a maximum of 1 SSD per disk group, you cannot have more than 5 disk groups per host, and the maximum number of HDDs per disk group is 6.

I read this as: to get 18 spindles, for example, you need 3 disk groups of 6 HDDs each and 3 SSDs in total, and it is recommended that each SSD be at least 10% of the capacity of its disk group.

And 30 is the maximum number of spindles you can have on a host at all, until they increase the limit.

Which gives another advantage to 1TB or 2TB NL-SAS spindles, with lots of SSD, at least until that SSD fails.
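The disk group arithmetic from this comment, as a small sketch. The limits are the beta figures quoted above (6 HDDs and exactly 1 SSD per disk group, 5 disk groups per host); they are hard-coded assumptions and change with later releases (the post’s update mentions 7 disks per group).

```python
import math

MAX_HDDS_PER_GROUP = 6    # beta limit quoted above (later raised to 7)
MAX_GROUPS_PER_HOST = 5
SSDS_PER_GROUP = 1        # exactly one SSD fronts each disk group


def disk_group_layout(hdd_count: int) -> tuple[int, int]:
    """Return (disk_groups, ssds) needed to host hdd_count spindles on one host."""
    groups = math.ceil(hdd_count / MAX_HDDS_PER_GROUP)
    if groups > MAX_GROUPS_PER_HOST:
        limit = MAX_GROUPS_PER_HOST * MAX_HDDS_PER_GROUP
        raise ValueError(f"{hdd_count} HDDs exceed the {limit}-HDD per-host limit")
    return groups, groups * SSDS_PER_GROUP


print(disk_group_layout(18))   # (3, 3)
print(disk_group_layout(30))   # (5, 5), the per-host maximum
```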

IMO, when they go out of beta, to be taken seriously they absolutely must offer a way to have 2 SSDs, with somewhat graceful degradation if an SSD fails in a disk group.

I can’t seem to find an answer to this anywhere: can fast and slow disks be mixed in the same disk group? What are the ramifications? Is VSAN smart enough to see the performance difference and use the resources appropriately, or would it degrade the performance of my existing disks? I have a bunch of pre-existing hardware that I’d like to use. It already has six 10K spindles per host, but I’d like to add some 7200 RPM NL-SAS drives to each one. Would it be smart to add a second SSD per host and put these new NL-SAS spindles in their own disk groups?

As far as I am aware, VSAN does not take the speed of the drive into consideration when placing data. Personally, I would recommend a consistent configuration throughout to prevent issues with inconsistent performance.

Hello Duncan,

Before we get started, a quick thank you for your articles to date and the information provided; I have enjoyed reading them as part of my learning.

Topic: VSAN, sustained throughput, writes and cache misses.

I have been working on some configurations for VSAN, and so far I am a little hung up on sustained write throughput metrics, in addition to read cache misses. A quick fact check to ensure we are on the same page:

An object smaller than 256GB, with a component stripe set to 0 and FT=2, will reside (after cache) on a total of three spinning disks (say 7.2K) across three hosts, requiring a 4-host cluster to account for the witness. I am struggling to find any real information on the de-staging mechanisms behind the scenes for write operations. How are writes organized and then de-staged from the cache to the disk?

In the above example, if we assume 80 IOPS for the 7.2K disk, are we limited to 240 IOPS for the VM that resides on these disks? I appreciate that there must be continual new writes filling the cache before the disk becomes the limitation. When the cache reaches saturation (hard/soft limit), does it do anything clever to de-stage that data, such as coalescing? The same 7.2K drive may manage 200MB/s of throughput (optimistic, I know) if the IO is sequential. The above figures assume that we are not dealing with cache misses at the same time; cache misses can only add to the complexity of this calculation!

Does the vSAN architecture require that we calculate the sustained throughput of the environment over a 24-hour period and ensure that there is enough spinning disk in the backend to deal with the unique baseline? Differentials from incremental backups can give an indication of this, but not a real metric, given that the data may have been rewritten many times between the two backup windows.
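One rough way to frame the 24-hour question is to convert a day’s unique writes into an average de-stage rate. This sketch assumes every unique write reaches the spindles exactly once, spread evenly over the day, and ignores replica copies (with FT=2, each write lands on several disks) as well as peaks; the 500GB/day figure is purely hypothetical.

```python
SECONDS_PER_DAY = 86_400


def daily_destage_load(unique_gb_per_day: float, io_kb: float = 4) -> tuple[float, float]:
    """Average MB/s and IOPS needed to land a day's unique writes on disk.
    Assumes every unique write reaches the spindles once, spread evenly over 24h."""
    total_kb = unique_gb_per_day * 1024 * 1024
    mb_per_s = total_kb / 1024 / SECONDS_PER_DAY
    iops = total_kb / io_kb / SECONDS_PER_DAY
    return mb_per_s, iops


mb_s, iops = daily_destage_load(500)     # hypothetical 500GB of unique writes per day
print(f"~{mb_s:.1f} MB/s if sequential, or ~{iops:.0f} IOPS if it lands as random 4KB writes")
```

The gap between the two numbers is the crux of the question: the same daily volume is trivial for a 7.2K disk as sequential throughput but far above 3 x 80 IOPS as random IO, so how the de-staging organizes writes matters a great deal.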

Is maximum new write fully sustained throughput, after cache saturation, governed solely by the spinning disk (plus component stripe) behind the scenes?

I hope the above makes sense. If not, please let me know and I will elaborate.

Your insight would be appreciated, especially if you have information on the de-staging algorithms.

Thanks,