For the last 12 months people have been saying that all-flash and hybrid configurations are getting really close in terms of pricing. During the many conversations I have had with customers it became clear that this is not always the case when they request quotes from server vendors, and I wondered why. I figured I would go through the exercise myself to see how close we actually are, and what exactly we are getting close to. I want to end this discussion once and for all, and hopefully convince all of you to get rid of that spinning rust in your VSAN configurations, especially those of you who are now at the point of making your design.

For my exercise I needed to make up some numbers, and I figured I would use a customer example to make it as realistic as possible. I want to point out that I am not looking at the price of the full infrastructure here, just comparing the “capacity tier”. If you received a quote that is much higher, that makes sense, as it will have included CPU, memory, caching tier and so on. Note that I used dollar prices and did not take any discount into account. Discounts will be different for every customer and differ per region, and I don’t want to make this more complex than it needs to be. This applies to both the software licenses and the hardware.

What are we going to look at:

- 10 host cluster

- 80TB usable capacity required

- Prices for SAS magnetic disks and an all-flash configuration

I must say that the majority of my customers use SAS, some use NL-SAS. With NL-SAS of course the price point is different, but those customers are typically also not overly concerned about performance, hence the SAS and all-flash comparison is more appropriate. I also want to point out that the prices below are “list prices”. Of course the various vendors will give a substantial discount, and this will be based on your relationship and negotiation skills.

- SAS 1.2TB HDD = $ 579

- SAS 960GB SSD = $ 1131

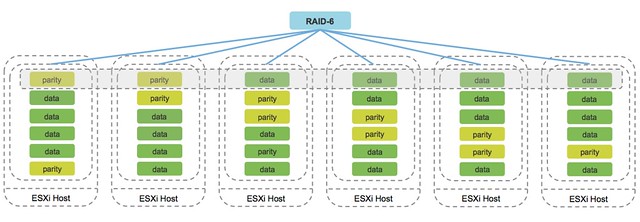

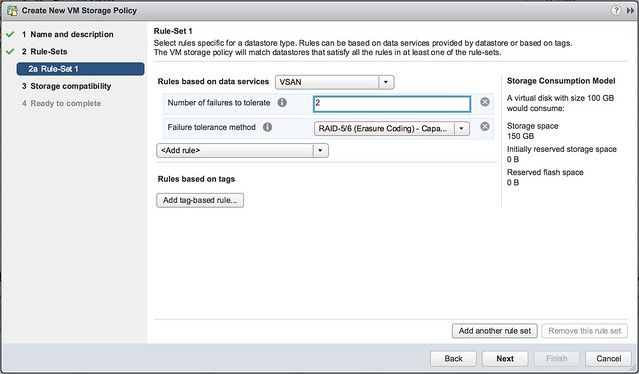

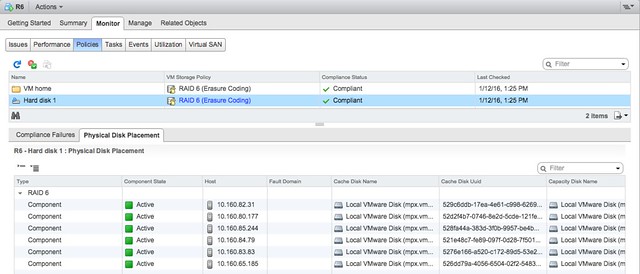

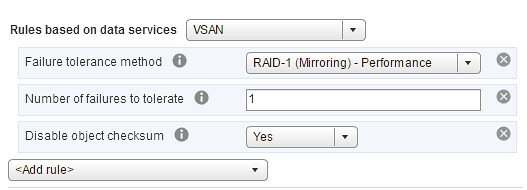

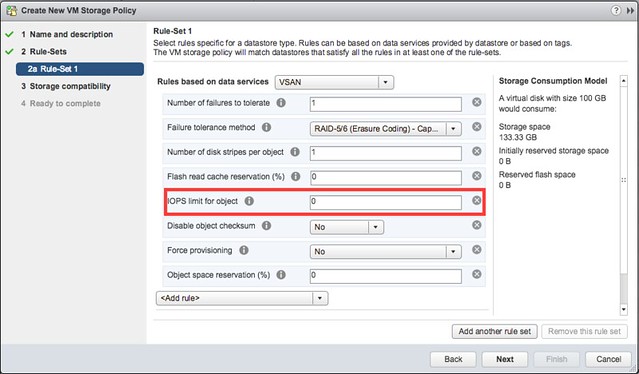

The above prices were taken from dell.com and the SSD is a read-intensive SSD. Now let's take a look at an example with FTT=1, using RAID-1 for hybrid and RAID-5 for all-flash. To get to 80TB usable we multiply the usable capacity by the protection overhead to get the raw capacity, and then divide that by the size of the device to find out how many devices we need (rounded up to whole devices). Multiply that outcome by the cost of the device and you end up with the cost of the 80TB usable capacity tier.

80TB * 2 (FTT factor) = 160TB; 160TB / 1.2TB device = 133.33, so 134 devices needed; 134 * 579 = $ 77586

80TB * 1.33 (FTT factor) = 106.4TB; 106.4TB / 0.960TB device = 110.8, so 111 devices needed; 111 * 1131 = $ 125541
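If you want to redo this math with your own quotes, the two calculations above can be sketched in a few lines of Python. This is just my back-of-the-envelope arithmetic written down, not a sizing tool; the function names are made up for this example, and the overhead factors (2 for RAID-1, 1.33 for RAID-5) and prices are the ones used above.

```python
import math

def devices_needed(usable_tb, overhead_factor, device_tb):
    """Raw capacity divided by device size, rounded up to whole devices."""
    raw_tb = usable_tb * overhead_factor
    return math.ceil(raw_tb / device_tb)

def capacity_tier_cost(usable_tb, overhead_factor, device_tb, device_price):
    """List-price cost of the capacity tier only (no hosts, cache, or licenses)."""
    return devices_needed(usable_tb, overhead_factor, device_tb) * device_price

# Hybrid: FTT=1 with RAID-1 (2x overhead), 1.2TB SAS HDD at $579
hybrid_devices = devices_needed(80, 2.0, 1.2)        # 134 devices
hybrid_cost = capacity_tier_cost(80, 2.0, 1.2, 579)

# All-flash: FTT=1 with RAID-5 (1.33x overhead), 960GB SSD at $1131
flash_devices = devices_needed(80, 1.33, 0.96)       # 111 devices
flash_cost = capacity_tier_cost(80, 1.33, 0.96, 1131)

print(f"Hybrid:    {hybrid_devices} devices, ${hybrid_cost:,}")
print(f"All-flash: {flash_devices} devices, ${flash_cost:,}")
```

Swap in the device size and price from your own quote to see where your configuration lands.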

Now if you look at the price per solution, hybrid costs $ 77.5k while all-flash is $ 125.5k. A significant difference, and then there is also the 30k licensing delta (list price) you need to take into account, which means the cost for the all-flash capacity is actually $ 155.5k. However, we have not taken ANY form of deduplication and compression into account. Of course the results will differ per environment, but I feel 3-4x is a reasonable average across hundreds of VMs with 80TB of usable capacity in total. Let's do the math with 3x just to be safe.

I can’t simply divide the cost by 3, as unfortunately dedupe and compression do not work on licensing cost. That would only change if the lower number of devices resulted in a lower number of hosts needed, but let's not assume that is the case. Instead I will divide the 111 devices required by 3, which means we need 37 devices in total:

37 * 1131 = $ 41847

As you can see, from a storage cost perspective we are now much lower than hybrid: 42k for all-flash versus 78k for hybrid. We haven’t factored in the license yet though. If you add the 30k for licensing (delta between standard/advanced * 20 CPUs), all-flash comes to roughly 72k against 78k for hybrid. That is a difference of about $ 6000 between these configurations, with all-flash being cheaper. And that is not the only difference: the biggest difference of course will be the user experience, with a much higher number of IOPS but, more importantly, an extremely low latency.

Now, as stated, the above is an example with prices taken from Dell.com. If you do the same with SuperMicro then of course the results will be different. Prices will differ per partner, but Thinkmate for instance charges $379 for a 10K RPM 1.2TB SAS drive, and $549 for a Micron DC510 with 960GB of capacity. This means that the base price, taking just RAID-5 into consideration and no dedupe and compression benefit, will be really close.
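For those who want to plug in their own data reduction ratio or vendor prices, here is a rough Python sketch of the comparison using the unrounded device costs. The helper names are invented for this example, the device counts (134 HDDs, 111 SSDs) come from the calculation earlier, and the 3x ratio is my assumption from above, not a guarantee for your environment.

```python
import math

LICENSE_DELTA = 30_000   # list-price licensing delta for all-flash (20 CPUs)
HYBRID_DEVICES = 134     # 1.2TB HDDs for 80TB usable with RAID-1
FLASH_DEVICES = 111      # 960GB SSDs for 80TB usable with RAID-5

def hybrid_total(hdd_price):
    """Hybrid capacity tier cost; no extra license needed."""
    return HYBRID_DEVICES * hdd_price

def all_flash_total(ssd_price, reduction_ratio=1.0, license_delta=LICENSE_DELTA):
    """All-flash capacity tier cost after dedupe/compression, plus licensing.

    The reduction ratio only shrinks the device count, never the license cost.
    """
    devices = math.ceil(FLASH_DEVICES / reduction_ratio)
    return devices * ssd_price + license_delta

# Dell list prices with an assumed 3x dedupe and compression ratio
dell_hybrid = hybrid_total(579)
dell_flash = all_flash_total(1131, reduction_ratio=3)
print(f"Dell hybrid ${dell_hybrid:,} vs all-flash ${dell_flash:,}")

# Thinkmate prices, capacity only: RAID-5 but no dedupe, license left out
thinkmate_hybrid = hybrid_total(379)
thinkmate_flash = all_flash_total(549, license_delta=0)
print(f"Thinkmate hybrid ${thinkmate_hybrid:,} vs all-flash ${thinkmate_flash:,}")
```

Try a more conservative `reduction_ratio` such as 2 to see how sensitive the outcome is to the dedupe and compression you actually get.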

Yes, the time is now: all-flash will overtake “performance” hybrid configurations for sure!