I was asked today whether it is possible to create a 2-host stretched cluster using a vSAN Standard license or a ROBO Standard license. First of all, from a licensing point of view, the EULA states that you are allowed to do this with a Standard license:

> A Cluster containing exactly two Servers, commonly referred to as a 2-node Cluster, can be deployed as a Stretched Cluster. Clusters with three or more Servers are not allowed to be deployed as a Stretched Cluster, and the use of the Software in these Clusters is limited to using only a physical Server or a group of physical Servers as Fault Domains.

I figured I would give it a go in my lab. Exec summary: worked like a charm!

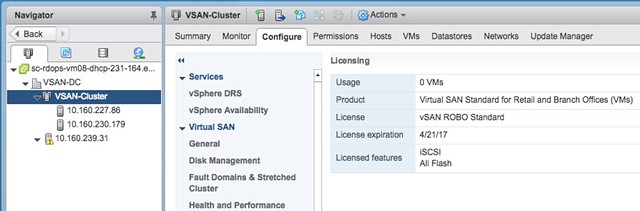

Loaded up the ROBO license:

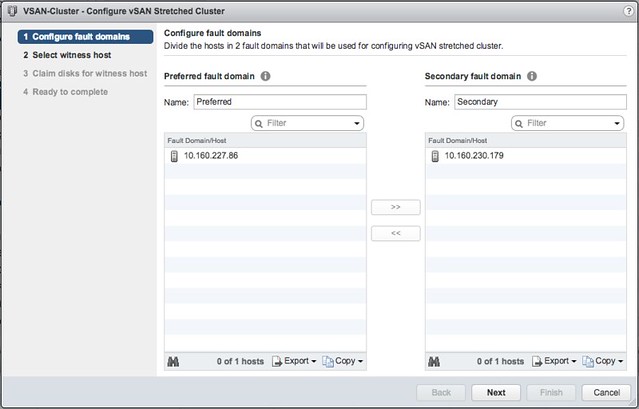

Go to the Fault Domains & Stretched Cluster section under “Virtual SAN” and click Configure. Add one host to the “preferred” fault domain and one to the “secondary” fault domain:

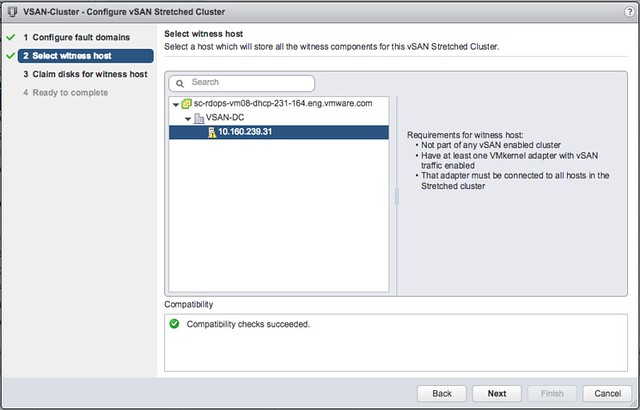

Select the Witness host:

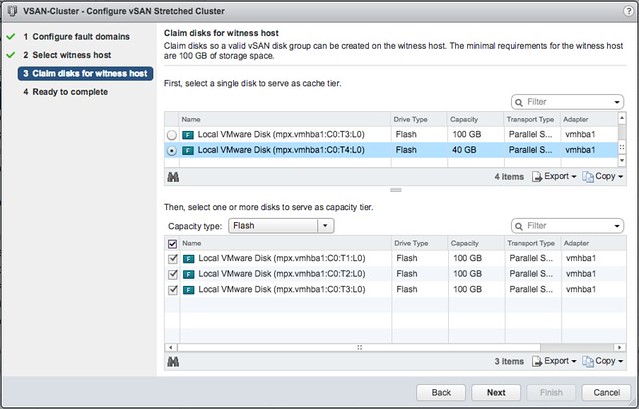

Select the witness disks for the vSAN cluster:

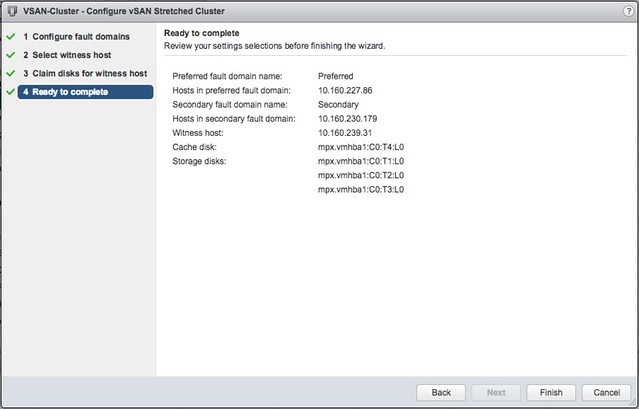

Click Finish:

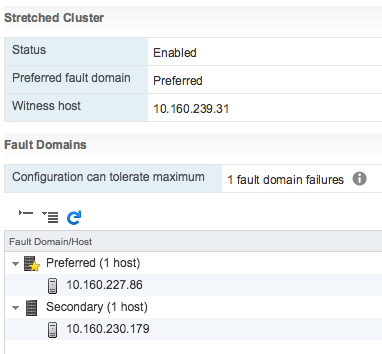

And then the 2-node stretched cluster is formed using a Standard or ROBO license:

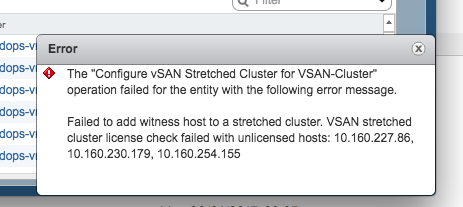

Of course I tried the same with 3 hosts, which failed, as my license does not allow me to create a stretched cluster larger than 1+1+1. And even if it had succeeded, the EULA clearly states that you are not allowed to do so; you need Enterprise licenses for that.
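To make the rule concrete, here is a minimal Python sketch of the licensing logic as described in this post. It is not a VMware API and not how vCenter performs the check: the function name, the edition strings and the set names are all made up for illustration, and it only covers the editions mentioned here (Standard, ROBO Standard and Enterprise).

```python
# Hypothetical helper: encodes only the rule described in this post,
# not an official VMware licensing check.

# Editions that, per the post, may stretch beyond 1+1+1 (assumed naming).
STRETCHED_ANY_SIZE = {"enterprise"}
# Editions that may only stretch a 2-node (1+1+1) cluster (assumed naming).
STRETCHED_TWO_NODE_ONLY = {"standard", "robo standard"}


def stretched_cluster_allowed(edition: str, data_hosts: int) -> bool:
    """Return True if a stretched cluster with `data_hosts` data hosts
    (the witness is not counted) is allowed for the given edition."""
    name = edition.strip().lower()
    if data_hosts < 2:
        # Assumption: a stretched cluster needs at least one host per site.
        return False
    if name in STRETCHED_ANY_SIZE:
        return True
    if name in STRETCHED_TWO_NODE_ONLY:
        # Exactly two servers plus a witness, i.e. 1+1+1.
        return data_hosts == 2
    raise ValueError(f"edition not covered by this sketch: {edition!r}")


if __name__ == "__main__":
    for edition, hosts in [("ROBO Standard", 2), ("Standard", 3), ("Enterprise", 3)]:
        print(edition, hosts, stretched_cluster_allowed(edition, hosts))
```

The witness host is deliberately left out of the count, so the 1+1+1 configuration from my lab corresponds to `data_hosts == 2`.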

There you have it: a two-host stretched cluster using vSAN Standard. Nice, right?!

I got this question today and I thought I had already written something on the topic, but as I cannot find anything I figured I would write up something quick. The question was whether a disk controller for vSAN should have cache or not. It is a fair question, as many disk controllers these days come with 1GB, 2GB or 4GB of cache.
