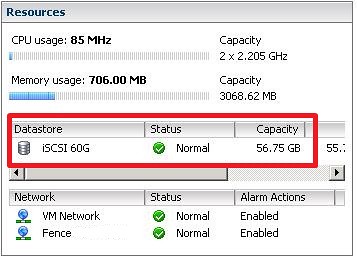

I was exploring the next version of ESX / vCenter again today and did a Storage VMotion via the vSphere client. I decided to take a couple of screenshots to get you guys acquainted with the new look/layout.

Doing a Storage VMotion via the GUI is nothing spectacular, because we have all used the third-party plugins. But changing the disk from thick to thin is. With vSphere it will be possible to migrate to thin-provisioned disks, which can and will save disk space and might be desirable for servers that have low disk utilization and few disk changes.