Starting with vSAN 8.0 ESA (Express Storage Architecture), VMware introduced an adaptive RAID-5 mechanism. What does this mean? Essentially, vSAN deploys a particular RAID-5 configuration depending on the size of the cluster! There are two options; let’s list them and then discuss them individually.

- RAID-5, 2+1, 3-5 hosts

- RAID-5, 4+1, 6 hosts or more

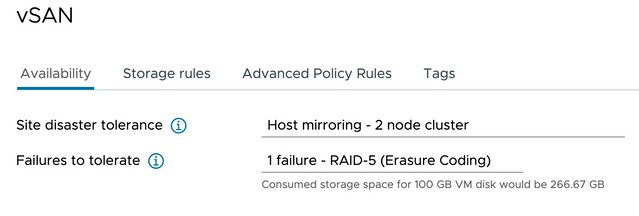

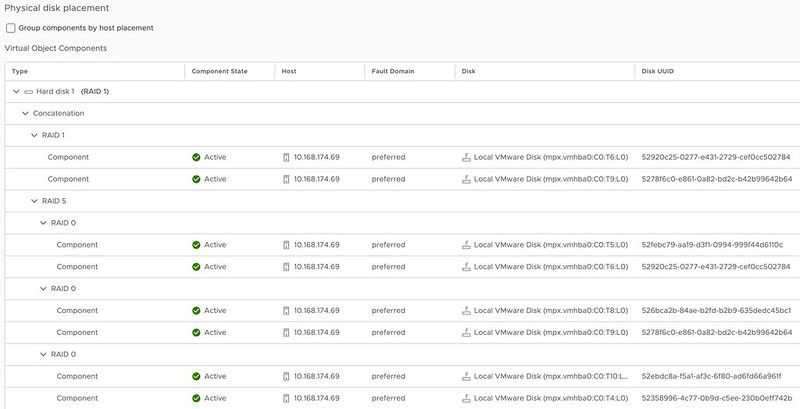

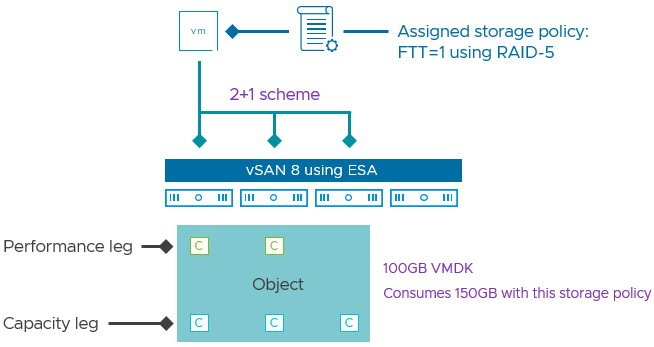

As the list above shows, the RAID-5 configuration you get depends on the cluster size. Clusters of 3 to 5 hosts get a 2+1 configuration (two data components plus one parity component) when RAID-5 is selected. For those wondering, the diagram below shows what this looks like. A 2+1 configuration consumes 150% of the stored data size (a 50% overhead), meaning that when you store 100GB of data, it consumes 150GB of capacity.

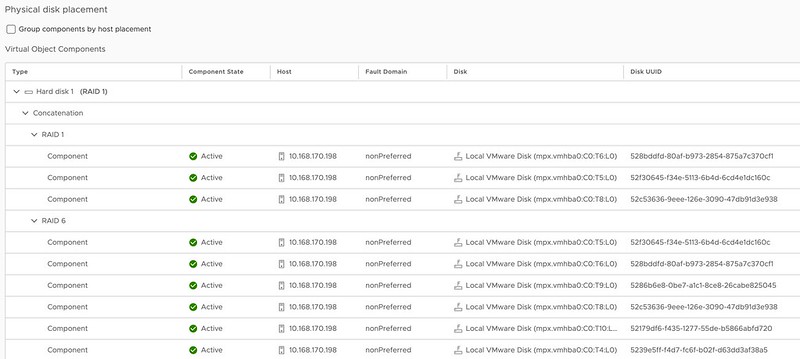

Now, when you have a larger cluster, meaning 6 hosts or more, vSAN will deploy a 4+1 configuration. The big benefit is that capacity consumption goes down from 150% to 125% of the stored data size; in other words, 100GB of data will consume 125GB of capacity.

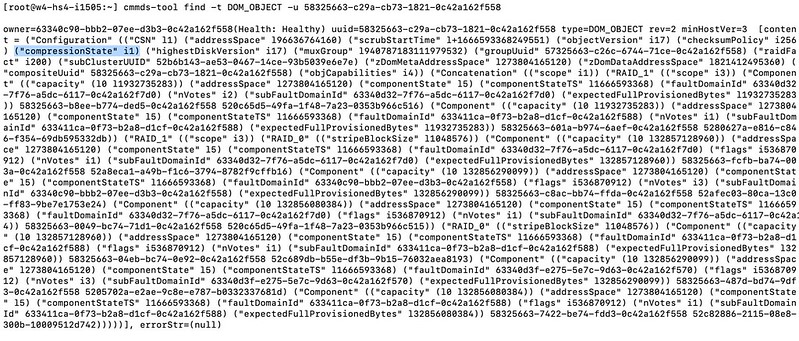
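To make the arithmetic concrete, here is a minimal sketch that picks the RAID-5 layout from the host count and computes the consumed capacity. The function names `raid5_scheme` and `consumed_gb` are made up for illustration and are not part of any vSAN API; the thresholds simply mirror the two options listed above.

```python
def raid5_scheme(hosts: int) -> tuple[int, int]:
    """Return (data, parity) component counts for a given cluster size.

    Illustrative only: thresholds follow the list in this post
    (2+1 for 3-5 hosts, 4+1 for 6 hosts or more).
    """
    if hosts < 3:
        raise ValueError("this sketch assumes RAID-5 needs at least 3 hosts")
    return (4, 1) if hosts >= 6 else (2, 1)


def consumed_gb(data_gb: float, scheme: tuple[int, int]) -> float:
    """Raw capacity consumed when storing data_gb with the given scheme."""
    data, parity = scheme
    return data_gb * (data + parity) / data


if __name__ == "__main__":
    for hosts in (3, 5, 6, 12):
        data, parity = raid5_scheme(hosts)
        used = consumed_gb(100, (data, parity))
        print(f"{hosts} hosts: RAID-5 {data}+{parity}, 100GB consumes {used:.0f}GB")
```

With 100GB of data, this gives 150GB for the 2+1 layout and 125GB for the 4+1 layout, matching the numbers above.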

What is great about this solution is that vSAN monitors the cluster size. If you have 6 hosts and a host fails, or a host is placed into maintenance mode, vSAN will automatically scale the RAID-5 configuration down from 4+1 to 2+1 after a period of 24 hours. Of course, I had to make sure that it actually works, so I created a quick demo that shows vSAN changing the RAID-5 configuration from 4+1 to 2+1, and then back again to 4+1 when we reintroduce a host into the cluster.

One more thing I need to point out: the adaptive RAID-5 functionality also works in a stretched cluster. So if you have a 3+3+1 stretched cluster, you will see a 2+1 RAID-5 set. If you have a 6+6+1 cluster (or more hosts in each location), then you will see a 4+1 set. Also, if you place a few hosts into maintenance mode, or hosts have failed, you will see the configuration change from 4+1 to 2+1, and the other way around when hosts return to duty!
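The stretched cluster case can be sketched the same way. The snippet below parses the "data site + data site + witness" notation and applies the host-count thresholds to each data site. Note the assumptions: `stretched_raid5` is a hypothetical helper, and evaluating each site on its own host count is my reading of the symmetric 3+3+1 and 6+6+1 examples; asymmetric sites are not covered here.

```python
def raid5_scheme(hosts: int) -> tuple[int, int]:
    """(data, parity) per the thresholds in this post; illustrative only."""
    if hosts < 3:
        raise ValueError("this sketch assumes RAID-5 needs at least 3 hosts")
    return (4, 1) if hosts >= 6 else (2, 1)


def stretched_raid5(layout: str) -> list[tuple[int, int]]:
    """Return the assumed RAID-5 scheme for each data site.

    layout uses the 'siteA+siteB+witness' notation, e.g. '6+6+1'.
    Assumption: each data site is evaluated on its own host count.
    """
    parts = [int(p) for p in layout.split("+")]
    if len(parts) != 3:
        raise ValueError("expected 'siteA+siteB+witness', e.g. '3+3+1'")
    site_a, site_b, _witness = parts
    return [raid5_scheme(site_a), raid5_scheme(site_b)]


if __name__ == "__main__":
    for layout in ("3+3+1", "6+6+1"):
        print(layout, "->", stretched_raid5(layout))
```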

For more details, watch the demo, or read this excellent post by Pete Koehler on the VMware website.