VMware Cloud Foundation 9.0 was recently launched, which means vSAN 9.0 is also available. There are many new features introduced in 9.0, so it was the perfect time to ask Pete Koehler to join the podcast once again and go over some of the key enhancements. Below, you can find the links we discussed during the episode, as well as the embedded player to listen to the episode. Alternatively, you can listen to the episode via Spotify, Apple, or any other podcast app you may use. Make sure to like and subscribe!


Introducing vSAN 9.0!

As most have probably seen, Broadcom has just announced VMware Cloud Foundation 9.0. Of course, this means that there’s also a brand-new, shiny version of vSAN available, namely vSAN 9.0. Most of the new functionality was already previewed at VMware Explore by Rakesh and me, but I feel it is worth going over some of the key new functionality anyway. I am not going to go over every single item, as I know folks like Pete Koehler will do that on the VMware Blog anyway.

The first big item I feel is worth discussing is vSAN ESA Global Deduplication. This is probably the main feature of the release. Now, to be fair, it is released as “limited availability” only at this stage, which basically means you need to request access to the feature. The reason is that in this first release it is not yet supported in combination with, for instance, stretched clustering, so Broadcom/VMware wants to make sure you meet the requirements for this feature before it is enabled. Hopefully that will change soon, though!

Now, what is so special about this feature compared to vSAN OSA deduplication? First of all, vSAN OSA deduplicates on a per-disk-group basis, whereas vSAN ESA deduplicates globally. This should result in a much higher deduplication ratio, as the chances of finding duplicates are simply much higher across all hosts than within a single disk group. Deduplication is also done post-process rather than inline, which avoids the potential performance impact of deduplicating data as it is being written. On top of that, the data is laid out in such a way that vSAN ESA can still efficiently read large contiguous blocks of data, even when it is deduplicated.
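To make the per-disk-group versus global difference concrete, here is a toy Python model. The cluster layout, the block "fingerprints", and the function name are all hypothetical. It only counts unique blocks per deduplication domain and does not reflect how vSAN actually hashes, places, or post-processes data.

```python
from typing import Dict, List, Tuple

# Hypothetical cluster layout: host -> disk group -> block fingerprints.
# Identical strings stand in for identical data blocks.
CLUSTER: Dict[str, Dict[str, List[str]]] = {
    "host-a": {"dg1": ["os", "app", "os"], "dg2": ["os", "db1"]},
    "host-b": {"dg1": ["os", "app", "log"], "dg2": ["db1", "db2"]},
}


def compare_dedupe_domains(cluster: Dict[str, Dict[str, List[str]]]) -> Tuple[int, int, int]:
    """Return (logical blocks, blocks stored per disk group, blocks stored globally)."""
    disk_groups = [blocks for host in cluster.values() for blocks in host.values()]
    logical = sum(len(blocks) for blocks in disk_groups)
    # Per-disk-group domain (OSA-style): duplicates are only found inside one disk group.
    per_disk_group = sum(len(set(blocks)) for blocks in disk_groups)
    # Global domain (ESA-style): duplicates are found anywhere in the cluster.
    global_unique = len({block for blocks in disk_groups for block in blocks})
    return logical, per_disk_group, global_unique


if __name__ == "__main__":
    logical, per_dg, global_unique = compare_dedupe_domains(CLUSTER)
    print(f"per-disk-group ratio: {logical / per_dg:.2f}x")  # 10 / 9
    print(f"global ratio:         {logical / global_unique:.2f}x")  # 10 / 5
```

In this made-up layout, the same "os" and "db1" blocks live in different disk groups and on different hosts, so only the global domain can collapse them. Real-world ratios depend entirely on the data.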

The next feature worth discussing is specifically introduced for vSAN Storage Clusters (the architecture formerly known as vSAN Max) and is all about network separation. This new capability allows you to differentiate between client traffic and server traffic for a vSAN Storage Cluster. This means that you could, for instance, keep east-west traffic within a rack on a top-of-rack 100GbE switch, while north-south traffic to the connecting clusters goes via a 10GbE switch, or any other speed. Isolating these traffic streams from each other not only provides a huge amount of flexibility, but also improves efficiency, performance, and security at the same time.

Then, the next big-ticket item isn’t necessarily a vSAN feature, but rather a vSAN Data Protection and VMware Live Recovery (VLR) feature. Starting with 9.0, it will be possible to replicate VMs between clusters using the snapshot technology that is part of vSAN ESA. This provides the big benefit of being able to go 200 snapshots deep without significant performance loss (a single-digit impact at most). On top of that, vSAN Data Protection can do this at a 1-minute RPO and leverages the already familiar UI and protection group capabilities that were introduced in 8.x. The big difference, though, is that you no longer have to download the vSAN Data Protection appliance, as everything is now available as part of the VLR appliance.

Next up is the new vSAN Stretched Cluster functionality. I’ve already discussed this previously as a preview, but now it is available for stretched cluster customers to test out (note that you do have to file an RPQ for both). vSAN Stretched Cluster Site Maintenance Mode is available starting with vSAN 9 for OSA via RPQ, and it allows you to place a whole site into maintenance mode while maintaining data consistency within the site. This solves a major operational hurdle for customers, as previously they had to place a site into maintenance mode one host at a time. If you had a 10+10+1 configuration, that indeed meant placing 10 hosts into maintenance mode sequentially. This issue is now solved via a simple UI button!

Lastly, also for vSAN Stretched Clusters, we are introducing the vSAN Stretched Cluster Manual Take Over functionality in “limited availability.” This will help customers who have lost a site that was placed into maintenance regain access to their data. The idea, though, is that over time this feature will also help customers regain access to data when a data site and the witness fail simultaneously. It is a fairly delicate and complicated process, so, as you can imagine, this is “limited availability” for now, as it requires some education on how it works and what the potential impact of running the manual take over command is.

I hope that provides an overview of some of the key functionality. I will also be recording a podcast with Pete Koehler soon where we will discuss these capabilities, and I will add the links to the podcast and the videos when they are released.

What does Datastore Sharing/HCI Mesh/vSAN Max support when stretched?

This question has come up a few times now: what does Datastore Sharing/HCI Mesh/vSAN Max support when stretched? It keeps coming up somehow, and I personally had some challenges finding the statement in our documentation as well. I just found it, and I wanted to first of all point people to it, and then also clarify it so there is no question. If I am using Datastore Sharing / HCI Mesh, or will be using vSAN Max, and my vSAN Datastore is stretched, what does VMware support (or not support)?

We have multiple potential combinations. Let me list them and add whether each is supported or not. Note that this is at the time of writing, with the currently available version (vSAN 8.0 U2).

- vSAN Stretched Cluster datastore shared with vSAN Stretched Cluster –> Supported

- vSAN Stretched Cluster datastore shared with vSAN Cluster (not stretched) –> Supported

- vSAN Stretched Cluster datastore shared with Compute Only Cluster (not stretched) –> Supported

- vSAN Stretched Cluster datastore shared with Compute Only Cluster (stretched, symmetric) –> Supported

- vSAN Stretched Cluster datastore shared with Compute Only Cluster (stretched, asymmetric) –> Not Supported

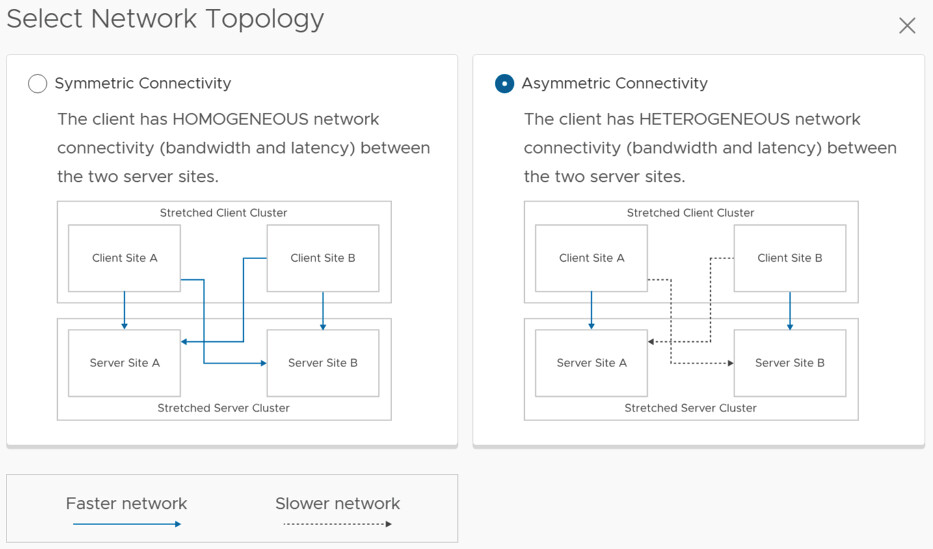

So what is the difference between symmetric and asymmetric? The image above, which comes from the vSAN Stretched Configuration documentation, explains it best. I think asymmetric is the most likely configuration in this case, so if you are running a stretched vSAN cluster and a stretched compute-only cluster, it is most likely not supported.

This also applies to vSAN Max, by the way. I hope that helps. Oh, and before anyone asks: if the “server side” is not stretched, it can be connected to a stretched environment, and that is supported.
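For anyone who wants to capture the list above in a validation script or checklist, here is a minimal Python sketch of it as a lookup table. The key names (`"vsan"`, `"compute_only"`, `"stretched_symmetric"`, and so on) and the function name are hypothetical labels, not VMware terminology or an API, and the table only reflects the vSAN 8.0 U2 statement above, so always check the current documentation.

```python
from typing import Dict, Tuple

# Server side = vSAN Stretched Cluster datastore (also applies to vSAN Max).
# (client cluster type, client topology) -> supported, per the vSAN 8.0 U2 list.
STRETCHED_SERVER_MATRIX: Dict[Tuple[str, str], bool] = {
    ("vsan", "stretched"): True,
    ("vsan", "not_stretched"): True,
    ("compute_only", "not_stretched"): True,
    ("compute_only", "stretched_symmetric"): True,
    ("compute_only", "stretched_asymmetric"): False,
}


def is_supported(server_stretched: bool, client_type: str, client_topology: str) -> bool:
    """Look up whether a Datastore Sharing / HCI Mesh / vSAN Max combination is supported."""
    if not server_stretched:
        # A non-stretched "server side" connected to a stretched environment is supported.
        return True
    key = (client_type, client_topology)
    if key not in STRETCHED_SERVER_MATRIX:
        raise ValueError(f"combination not covered by the list: {key}")
    return STRETCHED_SERVER_MATRIX[key]


if __name__ == "__main__":
    print(is_supported(True, "compute_only", "stretched_asymmetric"))  # False
```

Raising an error for unknown combinations, rather than defaulting to "supported", keeps the sketch from silently approving something the list does not cover.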

Witness resiliency feature with a 2-node cluster

A few weeks ago, I had a conversation with a customer about a large vSAN ESA 2-node deployment they were planning. One of their questions was whether, in a 2-node configuration with nested fault domains, they would be able to tolerate a witness failure after one of the nodes had gone down. I had tested this for a stretched cluster, but not yet with a 2-node configuration. Would we actually see the votes being recalculated after a host failure, and would the VM remain up and running when the witness fails after the votes have been recalculated?

Let’s just test it and use RVC to look at what happens in each case. We will look at the healthy output first, then at a host failure, followed by the witness failure:

Healthy:

```
DOM Object: 71c32365-667e-0195-1521-0200ab157625
  RAID_1
    Concatenation
      Component: 71c32365-b063-df99-2b04-0200ab157625
        votes: 2, usage: 0.0 GB, proxy component: true
      RAID_0
        Component: 71c32365-f49e-e599-06aa-0200ab157625
          votes: 1, usage: 0.0 GB, proxy component: true
        Component: 71c32365-681e-e799-168d-0200ab157625
          votes: 1, usage: 0.0 GB, proxy component: true
        Component: 71c32365-06d3-e899-b3b2-0200ab157625
          votes: 1, usage: 0.0 GB, proxy component: true
    Concatenation
      Component: 71c32365-e0cb-ea99-9c44-0200ab157625
        votes: 1, usage: 0.0 GB, proxy component: false
      RAID_0
        Component: 71c32365-6ac2-ee99-1f6d-0200ab157625
          votes: 1, usage: 0.0 GB, proxy component: false
        Component: 71c32365-e03f-f099-eb12-0200ab157625
          votes: 1, usage: 0.0 GB, proxy component: false
        Component: 71c32365-6ad0-f199-a021-0200ab157625
          votes: 1, usage: 0.0 GB, proxy component: false
    Witness: 71c32365-8c61-f399-48c9-0200ab157625
      votes: 4, usage: 0.0 GB, proxy component: false
```

With one host down, you can see that the votes for the witness changed, and of course the state also changed from “active” to “absent”.

```
DOM Object: 71c32365-667e-0195-1521-0200ab157625
  RAID_1
    Concatenation (state: ABSENT (6))
      Component: 71c32365-b063-df99-2b04-0200ab157625
        votes: 1, proxy component: false
      RAID_0
        Component: 71c32365-f49e-e599-06aa-0200ab157625
          votes: 1, proxy component: false
        Component: 71c32365-681e-e799-168d-0200ab157625
          votes: 1, proxy component: false
        Component: 71c32365-06d3-e899-b3b2-0200ab157625
          votes: 1, proxy component: false
    Concatenation
      Component: 71c32365-e0cb-ea99-9c44-0200ab157625
        votes: 2, usage: 0.0 GB, proxy component: false
      RAID_0
        Component: 71c32365-6ac2-ee99-1f6d-0200ab157625
          votes: 1, usage: 0.0 GB, proxy component: false
        Component: 71c32365-e03f-f099-eb12-0200ab157625
          votes: 1, usage: 0.0 GB, proxy component: false
        Component: 71c32365-6ad0-f199-a021-0200ab157625
          votes: 1, usage: 0.0 GB, proxy component: false
    Witness: 71c32365-8c61-f399-48c9-0200ab157625
      votes: 1, usage: 0.0 GB, proxy component: false
```

After I failed the witness, we of course had to check whether the VM was still running and not showing up as inaccessible in the UI, and it was indeed still running and accessible. vSAN and the Witness Resilience feature worked exactly as I expected. (Yes, I double-checked it through RVC as well, and the VM was “active”.)

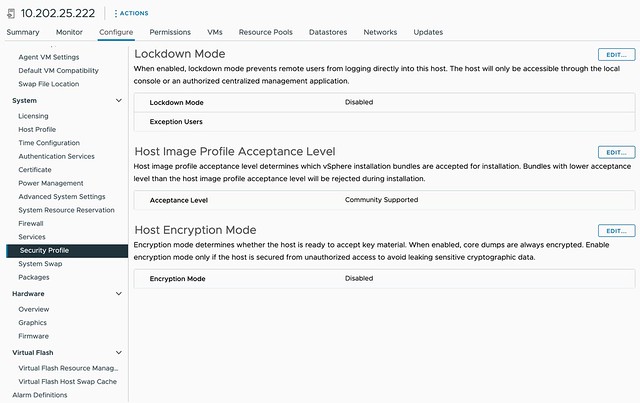
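To see the recalculation at a glance, the vote counts from the two RVC outputs can be summed per replica and for the witness. The sketch below uses numbers transcribed by hand from those outputs. The grouping into `replica_a`, `replica_b`, and `witness` is my own labeling, and the code deliberately does not try to reproduce vSAN’s internal quorum logic. It only shows how the share of votes shifted.

```python
from typing import Dict, List, Tuple

# Vote counts transcribed from the RVC outputs above (one list entry per component).
HEALTHY: Dict[str, List[int]] = {
    "replica_a": [2, 1, 1, 1],  # first Concatenation
    "replica_b": [1, 1, 1, 1],  # second Concatenation
    "witness": [4],
}

ONE_HOST_DOWN: Dict[str, List[int]] = {
    "replica_a": [1, 1, 1, 1],  # first Concatenation, now ABSENT
    "replica_b": [2, 1, 1, 1],  # surviving Concatenation
    "witness": [1],
}


def vote_shares(layout: Dict[str, List[int]]) -> Tuple[int, Dict[str, float]]:
    """Return the total vote count and each group's fraction of it."""
    totals = {name: sum(votes) for name, votes in layout.items()}
    grand_total = sum(totals.values())
    return grand_total, {name: t / grand_total for name, t in totals.items()}


if __name__ == "__main__":
    for label, layout in (("healthy", HEALTHY), ("one host down", ONE_HOST_DOWN)):
        total, shares = vote_shares(layout)
        print(label, total, {name: f"{share:.0%}" for name, share in shares.items()})
```

Running it shows the witness dropping from 4 of 13 votes to 1 of 10, while the surviving replica goes from 4 of 13 to 5 of 10, which matches the shift visible in the RVC output.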

Can I change the “Host Image Profile Acceptance Level” for the vSAN Witness Appliance?

On VMTN, a question was asked about the Host Image Profile Acceptance Level for the vSAN Witness Appliance, which is configured to “community supported” by default. The question was whether it is supported to change this to, for instance, “VMware certified”. I had a conversation with the Product Manager for vSAN Stretched Clusters, and it is indeed fully supported to make this change. I also filed a feature request to have the Host Image Profile Acceptance Level for the vSAN Witness raised to a higher, more secure level by default.

So if you want to make that change, feel free to do so!
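Why is a higher level “more secure”? ESXi only installs a VIB when the VIB’s own acceptance level is at least as trusted as the host’s configured level. The Python sketch below models that ordering. The class and function names are illustrative only and are not part of any VMware API.

```python
from enum import IntEnum


class AcceptanceLevel(IntEnum):
    """ESXi acceptance levels; a higher value means more trusted (illustrative model)."""

    COMMUNITY_SUPPORTED = 1
    PARTNER_SUPPORTED = 2
    VMWARE_ACCEPTED = 3
    VMWARE_CERTIFIED = 4


def can_install(vib_level: AcceptanceLevel, host_level: AcceptanceLevel) -> bool:
    """A VIB is allowed only if it is at least as trusted as the host's level."""
    return vib_level >= host_level


if __name__ == "__main__":
    # Witness default ("community supported") accepts VIBs of any level.
    print(can_install(AcceptanceLevel.COMMUNITY_SUPPORTED, AcceptanceLevel.COMMUNITY_SUPPORTED))
    # After raising the host to "VMware certified", a community VIB is rejected.
    print(can_install(AcceptanceLevel.COMMUNITY_SUPPORTED, AcceptanceLevel.VMWARE_CERTIFIED))
```

On the appliance itself, the level is typically inspected with `esxcli software acceptance get` and changed with `esxcli software acceptance set --level=VMwareCertified`. Check the ESXi documentation for your version before changing it.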