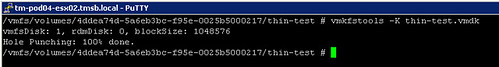

I was just playing around with vSphere 5.0 and noticed something cool that I hadn’t spotted before. I logged in to the ESXi Shell and typed a command I used a lot in the past, vmkfstools, and saw an option called -K. (I have just been informed that 4.1 has it as well; I never noticed it though…)

-K --punchzero

This option deallocates all zeroed-out blocks and leaves only those blocks that were allocated previously and contain valid data. The resulting virtual disk is in thin format.

This is one of those options many have asked for, because re-“thinning” a disk would normally require a Storage vMotion. Unfortunately, it currently only works when the virtual machine is powered off, but I guess that is just the next hurdle to be cleared.
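
For anyone who wants to try it, here is a minimal sketch of how the option might be invoked from the ESXi Shell. The datastore and VM names are made up for illustration, and the punch_zero wrapper with its DRY_RUN switch is my own hypothetical helper, not part of vmkfstools. It assumes the virtual machine is powered off and that you point at the descriptor .vmdk rather than the -flat.vmdk file.

```shell
#!/bin/sh
# Hypothetical path: replace datastore1/myvm with your own datastore and VM.
VMDK="${VMDK:-/vmfs/volumes/datastore1/myvm/myvm.vmdk}"

# punch_zero is an illustrative wrapper, not a VMware tool.
# By default it only prints the command; set DRY_RUN=0 to really run it.
punch_zero() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "vmkfstools -K $1"
    else
        # -K (--punchzero) deallocates zeroed-out blocks in the disk.
        vmkfstools -K "$1"
    fi
}

punch_zero "$VMDK"
```

Printing the command first is just a precaution, since the operation rewrites the disk's allocation; run it with DRY_RUN=0 once the path looks right.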