I was driving back home from Germany on the autobahn this week, thinking about the 5-6 conversations I have had over the past couple of weeks about performance tests for HCI systems. (Hence the pic on the right side being very appropriate ;-)) What stood out during these conversations is that many folks repeat the tests they once ran on their legacy array and then compare the results 1:1 with their HCI system. Fairly often people even use a legacy tool like Atto disk benchmark. Atto is a great tool for testing the speed of the drive in your laptop, or maybe even a RAID configuration, but the name more or less reveals its limitation: “disk benchmark”. It wasn’t designed to show the capabilities and strengths of a distributed / hyper-converged platform.

Now I am not trying to pick on Atto, as similar problems exist with tools like IOMeter for instance. I see people doing a single VM IOMeter test with a single disk. In most hyper-converged offerings that doesn’t result in a spectacular outcome. Why? Simply because that is not what the solution is designed for. Sure, there are ways to demonstrate what your system is capable of with legacy tools: simply create multiple VMs with multiple disks. Even with a single VM you can produce better results by picking the right policy, as vSAN allows you to stripe data across 12 devices for instance (which can be spread across hosts, disk groups etc). Without selecting the right policy or having multiple VMs, you may not be hitting the limits of your system, but simply the limits of your VM's virtual disk controller, the host disk controller, a single device etc.
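To make that reasoning a bit more concrete, here is a minimal back-of-the-envelope sketch in Python. It is a toy model, not how vSAN or any benchmark tool actually works: the `Cluster` class, the `estimated_ceiling` function and every IOPS number in it are made-up placeholders. All it shows is that a test layout can never report more than the smallest ceiling it runs into, and that adding stripes or VMs moves that ceiling from a single device or virtual disk towards the cluster as a whole.

```python
from dataclasses import dataclass


@dataclass
class Cluster:
    """Toy cluster description. Every number used here is a made-up placeholder."""
    hosts: int
    devices_per_host: int
    vdisk_iops_cap: int   # what one virtual disk / virtual controller queue can drive
    device_iops_cap: int  # what one physical device can deliver
    host_iops_cap: int    # what one host disk controller can deliver


def estimated_ceiling(c: Cluster, vms: int, disks_per_vm: int, stripe_width: int = 1) -> int:
    """Return the lowest of three ceilings for a given test layout.

    Simplification: load is assumed to spread evenly over hosts and devices.
    """
    streams = vms * disks_per_vm
    total_devices = c.hosts * c.devices_per_host
    # Each stream touches `stripe_width` devices, capped by what the cluster has.
    devices_touched = min(streams * stripe_width, total_devices)
    return min(
        streams * c.vdisk_iops_cap,           # limit of the virtual disks driving IO
        devices_touched * c.device_iops_cap,  # limit of the devices actually in play
        c.hosts * c.host_iops_cap,            # limit of the host controllers
    )


# Hypothetical 4-node cluster; the caps are illustrative, not measurements.
cluster = Cluster(hosts=4, devices_per_host=6, vdisk_iops_cap=30_000,
                  device_iops_cap=20_000, host_iops_cap=100_000)

single = estimated_ceiling(cluster, vms=1, disks_per_vm=1)
striped = estimated_ceiling(cluster, vms=1, disks_per_vm=1, stripe_width=12)
scaled = estimated_ceiling(cluster, vms=8, disks_per_vm=4)

print(f"1 VM, 1 disk:             {single}")
print(f"1 VM, 1 disk, 12 stripes: {striped}")
print(f"8 VMs, 4 disks each:      {scaled}")
```

With these placeholder numbers the single VM, single disk test is capped by one device, the striped variant is capped by the one virtual disk, and only the multi-VM layout gets anywhere near what the hosts can deliver together.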

But there is an even better option: pick the right toolset and select the right workload. (Surely only doing 4k blocks isn’t representative of your prod environment.) VMware has developed a benchmarking solution called HCIBench that works with both traditional and hyper-converged offerings. HCIBench can be downloaded and used for free through the VMware Flings website. Instead of that single VM, single disk test, you will now be able to test many VMs with multiple disks to show how a scale-out storage system behaves. It will give you great insight into the capabilities of your storage system, whether that is vSAN or any other HCI solution, or even a legacy storage system for that matter. Just like the world of storage has evolved, so has the world of benchmarking.
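On the point about 4k blocks, a quick bit of arithmetic shows why the block size mix matters so much: throughput is simply IOPS multiplied by block size. The Python sketch below is only an illustration. The function names, the 10,000 IOPS figure and the 50/30/20 mix of 4k, 8k and 64k blocks are all assumptions I made up for the example, not a recommended profile and not anything HCIBench ships with.

```python
def throughput_mib_s(iops: float, block_size_kib: float) -> float:
    """Throughput in MiB/s for a given IOPS rate and block size in KiB."""
    return iops * block_size_kib / 1024


def mixed_throughput_mib_s(total_iops: float, mix: list[tuple[int, int]]) -> float:
    """Throughput for a block size mix.

    `mix` is a list of (block_size_kib, percent_of_ios) pairs adding up to 100.
    """
    if sum(percent for _, percent in mix) != 100:
        raise ValueError("percentages in the mix must add up to 100")
    return sum(
        throughput_mib_s(total_iops * percent / 100, block_size_kib)
        for block_size_kib, percent in mix
    )


# Hypothetical numbers, purely for illustration.
total_iops = 10_000
only_4k = mixed_throughput_mib_s(total_iops, [(4, 100)])
mixed = mixed_throughput_mib_s(total_iops, [(4, 50), (8, 30), (64, 20)])

print(f"4k only: {only_4k:.1f} MiB/s")
print(f"mixed:   {mixed:.1f} MiB/s")
```

At the same IOPS rate the mixed profile pushes several times more data through the system than the 4k-only one, so a result from one profile tells you very little about the other.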

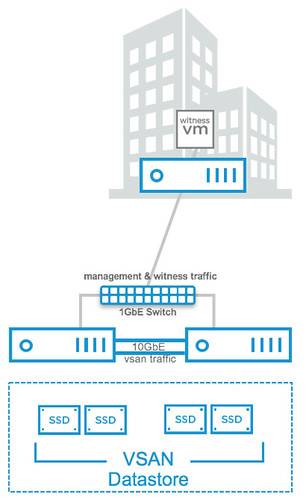

Next up on the list is 2-node direct connect. What does this mean? Well, it basically means you can now cross-connect two VSAN hosts with a simple ethernet cable, as shown in the diagram on the right. The big benefit of course is that you can equip your hosts with 10GbE NICs and get 10GbE performance for your VSAN traffic (and vMotion for instance) without incurring the cost of a 10GbE switch.

In 2013 VSAN saw the light of day when the beta was released. Now, 3 years later, you have the opportunity to
