I have many customers going through the plan and design phase for implementing a vSAN based infrastructure. Many of them have conversations with OEMs and this typically results in a set of recommendations in terms of which hardware to purchase. One thing that seems to be a recurring theme is the question which disk controller a customer should buy. The typical recommendation seems to be the most beefy disk controller on the list. I wrote about this a while ago as well, and want to re-emphasize my thinking. Before I do, I understand why these recommendations are being made. Traditionally with local storage devices selecting the high-end disk controller made sense. It provided a lot of options you needed to have a decent performance and also availability of your data. With vSAN however this is not needed, this is all provided by our software layer.

When it comes to disk controllers my recommendation is simple: go for the simplest device on the list that has a good queue depth. Just to give an example, the Dell H730 disk controller is often recommended, but if you look at the vSAN Compatibility Guide then you will also see the HBA330. The big difference between these two is the RAID functionality offered on the H730 and the cache on the controller. Again, this functionality is not needed for vSAN, by going for the HBA330 you will save money. (For HP I would recommend the H240 disk controller.)

Having said that, I would at the same time recommend customers to consider NVMe for the caching tier instead of SAS or SATA connected flash. Why, well for the caching layer it makes sense to avoid the disk controller. Place the flash as close to the CPU as you can get for low latency high throughput. In other words, invest the money you are saving on the more expensive disk controller in NVMe connected flash for the caching layer.

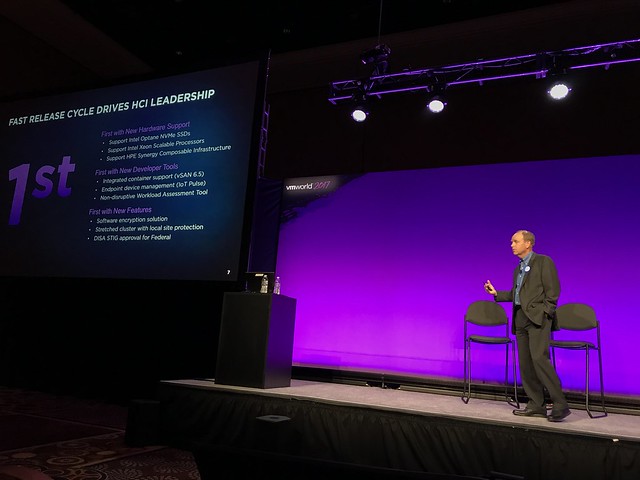

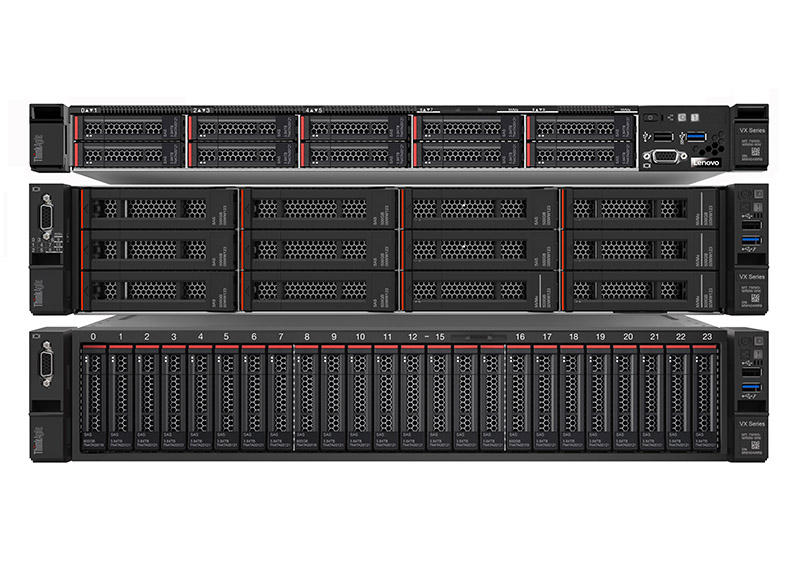

vSAN had 2 major releases in the past year, with some “firsts” during the past 12 months. Like first in HCI to support Intel Optane NVMe SSDs and HPE Synergy support etc. Today Lenovo released a new integrated HCI system called ThinkAgile VX. ServeTheHome has a great

vSAN had 2 major releases in the past year, with some “firsts” during the past 12 months. Like first in HCI to support Intel Optane NVMe SSDs and HPE Synergy support etc. Today Lenovo released a new integrated HCI system called ThinkAgile VX. ServeTheHome has a great