Cormac Hogan and I have been working hard over the past couple of months to update the Virtual SAN book. It was a lot of work with all the changes that were introduced to Virtual SAN since the first version, but we managed to get it done. We just finalized the last chapter and it looks like the Rough Cuts have just been published through Safari. If you have a Safari account you can find the book here.

The book will go over the basics, but also describe specific subjects in depth, like stretched clustering, which was introduced in 6.1, and the data services and performance monitoring, which were introduced in 6.2. About to go on a VSAN journey? Well, this is where you should start!

The foreword was written by Christos Karamanolis (VMware Fellow and CTO) and we had the pleasure of working with Christian Dickmann (VSAN Dev Architect) and John Nicholson (VSAN Tech Marketing) as technical reviewers. Thanks guys for keeping us honest.

Hopefully the official version will be out soon as well through Amazon and other book stores. We have asked for a “digital first approach”, which means that the Mobi/Epub version should be out first, followed by a paper version. When it is available, I will definitely let you guys know.

Before I forget, thanks Cormac… Always a pleasure working with you on projects like these. Go VSAN!

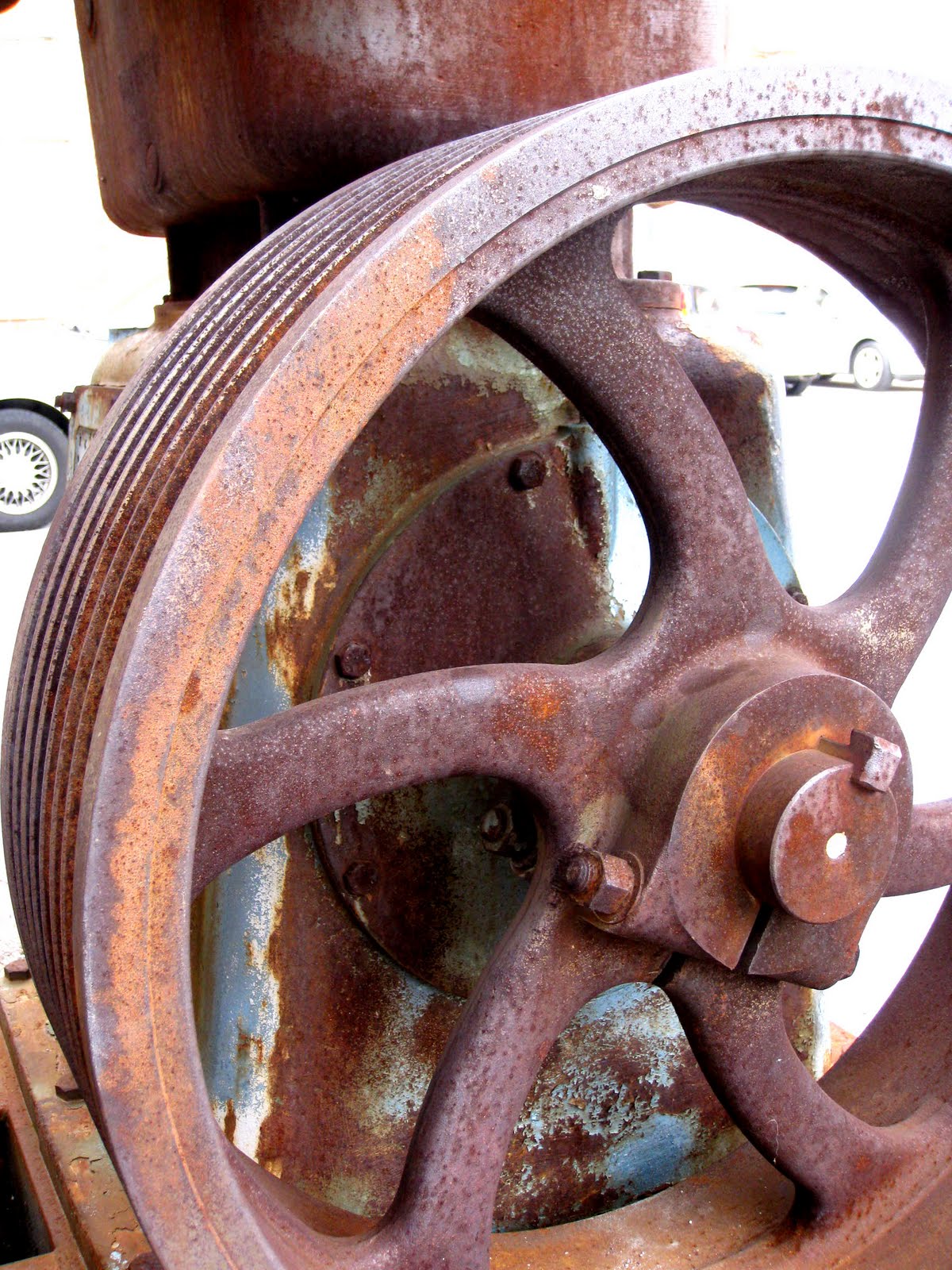

For the last 12 months people have been saying that all-flash and hybrid configurations are getting really close in terms of pricing. During the many conversations I have had with customers it became clear that this is not always the case when they request quotes from server vendors, and I wondered why. I figured I would go through the exercise myself to see how close we actually are, and what exactly we are getting close to. I want to end this discussion once and for all, and hopefully convince all of you to get rid of that spinning rust in your VSAN configurations, especially those of you who are now at the point of making your design.
